Storage Peer Incite: Notes from Wikibon’s February 9, 2012 Research Meeting


On February 6, EMC gave the IT industry an early Valentine in the form of VFCache (a.k.a. Project Lightning), the first server-based flash storage card system from a major server or storage vendor. At first glance this might not seem exciting, as the product itself is a 1.0 version. In addition, EMC's marketing is, in part, intended to freeze the market, or at least to notify EMC customers that there is an EMC option, backed by EMC services and its strong brand promise. After all, several startups have been marketing PCIe flash storage systems for quite some time, and Fusion-io, the pioneer in this area, already has more than a year's track record of major installations in hyperscale environments.

However, VFCache is significant for several reasons. First, it is the first product from a major vendor rather than a startup. This validates the trend and changes the question from "Is this just a niche market product for Internet companies?" to "When is my server or storage vendor going to start selling flash, and how can my company leverage it?"

Second, while many of the startups are focused on Internet companies like Google, Yahoo, Facebook, and Twitter, and on hyperscale/big data applications, VFCache is obviously intended to support traditional enterprise applications such as ERP and CRM. And while Fusion-io advocates a single-tier, totally server-based storage approach, VFCache is designed to work with, rather than completely replace, traditional storage arrays. While this might be seen as EMC protecting its installed base, companies have huge amounts of data that are only semi-active or inactive but that they need to preserve and keep available. Therefore, Wikibon agrees with EMC that the storage array is not going away, although, as EMC President & COO Pat Gelsinger said during the announcement, high-speed disk will be replaced with PCIe flash over time. This means that companies need flash that works with the underlying storage array.

VFCache has two functions:

- As a cache, which intercepts IO, selects the most relevant data, and retains it for subsequent reads. The cache does not hold up writes; they are written through to disk or to the array's write-protected cache in the normal way.

- As a split cache, where a portion of the cache can be used to hold a complete volume of data.

At the moment, the size of the EMC PCIe card (300GB SLC) makes it inappropriate for large-scale, IO-intensive big-data deployments such as those of Facebook and Apple, where very large databases are held in flash only and spread across multiple commodity servers. In fairness, EMC has been clear that this offering is not positioned for such applications. Nonetheless, these are the sexy use cases, and all vendors covet them. We note that EMC's product is still early in its development, and Wikibon expects a string of follow-on announcements from EMC over the next year or more, including Project Thunder, which may change this equation. One important strength is that VFCache can work with any vendor's storage, not just EMC's. As well, EMC has put forth a vision using its Fully Automated Storage Tiering (FAST) software, which optimizes the placement of data on the most appropriate device (from flash to spinning disk).
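The placement idea behind automated tiering can be illustrated with a minimal greedy sketch: rank extents by how busy they are and fill the flash tier first. This is a hypothetical illustration, not EMC FAST's actual algorithm. The function name, the extent IDs and the assumption that flash holds a fixed number of extents are all made up for the example.

```python
def place_extents(extent_iops, flash_slots):
    """Hypothetical greedy tiering sketch (not EMC FAST's algorithm).

    extent_iops: dict mapping extent id -> observed IOs per second.
    flash_slots: how many extents fit on the flash tier (assumed fixed).
    Returns a dict mapping extent id -> "flash" or "disk".
    """
    # Hottest extents first; Python's sort stays stable even with reverse=True.
    ranked = sorted(extent_iops, key=extent_iops.get, reverse=True)
    on_flash = set(ranked[:flash_slots])
    return {ext: ("flash" if ext in on_flash else "disk") for ext in extent_iops}


if __name__ == "__main__":
    heat = {"orders_idx": 900.0, "archive_2009": 2.0, "customers": 350.0}
    print(place_extents(heat, flash_slots=2))
```

A real tiering engine would also weigh migration cost, how recent the activity is, and policy limits. The sketch only shows the core "hottest data goes to the fastest device" rule.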

Today, while VFCache is the first product of a family early in its lifecycle, it is an important signal to the market that PCIe flash will become a mainstream technology. CIOs need to pay attention and look at applications where cost-per-IO is a more important measure than cost-per-GByte. That is where flash can provide a real pay-off.
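The difference between the two metrics is simple arithmetic. The sketch below computes both metrics for two devices. The prices, capacities and IOPS figures are hypothetical and chosen only for illustration; they are not vendor quotes or measured results.

```python
# Hypothetical device figures for illustration only; not vendor quotes or
# measured results.
DEVICES = {
    "15K RPM disk": {"price_usd": 300.0, "capacity_gb": 600.0, "iops": 200.0},
    "PCIe flash card": {"price_usd": 6000.0, "capacity_gb": 300.0, "iops": 100_000.0},
}


def cost_per_gb(dev):
    """Purchase price divided by usable capacity."""
    return dev["price_usd"] / dev["capacity_gb"]


def cost_per_iops(dev):
    """Purchase price divided by sustainable IOs per second."""
    return dev["price_usd"] / dev["iops"]


if __name__ == "__main__":
    for name, dev in DEVICES.items():
        print(f"{name}: ${cost_per_gb(dev):.2f} per GB, "
              f"${cost_per_iops(dev):.3f} per IOPS")
```

With these assumed numbers, the flash card costs 40 times more per GB ($20.00 vs. $0.50) but 25 times less per IOPS ($0.060 vs. $1.500). Which device looks "cheaper" therefore depends entirely on which metric the application is constrained by.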

The articles below examine the features and implications of the VFCache announcement based on the February 9 Peer Incite. G. Berton Latamore, Editor

EMC Introduces VFCache to Spark Application Performance

EMC’s Project Lightning tendrils reached well into the server with the formal announcement of VFCache on February 6. As available today, VFCache is a server flash storage card of up to 300GB, connected to a server’s PCIe bus. EMC supports a single VFCache card per server. The cache is designed to hold small blocks of frequently accessed data, reducing random read response times from milliseconds to microseconds, while preventing the cache from being flooded by large sequential reads, such as those associated with backup.

EMC manages the VFCache via a lightweight filter driver that identifies frequently accessed small-block data and promotes it from disk storage to the VFCache. Once in the cache, data is managed on a first-in, first-out (FIFO) basis. Responsibility for data protection remains with the array, which is why data is first written to the array before being promoted to VFCache. As a server-side cache, each VFCache card is dedicated to a single server, with no coordination across servers, and is therefore not designed for use in clustered-server environments. VFCache is supported in VMware environments, but there is no native support for, or built-in coordination with, high-availability and workload-balancing applications such as VMware’s VMotion. Notably, because it is a server-side cache, VFCache will work with both EMC and non-EMC arrays.
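The division of labor described above can be sketched as a write-through read cache: the array remains the system of record, and the server-side card only holds copies. This is a hypothetical Python illustration, not EMC's filter driver. The class name, the dict standing in for the array and the promote-after-two-misses threshold are all assumptions, and eviction is omitted for brevity.

```python
from collections import Counter


class WriteThroughReadCache:
    """Hypothetical sketch: the array is the system of record, and the
    server-side cache only ever holds copies of data already on the array."""

    def __init__(self, array, promote_after=2):
        self.array = array                  # dict block id -> data; stands in for the array
        self.cache = {}                     # server-side flash copies
        self.misses = Counter()             # per-block miss counts used to spot hot data
        self.promote_after = promote_after  # assumed threshold, not an EMC parameter

    def read(self, block):
        if block in self.cache:
            return self.cache[block]        # hit: served from server-side flash
        data = self.array[block]            # miss: served from the array
        self.misses[block] += 1
        if self.misses[block] >= self.promote_after:
            self.cache[block] = data        # promote a frequently read block
        return data

    def write(self, block, data):
        self.array[block] = data            # the write lands on the array first
        if block in self.cache:
            self.cache[block] = data        # then any cached copy is refreshed


if __name__ == "__main__":
    array = {1: "a", 2: "b"}
    c = WriteThroughReadCache(array)
    c.read(1)
    c.read(1)           # second miss promotes block 1
    c.write(1, "z")
    c.cache.clear()     # simulate losing the card
    print(c.read(1))    # prints z: the array still holds the current data
```

Every write reaches the array before the cache is touched. Losing the card therefore costs performance but not data, which is why data protection can stay with the array.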

Where VFCache can be used today

In this first-generation version, VFCache is designed to improve performance for small-block read transactions. The maximum benefit will be seen when reading 8KB blocks in read-intensive applications where the data has high locality of reference and a high degree of concurrent access or, in layman’s terms, where the same blocks keep getting hammered. In presentations, EMC specifically noted that VFCache was designed to deliver performance boosts in database applications, online transaction processing (OLTP) systems, e-mail, Web applications, reporting applications, and analytics. EMC provides a good guide to when to use VFCache in its Introduction to VFCache.

Where VFCache should not be used today

According to EMC “VFCache is currently not supported in shared-disk environments or active/active clusters.” As a read cache, VFCache should have limited benefit for write-intensive workloads but little or no negative impact either. In servers running a mix of applications, some read-intensive and some write-intensive, VFCache should still benefit read-intensive workloads. EMC did caution that write-intensive workloads with a large number of disk spindles dedicated to a single server might be negatively impacted by the presence of VFCache.

VFCache can be used at the virtual machine (VM) level in VMware environments, thus boosting performance of applications on a per-VM basis. Since VFCache is a local resource to the VM, however, those VMs using VFCache cannot take advantage of VMotion for workload balancing, nor can they use features such as vCenter Site Recovery Manager (SRM) or Distributed Resource Scheduler (DRS).

EMC does not currently offer VFCache for blade servers. During the Peer Incite research meeting, however, one EMC participant mentioned a blade-server solution under development.

Future enhancements

Beyond the hinted-at blade-server offering, EMC will boost the capacity of VFCache to 700GB sometime in the summer. Later this year it will deliver Project Thunder, an all-flash appliance that connects to servers over a network, most likely InfiniBand. While not quite as fast as a card on the server's own PCIe bus, the external appliance will provide a significant performance boost over array-based drives and will support active/active clusters and blade servers.

Although the current VFCache solution works in conjunction with Fully Automated Storage Tiering (FAST) from EMC, and both offerings provide performance benefits and efficiency gains to applications, the company promises more complete integration with FAST later this year. Some participants on the call questioned the logic of controlling a server resource from storage-controller-based software. Deeper integration with FAST also implies that, while VFCache works with both EMC and non-EMC arrays, EMC will in the future claim greater benefits when it is used in conjunction with EMC arrays and FAST.

Action item: Determine the trusted advisor.

A storage administrator who is told “I have an application performance problem, and I think it’s a storage problem,” is faced with a dizzying array of performance-enhancing options:

- Add solid state drives to the array,

- Increase memory in the array,

- Increase or upgrade channels to the array,

- Implement storage tiering and automated management,

- Migrate volumes to a different array,

- Stripe volumes across more disk drives,

- Archive and migrate stale data off the array,

- Compress data,

- Deduplicate data,

- Add a caching appliance.

Even the most educated storage professionals may be hard-pressed to make a choice, but all of these options are within the typical locus of control of storage administrators. Now add the option of flash cards in the servers, which are typically outside the storage administrator's control, and the job becomes even more challenging.

Because the options are so plentiful, storage suppliers, or at least their systems engineers (SEs), have increasingly attempted to assume the role of trusted advisor to the storage administrator. Many SEs have earned that trust. If storage suppliers continue to move further into the server domain, however, SEs will find that they now need to gain the trust of server and network administrators as well as application managers.

At the same time, and precisely because so many options exist, storage administrators must remain constantly up-to-date on the relative merits and limitations of various performance-enhancing options. Each option has performance, operational, and cost trade-offs and possibly budgetary and locus-of-control trade-offs. The challenge for the storage administrator will be in determining whether the proposed solution to a storage performance problem is based upon supplier margins, the sales executive’s compensation plan, the desire to sell up the organization, or is, in fact, the best fit for the environment.

Assessment of EMC Project Lightning, EMC VFCache

Introduction

On Monday, February 6, 2012, EMC formally announced two projects:

- Project Lightning, now named EMC VFCache, available in March 2012,

- Project Thunder, which will be in beta testing in 2Q 2012 and is assessed separately below.

The most significant statement of the announcement came from Pat Gelsinger, who forecast that in the future nearly all active data would be held in flash and that the days of high-speed disk drives were numbered. He also emphasized that high-density drives would continue to hold the majority of data. This view is supported by Alan Benway's comment on this Alert that investment in 15K drives has ceased and that manufacturing will continue for only 18-24 months. Even the very recent announcement that the speed of writing to disks might be increased by 1,000 times does nothing to solve the problem that once data is on disk, it is slow and expensive to retrieve anything beyond a tiny fraction of it.

Over the last two decades, EMC has led a highly successful drive to migrate data management functions from servers to networked storage arrays, saving enterprises billions in the process. The realization that, with flash, the pendulum of data control is now swinging back toward servers led EMC to form the EMC Flash Business Unit in May 2011, with the mission of competing strongly in the emerging flash market. In pursuing that mission, the unit will also compete with EMC's other highly successful data storage business units.

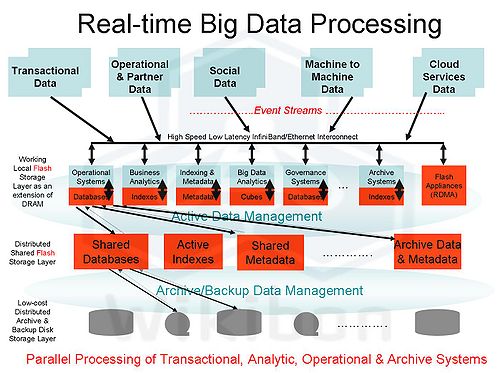

In a previous alert, Wikibon put forward a five-layer model for future computing, shown in Figure 1.

EMC VFCache Summary

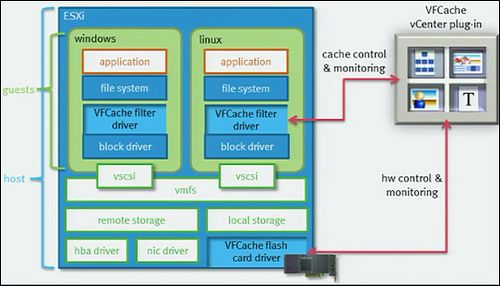

EMC Project Lightning (a.k.a. EMC VFCache) is mapped onto this model in Figure 2.

The VFCache is a 300GB SLC flash PCIe card from Micron or LSI that can plug into qualified Cisco, Dell, HP, and IBM rack and free-standing servers. EMC indicated that mezzanine VFCache cards would be available for some blade servers in the future. Only one VFCache card can be installed per server, and it requires installation of the EMC VFCache filter driver.

Suitable IOs are then cached on the card on a FIFO basis. When the card is full, the oldest data is overwritten. To reduce the risk of large sequential IOs flushing more useful data from the cache, the VFCache filter driver does not cache IOs of 64KB or larger.
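The admission and eviction rules just described can be sketched in a few lines. This is a hypothetical Python illustration of a FIFO cache with a size-based bypass, not EMC's code. The class name, the block-count capacity and the function signatures are assumptions. The 64KB cutoff follows the description above.

```python
from collections import OrderedDict

# Per the description above: IOs of 64KB or larger are not cached.
MAX_CACHEABLE_BYTES = 64 * 1024


class FifoReadCache:
    """Hypothetical FIFO cache sketch; capacity is counted in blocks."""

    def __init__(self, capacity_blocks):
        self.capacity = capacity_blocks
        self.entries = OrderedDict()  # insertion order == age (oldest first)

    def admit(self, block, data, io_size):
        """Try to cache a block; returns False if it is not cached."""
        if self.capacity <= 0 or io_size >= MAX_CACHEABLE_BYTES:
            return False                      # large IO: keep it from flushing hot data
        if block in self.entries:
            self.entries[block] = data        # update in place; age is unchanged
            return True
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # full: overwrite the oldest entry
        self.entries[block] = data
        return True

    def lookup(self, block):
        # FIFO, not LRU: a hit does not change an entry's position.
        return self.entries.get(block)
```

In the FIFO policy, a block that was just read is still evicted first if it was admitted first. An LRU cache would instead evict the least recently read block. The size check is what keeps a backup-style stream of large sequential reads from displacing the hot small blocks.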

The VFCache card can be split, with some of the space used to hold complete volumes that fit in the space allocated. At the moment, protection and availability of the data in the split portion of the cache are entirely up to the user to determine and maintain.

EMC VFCache Recommendations & Conclusions

The best use guidelines for VFCache are:

- Use VFCache only for simple caching situations:

- Read Intensive Workloads – Applications that have a read-heavy workload, where reads make up at least 50% of IOs, are good candidates for caching.

- Small I/O Size – Applications whose read requests are 64KB or smaller are eligible to be cached with VFCache.

- Random I/O – Random I/O activity makes a better candidate for caching than sequential I/O. Sequential I/O activity does not often result in “hot” data or repeated access to the same data.

- Good locality of reference.

- Don’t use the split cache yet for production work unless the data is truly transient and does not need to be recovered if the server fails. Users are on their own for recovery. Users should wait for greater support.

- At the moment the support for VMware is limited, as the filter driver for VFCache is installed in each VM guest. There is no hypervisor driver as yet. A vCenter plug-in for management is available.

- Native support for vMotion is not available; scripting will be required to remove and re-add storage.

- The underlying issue is that VFCache appears to the host as a local storage volume, which would disappear if a virtual machine is moved to another server.

- The problem has been solved by vendors such as Virsto that virtualize the storage presentation directly to the hypervisor.

- Wikibon believes that EMC is likely to go in this direction in the future and is working hard on solutions, but for now this is a big hole in the product.

- There is no support for clustering environments such as Windows clusters.

- The initial supported environments are Microsoft Windows and Linux either native or under VMware without vMotion support.
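The guidelines above can be turned into a simple screening checklist. The sketch below is hypothetical. The profile fields, the 0.5 random-IO threshold and the 0.2 "hot data" threshold are assumptions made for illustration. The 50% read share, the 64KB size limit and the cluster and vMotion restrictions come from the list above.

```python
from dataclasses import dataclass

MAX_ELIGIBLE_IO_BYTES = 64 * 1024  # "64KB or smaller are eligible" per the guidelines


@dataclass
class WorkloadProfile:
    read_fraction: float       # reads as a share of all IOs (0.0-1.0)
    typical_io_bytes: int      # typical read request size
    random_fraction: float     # share of IOs that are random rather than sequential
    hot_data_fraction: float   # share of the data set receiving most reads (assumed metric)
    clustered: bool = False    # shared-disk or active/active cluster
    needs_vmotion: bool = False


def vfcache_concerns(w):
    """Return reasons a workload is a poor first-generation VFCache fit.

    An empty list means the profile matches the guidelines. The 0.5 random
    share and 0.2 hot-data share thresholds are assumptions.
    """
    concerns = []
    if w.read_fraction < 0.5:
        concerns.append("reads are under 50% of IOs")
    if w.typical_io_bytes > MAX_ELIGIBLE_IO_BYTES:
        concerns.append("IO size above 64KB")
    if w.random_fraction < 0.5:
        concerns.append("mostly sequential IO")
    if w.hot_data_fraction > 0.2:
        concerns.append("weak locality of reference")
    if w.clustered:
        concerns.append("clustered or shared-disk environment is not supported")
    if w.needs_vmotion:
        concerns.append("no native vMotion support")
    return concerns
```

An 8KB, read-heavy, random OLTP profile with a small hot set returns no concerns. A backup-style stream of large sequential reads is flagged on size, access pattern and locality. The checklist is a first filter only; measured IO statistics from the actual workload should drive the final decision.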

The Micron PCIe card uses the latest flash technology and has been well reviewed. EMC claims to compete in three areas with the Micron & LSI cards:

- Improved IOPS: up to a 4x throughput increase. These are reasonable claims for normal database environments such as Oracle and Microsoft SQL Server. A benchmark by Dennis Martin of Demartek concluded that single-application benchmarks could achieve 2.5-3.5 times the throughput in the database environments he ran. Improvements of this kind can be achieved in environments where IOPS are the performance constraint and the IO profile meets the best use guidelines listed above.

- EMC claimed in an announcement slide that its cards are four times better than another "popular" PCIe card for 100% read and two times better for 50% read. This result may be true of a specific card and a specific workload but is a very unlikely finding in the real world. In the same Demartek report, Demartek found identical throughput at 100% read and a small improvement (<10%) for 80% and 60% read environments. These improvements are within the range expected from a technology turn.

- Reduced latency: Latency and latency variance are the greatest challenges for database designers and administrators. The average latency will be much better for reads, and this will help in obtaining the all-important write locks when they are required. However, caching solutions increase the variance very significantly. Environments that are sensitive to rare but very long IO times are unlikely to be improved.

- EMC claimed lower latency for the reads from a VFCache solution than other "popular" cards. This is likely to be true for the Micron card but less likely for the LSI card. The Micron card had about 15% lower maximum latency in each of the comparison tests shown in the study above.

- EMC claimed that server CPU utilization was lower with the VFCache card because processing is offloaded to the card. This may have been important with earlier servers that had a small number of cores. However, Wikibon has consistently pointed out that server utilization is very low (~10%) on multi-core servers. The trend is to use spare cores rather than offload work to slower outboard processors, because server processors are faster and have more system information available, especially for recovery and write optimization. This is a design decision and should not be a buying criterion.
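The latency point can be made concrete with the law of total variance. The cache produces a mix of very fast hits and unchanged slow misses. This lowers the mean but widens the spread relative to the mean. The sketch below uses hypothetical latencies (0.1 ms flash hits, 5 ms disk misses with a 2 ms standard deviation) and an assumed 80% hit ratio. The numbers are not measured VFCache results.

```python
import math


def latency_stats(hit_ratio, hit_ms, miss_ms, miss_sd_ms, hit_sd_ms=0.0):
    """Mean and standard deviation of read latency when a fraction hit_ratio
    of reads comes from flash and the rest from disk (law of total variance)."""
    mean = hit_ratio * hit_ms + (1 - hit_ratio) * miss_ms
    within = hit_ratio * hit_sd_ms ** 2 + (1 - hit_ratio) * miss_sd_ms ** 2
    between = hit_ratio * (1 - hit_ratio) * (miss_ms - hit_ms) ** 2
    return mean, math.sqrt(within + between)


if __name__ == "__main__":
    # Hypothetical figures: 0.1 ms flash hits, 5 ms (+/- 2 ms) disk misses.
    for h in (0.0, 0.8):
        mean, sd = latency_stats(h, hit_ms=0.1, miss_ms=5.0, miss_sd_ms=2.0)
        print(f"hit ratio {h:.0%}: mean {mean:.2f} ms, sd {sd:.2f} ms, "
              f"sd/mean {sd / mean:.2f}")
```

With these assumptions, the mean falls from 5.00 ms to 1.08 ms. The standard deviation rises slightly, to about 2.15 ms. The ratio of standard deviation to mean grows from 0.40 to roughly 2. The misses still take as long as before, which is why workloads dominated by rare, very long IOs see little benefit.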

Overall, the EMC VFCache PCIe solution performs well against a large number of good PCIe cards from Fusion-io, Virident, Texas Memory, and others. However, it has fewer options (e.g., cache size, MLC or SLC) and less support at this early stage of development. For VMware the solution is not complete, and solutions from Fusion-io, Virsto, and others are currently superior. EMC should, and probably will, bring out a much improved solution for VMware and Hyper-V.

At the moment there is no integration between VFCache and FAST tiered storage on VMAX and VNX storage arrays. EMC has indicated that it is looking at greater integration between VFCache and FAST, with hints and improved pre-fetching between the two sets of software. De-duplication, by identifying identical blocks in the cache, could also be a future improvement. It seems likely that information will flow down from the server and be able to assist the array. Other improvements may come from using spare SCSI fields to tag where the data is coming from. This could even start to look like system-managed storage on the mainframe.
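De-duplication in a cache is usually done by fingerprinting block contents, so that identical payloads are stored only once. The sketch below is a hypothetical illustration of that general technique, not an EMC design. It assumes SHA-256 as the fingerprint and uses reference counts so that overwritten blocks free their copies. A production design might also compare bytes when fingerprints match.

```python
import hashlib


class DedupBlockCache:
    """Hypothetical sketch: identical block payloads are stored once."""

    def __init__(self):
        self.payloads = {}  # digest -> payload (one physical copy)
        self.refs = {}      # digest -> number of logical blocks pointing at it
        self.index = {}     # logical block address -> digest

    def put(self, lba, payload: bytes):
        digest = hashlib.sha256(payload).hexdigest()
        old = self.index.get(lba)
        if old == digest:
            return                          # same content already mapped here
        if old is not None:
            self._release(old)              # drop this address's old content
        if digest in self.refs:
            self.refs[digest] += 1          # duplicate content: just add a reference
        else:
            self.payloads[digest] = payload
            self.refs[digest] = 1
        self.index[lba] = digest

    def get(self, lba):
        digest = self.index.get(lba)
        return None if digest is None else self.payloads[digest]

    def physical_blocks(self):
        return len(self.payloads)

    def _release(self, digest):
        self.refs[digest] -= 1
        if self.refs[digest] == 0:          # last reference gone: free the copy
            del self.refs[digest]
            del self.payloads[digest]
```

Three logical blocks holding the same 8KB pattern occupy one physical slot. For a capacity-limited card, that is the attraction.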

Action item: EMC has committed significant resources to the flash business unit and has taken a small but important step toward moving the locus of control toward the server. VFCache will be most valuable for workloads under performance pressure that fit the best use guidelines above. There will be some overhead in reviewing and adjusting the system based on performance metrics. CTOs and database administrators should also review other methods of using flash, including SSDs in the array, PCIe cards in the array, and putting key tables and volumes as read-write volumes on the PCIe card. EMC's entry has verified the market, and EMC is very likely to significantly enhance VFCache over time.

Assessment of EMC Project Thunder, Server Area Networks

Introduction

On Monday, February 6, 2012, EMC formally announced two projects:

- Project Lightning, now named EMC VFCache, available in March 2012 and assessed in detail above.

- Project Thunder, which will be in beta testing in 2Q 2012.

Project Thunder - Introducing Server Area Networks

One of the keys to EMC's success was its early embrace of SANs (storage area networks). SANs gave operations great flexibility in connecting servers to storage and have grown from simple configurations to complex SANs spanning metro distances. Single arrays have grown into federated clusters of storage arrays. Operating systems, file systems, database systems, and hypervisors have developed to take advantage of the rich storage infrastructure.

This topology has two major constraints. The first is the relative slowness of IO to persistent storage, mainly disk drives. Well-tuned storage environments struggle to keep IO response times in the low milliseconds, while the power of processors has grown with Moore's Law. The problem of IO variance (the phantom 700ms IO response times that come and go without reason) has grown worse. The cost-per-gigabyte of storage has dropped with Moore's Law, while the cost of IO has remained static. Vendors and practitioners have struggled to keep storage from being the bottleneck. Storage is assumed to be the problem in the data center whenever an application does not perform.

Flash has changed the cost, speed, and IO density of persistent storage by orders of magnitude, and will continue to do so for several more generations. As with any technology, smart technologists have predicted that performance increases will cease because of some barrier, only to see that barrier removed by even smarter people. Signal processing algorithms from Anobit (now owned by Apple) are the latest miracle cure for increasing the life of flash bit cells.

EMC's Project Thunder aims to replicate its success with SANs in server area networks. Network throughput can be 10 times faster, network latency can be 1,000 times lower, the IO rate can be 100,000 times faster, and IO density (MIOP/TB) can be 200,000 times greater. The pure cost of storage can be 78 times lower than on SANs if only a trickle of data needs to be read or written. Cost/GB is no longer a useful metric for describing the value of storage.
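IO density is IOPS divided by capacity. The sketch below computes it for two hypothetical devices, assuming that MIOP/TB means millions of IOPS per terabyte and using decimal terabytes. The device figures are illustrative only, and the resulting ratio is not meant to reproduce the figures quoted above. Any such ratio depends entirely on which devices are compared.

```python
def io_density(iops, capacity_gb):
    """IO density in IOPS per TB (decimal: 1 TB = 1,000 GB)."""
    return iops / (capacity_gb / 1000.0)


def miops_per_tb(iops, capacity_gb):
    """Same metric in millions of IOPS per TB (assumed meaning of MIOP/TB)."""
    return io_density(iops, capacity_gb) / 1_000_000.0


if __name__ == "__main__":
    # Hypothetical devices, not the configurations behind the ratios above.
    disk = io_density(iops=80.0, capacity_gb=2000.0)       # high-capacity disk
    flash = io_density(iops=100_000.0, capacity_gb=300.0)  # PCIe flash card
    print(f"disk {disk:,.0f} IOPS/TB, flash {flash:,.0f} IOPS/TB, "
          f"ratio {flash / disk:,.0f}x")
```

Because a disk drive's IOPS barely grow as its capacity grows, its IO density falls with every capacity generation. This is one reason the text argues that cost/GB alone no longer captures the value of storage.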

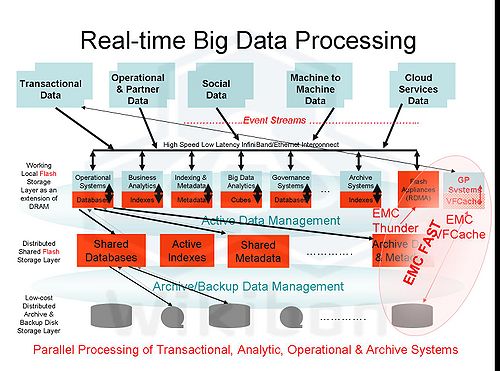

Figure 1 shows EMC's vision of a Server Area Network, a set of appliances that sit between the blade servers and traditional storage arrays.

In a previous alert Wikibon put forward a five layer model for future computing. This is shown in Figure 2 below.

The EMC Project Thunder is mapped onto this model in Figure 3 below.

One key difference between the two visions is the use of flash as an extension of main memory: the ability of the processor to write atomically and directly to persistent storage. This can significantly improve the performance of file systems, databases, and many operating system and hypervisor functions, but software will need to change to take full advantage. These changes are likely to take several years to come into general use.

This technology is at a very early stage of development, and other vendors are presumably working on solutions. Fusion-io has been an important early developer of the technology and will continue to contribute. Cisco, EMC with VCE, HP, IBM, and Oracle will all be contributing, as will software giants such as Microsoft and SAP and the database vendors.

Action item: The most important potential of this announcement is for future systems design. As Wikibon has stated before, future systems should be designed top-down in an IO-centric era, assuming that IOPS are now very low cost compared to disk-based systems. Big data transaction systems and big data analytic systems are likely to be designed together with the data flowing top-down from servers to layers of flash, picking up indexes and metadata, before finally ending up on disk for long-term storage. The most important capability will be the ability to manage active data across the SAN, levels of persistent flash storage, remote copies, and indexes and metadata. This management layer will be the most important long term decision that CTOs and CIOs will need to assess.

Maturing the Flash Storage Ecosystem

The locus of infrastructure control changes as technology advances. In the 1990s, EMC led the charge to move intelligence and value out of the server and into centralized storage arrays. In the last decade, virtualization (led by VMware) again shifted industry power structures by abstracting physical infrastructure, rippling across servers, networks, storage, and beyond. Beyond virtualization, the explosive growth of data and new technology inflection points have led to what Wikibon calls an IO-centric model of future computing.

Source: Wikibon 2012

As shown in Figure 1, flash storage is not a single technology and fits many use cases. PCIe flash delivers a different performance profile than SSD in the storage array. While Fusion-io and EMC’s VFCache partly overlap, today their use cases and deployment models are different. The companies are on a collision course for the next year as Fusion-io expands its offering based on the acquisition of IO Turbine and EMC looks to expand its flash portfolio. A large battlefield will be the PCIe Flash Integration Layer. PCIe appliances are the only way to deliver the horsepower for clustered servers. The real issue is who will control active data management within EMC’s Project Thunder solution, which will compete with a growing number of flash startups.

EMC’s VFCache is a 1.0 product release and has noticeable gaps in the product offering that will be filled in the near future. First, it lacks solutions for blade servers. EMC stated that these would be coming soon. While Micron is EMC’s primary source for VFCache flash, LSI announced that it is a supplier to EMC and separately that it is building a solution for Cisco’s UCS (B-Series) blades. While HP is a competitor to EMC on the storage side, HP’s server group has a history of broad industry support (like the recent Cisco FEX module), so adding a VFCache module in the future (HP already has a Fusion-io solution) is not out of the question. Alternatively, EMC’s future “Project Thunder” solution is an external appliance that allows multiple VFCache cards placed in an appliance to be shared amongst multiple servers that are connected via a high speed connection (either InfiniBand or Ethernet).

Another noticeable gap for VFCache is that VMware support is currently through a driver in the guest OS rather than in the hypervisor. As noted by EMC’s Chad Sakac, this means that vMotion cannot be used while the cache is active, which limits the applicability of the solution in virtualization environments. Once VMware integration and VFCache modules for Cisco blade servers are available, the solution is expected to move into VCE’s Vblock environments. A new startup, Nutanix, is already shipping a converged compute and storage solution that leverages integrated flash (both Fusion-io PCIe and SSD).

Action item: CIOs looking to transform the business should keep a close eye on flash storage. Deployed properly, flash technologies can allow businesses to create new applications and find new ways to derive greater value from information. That said, the ecosystem for flash technologies is evolving rapidly, so flash should be used tactically where there is a clear benefit for specific applications.

How Storage Admins can Stay Relevant

At last week's Peer Incite reviewing EMC's VFCache, the Wikibon community discussed the emerging flash-based IO hierarchy and its implications for the role of the storage admin. In today's do-more-with-less economy, CIOs are talking about streamlining organizational structures generally and reducing "admin creep" specifically. This is a wake-up call for classic storage administrators to educate themselves in new disciplines.

As documented in the Professional Alert Opportunity: The Veritas of the Flash Stack, 20 years ago storage function began moving from the host server to the array. As this occurred, many DBAs got out of the business of storage performance management, tuning, and volume management and the SAN manager became an important role within many organizations, co-opting many of these tasks.

As signaled by announcements such as EMC's VFCache, the storage hierarchy is evolving. This is both an opportunity and a threat to storage administrators. On the one hand, as storage management function moves up the stack and closer to the processor, it is an opportunity for storage professionals to expand their knowledge and their sphere of influence. On the other, as applications begin to take advantage of new system architecture and design (see http://wikibon.org/wiki/v/Real-time_IO_Centric_Processing_for_Big_Data), a sea change may occur within organizations whereby new skills will be required to thrive.

In particular, storage admins have an opportunity to hone virtualization skills (e.g., VMware and Hyper-V) while at the same time becoming more server-savvy. Industry trends are pointing toward server architectures becoming more "Big Data Friendly" and data-centric, and storage admins who update their skill sets stand to prosper. The most prudent path is to become conversant in related but non-core areas while specializing deeply in a particular discipline. For example, a storage pro might become more familiar with VMware and new server architectures while specializing deeply in an application area such as database performance.

By identifying emerging industry waves and staying ahead of the education curve, storage admins can remain relevant and thrive in a world where the storage hierarchy that's been familiar for decades is about to undergo significant change.

Action item: For decades, storage admins have seen function move in their direction (i.e., toward the array). The pendulum is now swinging as function moves back toward the server. However, we are entering a data-centric (big data) era, and this bodes well for storage admins who update their skills and follow the functional movement. Storage admins should increase their skills in the areas of virtualization, new server design, and even specific application areas as a means of increasing their value within their organizations and identifying ways to exploit the trend toward IO-centric architectures.

Opportunity: The Veritas of the Flash Stack

For the past 15-20 years, storage function (e.g. snapshots, data protection, recovery, etc.) has steadily migrated away from the server or host processor, outside the channel, and toward the storage array. Ironically, EMC started this trend on a rapid trajectory with its original Symmetrix announcement.

A key milestone in the reversal of this phenomenon came in 2008, when EMC landed a haymaker and shocked the high-end array market with the introduction of enterprise flash drives as a plug-in to the array. In another ironic twist, Fusion-io was coming out of stealth at exactly this time, with a vision to disrupt the current order and move a persistent storage resource much closer to the CPU.

A new storage hierarchy is emerging inclusive of memory class extensions on the CPU side of the channel, flash cache, all-cache arrays, network flash controllers and flash in the array. This new hierarchy will ultimately have to provide a connection to the 'bit bucket' - the cheap and deep storage array full of low-cost SATA devices - and speak the language of virtualization and clustering.

EMC's VFCache announcement is a clear attempt to extend the value proposition of its arrays closer to the processor. By putting forth a vision that ties array function to PCIe flash through automated tiering software, EMC hopes to stake an early claim on the new emerging IO hierarchy. There are two broad strategies vendors will take to go after this "land grab," including:

- Storage-centric: EMC and other storage companies will link their installed bases to the emerging flash hierarchy through automated tiering software. In this approach, the locus of control will be the processing power in the array.

- Processor-centric: Server vendors including HP, IBM, Dell and perhaps Intel will extend the server and adapt it to more data-oriented workloads; making the CPU the central point of control.

Fusion-io for its part will continue to build out its stack and attempt to get ISVs to write to its API. EMC, other storage suppliers, and the server vendors will angle to create both de facto and de jure standards around the new emerging IO hierarchy.

A potentially significant opportunity is emerging for the supplier that can create this standard, or perhaps even become the independent software supplier to this new storage hierarchy. In the same way Veritas was able to build a base among ISVs, the channel, and users, this new storage hierarchy will need to be performant, protected, efficient and shared. It will require a logical place in the stack, a non-disruptive technology approach and a rich set of storage management functions. While this opportunity may be shared amongst many large suppliers, it is possible that one of the whales, an established startup, or a new software company could deliver this new class of storage services - only time will tell.

Action item: EMC's VFCache signals that a storage-centric model is in play to manage the emerging flash hierarchy. While EMC will put forth a very strong story over time and bring many resources to bear to promote its approach, other models will test the market's desire for a high-performance, persistent, highly available management resource for the new "flash stack." Suppliers should take note that software, not hardware, will be the key differentiator and will deliver the leverage for competitive advantage over the long run. No clear winner has yet emerged, and the vendors with the richest functionality, the best ISV support, and the ability to plug into important channels of distribution will likely take the biggest prizes.

Will Faster Hard Disk Drives Compete with SSDs and Flash-based Caches like VFCache?

During the announcement of VFCache, Pat Gelsinger, President and COO of EMC Information Infrastructure Products, predicted that in the future nearly all active data would be held in flash and that the days of high-speed disk drives were numbered.

Similarly, according to Alan Benway, a master performance consultant with HDS, “10K RPM SFF drives have most of the performance of 15K RPM LFF drives - less than 10% delta in our extensive lab tests when running tests on many LUNs from many RAID Groups (120 disks or more under load). They are the same for heavy write loads. The 15K drives are too costly to make for the volume and will not be manufactured much longer (18-24 months at most). Development on 15K drives has stopped, and they cannot be increased in capacity due to physics.”

Note that during Wikibon’s Peer Incite call on VFCache, EMC’s Barry Burke disagreed with Alan and claimed that many EMC customers need 15K drives for their performance advantage over 10K drives.

Nonetheless, although high-RPM drives have held the edge in Tier 1 applications, they are clearly being challenged by solid-state drives and caches, especially as the cost of flash memory keeps dropping like a rock. So why doesn't the industry simply build faster-spinning drives (say, 20K RPM), since they would still cost much less than solid-state drives? Ultrahigh-speed HDDs spinning faster than 20K RPM have been researched but never commercialized because of heat generation, power consumption, noise, vibration, and poor long-term reliability. Recall that the power a drive requires is roughly proportional to the cube of its RPM, and power translates to heat. Also note that while scientists have successfully demonstrated higher areal densities and transfer rates, they have not been able to significantly reduce mechanical delays.
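To make the trade-off concrete, the short calculation below compares spindle power and average rotational latency at several speeds. It is a sketch under stated assumptions: it applies the article's "roughly cubic" rule with an exponent of exactly 3 (the `exponent` parameter is an illustrative assumption, not a measured value), it covers only the rotation-dependent part of drive power, and it ignores seek time. Average rotational latency is half a revolution, i.e. 30,000 / RPM milliseconds.

```python
def relative_spindle_power(rpm: float, baseline_rpm: float, exponent: float = 3.0) -> float:
    """Spindle power at `rpm` relative to `baseline_rpm`.

    Assumes power is proportional to RPM**exponent; exponent=3.0 is an
    illustrative approximation of the "nearly cubic" rule, not a measurement.
    """
    return (rpm / baseline_rpm) ** exponent


def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: on average the platter turns half a revolution."""
    seconds_per_rev = 60.0 / rpm
    return 0.5 * seconds_per_rev * 1000.0


# Compare common and hypothetical spindle speeds against a 15K RPM baseline.
for rpm in (10_000, 15_000, 20_000, 25_000):
    print(
        f"{rpm:>6} RPM: {relative_spindle_power(rpm, 15_000):.2f}x the power of a 15K drive, "
        f"{avg_rotational_latency_ms(rpm):.2f} ms average rotational latency"
    )
```

Under these assumptions, moving from 15K to 20K RPM trims average rotational latency from 2.00 ms to 1.50 ms while raising spindle power by a factor of 64/27, about 2.37x. Seek time, which the sketch leaves out, does not improve with spindle speed at all.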

So, with faster hard drives out of the question and investment in higher-performance 15K drives lacking or diminishing, how long will 15K drives remain available? Wikibon believes that 15K production will begin winding down in the 2014-2015 time frame.

Action item: Users' strategic plans should include aggressive adoption of solid-state storage technologies for high-performance applications and the phasing out of high-RPM hard drives. This phase-out will also change data center power and cooling requirements.

SSD: Does it belong in the host, the network, or the array?

The storage industry has discovered the power of SSD flash technology. We know that SSD delivers orders of magnitude more IOPS and consumes less power than traditional HDD, but costs more per GB. Every few weeks a new product is introduced to the market that claims to be the answer to all enterprises' performance headaches.

The challenge so far has been inadequate information. The industry as a whole is trying to figure out where SSD should live to provide the best performance per dollar. The options are many: the host, the network, the array, managed by the file system, or controlled through dynamic tiering. Recently, EMC announced VFCache, a PCIe SSD card deployed in the host with software that caches hot blocks from the server's IO, with the future potential to receive hints from a storage array on what to cache. EMC already offers the option to deploy a shelf of disk-form-factor SSDs in its storage systems and to move data in and out of SSD with its FAST (Fully Automated Storage Tiering) software. The question then is: What should the channel be recommending?

In the field, we are being asked to differentiate among all these options, never mind among vendors. Why PCIe rather than just an SSD drive? What do I gain by deploying SSD in the network as cache? Should I use SSD as cache or as primary storage media? There is only one answer to these questions: It depends.

Action item: Given the complexity of using SSD in storage, I would ask vendors in general for the following:

Please make the message to the market as clear as possible. Sometimes less marketing speak is better. Clearly state which workloads are best suited to your technology based on two or three criteria. Finally, if you have multiple SSD options, please communicate your strategy to your channel partners so that your sales teams and your channels are in sync on the message to the end user. This may be achieved through compensation incentives or special programs. Don't leave it to the field to decide, because the field will always go with whatever makes them the most money.

PCIe Card Proliferation Equals New Opportunities for Professional Services

The last three years have seen a profusion of PCIe flash card products brought to market, mostly by startups and specialist suppliers such as Fusion-io, Micron, Meridian, and Warp Drive. EMC's February 6 VFCache announcement is the first, but certainly not the last, such announcement from a major hardware vendor. The floodgates are open, with the promise of order-of-magnitude drops in read and write latency.

This profusion of products provides a great deal of choice for users. And choice is good, right? For instance, Fusion-io provides a large-capacity product supporting both high-volume reads and writes, backed by a single-tier, flash-only, storage-on-server philosophy that in many ways is a throwback to the architectures of the 1990s. Data redundancy and sharing are provided by copying data between servers. In contrast, EMC offers smaller-capacity cards providing a read-only cache intended as an extension into the server of a traditional disk or hybrid flash/disk storage array. The cache is intended to hold the 10% of data in most traditional IT environments that receives 90% of the reads.
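The read-only caching model described above can be illustrated with a small simulation. This is a hypothetical sketch, not EMC's VFCache implementation: the `ReadCache` class, its least-recently-used (LRU) eviction, the invalidate-on-write policy, and the 1,000-block workload in `simulate_skewed_reads` are all illustrative assumptions. Reads are served from host flash on a hit and fetched from the array on a miss; writes always go to the array, which remains the system of record.

```python
import random
from collections import OrderedDict
from typing import Dict


class ReadCache:
    """Hypothetical host-side read cache in front of a slower storage array.

    Reads are served from the cache on a hit; on a miss the block is fetched
    from the backing array and inserted, evicting the least recently used
    block if the cache is full. Writes go straight to the array and
    invalidate any cached copy (an assumed policy, chosen for simplicity).
    """

    def __init__(self, capacity_blocks: int, backing: Dict[int, bytes]) -> None:
        self.capacity = capacity_blocks
        self.backing = backing                  # stands in for the storage array
        self.cache: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, block: int) -> bytes:
        if block in self.cache:
            self.cache.move_to_end(block)       # mark as most recently used
            self.hits += 1
            return self.cache[block]
        self.misses += 1
        data = self.backing[block]              # slow path: fetch from the array
        self.cache[block] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)      # evict least recently used block
        return data

    def write(self, block: int, data: bytes) -> None:
        self.backing[block] = data              # the array stays authoritative
        self.cache.pop(block, None)             # drop any stale cached copy

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def simulate_skewed_reads(requests: int = 20_000, seed: int = 1) -> float:
    """Hypothetical 90/10 workload: 90% of reads go to the hottest 10% of 1,000 blocks."""
    rng = random.Random(seed)
    array = {b: bytes([b % 256]) for b in range(1000)}
    cache = ReadCache(capacity_blocks=100, backing=array)  # cache sized to 10% of data
    for _ in range(requests):
        if rng.random() < 0.9:
            block = rng.randrange(100)          # hot 10% of blocks
        else:
            block = rng.randrange(100, 1000)    # cold 90% of blocks
        cache.read(block)
    return cache.hit_ratio()


print(f"simulated hit ratio: {simulate_skewed_reads():.2f}")
```

With the cache sized exactly to the hot 10%, plain LRU lands below the ideal 90% hit ratio, because every cold read evicts a hot block. That gap is one reason a cache that can take hints from the array about what to keep, as EMC has suggested for the future, may be worth more than raw cache capacity.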

So who is right here? As Noemi Greyzdorf, VP of strategy & alliances at Cambridge Computer Services, writes in her article SSD: Does it belong in the host, the network, or the array?, “There is only one answer to these questions: It depends.” A user trying to improve slow response times from the corporate ERP system will probably need an entirely different system than a large online retailer concerned about protecting a high volume of transactions from server or connectivity problems. Further questions complicate the equation: Do other applications also need to access or supply the data? Is the environment virtualized? Is the data in small or large chunks? Who is the user's main storage and server vendor, and what is that vendor's timetable for bringing out flash products? How many PCIe slots are open on the server?

Flash is expensive, and a wrong decision can cost a user dearly. Nor is flash just an Internet or enterprise issue: SMBs also have performance issues and will need the new kinds of applications that flash will enable. Few users have the resources to develop in-house the expertise to design an optimal system, which means the door is open for consultants, whether from big six firms or smaller organizations.

Action item: Consultancies should start developing expertise and hands-on experience with various PCIe products now to be prepared to help their customers through the often confusing forest of these new products. This is a genuine new opportunity to provide important value to clients of all sizes. And Wikibon, which has been a leader in covering this fast-developing area within storage, is a good place to start acquiring that expertise.