Introduction

On Monday, February 6, 2012, EMC formally announced two projects:

- Project Lightning, now named EMC VFCache, available in March 2012,

- Project Thunder, which will be in beta testing in 2Q 2012. Follow the link for an assessment.

The most significant statement of the announcement came from Pat Gelsinger, who forecast that in the future nearly all active data would be held in flash and that the days of high-speed disk drives were numbered. He also emphasized that high density drives would continue to hold the majority of data. The view is supported by the comment on this Alert by Alan Benway that investment in 15K drives has ceased, and manufacturing will last only 18-24 months. Even the very recent announcement that the speed of writing to disks might be increased by 1,000 times does nothing to solve the problem that once data is on disk, it is slow and expensive to retrieve anything beyond a tiny fraction of that data.

Over the last two decades, EMC has led a highly successful drive to migrate data management function from servers to networked storage arrays, saving enterprises billions in the process. The realization that with flash the pendulum of data control is now moving back towards servers led EMC to form the EMC Flash Business Unit in May 2011 with the mission to compete strongly in the emerging flash market. This mission will also compete with EMC's other highly successful data storage business units.

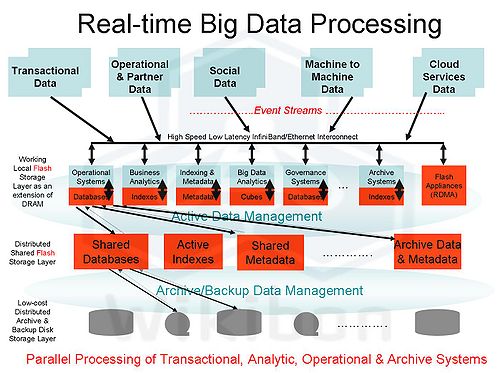

In a previous alert Wikibon put forward a five layer model for future computing. This is shown in figure 1 below.

EMC VFCache Summary

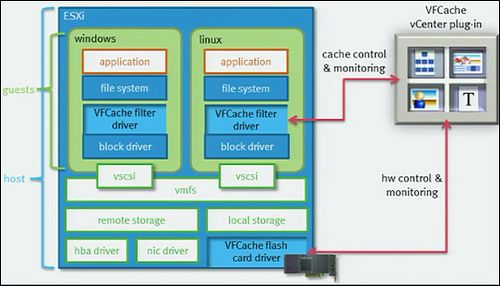

EMC Project Lightning (a.k.a. EMC VFCache) is mapped onto this model in Figure 2 below.

The VFCache is a 300GB SLC flash PCIe card from Micron or LSI that can plug into qualified Cisco, Dell, HP, and IBM rack and free-standing servers. EMC indicated that mezzanine VFCache cards would be available for some blade servers in the future. Only one VFCache card can be installed per server, and it requires installation of an EMC VFCache filter driver.

Suitable IOs are then cached in the card on a FIFO basis. When the card is full, the oldest data is overwritten. To reduce the risk of large sequential IOs flushing more useful data from the cache, the VFCache filter driver does not cache IOs with block sizes of 64KB or greater.
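The caching behavior described above can be illustrated with a minimal sketch. This is not EMC's filter driver; it is a toy model that assumes a cache keyed by block address, and the class name `FifoReadCache`, its method names, and the small capacity used in the demonstration are all hypothetical. It shows only the two rules stated in the text: FIFO replacement and bypass of IOs of 64KB or greater.

```python
from collections import OrderedDict

# IOs of this size or larger are not cached (the 64KB rule from the text).
BLOCK_LIMIT_BYTES = 64 * 1024


class FifoReadCache:
    """Toy FIFO read cache. Hypothetical model, not the VFCache driver."""

    def __init__(self, capacity_bytes):
        self.capacity_bytes = capacity_bytes
        self.used_bytes = 0
        self.blocks = OrderedDict()  # address -> size, kept in insertion order

    def read(self, address, size_bytes):
        """Return 'hit', 'miss' (block is now cached), or 'bypass'."""
        if size_bytes >= BLOCK_LIMIT_BYTES or size_bytes > self.capacity_bytes:
            return "bypass"  # large IO goes straight to the array
        if address in self.blocks:
            return "hit"  # FIFO: a hit does not refresh the block's age
        # Cache is full: overwrite the oldest data until the new block fits.
        while self.used_bytes + size_bytes > self.capacity_bytes:
            _, evicted_size = self.blocks.popitem(last=False)
            self.used_bytes -= evicted_size
        self.blocks[address] = size_bytes
        self.used_bytes += size_bytes
        return "miss"


if __name__ == "__main__":
    cache = FifoReadCache(capacity_bytes=16 * 1024)  # tiny cache for the demo
    for address, size in [(0, 8192), (1, 8192), (0, 8192), (2, 8192),
                          (0, 8192), (3, 128 * 1024)]:
        print(address, size, cache.read(address, size))
```

In the demonstration, the second read of address 0 is a hit, but the read of address 2 still evicts address 0 because it is the oldest entry. This is the main difference from an LRU policy, under which the recently hit block would have been kept.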

The VFCache card can be split and some of the space used for complete volumes that will fit the space allocated. At the moment protection and availability of the data in the split portion of the cache is completely up to the user to determine and maintain.

EMC VFCache Recommendations & Conclusions

The best use guidelines for VFCache are:

- Use VFCache only for simple caching situations:

- Read Intensive Workloads – Applications that have a read-heavy workload, where the ratio of reads to writes is at least 50%, are good candidates for caching.

- Small I/O Size – Applications whose read requests are 64KB or smaller are eligible to be cached with VFCache.

- Random I/O – Random I/O activity makes a better candidate for caching than sequential I/O. Sequential I/O activity does not often result in “hot” data or repeated access to the same data.

- Good locality of reference.

- Don’t use the split cache yet for production work unless the data is truly transient and does not need to be recovered if the server fails. Users are on their own for recovery. Users should wait for greater support.

- At the moment the support for VMware is limited, as the filter driver for VFCache is installed in each VM guest. There is no hypervisor driver as yet. A vCenter plug-in for management is available.

- Native support for vMotion is not available; scripting will be required to remove and re-add storage.

- The underlying issue is that VFCache appears to the host as a local storage volume, which would disappear if a virtual machine is moved to another server.

- The problem has been solved by vendors such as Virsto that virtualize the storage presentation directly to the hypervisor.

- Wikibon believes that EMC is likely to go in this direction in the future, but it’s a big hole in the product for now. Wikibon believes that EMC is working hard on solutions.

- There is no support for clustering environments such as Windows clusters.

- The initial supported environments are Microsoft Windows and Linux either native or under VMware without vMotion support.

The Micron PCIe card uses the latest flash technology and has been well reviewed. EMC claims to compete in three areas with the Micron & LSI cards:

- Improved IOPS: up to 4x throughput increase. These are reasonable claims for normal database environments such as Oracle and Microsoft SQL. A benchmark by Dennis Martin of Demartek concluded that single-application benchmarks could achieve 2.5 to 3.5 times the throughput in the database environments he ran. These types of improvement can be achieved in environments where IOPS are the performance constraint and the IO profile meets the best use guidelines listed above.

- EMC claimed in an announcement slide that its cards are four times better than another "popular" PCIe card for 100% read and two times better for 50% read. This result may be true of a specific card and a specific workload but is very unlikely to be found in the real world. In the same Demartek report above, Demartek found identical throughput at 100% read and a small improvement (<10%) for 80% and 60% read environments. These improvements are within the expected improvement from a technology turn.

- Reduced Latency: Latency and latency variance are the greatest challenges to database designers and administrators. The average latency will be much better for reads, and this will help in obtaining the all-important write locks when they are required. However, caching solutions increase the variance very significantly. Environments that are sensitive to rare but very long IO times are unlikely to be improved.

- EMC claimed lower latency for the reads from a VFCache solution than other "popular" cards. This is likely to be true for the Micron card but less likely for the LSI card. The Micron card had about 15% lower maximum latency in each of the comparison tests shown in the study above.

- EMC claimed that server CPU processor utilization was lower with the VFCache card because processing is offloaded to the card. This may have been important with previous servers with a small number of cores. However, Wikibon has consistently pointed out that server utilization is very low (~10%) on multi-core servers. The trend is to use server cycles from spare cores instead of offloading work to slower outboard processors, because the server processors are faster and more system information is available, especially for recovery and write optimization. This is a design decision and should not be a buying criterion.
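The latency point in the list above (better average, wider spread) can be made concrete with a small worked calculation. This is a sketch under strong simplifying assumptions: every read is either a cache hit at one fixed latency or a miss at another fixed latency, and the figures used (100 microseconds for a hit, 5,000 microseconds for a miss, 90% hit rate) are illustrative values, not measurements of VFCache or any array. The function name `cached_read_latency` is hypothetical.

```python
def cached_read_latency(hit_rate, hit_us, miss_us):
    """Mean and standard deviation of read latency in a two-point hit/miss model.

    Assumes each read independently hits (latency hit_us) with probability
    hit_rate, or misses (latency miss_us) otherwise.
    """
    mean = hit_rate * hit_us + (1.0 - hit_rate) * miss_us
    variance = hit_rate * (1.0 - hit_rate) * (miss_us - hit_us) ** 2
    return mean, variance ** 0.5


if __name__ == "__main__":
    # Illustrative, assumed latencies in microseconds.
    for hit_rate in (0.0, 0.5, 0.9):
        mean, std = cached_read_latency(hit_rate, hit_us=100.0, miss_us=5000.0)
        print(f"hit rate {hit_rate:.0%}: mean {mean:.0f} us, std dev {std:.0f} us")
```

With these assumed figures, a 90% hit rate brings the mean read latency down from 5,000 to roughly 590 microseconds, but the standard deviation rises from zero to roughly 1,470 microseconds, about two and a half times the mean. The slowest reads are still misses at the full 5,000 microseconds, which is why workloads that are sensitive to the occasional long IO see little benefit in this model.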

Overall, the EMC VFCache PCIe solution performs well against a large number of good PCIe cards from Fusion-io, Virident, Texas Memory and others. However, it has fewer options (e.g., cache size, MLC or SLC) and less support at this early stage of development. For VMware the solution is not complete, and solutions from Fusion-io, Virsto, and others are currently superior. EMC should and probably will bring out a much improved solution for VMware and Hyper-V.

At the moment there is no integration between FAST tiered storage on VMAX and VNX storage arrays and VFCache. EMC has indicated it is looking at greater integration between VFCache and the FAST system, with hints and improved pre-fetching between the two sets of software. De-duplication by identifying identical blocks in the cache could also be a future improvement. It seems likely that information will flow down to the array and be able to assist it. Other improvements may come from using spare SCSI fields to tag where the data is coming from. This could even start to look like system managed storage on the mainframe.

Action Item: EMC has committed significant resources to the flash business unit, and has taken a small but important step towards moving the locus of control to the server. VFCache will be most valuable for workloads under performance pressure that fit the best use guidelines above. There will be some overhead in reviewing and adjusting the system based on performance metrics. CTOs and database administrators should also review other methods of using flash, including SSDs in the array, PCIe cards in the array, and putting key tables and volumes as read-write volumes on the PCIe card. EMC's entry has verified the market, and EMC is very likely to significantly enhance the VFCache over time.

Footnotes: