
Big Data and Real-time

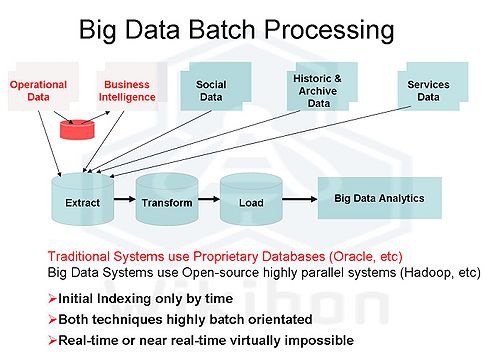

Big data frameworks such as Cloudera's Hadoop distribution solve problems of speed and cost, but do not solve the problem of real-time processing. Figure 1 shows the historical approach: data warehouses are derived mainly from operational data, and both the operational systems and the warehouses use traditional SQL databases such as Oracle to process the data and prepare it for use in analytic tools. The bottlenecks in this system are the database architecture and the time needed to load data from disk drives. Traditional databases were first developed to solve transactional problems and are of high value for those systems. However, they are a very expensive way of managing large amounts of data for use in analytic systems.

Big data solves the problem of cost with open-source-based tools that were first used by Yahoo and Google. It solves the problem of speed by using highly parallel processes and slightly reducing the cost of IO and the cost of data storage. It significantly reduces the cost of software. However, at heart it is still a batch process, as shown by the extract, transform and load steps. The limiting performance factor is still the speed and cost of disk IO.

DRAM & High-Performance IO

The first attempt at increasing the speed of IO was DRAM storage. To overcome the problem of volatility, large battery backup systems were required to retain data in the case of power failure. These devices improved IO speeds but increased the cost of data storage and the cost of IO. They were used only in niche applications requiring very fast IO, such as currency and stock trading and some databases. They suit applications that place a high value on response time and hold relatively small amounts of very high-IO data.

The next candidate puts large amounts of RAM in servers, essentially turning server RAM into a storage device. Several “in-memory databases” are on the market, including the Clustrix NewSQL database, TM1 from IBM, TimesTen from Oracle, WX2 from Kognitio, and HANA from SAP. The Clustrix database and HANA are delivered as appliances, bundling software, servers, battery backup systems, and additional system management capabilities.

The following case study is a good illustration of the types of general-purpose problems that can be addressed with in-memory databases:

Large memory will clearly be part of systems going forward, but the cost of large and ultra-large DRAM systems makes them unlikely to become a general-purpose solution, and they are likely to be superseded by the DRAM/flash hybrid systems discussed below. The reasons for this are:

- The consumer market is unlikely to find any wide-scale requirement for large DRAM systems. Flash is more than adequate for the vast majority of consumer devices. Because of that, very large and ultra-large DRAM systems will not enjoy the economies of scale and investment that drive the consumer market, and will remain expensive.

- The power protection systems for DRAM are bulky and again only useful for part of the server market.

- DRAM is byte addressable, which makes it fast and efficient but makes addressing DRAM very expensive at scale. Flash storage is block orientated.

- The ability to deal with IO is dramatically increased, but the cost of the software and the specialized servers is high.

- The cost of converting all the software for enterprise systems will not be underwritten by the consumer market.
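To make the addressing point concrete, the sketch below counts how many addressable units, and how many address bits, a given capacity needs at byte granularity versus block granularity. The 4 KiB block size and the 1 TiB capacity are illustrative assumptions, not Wikibon figures; real flash devices use a range of page and erase-block sizes.

```python
# Illustrative arithmetic only: compares the number of addressable units
# (and address bits) needed for byte-addressable vs block-oriented storage.
# BLOCK_SIZE = 4096 bytes is an assumed block size, chosen for illustration.

BLOCK_SIZE = 4096  # bytes per block (assumption)


def addressable_units(capacity_bytes: int, unit_bytes: int) -> int:
    """Number of distinct addresses needed to cover a capacity at a given granularity."""
    # Ceiling division so a partial final unit still gets its own address.
    return -(-capacity_bytes // unit_bytes)


def address_bits(units: int) -> int:
    """Minimum number of bits needed to give each unit a distinct address."""
    return max(1, (units - 1).bit_length())


if __name__ == "__main__":
    capacity = 2**40  # 1 TiB (assumed example capacity)
    byte_units = addressable_units(capacity, 1)
    block_units = addressable_units(capacity, BLOCK_SIZE)
    print(f"byte-addressed:  {byte_units:,} units, {address_bits(byte_units)} address bits")
    print(f"block-addressed: {block_units:,} units, {address_bits(block_units)} address bits")
    print(f"ratio: {byte_units // block_units:,}x more addresses at byte granularity")
```

At 1 TiB, byte granularity needs 4,096 times as many addresses as 4 KiB blocks (40 address bits versus 28), which gives a sense of the scale difference behind the point that byte addressing becomes expensive as capacity grows.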

Wikibon believes that this approach works for well-defined, high-value projects where the data requires both significant processing and significant IO, and the dataset is not too large. However, the limited ability of DRAM-only systems to handle very large and ultra-large datasets cost-effectively will reduce their impact on the market in the long term. Many of the techniques developed for in-memory databases and systems will be adopted by directly addressable DRAM/flash-based solutions.

IO Centric Processing – a Real-time Solution

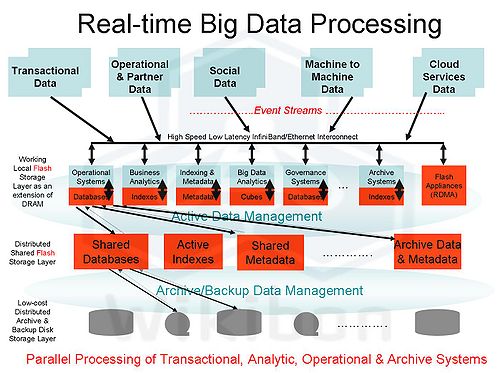

Wikibon has written extensively about the potential of flash to disrupt industries, and about designing systems and infrastructure for the Big Data IO Centric era. The model developed by Wikibon is shown in Figure 3.

The key to this capability is the ability to address flash storage directly from the processor with lockable atomic writes, as explained in a previous Wikibon discussion on designing systems and infrastructure in the Big Data IO Centric era. This technology has brought down the cost of IO-intensive systems by two orders of magnitude (100 times), whereas the cost of hard-disk-only solutions has remained constant. This trend will continue.

This technology removes the constraints of disk storage. It allows transactional, operational and social media data streams to be ingested in parallel and in real time, and it provides enough IO at low enough cost for Big Data transactional systems to run while Big Data indexing and metadata processing proceed in parallel to drive Big Data Analytics.

The implications for system and application design are profound:

- Consolidation of Transactional Systems

One constraint on getting the most out of application packages is that the central database limits the level of integration. For example, in ERP implementations many modules are run independently of the main database to avoid performance degradation for core modules. The Revere case study illustrates the impact of these types of decisions on the productivity of a whole organization. Lower IO latency can remove locking constraints and enable far greater integration of applications. The payback on application consolidation is far, far greater than that of server and storage consolidation.

- Integrated Design of Big Data Analytics together with Operational Systems

Instead of designing or implementing the operational systems or packages first, and then designing the data warehouse and the required extracts as a subsequent project, the two can and should be designed together, and the real-time outputs of the analytic systems can be an input into the transactional and operational systems.

- All Big Data Streams become Potential Application Inputs

Big Data Transaction and Event Systems can leverage the vast amount of information coming from people, systems, and machine-to-machine (M2M) communications, ingest it, and use it to manage business operations, government operations, battlefields, physical distribution systems, transport systems, power distribution systems, agricultural systems, weather systems, and much more.

- Big Data Analytics

High MIOPS/TB capabilities allow the extraction of metadata, index data and summary data directly from the input stream of operational big data. This in turn allows the development of much smarter analytical systems, designed as an extension of the operational system and running in close to real time, instead of relying on a much-delayed extract from processed operational data. Companies can monitor fraud attempts in real time, extract information from log analysis cloud services to identify and plug network weaknesses, monitor the exact power used by utility customers to anticipate and ameliorate brown-outs, and monitor the real-time usage and failure of components in products as diverse as cars and storage arrays, modifying purchases and production appropriately. The potential capabilities are limited only by knowledge of the industry and imagination.
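The sketch below illustrates the idea of extracting index and summary data directly from the input stream: each event updates an index and running totals as it is ingested, and a simple rule flags events for action immediately, rather than waiting for a later batch extract. It is a hypothetical, in-memory sketch; the `Event` fields, the `StreamIndexer` class and the fixed alert threshold are assumptions for illustration, and a real system would persist these structures to low-latency storage such as the flash layer described above.

```python
# Hypothetical sketch: derive index and summary data in-stream as each event
# is ingested, instead of in a later batch extract-transform-load job.
# Event fields and the threshold-based alert rule are illustrative assumptions.
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Event:
    event_id: int
    account: str
    kind: str       # e.g. "payment" (illustrative)
    amount: float


@dataclass
class StreamIndexer:
    """Maintains an index and running summaries as events arrive."""
    alert_threshold: float = 10_000.0  # hypothetical fraud-screening rule
    by_account: dict = field(default_factory=lambda: defaultdict(list))
    totals: dict = field(default_factory=lambda: defaultdict(float))
    counts: dict = field(default_factory=lambda: defaultdict(int))
    alerts: list = field(default_factory=list)

    def ingest(self, ev: Event) -> None:
        # Index data: account -> event ids, updated at ingest time.
        self.by_account[ev.account].append(ev.event_id)
        # Summary data: running total and count per (account, kind).
        key = (ev.account, ev.kind)
        self.totals[key] += ev.amount
        self.counts[key] += 1
        # Real-time check whose output can feed back into operational systems.
        if ev.amount >= self.alert_threshold:
            self.alerts.append(ev.event_id)


if __name__ == "__main__":
    indexer = StreamIndexer()
    for ev in [
        Event(1, "acct-a", "payment", 120.0),
        Event(2, "acct-b", "payment", 15_000.0),
        Event(3, "acct-a", "payment", 80.0),
    ]:
        indexer.ingest(ev)
    print(indexer.by_account["acct-a"])           # [1, 3]
    print(indexer.totals[("acct-a", "payment")])  # 200.0
    print(indexer.alerts)                         # [2]
```

The design choice is that the index, the summaries and the alert are all by-products of ingest, so analytics and operations read the same current state; in a batch design the same structures would only exist after the next ETL run.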

- Big Data Archive Systems

Archive systems are the orphans of the data center: there are good intentions to spend money and sort them out, but never the budget to make a difference. The difference an IO Centric environment makes is the ability to exploit the extracted metadata and indexes in real time. This could at last allow a complete separation of backup and archiving, improve the functionality of archive systems, and reduce the cost of implementing them. The emails associated with an order could be identified and cross-referenced, governance systems kept current, and quality systems enabled to review trends over time in real time and identify potential issues. Holding archive metadata and indexes in the active storage layers will allow much richer data mining, and at the same time allow more effective access to detailed data records and the deletion of old data.

Conclusions

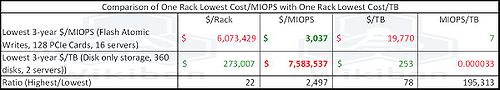

Wikibon concludes that IO Centric computing will change the design of Big Data systems, making them truly real-time, event-driven systems. Table 1 compares one rack of the highest-performing flash with one rack of the lowest-cost capacity hard disk. Flash technologies and innovative software such as the Auto Commit API in VSL from Fusion-io have driven performance up to 7 million IOPS per terabyte (7 MIOPS/terabyte), compared with only 33 IOPS/terabyte (0.000033 MIOPS/terabyte) for disk, a difference of roughly 200,000 times. Cost per MIOPS ($/MIOPS) is about 2,500 times lower for flash, while cost per terabyte ($/terabyte) is about 78 times lower for hard disk. There is a great deal of innovation in the storage marketplace at the moment, and many technologies will fit in the middle.
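The headline ratios above are linked: because $/MIOPS equals $/terabyte divided by MIOPS/terabyte, flash's $/MIOPS advantage should equal its MIOPS/terabyte advantage divided by its $/terabyte premium. The sketch below checks this using only the figures quoted in the text (7 MIOPS/TB, 33 IOPS/TB, and a 78x per-terabyte premium for flash); the full Table 1 values are not reproduced here, so this is a consistency check, not a recalculation of the table.

```python
# Consistency check of the Table 1 headline ratios quoted in the prose.
# Inputs are only the figures stated in the text, not the full Table 1 data.

FLASH_IOPS_PER_TB = 7_000_000   # 7 MIOPS/TB, highest-performing flash rack
DISK_IOPS_PER_TB = 33           # 33 IOPS/TB, lowest-cost capacity disk rack
FLASH_COST_PREMIUM_PER_TB = 78  # flash $/TB is about 78x disk $/TB


def performance_ratio(flash_iops_per_tb: float, disk_iops_per_tb: float) -> float:
    """How many times more IOPS per terabyte flash delivers than disk."""
    return flash_iops_per_tb / disk_iops_per_tb


def cost_per_miops_ratio(perf_ratio: float, flash_cost_premium_per_tb: float) -> float:
    """How many times cheaper flash is per MIOPS.

    $/MIOPS = ($/TB) / (MIOPS/TB), so flash's advantage in $/MIOPS is the
    MIOPS/TB ratio divided by flash's per-terabyte cost premium.
    """
    return perf_ratio / flash_cost_premium_per_tb


if __name__ == "__main__":
    perf = performance_ratio(FLASH_IOPS_PER_TB, DISK_IOPS_PER_TB)
    advantage = cost_per_miops_ratio(perf, FLASH_COST_PREMIUM_PER_TB)
    print(f"MIOPS/TB ratio (flash vs disk): {perf:,.0f}x")
    print(f"$/MIOPS advantage for flash: {advantage:,.0f}x")
```

With these inputs the $/MIOPS advantage comes out at roughly 2,700 times, in the same range as the "about 2,500 times" quoted; the gap may reflect rounding in the quoted figures or the exact rack configurations behind Table 1.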

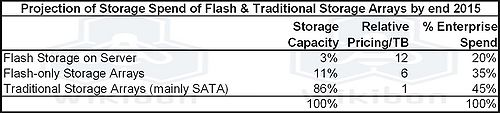

Wikibon believes that the per-terabyte cost premium of flash over disk storage will continue to decrease, while the per-MIOPS cost premium of disk will continue to increase. This underpins the projection of 2015 enterprise data center spend by storage type that was published in 2011, as shown in Table 2.

Source: Table 1 in 2011 Flash Memory Summit Enterprise Roundup, Wikibon 2011

Flash will be a small percentage of storage capacity for Big Data, but a large percentage of IT storage spend. Industry and storage executives will need to wean themselves off measuring storage spend and the storage market by capacity; this metric is becoming less meaningful by the minute.

The bottom line is that the simplification and enhanced capability of Big Data applications will more than justify the additional cost of flash systems with high MIOPS capabilities. The road has been prepared by in-memory databases based on DRAM, such as HANA from SAP referenced in Figure 2 above. However, these systems are limited in scope and scalability, and will be overtaken by lower-cost and more flexible flash-based technology. The early adopters of flash at large-scale web operations such as Facebook and Apple, and the impact on total business productivity for customers such as Revere, illustrate the business benefits that high MIOPS flash devices can and will bring to organizations large and small.

Action Item: The trend will impact all IT operations, and especially the development of operational and analytic systems. CTOs and CIOs need to understand this trend in depth, and determine what potential competitive advantage can be derived in their industry. They should be working with vendors and ISVs who understand and embrace the potential.
