Research Premise

Increasingly, the Microsoft and Oracle application stacks include storage-related services. Examples include Microsoft Database Availability Groups (DAG) for Exchange 2010 and Oracle Automatic Storage Management (ASM). Leveraging the application stack to provide storage services can simplify storage infrastructure, speed recovery, and eliminate organizational friction between application groups and SAN infrastructure practitioners. But this approach brings tradeoffs that IT organizations need to assess.

Independent software vendors (ISVs) generally, and Microsoft and Oracle specifically, will emphasize that vertically integrated storage services reduce overhead, simplify infrastructure, and reduce cost. CIOs should ask two questions to determine whether a vertically integrated storage strategy makes sense:

- To what degree can SAN infrastructure be shared across the application portfolio?

- What is the impact of a vertically integrated stack approach on software license and maintenance costs and overall end-user availability?

Often, organizations will find that sharing a SAN across three or more applications will provide the lowest cost infrastructure. As well, especially in Microsoft environments, SAN will provide higher availability than using direct attached storage (DAS) combined with a vertically integrated software stack approach.

Our research shows that in shops with more than 100 TB of shared data, DAS is consistently 20% to 35% more expensive than SAN on a TCO basis. The reasons are twofold: 1) SAN is a shared resource and is more efficient than DAS when it can support multiple applications; 2) DAS requires more CPU resources, which increases software license costs, a clear motivating factor for ISVs to recommend DAS.
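The shared-resource argument can be illustrated with a small break-even sketch. This is not the Wikibon model: it assumes a simplified cost structure in which a SAN carries a one-time fixed cost plus a per-application cost, while DAS carries only a (higher) per-application cost. The function name, parameters, and every dollar figure are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
import math
from typing import Optional


def break_even_apps(san_fixed_cost: float,
                    san_cost_per_app: float,
                    das_cost_per_app: float) -> Optional[int]:
    """Smallest number of applications at which a shared SAN is strictly
    cheaper than dedicated DAS, under a simple linear cost assumption.

    SAN total = san_fixed_cost + n * san_cost_per_app
    DAS total = n * das_cost_per_app
    Returns None when DAS is never more expensive per application.
    """
    saving_per_app = das_cost_per_app - san_cost_per_app
    if saving_per_app <= 0:
        return None  # sharing never pays back the fixed cost
    # SAN wins when n > san_fixed_cost / saving_per_app
    return math.floor(san_fixed_cost / saving_per_app) + 1


# Hypothetical inputs: $100K fixed SAN cost, $40K vs. $80K per application.
print(break_even_apps(100_000, 40_000, 80_000))
```

With these invented inputs the SAN becomes cheaper once it serves three applications; real break-even points depend on actual hardware, license, and staffing costs.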

This is the first in a two-part series comparing different approaches to storage deployment. Part 2 defines a storage services architecture and discusses the benefits, economics, and drawbacks of a storage services approach as compared to a one-off or bespoke strategy.

Background and Introduction

Over the past year, several Wikibon members have indicated they are actively evaluating the benefits and drawbacks of vertically integrating storage services into application stacks. To investigate the benefits, drawbacks, and applicability of this strategy, and to compare it with a storage-centric philosophy (i.e. shared function in the array), we interviewed 17 IT professionals from the community. Those interviews form the basis for this research note (see Figure 1). We also researched two major application stacks: Microsoft Exchange, with an emphasis on Exchange 2010, and Oracle 11g.

Summary of Findings

Key findings of this research include:

- CIOs, application heads, and infrastructure leaders alike want to simplify infrastructure.

- CIOs are primarily concerned with delivering business value, reducing risk, and delivering efficient services.

- Application heads care most about application performance, end-user availability, and delivering function to the business in a speedy fashion.

- Infrastructure executives are most concerned about efficient service delivery (i.e. cost effective service delivery) and keeping the applications groups satisfied.

- Both Microsoft with E2K10 and Oracle with 11g are aggressively bundling storage function into their respective application stacks. Both companies frequently recommend DAS as the preferred storage infrastructure for these applications.

- Building storage function into the application stack will simplify infrastructure, but it will often increase costs relative to an array-centric approach because embedded stack function cannot be leveraged as readily across the application portfolio. This is especially true when deploying DAS instead of SAN, as is often recommended by Microsoft and Oracle.

- As well, specifically in the case of Microsoft E2K10, an application stack approach is less robust than a well-constructed SAN from an availability standpoint.

This research is designed to help CIOs, application owners, and infrastructure heads determine the best strategic fit for vertically integrated storage services (within the application stack) versus shared storage (on a SAN). The conclusions of this study are specifically focused on Microsoft E2K10- and Oracle 11g-based applications.

Application-centric Versus Storage-centric Strategies

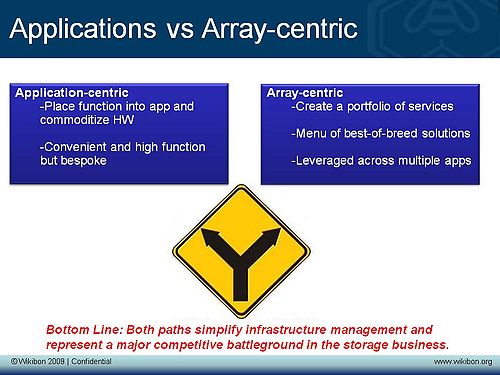

As indicated, consistently, IT executives want to simplify infrastructure and are evaluating two competing strategies to accomplish this goal as shown in Figure 2:

- Move function from the storage array and vertically integrate into the application stack (applications-centric or bespoke).

- Build a standardized set of storage services that can be re-used across applications (array-centric or shared).

The two most prominent examples of ISVs pushing a vertically integrated stack approach are Microsoft and Oracle. For this research, we focused specifically on Microsoft Exchange 2010 and on Oracle Real Application Clusters (RAC) for 11g and Exadata as reference examples.

Both Microsoft and Oracle are making substantial improvements to their application stacks in an effort to reduce complexity and improve both performance and availability. Their motivations vary but in general, both companies are trying to improve the performance and availability of their applications as a means of competing more effectively. As well, these firms are trying to capture as much value as possible to justify and preserve their respective license fees. The following takes a brief look at the innovations and benefits of each vendor’s approach:

Microsoft Exchange 2010

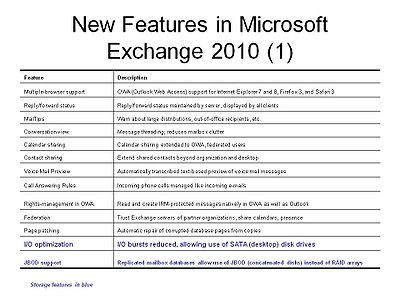

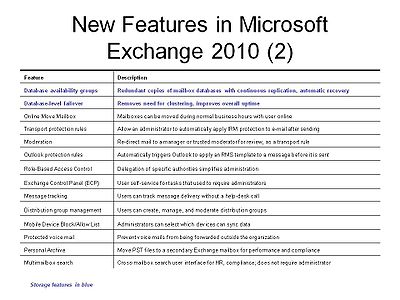

Microsoft Exchange 2010 is a major release with significant new features, as shown in the tables. The storage-related features are highlighted in blue.

Microsoft announced GA of E2K10 on November 9, 2009, and Wikibon expects a very strong drive from Microsoft to upgrade to E2K10 in 2010, especially since so many clients bypassed E2K7. A major emphasis of Microsoft is reduced storage costs. In addition, there are several notable points regarding E2K10:

- E2K10 reduces IOPS by an additional 50% relative to E2K7 (85% vs. Exchange 2003).

- E2K10 high availability is simpler and more flexible with the introduction of Database Availability Groups (DAG):

- DAGs provide redundant copies of mailbox databases with continuous replication & automatic recovery.

- Automatic page patching repairs corrupted database pages from copies.

- Move clients away from shared-storage clustering.

- Move all Exchange databases onto inexpensive storage (DAS) and eliminate tiered storage.

- Clustering within Exchange 2010 – no server/storage clustering support required.

- Improve availability, flexibility and functionality.

Microsoft is aggressively providing highly detailed storage advice that stresses the use of DAS and SATA as best practice.

We believe this vertically integrated push is appealing, especially to smaller clients that cannot bear the complexity of SAN and want to simplify infrastructure and organizational layers (i.e. a single Microsoft throat to choke). However, as we show below, larger clients will pay a cost penalty because the economics of shared SAN continue to be compelling.

Oracle 11g

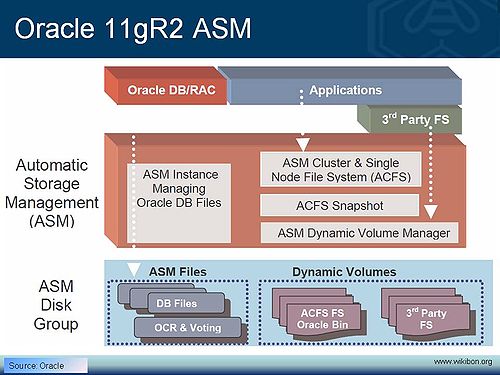

Oracle 11g Release 2, announced in September 2009, is the latest version of Oracle’s database (see Figure 2). Currently it supports only Linux. Later in 2010, Oracle will roll out support for Sun Solaris, Windows, AIX, and HP-UX.

Oracle 11g features Automatic Storage Management (ASM), a vertically integrated file system and volume manager, purpose-built for Oracle database files. Here’s Oracle’s description.

Automatic Storage Management (ASM) is a feature in Oracle Database 10g/11g that provides the database administrator with a simple storage management interface that is consistent across all server and storage platforms. As a vertically integrated file system and volume manager, purpose-built for Oracle database files, ASM provides the performance of async I/O with the easy management of a file system. ASM provides capability that saves the DBAs time and provides flexibility to manage a dynamic database environment with increased efficiency.

The Automatic Storage Management (ASM) feature in Oracle Database 11g Release 2 extends ASM functionality to manage ALL data: Oracle database files, Oracle Clusterware files and non-structured general-purpose data such as binaries, externals files and text files. ASM simplifies, automates, and reduces cost and overhead by providing a unified and integrated solution stack for all your file management needs eliminating the need for 3rd party volume managers, file systems and clusterware platforms.

Oracle also offers additional important storage and storage management functions, including:

- ASM Cluster File System (ACFS) – a file system that enables the dynamic movement of I/O in the event of a processor failure. This is the component of ASM that supports Oracle Real Application Clusters (RAC).

- ASM Dynamic Volume Manager – a capability that allows the movement of volumes from one storage device to another.

- ACFS Snapshots enable space efficient (i.e. change only copy) and consistent (at a point in time) snapshot copies.

- Data Guard replicates databases for high availability at a consistent point in time.

Oracle also offers Exadata, a pre-bundled appliance that includes database software, storage, columnar compression, and flash. Exadata, in our view, is primarily aimed at data warehouse applications. Oracle also has a virtualization capability, Oracle VM, which is based on Virtual Iron intellectual property that Oracle acquired in 2009.

On balance, from a pure storage perspective, Wikibon believes that Oracle storage function is substantially more robust than that offered by Microsoft with E2K10. In fairness, the two software applications serve different purposes. Nonetheless, in our view, Oracle places a much greater emphasis on consistent recovery and is more aligned with enterprise requirements. The question remains: Is it more cost-effective to place this function into the software stack as recommended by both Microsoft and Oracle? Their clear advice is to buy the “least expensive” storage, specifically DAS. Users should be aware this means storage buffer management, cache management, and other storage function must be managed by the host.

In our view, the benefits of placing storage function in the application stack and using DAS instead of SAN are that it simplifies infrastructure and unifies the recovery process at a single point of control. The drawbacks are:

- In the case of Microsoft, storage function is deficient as compared to SAN. Specifically, Microsoft relies on IP-based replication which is ‘lossy,’ meaning there isn’t a definitive consistent point in time between multiple copies;

- In both the Microsoft and Oracle cases, this approach increases server resource requirements and substantially increases software license and maintenance costs;

- Except in certain smaller use cases (Microsoft), shared storage will provide a better economic return.

Having made these points, in some use cases, specifically where recovery time is paramount, certain components of Oracle’s technology will deliver superior capability. Also, there is no reason that users can’t combine Oracle function with a shared SAN resource.

The size of the processor is often the critical resource constraint in database applications. If a database grows beyond a certain size and locking rate capability, users must understand they can’t just simply add another server, because each processor added will be fundamentally constrained by the overall database architecture. As such, loading up servers with storage function should only be done with careful consideration. Unless you absolutely need critical recovery times, this practice should be avoided.

Economics of Applications v Storage-centric Functional Stacks

To understand the economics of an Application Stack approach versus a Storage Services functional approach, Wikibon constructed a model to evaluate the cost of each. To accurately assess the economics, we developed a return-on-assets (ROA) model. An ROA model differs from a classical ROI model in several ways, the most important being that an ROA model takes into account the leverage from installed assets. When assessing infrastructure, an ROA model is useful in that it reflects more accurately how an IT manager’s budget behaves.

For example, an ROI model might often make a pure black-and-white comparison between doing things the ‘old way’ (e.g. a do-nothing strategy) and updating the infrastructure with modern technology. The reality is that users rarely rip out assets on the books and replace them with wholly new technology. In fairness, a thorough ROI model can be constructed to handle this nuance. The ROA model we’ve built is specifically designed to account for installed assets and, as such, is not as broadly applicable as a generalized ROI model in many cases.

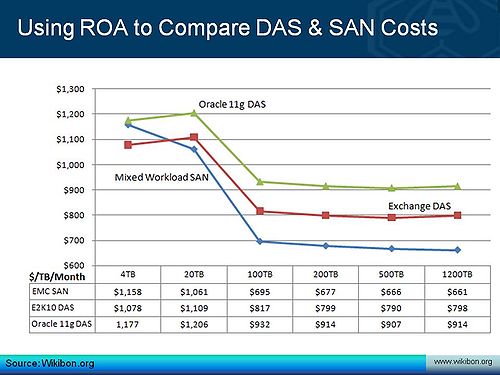

Figure 3 shows the results of the ROA model when comparing three scenarios across a range of storage installations. The data depicts cost-per-TB-per-month over a 48-month budget period. The important point to remember is the capacity shown reflects the capacity of the installation, not the database instance:

- Oracle 11g installations using DAS infrastructure.

- A Microsoft E2K10 DAS infrastructure.

- A shared SAN infrastructure.

*Data accounts for capex as costs depreciated over a 48-month period or lease costs.

*In no instance is Oracle DAS less expensive than SAN due to Oracle’s license fees at $20K - $30K per server.

*SANs of 20TB and under assume iSCSI SAN.

*Larger SANs use EMC CX (100/200 TB) and V-Max (500/1200 TB).

*Includes incremental server and software license costs to accommodate increased compute requirements.
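The cost-per-TB-per-month metric described in the notes above can be sketched as follows. This is a minimal illustration under stated assumptions, not Wikibon's ROA model: it treats storage capex and incremental server license fees as costs spread evenly over the 48-month budget period and adds a flat monthly operating cost. The `Scenario` fields and all input values are hypothetical.

```python
from dataclasses import dataclass

BUDGET_MONTHS = 48  # budget period stated in the text


@dataclass
class Scenario:
    name: str
    capacity_tb: float         # capacity of the whole installation, not one database
    storage_capex: float       # storage purchase price (hypothetical input)
    incremental_servers: int   # extra servers needed for added compute load
    license_per_server: float  # software license fee per added server
    monthly_opex: float        # flat monthly operating cost (hypothetical input)


def cost_per_tb_per_month(s: Scenario) -> float:
    """Spread capex and license fees over the budget period, add opex,
    then divide by installed capacity."""
    capex = s.storage_capex + s.incremental_servers * s.license_per_server
    monthly_cost = capex / BUDGET_MONTHS + s.monthly_opex
    return monthly_cost / s.capacity_tb


# Hypothetical example: 100 TB, $480K storage, 4 added servers at $24K each,
# and $2K per month in operating cost.
example = Scenario("illustrative DAS", 100, 480_000, 4, 24_000, 2_000)
print(cost_per_tb_per_month(example))
```

Running the same function over a DAS scenario and a SAN scenario with a shop's own figures gives a like-for-like comparison on the metric plotted in Figure 3.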

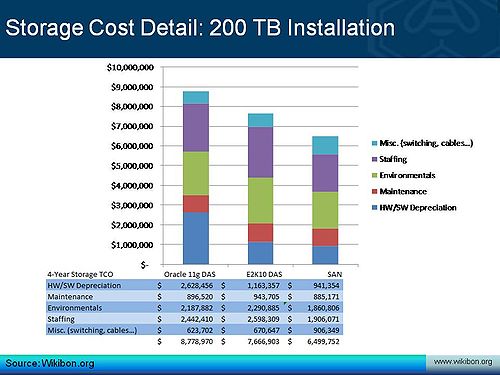

Figure 4 shows a drill-down of storage costs in a 200 TB installation. The 200 TB figure refers to the entire capacity of the shop, not the size of the Exchange or Oracle database.

*Assumes three or more applications in the portfolio share the same storage infrastructure.

A SAN Caveat

In the course of our research we encountered specific issues with SAN installations. In particular, several practitioners cited the following three concerns:

- SAN is complex -- in many ways it's a 'Dark Art' and requires specific skill sets to manage.

- If a DAS goes down, I only lose part of my storage, but if my SAN goes down, I lose an entire data store.

- SAN services are delivered by a separate group in many organizations, and frequently application professionals (e.g. the Exchange team) don't want to rely on another group to provide storage services. The politics of shared storage require that application heads negotiate to make certain changes, and that adds complexity.

Wikibon members have consistently indicated that SAN brings complexities. A well-designed SAN, however, will almost always deliver higher availability than a DAS infrastructure. In our view, there are two keys to succeeding with SAN in this regard:

- Ensuring the right skill sets and processes are in place to manage the SAN.

- Tapping a vendor's resources to provide best practices and reference architectures in specific application environments.

Both points will improve SAN infrastructure, lower costs in the long run, reduce risks, and speed time-to-market. The key to the latter point (leaning on the vendor) is that these "cookbook" services are often delivered for free as a loss leader in the sales process. IT professionals should aggressively push vendors for these "freebies."

Conclusions and Recommendations

The natural progression of application stacks from Microsoft and Oracle brings value to organizations by streamlining performance, improving availability, and reducing management complexity. Increasingly, ISVs are eying function that has been traditionally placed in disk arrays as a means of simplifying infrastructure and strategically capturing more value in customer accounts. Frequently, a key part of ISV-recommended best practice is to use DAS as the storage backbone.

While relying on storage function from ISVs can simplify infrastructure, organizations need to carefully consider the tradeoffs of both deploying DAS and moving function closer to the CPU. Specifically, as the saying goes, there’s no free lunch. The main drawback of this approach is that there is currently no effective way to share data across applications. As such, an application-centric strategy reduces operating leverage and increases costs. In shops with more than 100 TB of shared data, DAS is consistently 20% to 35% more expensive than SAN on a TCO basis. The reasons are twofold: SAN is a shared resource and is more efficient than DAS when it can support multiple applications, and DAS requires more CPU resources, which increases software license costs.

This doesn’t mean that users shouldn’t consider an application-centric approach. In specific cases it will make sense to do so to improve application performance and overall efficiency. Also, organizations frequently prefer to avoid the politics of going to a separate SAN group for storage services. However, there is an economic price to be paid for this strategy.

The following questions can serve as guidelines for determining the best course:

- Can a storage infrastructure effectively support more than two major applications? If so, SAN will almost always be less expensive than DAS in E2K10 and Oracle 11g environments at scale (e.g. greater than 100 TB).

- In the case of Microsoft E2K10, is IP-based replication adequate – in other words is some potential data loss acceptable?

- In the case of Oracle 11g, is the fastest data recovery from a unified point-of-control an absolute business imperative? If not, taking an application-centric approach will require increased server resources and may even hinder performance at scale.

- Are chargebacks in place within the organization? If so, a storage-centric model where storage services are standardized and shared across applications will deliver better storage efficiency and lower costs. If chargebacks are not in place, organizational friction against standardization will be more substantial.

- Has the vendor proven and documented certified reference architectures in sufficient detail such that a trained team can implement the solution with speed, confidence, and the lowest risk possible?
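The guideline questions above can be captured as a simple checklist. The sketch below is one hedged way to encode them; the `Shop` fields, the function name, and the wording of each note are hypothetical, and the thresholds (more than two major applications, more than 100 TB) are taken from the first question. It is a conversation aid, not a substitute for a full cost model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Shop:
    major_apps_sharing_storage: int
    shared_capacity_tb: float
    exchange_data_loss_acceptable: bool
    oracle_fastest_recovery_required: bool
    chargebacks_in_place: bool
    vendor_reference_architecture: bool


def storage_guidance(shop: Shop) -> List[str]:
    """Return one note per guideline question that raises a flag."""
    notes = []
    if shop.major_apps_sharing_storage > 2 and shop.shared_capacity_tb > 100:
        notes.append("SAN is likely less expensive than DAS at this scale")
    if not shop.exchange_data_loss_acceptable:
        notes.append("E2K10 IP-based replication may not be adequate")
    if not shop.oracle_fastest_recovery_required:
        notes.append("application-centric Oracle storage adds server cost "
                     "without a recovery-time imperative")
    if shop.chargebacks_in_place:
        notes.append("chargebacks favor standardized, shared storage services")
    else:
        notes.append("expect organizational friction against standardization")
    if not shop.vendor_reference_architecture:
        notes.append("ask the vendor for documented reference architectures")
    return notes


# Hypothetical shop: three applications sharing 200 TB, with chargebacks.
for note in storage_guidance(Shop(3, 200, False, False, True, True)):
    print("-", note)
```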

The bottom line in our view is that while SAN introduces a layer of complexity into organizations and requires specialized skill sets, over a multi-year period, when the SAN asset can be shared across multiple applications, SAN will generally deliver far lower capex and opex relative to DAS in E2K10 and Oracle 11g environments.

Action Item: While placing storage function in the application stack can often add significant value, the tradeoff for larger customers is often higher costs that result from the lower operating leverage of this approach. CIOs should carefully weigh the business case for an application-centric storage strategy, especially when application groups are pushing for direct-attached storage (DAS).
