Peer Incite: Notes from Wikibon’s November 27, 2012 Research Meeting

Peer Incites are always interesting, but the November 27 meeting with Dag Liodden, cofounder and CTO of ad placement agency Tapad, reached the realm of the breathtaking.

The last few years have seen a revolution in online advertising, driven by the fast-evolving e-publishing marketplace. Taking a page from two centuries of paper-based history, these publishers have turned to advertising for their major income source. However, the new, highly interactive, high-speed electronic media demand a new approach.

On the Internet, publishers leave spaces for display ads in their online layouts. On the other end of the supply chain, ad agencies create the equivalent of print display ads for their clients. So far this sounds like traditional advertising. But today ad space is not sold on spec, and ads are not static parts of pages that every reader sees. Instead, each ad comes with metadata from the agency that specifies who should see it: for instance, blonde females in the 20-25 age bracket with disposable income in a certain range who have been married for less than one year and do not yet have children, who in the recent past have taken actions that indicate interest in very specific subjects, and who have not seen this ad more than twice in the last 48 hours.

What Tapad does, uniquely for the moment according to Liodden, is match the ad space with background data on the person accessing the page, including the person's location and the device that person is using at that moment -- computer, tablet, or smartphone/PDA. Then it matches this against the large number of ad campaigns it handles in its database and notifies the computers of agencies with campaigns that more-or-less match the person opening the page. The agency computers submit bids for that specific space on that specific person's screen at that moment. Tapad selects the highest bid and presents the ad.

And here is the killer. All of that has to happen in less than 100 milliseconds or you lose the opportunity. That's not just doing a simple transaction like reserving an airplane seat; it is both handling a complex transaction and doing fairly sophisticated analysis.

How does Tapad do that? And what does that mean for the rest of us? You can read the answers in the articles below. But one thing I can tell you: This is the future. Today this may seem exotic and bleeding edge. Soon this will be how your business runs.

Bert Latamore, Editor

Big Fast Data Changes the Rules of the Game

Introduction

The ability to ingest, analyze, and take action on high-volume, multi-structured data in real-time is quickly becoming a 'must have' capability for enterprises across vertical industries. Such real-time, intelligent decision-making is enabling new lines-of-business and is reinventing both horizontal business functions and industry-specific transactional services.

From an infrastructure perspective, Big, Fast Data requires a rethink of the way enterprises architect analytic and transactional systems. Traditionally, analytic and transactional systems are designed and deployed in relative isolation from one another and operate in a linear or sequential fashion. To support real-time, intelligent decision-making, the two systems must be combined so that analytic insights and transaction processing inform and support one another in sub-second time frames, and decisions can be made and executed fast enough to maximize business value.

Traditional relational database management systems were neither designed nor optimized to support such large-scale, real-time analytic and transaction-oriented use cases simultaneously. Increasingly, new approaches that include one or another flavor of NoSQL database system and the use of flash SSDs and in-memory storage engines are required to make Big Fast Data a reality.

Tapad Disrupts Online Advertising

Tapad is an early adopter of this approach. The company, which operates in the ad-tech sector, enables real-time analytics, bidding and placement of personalized, online advertisements. Tapad employs a NoSQL, real-time analytics engine to facilitate this process. Dag Liodden, the company's Co-Founder and CTO, recently took part in a Wikibon Peer Incite discussion to share best practices and lessons learned as Tapad architected, deployed, and used its internal infrastructure.

In order to benefit from Liodden's insights, it is first important to understand the process behind ad-tech.

Many commercial Web publishers make space available on their Web pages for banner and display advertisements. Typically, when a user opens such a Web page, the browser reaches out to an online ad exchange network and requests an ad unit to serve to that user. The ad exchange broadcasts this information, often enriched with behavior data specific to the user in question, to multiple advertisers. Each advertiser compares the information against its internal ad inventory and existing ad campaigns to determine what that ad impression is "worth" to them. It then decides whether to place a bid and at what amount. Bids are returned to the ad exchange, which determines who the highest bidder is and delivers the winning advertisement.

This entire process - from the user opening the Web page and the ad exchange transmitting the request to the advertisers analyzing the enriched data and delivering the ad itself - must be executed in just milliseconds. Tapad's job is to facilitate this multi-step, real-time process.
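The bidding flow described above can be sketched in a few lines of Python. This is an illustrative toy, not Tapad's or any exchange's actual implementation: the `Bid` type, the `run_auction` function, the three bidder functions, and the simulated `latency_ms` values are all hypothetical names and numbers. The 100 ms default deadline is the figure quoted elsewhere in these notes.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Bid:
    advertiser: str
    amount: float      # price offered for this single impression
    latency_ms: float  # simulated time the bidder took to answer


# A bidder inspects the enriched request and returns a Bid, or None to pass.
Bidder = Callable[[dict], Optional[Bid]]


def run_auction(request: dict, bidders: List[Bidder],
                deadline_ms: float = 100.0) -> Optional[Bid]:
    """Broadcast the request, discard late bids, return the highest bid."""
    valid = []
    for bidder in bidders:
        bid = bidder(request)
        if bid is not None and bid.latency_ms <= deadline_ms:
            valid.append(bid)
    return max(valid, key=lambda b: b.amount, default=None)


# Hypothetical campaigns, used only for illustration.
def tablet_campaign(request: dict) -> Optional[Bid]:
    if request["device"] == "tablet":
        return Bid("advertiser_a", 2.50, latency_ms=40)
    return None  # this campaign only targets tablets


def generic_campaign(request: dict) -> Optional[Bid]:
    return Bid("advertiser_b", 1.75, latency_ms=60)


def slow_campaign(request: dict) -> Optional[Bid]:
    return Bid("advertiser_c", 9.00, latency_ms=250)  # arrives too late


BIDDERS = [tablet_campaign, generic_campaign, slow_campaign]
winner = run_auction({"device": "tablet", "region": "NY"}, BIDDERS)
```

Here `winner` is the bid from `advertiser_a`: the highest offer overall comes from `slow_campaign`, but it misses the deadline and is dropped, which is the "lose the opportunity" case the article describes.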

The company is just two years old, so it had the luxury of a clean slate when choosing the database technology it used as the foundation of its infrastructure. Liodden identified several factors Tapad took into consideration.

- First was the type of data involved. To deliver the quality of analysis it required, Tapad's system must be able to process multi-structured data. This includes data that identifies the device from which a user is viewing a Web page, its geographic location, and historical browsing patterns and click-through behavior.

- The second factor was speed. Analysis of the data, the bidding process, and ad delivery all must take place in less than 100 milliseconds before the lag becomes noticeable to the user.

- A third factor was latency. The system must be able to incorporate just-created transaction data into its analysis in real time so that advertisers have up-to-the-second data against which to evaluate bids.

- A fourth factor was concurrency. At peak times, Tapad's system must be able to simultaneously handle up to 150,000 ad units per second.

Liodden said Tapad briefly considered traditional relational databases for the job, but quickly ruled them out. Optimizing and scaling an RDBMS for such real-time decision-making workloads would have been prohibitive in both time and cost, and relational systems also contain superfluous functionality not applicable to Tapad's use case.

Tapad then looked to the emerging NoSQL space. While there are many flavors of NoSQL data stores, Liodden said Tapad's use case lent itself to the simplest type, a key value store, which supports querying and updating single IDs. Due to the speed requirements, Liodden also determined that spinning disk was not going to provide the performance Tapad required. This meant he needed to consider a NoSQL key value store that enabled in-memory storage and data processing.

Flash Over DRAM

One option was a NoSQL database with dynamic random access memory, or DRAM, serving as the persistent storage layer. DRAM could potentially provide the performance Tapad required, but Liodden determined it would also be prohibitively expensive as the system grew in scale. Tapad's data access pattern varies widely, Liodden said, meaning a DRAM-based caching layer would quickly become saturated, requiring increasing reads from disk. This would result in an unacceptable hit to performance. To overcome this scenario, Liodden's team would need to frequently add more DRAM-based hardware as data volumes grew. However, each new enterprise-class server added to the cluster would cost tens of thousands of dollars, a potentially unsustainable approach relative to Tapad's business model. Reading terabytes of data into DRAM every time new hardware is added to the cluster would also take more time than Liodden was willing to sacrifice.

The better option turned out to be a hybrid flash/DRAM approach from Aerospike, a Mountain View, Calif.-based NoSQL database maker. The Aerospike database is a key value store that uses flash-based solid-state drives (SSDs) as the persistent storage layer, supplemented with DRAM to store the related indices. Flash does not provide the same level of performance as DRAM, but it does deliver significantly higher read/write performance and consistency than disk, and its price-to-performance ratio, when used in combination with limited DRAM-based storage, hits the sweet-spot for supporting real-time, intelligent decision-making applications such as Tapad's, Liodden said.
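The split between a DRAM-resident index and flash-resident data can be illustrated with a minimal Python sketch. It is a teaching toy under stated assumptions, not Aerospike's implementation or API: the class name `IndexedLogStore` is hypothetical, an ordinary file stands in for the SSD, and a Python dict stands in for the in-memory index.

```python
import os
import tempfile


class IndexedLogStore:
    """Toy key-value store: small index in memory, values in an append-only file."""

    def __init__(self, path):
        self._file = open(path, "a+b")  # stands in for the flash device
        self._index = {}                # key -> (offset, length), held in DRAM

    def put(self, key, value: bytes):
        self._file.seek(0, os.SEEK_END)
        offset = self._file.tell()
        self._file.write(value)         # append mode: data always lands at the end
        self._file.flush()
        self._index[key] = (offset, len(value))  # newest write wins

    def get(self, key):
        entry = self._index.get(key)
        if entry is None:
            return None
        offset, length = entry
        self._file.seek(offset)         # one positioned read per lookup
        return self._file.read(length)

    def close(self):
        self._file.close()


path = os.path.join(tempfile.mkdtemp(), "devices.log")
store = IndexedLogStore(path)
store.put("device-123", b'{"segment": "sports"}')
store.put("device-123", b'{"segment": "travel"}')  # update by appending
profile = store.get("device-123")
store.close()
```

The point of the design is that only the small `(offset, length)` entries need to fit in memory, while each lookup costs a single read from the larger, cheaper device. A real system would also have to reclaim the space left behind by superseded values, which this sketch ignores.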

Aerospike also employs techniques for spreading reads and writes evenly across SSDs, maximizing their lifespan. To wit, Tapad has not had to replace a single SSD in the 18 months the system has been live.

Currently, Tapad runs a five-node Aerospike cluster, with each server utilizing six 120 GB drives. The system manages more than 150 billion ad impressions per month across 2 billion devices. The system routinely supports 50,000 queries per second per server, reaching 150,000 ads per second during peak activity. Total data volume currently stands at 3.6 TB and is growing. Architecturally, the system is latency-aware down to the individual node and is able to intelligently route data to maximize performance, Liodden said.
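As a quick sanity check, the cluster figures quoted above can be multiplied out. The snippet below uses only numbers from the article; the 30-day month and the decimal definition of a terabyte are assumptions made for the arithmetic.

```python
NODES = 5
DRIVES_PER_NODE = 6
DRIVE_GB = 120

# Raw flash capacity across the cluster, in decimal terabytes.
raw_capacity_tb = NODES * DRIVES_PER_NODE * DRIVE_GB / 1000

# Routine query load if every server sustains the quoted per-server rate.
ROUTINE_QPS_PER_SERVER = 50_000
cluster_routine_qps = NODES * ROUTINE_QPS_PER_SERVER

# Average impression rate implied by the monthly total (assumes a 30-day month).
IMPRESSIONS_PER_MONTH = 150e9
SECONDS_PER_MONTH = 30 * 24 * 3600
avg_impressions_per_sec = IMPRESSIONS_PER_MONTH / SECONDS_PER_MONTH
```

The raw capacity works out to 3.6 TB, the same figure the article quotes for total data volume, and the routine query capacity to 250,000 queries per second across the cluster. The monthly total implies an average of roughly 58,000 impressions per second, comfortably below the quoted peak of 150,000 ads per second. Queries and ads are not the same unit, since serving one ad may take more than one query, so these rates should not be compared directly.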

The Aerospike-based system enables Tapad to serve a growing client base that includes Dell, Evidon, and three of the top four telecom providers. These and other clients rely on Tapad to get their ads in front of the right eyeballs at the right time. The results include improved ad conversion rates across end-user platforms, engagement with new segments of likely customers and, most importantly, increased sales and revenue.

Big Fast Data Use Cases

While Tapad's experience is focused on ad-tech, real-time, intelligent decision-making as enabled by Big Fast Data is applicable to numerous use cases across various industries. These include high-speed financial asset trading in which real-time analysis of multiple, multi-structured data sources must be performed and trading decisions made and executed in sub-second time-frames. Utility exchanges that facilitate bidding for and delivery of electricity and other forms of energy in real time are another logical use case. Recommendations engines that present cross-sell and up-sell offers to online consumers, which currently draw upon analysis of pre-processed data, are also candidates for real-time, intelligent decision-making approaches such as employed by Tapad.

Action item: Many mission-critical business processes and applications that once took minutes, hours, or even days to complete are now candidates for real-time, intelligent execution thanks to Big Fast Data. Early adopters such as Tapad are living proof that real-time, intelligent decision-making is now a viable and practical option for those enterprises willing to shake the cobwebs from their old ways of doing business and the technologies that support these outdated processes. Those that do so will be in the best position to redefine and dominate existing markets and innovate to create new and highly profitable new lines of business. CIOs must recognize this shift and begin exploring new ways of delivering real-time, intelligent transactional services that customers, both enterprises and consumers, now expect.

CIOs: Scale-Based Services Require Rethinking of Performance Needs and Databases

Introduction

On November 27, the Wikibon community was treated to a Peer Incite entitled Optimizing Infrastructure for Analytics-Driven Real-Time Decision Making. We were joined by Dag Liodden, Co-Founder and CTO of Tapad.

Tapad’s business model relies on the ability of its underlying technology to make millisecond decisions based on a variety of factors and then to deliver the ultimate results to a user’s browser session. If the company fails to meet stringent timing needs, it loses the opportunity to bid on placing ads for that particular user session. In other words, the wrong technology for Tapad would directly result in a bottom line issue.

Tapad’s needs are what most mainstream CIOs would consider very niche, as most don’t require millisecond-scale response times, even for their most critical line-of-business apps. However, beyond Tapad’s technology was the thought process that led the company to eschew the use of RAM-based storage in favor of SSD-based storage. In that, I see three points of consideration for CIOs.

Predictable and consistent performance

We all hear about companies releasing marketing fluff that describes their products as being able to provide what amounts to insane levels of performance. For example, “Our new storage system stress test just pushed more than 1 million IOPS!” While it’s great that engineers were able to tweak things to achieve that level, what most CIOs are expecting is some semblance of predictability in how a system performs on an ongoing basis, not just in one specific use case.

Tapad had the same challenges. It needed to ensure that its advertising solution performed at very predictable levels. While there were solutions available that might have been able to push performance a bit higher, there was no guarantee regarding ongoing performance.

Sometimes, the most efficient is not the most effective

This has become something of another mantra of mine: Efficient does not always equal effective. I could design an incredibly efficient process, for example, but if the result is that a customer leaves dissatisfied every time, it’s not an effective process. The same goes for technology. Tapad needed database storage that was extremely fast. Although in-RAM solutions provide the best possible performance levels, they do so in ways that aren’t always predictable and that are certainly on the high end of the expense scale.

So, rather than investing in the most efficient solution, RAM-based storage, it instead relies on slower solid-state drives that provide it with the more effective, sustainable, and predictable outcomes based on its business needs.

A merging of operational and analytical databases is inevitable

Finally, it’s clear that Liodden's solution meets his very specific business use case and that the mainstream doesn’t yet have these kinds of needs. But, we’re getting there. Over time, what was once a niche solution can quickly become mainstream as the technology catches up and prices come down.

As CIOs continue to struggle under ever-increasing masses of data, it’s the kinds of technologies discussed in this Peer Incite that will make it possible to merge today’s separate transactional and analytical applications. CIOs today typically deal with two kinds of databases:

- Transactional databases are used to carry out ongoing business as usual, such as processing orders, updating customer information and so on.

- Analytics is used to monitor ongoing performance metrics, gauge current vs. historical trends, and, in some cases, to inform the transaction system of trends that might change how operations are handled.

With the capability of today’s hardware and software, combining these systems is starting to become reality. The result is that both the transactional system and the analytical applications will operate in real time and dynamically update one another, so that the most current possible information is served based on everything known about the current transaction as well as trends that might be related to parts of that transaction. This type of transition will require CIOs to consider new architectures and new databases that are additive to, not replacements for, existing databases.

Action item: Potential action items to consider from this Peer Incite include:

- CIOs should always continue to focus on the most effective outcome that appropriately considers all elements of the process, not just on buying the fastest equipment out there.

- It’s easy to get impressed by vendor hype touting niche, massively-tweaked outcomes, but the focus should be on how solutions operate on an ongoing basis.

- CIOs might consider ways that they can begin creating deeper combinations of their siloed databases in order to ensure that the organization can make the best possible data-driven decisions.

Data-in-Memory Topologies

Data-in-memory databases have become popular where rapid response times are necessary, either to help process a lot of data and iterate rapidly (such as SAP HANA), or in transactional systems requiring sub-second response time (such as advertisement placement systems). For applications that need predictable low transaction latency, the bottleneck is never the processor: modern x86 processors almost always have enough GHz and cores to provide the processing power for transactions. The performance issue always comes from the storage. Until recently, the disk drive has been the only persistent storage device. However, unless the whole database can be held in DRAM, this makes consistently low latency (e.g., sub-second response times) almost impossible to achieve because of the very high variance of disk sub-systems.

Data-in-memory systems, where the whole database is held in memory, have become more popular, with the use of topologies such as Membase within NoSQL database systems. However, large amounts of data in a memory-based system are extremely difficult to manage:

- Large amounts of DRAM (up to 512GB and higher is possible) require extremely expensive servers;

- The larger the DRAM on a server, the slower the restart time of a node from disk-based persistent storage;

- As the amount of DRAM is increased, the probability of the L1 & L2 caches being thrashed and potentially decreasing performance becomes higher, and applications become more sensitive and more costly to maintain;

- The cost of protecting the data-in-memory with battery protection of memory and/or multiple distributed copies of data goes up sharply as the number of nodes is increased.

In general, it is cheaper to use a larger number of small servers with (say) 96GB of DRAM each. With a relatively small number of nodes, however, the resulting database size is limited, and this limit on scalability is significant.

Use of NAND Flash in Databases

NAND flash has superb characteristics for reading random data, and although flash is slower than DRAM, it is possible to create a layer below DRAM and dramatically increase the size of the database. The forecasts that improvements in NAND flash performance and reliability could not be maintained have proven wrong.

For writes, however, flash is less optimal. Only 0’s can be written, so a block of data has to be erased (set to all 1’s) before new data can be written. Writing is much slower than reading, especially for random writes.
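The write constraint can be made concrete with a toy model. The sketch below is a simplified illustration, not a model of any particular device: the `FlashBlock` class and its tiny page sizes are hypothetical. Programming a page can only clear bits (1 to 0), which is modeled as a bitwise AND with the existing contents, and erasing resets the entire block to 1s.

```python
class FlashBlock:
    """Toy model of one NAND erase block made of fixed-size pages."""

    def __init__(self, pages=4, page_bytes=2):
        # A freshly erased block holds all 1 bits (0xFF in every byte).
        self.pages = [bytearray([0xFF] * page_bytes) for _ in range(pages)]
        self.erase_count = 0

    def program(self, page, data: bytes):
        """Programming can only flip bits from 1 to 0: result is old AND new."""
        cells = self.pages[page]
        for i, byte in enumerate(data):
            cells[i] &= byte

    def erase(self):
        """Erasing resets every page in the block to all 1s at once."""
        for cells in self.pages:
            for i in range(len(cells)):
                cells[i] = 0xFF
        self.erase_count += 1


block = FlashBlock()
block.program(0, b"\x0F\xF0")   # fresh page: data lands as written
first = bytes(block.pages[0])
block.program(0, b"\xF0\x0F")   # overwrite without erase: bits can only clear
corrupted = bytes(block.pages[0])
block.erase()                   # whole block goes back to 1s
block.program(0, b"\xF0\x0F")
rewritten = bytes(block.pages[0])
```

After the second `program` call the page holds `b"\x00\x00"` rather than the intended value, because the new data was ANDed with the old. Only after `erase()` does the rewrite produce `b"\xf0\x0f"`. Since an erase affects the whole block, in-place updates are expensive, which is one reason random writes are slow.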

Including NAND Flash in the Data-in-Memory Topology

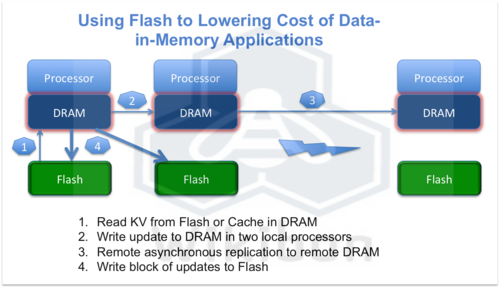

Figure 1 shows the architecture that Aerospike uses to incorporate NAND flash productively in its database architecture. It utilizes the strength of DRAM, the strength of flash as a read persistent layer, and the strength of blocking up writes to persistent flash storage. The duplication in DRAM within the data center and outside allows protection of data against loss.
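One reading of "blocking up writes" is that small records are collected in memory and written to flash as a single large block, which avoids many small random writes. The sketch below illustrates that general technique only. It is not taken from Aerospike, and the `BlockWriteBuffer` class, its `sink` callable, and the tiny 8-byte block size are hypothetical.

```python
class BlockWriteBuffer:
    """Collect small records in memory and flush them as one large block."""

    def __init__(self, block_bytes, sink):
        self.block_bytes = block_bytes
        self.sink = sink      # callable that receives one bytes object per flush
        self._pending = []
        self._size = 0

    def write(self, record: bytes):
        self._pending.append(record)
        self._size += len(record)
        if self._size >= self.block_bytes:
            self.flush()      # enough data gathered for one block-sized write

    def flush(self):
        if self._pending:
            self.sink(b"".join(self._pending))
            self._pending = []
            self._size = 0


flushed = []
buf = BlockWriteBuffer(block_bytes=8, sink=flushed.append)
for record in [b"aaa", b"bbb", b"ccc", b"dd"]:
    buf.write(record)
buf.flush()                   # push out whatever is left
```

Four small writes reach the device as two larger ones: `flushed` ends up as `[b"aaabbbccc", b"dd"]`. The trade-off is that records sitting in the buffer are not yet persistent, which is presumably where the duplicated DRAM copies mentioned above come in.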

The key characteristics of this architecture are:

- Provision of consistent, low-latency performance for IO reads and writes (Wikibon published research showing a 200 times reduction in variance with flash arrays);

- Much greater scaling of Data-in-Flash than Data-in-DRAM systems;

- Much lower costs of Data-in-Flash compared to Data-in-DRAM, particularly as the amount of data increases. Wikibon research comparing two-year server and active data costs for a transactional analytic workload with a 2 Terabyte replicated database (4 Terabytes in total) supporting 800,000 operations/second (5,000 bytes/operation) shows costs seven times lower for Data-in-Flash than for Data-in-DRAM.
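To give a sense of scale for the workload in that cost comparison, the quoted operation rate and operation size can be multiplied out. The numbers come from the text above; reading "replicated" as two full copies is an assumption that matches the stated 4 Terabyte total.

```python
OPS_PER_SECOND = 800_000
BYTES_PER_OP = 5_000
DATABASE_TB = 2
REPLICAS = 2  # assumption: two full copies, giving the quoted 4 TB total

# Aggregate data moved per second, in decimal gigabytes.
bandwidth_gb_per_s = OPS_PER_SECOND * BYTES_PER_OP / 1e9

# Total data that must be stored across all copies.
total_stored_tb = DATABASE_TB * REPLICAS
```

This gives an aggregate of 4 GB per second moved against 4 TB of stored data, a workload in which both storage cost and I/O capability matter.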

For applications with very small amounts of data (1TB or less) and ultra-low latency requirements, Data-in-DRAM will remain the preferred topology.

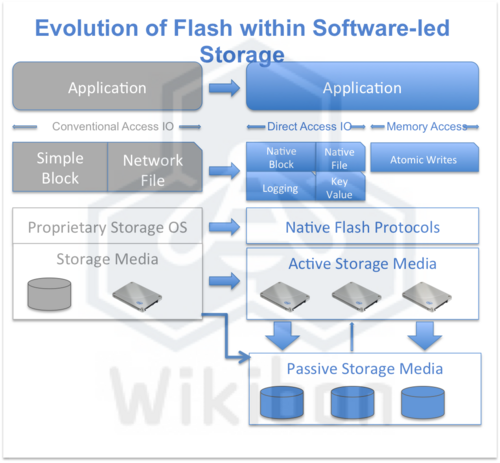

Future Directions for NAND Flash Access

Figure 2 shows the range of new access techniques from companies such as Fusion-IO and Virident that are being tested in beta at the moment. As these techniques are implemented in traditional and new file systems and databases, the potential of Data-in-Flash topologies will become greater, and they will become mainstream. These file systems and databases will increasingly utilize very large distributed flash-only storage and obtain storage services from Software-led Storage architectures, as discussed in recent Wikibon research.

Action item: In a recent Peer Incite, Dag Liodden, Co-Founder and CTO of Tapad, discussed why he used the Aerospike Data-in-Flash architecture for the deployment of his ad service. Without the development of modern Intel processors and modern databases such as Aerospike that can exploit the processor, DRAM, and flash, the ability to provide real-time advertisement serving and the real-time analytics that optimize the placement would not be possible cost-effectively. CTOs together with the lines-of-business should be looking for business opportunities where processing of big data can provide new revenue.

Big Fast Data Needs Stress Traditional DBMS Approaches

At the November 27, 2012 Wikibon Peer Incite we heard Dag Liodden, Co-Founder and CTO of Tapad, describe how the ad tech company is exploiting extended-memory databases to drive business results. Specifically, rather than use a pure in-memory architecture, Tapad is leveraging technology from Aerospike to deliver ad units in real-time to potential buyers.

The key technological nuance is that the Aerospike database allows Tapad to address memory as a “single level store” by deploying flash as a memory extension. While there may be a slight tradeoff in performance with this approach (relative to an all DRAM in-memory system), the cost benefits are so substantial that they outweigh the performance advantage of pure in-memory alternatives.

It is becoming increasingly apparent to a group of practitioners within the Wikibon community that data management will evolve from today’s norm – where transactional databases are isolated from analytics systems and feed them in a batch manner – to a new situation where transactional data is fed to analytics systems and relevant decisions can be made in real time (defined as before you lose the customer).

Technology vendors are going to market with two dominant approaches to this trend, including:

- Do the heavy transaction lifting on our “big iron” system, then ship the data to our expensive data warehouse, and we’ll do the “real-time” processing;

- Systems such as Aerospike that have the potential to unify transactional and analytic data to allow machines to make decisions.

We see the latter as the next generation approach with a much greater ability to deliver tangible business value at scale. There are caveats. In particular, use of such systems will forgo traditional rich SQL functionality, so practitioners should identify use cases where such capabilities are not needed. Often these are ad-serving or emerging social analytics applications as well as certain risk and fraud use cases in financial services. In addition, to the extent that flash is used as a memory extension, business cases that extend over three years or more should assume flash systems will need to be replaced due to wear-out factors from these demanding workloads. Nonetheless, in many cases these added costs are inconsequential relative to overall business value.

Action item: Innovative suppliers of database and data management technologies are finding new opportunities within so-called Big, Fast Data markets, i.e., those that feed transaction data into analytics systems in "real-time." Increasingly, this unified approach will drive greater levels of business value, and traditional vendors must find ways to compete without a "Big Iron" dogma. Specifically, suppliers must design next gen systems that leverage flash as an extension of memory to provide predictable and consistent performance at dramatically lower costs than pure in-memory database systems.

How Real Time Analytics Impact IT Organizations

In the November 27, 2012 Peer Incite Research Meeting, Dag Liodden, Co-Founder and Chief Technology Officer at Tapad, discussed how dynamically-updated, real-time analytic applications are tightly integrated into this ad-tech company’s ad-bidding service.

Tapad’s infrastructure is far removed from the batch-process analytic applications of the past. At companies like Tapad, analytic applications are migrating from off-line batch processes to real-time decision-support systems that are integrated with transaction systems. These systems are not only providing analysis based upon historical data, but increasingly are dynamically updated with the latest transaction data. This tight linkage between transaction systems and analytic applications is driving new requirements for additional infrastructure elements, such as Tapad's use of the Aerospike NoSQL solution and solid state disk, that enable the company to meet the latency, scaling, and performance requirements of real-time analytics for ad serving. This shift impacts more than infrastructure requirements, however.

Dynamically-updated, real-time analytics applications that are integrated with transaction systems require that organizations treat analytics applications more like transaction systems in terms of service levels, business continuity, and disaster recovery. IT professionals responsible for supporting real-time analytic applications tied to 24x7 transaction systems should design for:

- Non-disruptive software and hardware upgrades,

- Non-disruptive maintenance,

- High-availability and automated failover,

- Rapid recovery,

- Limited data loss.

Organizationally this means that owners of analytic applications will need to engage more formally with security, business continuity, disaster recovery, and compliance teams.

Action Item: As analytic applications move from standalone applications to real-time analytics, analytic application owners should prepare for the additional requirements and constraints that come with on-line production applications. The security, business continuity, disaster recovery, and compliance professionals supporting transaction applications are best equipped to assist in meeting these new requirements for analytic applications.

Flash Storage Arrays Make Big Fast Data Applications Feasible and Affordable

The demands of analytics-driven real-time decision making, which require millions of compute decisions to be made every few seconds and near-instantaneous access to multiple terabytes of data, are transforming the infrastructure to support these high transaction volume applications.

While advances in multi-core server technology and the advent of NoSQL databases are both key components of the overall solution, the commercialization of high capacity, low cost SSD or flash storage arrays makes possible a solution that cannot easily or effectively be achieved utilizing traditional hard-disk drives (HDDs) or even an all DRAM in-memory alternative.

During Wikibon’s November 27, 2012 Peer Incite call, Dag Liodden, Co-Founder and CTO of Tapad, shared his firm’s rationale for selecting flash as the primary data storage asset for the company's high performance cross-platform ad solution, which offers its customers, such as Dell, online ad retargeting services across multiple devices, browsers and mobile apps. (Follow this link for Wikibon Big Data Analyst Jeff Kelly’s overview of Tapad.)

Why Flash Works Best

According to Liodden, latency and access patterns dictate the type of storage needed for Tapad's application to read and write every device key value as well as support specific types of querying and updating of user IDs. Tapad can store kilobyte-scale key values in RAM; however, it has collected information on billions of devices, the data for which, including replicated backups, has grown to exceed 3.5 terabytes. In addition, access patterns are random, so the system needs to assume that all the data is “hot” and have real-time access to the entire data set.

Liodden says that loading almost 2 terabytes of active data in an in-memory solution would not only be cost prohibitive, it would entail the use of several more servers in addition to lacking inherent caching heuristics for Tapad's ad exchange model. “Using an all RAM configuration might be faster than a RAM and flash approach but it doesn’t afford us the predictability and lower cost of keeping all the data on flash. Plus, if a server or node goes down, it takes much more time to perform a RAM boot and load that data back into memory. Latency per request is not as predictable with RAM, and you can’t predict hot spots. The RAM bus speed may also be a bottleneck.”

Liodden believes traditional HDD arrays still have uses for some Big Data or Hadoop and MapReduce applications that may be more CPU bound than IO intensive. “In many cases HDD or rotational drives have high bandwidth when reading sequential or linear data such as counting the number of unique users visiting a site or summing up the number of page views while sifting through massive data sets. For smaller data sets with random access patterns, SSD is much better.”

Tapad selected NoSQL database solution provider Aerospike to help support its ad exchange application. Liodden uses traditional relational databases for other in-house applications but found the NoSQL approach much more appropriate for the integration of real-time analytics and high-speed database reads and writes needed for his ad exchange environment. “The data set keeps growing, and our traditional relational database would need to be modified or clustered to handle the volume of data we have. It was just much easier and straight-forward to go with the Aerospike option.”

Aerospike also offers a Flash-Optimized Data Storage Layer, which reliably stores data in DRAM and flash, as well as software that supports wear-leveling on SSDs – a common concern with high transaction volume environments. By design, flash drives can only accept a limited number of electrical charges. However, Liodden says Tapad has been running its flash array in a production environment for more than 18 months and has yet to replace an SSD. “Given the original cost of the SSDs, they have already more than paid for themselves, and the replacement cost continues to go down.”

Bottom Line

Traditional HDD storage and relational databases are not going away, as they will retain their usefulness for many existing applications. However, for high-performance and real-time applications that rely on access to active data, deploying SSDs and flash storage arrays is quickly becoming the best practice. In many cases, flash is not only more cost-effective than DRAM in-memory solutions, it also offers more predictability and resiliency.

Action item: Application owners of real-time analytics and high transaction volume solutions that require access to terabyte-plus volumes of active data and must forgo sequential processes in favor of integrated processes to meet latency requirements need to consider flash storage arrays over traditional HDDs and even all-DRAM in-memory solutions. In addition, IT executives should consider deploying all metadata as active data on flash arrays to enable the repurposing of that metadata for new value creation.