
Executive Summary

Using flash as a persistent extension of main memory is a much more cost-effective approach than relying purely on DRAM as an in-memory data store. The performance disadvantage of flash relative to main memory is easily offset by the persistent capacity that flash brings, which enables a single-level store for in-memory transactional analytic applications.

Wikibon has previously analyzed and concluded that Data in Memory technologies are the only cost-effective way to provide infrastructure for high-volume real-time transaction analytic applications. Transactional analytic applications integrate the transaction and analytic processing in a single pass of the data.

This research looks at two different implementations of data in memory:

- Data-in-DRAM, using databases such as Cassandra, Couchbase, MemStore, or MongoDB; and

- Data-in-flash, using databases such as Aerospike.

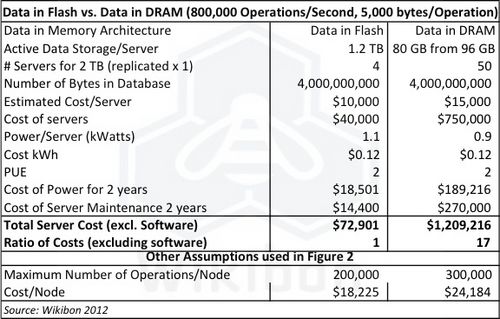

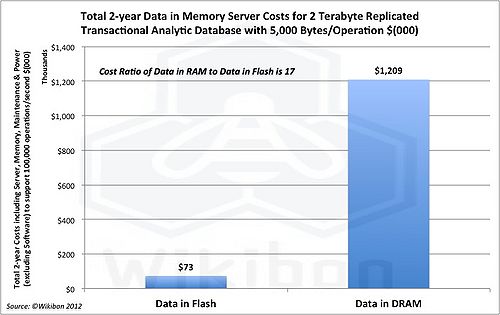

Figure 1 shows the results of comparing the total two-year cost of servers and 2 terabytes of active memory storage supporting a high-performance (800,000 operations/second) transactional analytic application. The memory storage is replicated and requires a storage capacity of 5,000 bytes/operation.

Source: Wikibon 2012

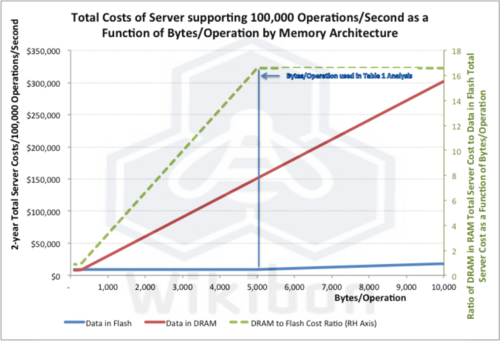

Figure 1 shows that the cost of data-in-DRAM is about 17 times higher than that of the data-in-flash implementation. Table 1 in the body of the research shows that data-in-DRAM requires 50 nodes to meet the active data requirements, whereas data-in-flash requires only four nodes. The data-in-DRAM approach needs more nodes to hold the active data, so the processors in these nodes are not fully utilized. Figure 2 shows the cost per 100,000 operations for a range of data requirements from 100 bytes/operation (where the data-in-DRAM nodes are fully loaded) to 10,000 bytes/operation. The data-in-flash solution is cost-effective across the whole range.

Figure 3 in the body of the research shows the enhanced, lower-overhead methods of directly addressing flash memory announced by Fusion-io. Other companies are also working on similar extensions. These will significantly improve the performance of data-in-flash and push its cost advantage over data-in-DRAM, currently up to 17 times, much higher.

Wikibon concludes that data-in-DRAM implementations such as TimesTen from Oracle are a “flash in the pan”, and will not support growing big data analytic or transactional analytic applications going forward. Wikibon believes that the current leading data-in-flash database for transactional analytic applications is Aerospike. Wikibon would recommend that Cassandra, Couchbase, MemStore and MongoDB implement data-in-flash technology to be competitive and increase the range of applications that can be addressed. Wikibon would strongly recommend that executives from ISVs and enterprises support a data-in-flash model, especially for high performance and Big Data systems. Data-in-DRAM should be bypassed.

Wikibon observes that IBM, Oracle, and Teradata need to radically change their architectures to embrace a true data-in-flash model, as their current implementations are uncompetitive from both a performance and a price/operation perspective. ISVs and enterprise IT executives should not assume that traditional vendors will compete quickly with data-in-flash, as doing so would impact their current revenues. To remain competitive, ISVs and enterprise executives should evaluate implementing available data-in-flash databases now, positioning themselves for dramatic improvements in database performance and price-performance.

Real-time Big Data High Performance Transaction Analytics

Web service providers face extreme IT challenges for some applications, particularly Big Data high-performance transaction analytics. The challenges are even greater when service providers have to meet Web-scale volumes and when the application needs to understand some or all of the following:

- Know whom it is interacting with:
  - Read & write cookies from billions of devices,
  - Read & write user profiles for hundreds of millions of users;

- Anticipate intent based on context:
  - Page views,
  - Recent search terms,
  - Advertisements served,
  - Game state,
  - Last move,
  - Friends lists,
  - Location information,
  - Audience segments/location patterns;

- Respond in milliseconds with:
  - Most relevant advertisement,
  - Richest gaming experience,
  - Latest attack vector,
  - Recommendations for the best product/service,
  - Treating special customers like VIPs;

- Be available 24 x 7 x 365 without ever going down:
  - Revenue loss x 10 for any outage;

- Lowest cost per transaction:
  - Be cost-effective in the digital dimes universe (as opposed to traditional analogue dollars);

- Etc.

These applications often require very high rates of random access to relatively small databases. Hard disk drives (HDDs) can sustain only a limited number of IOs per disk, a maximum of about 200 IOs per second (IOPS). Each IO involves mechanical movement and is very slow, and access time increases with queuing as the HDDs are driven harder. The working data set for Web service applications tends to be large, making array caching/tiering solutions impractical, with high latency and even higher latency variance. It is immediately obvious that traditional arrays deploying any mixture of HDDs cannot be configured to provide the latency required to meet the performance and cost constraints of Big Data high-performance transaction analytics workloads.
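As a rough illustration of why HDD arrays fall short, the sketch below applies the figure of about 200 IOPS per drive to the 800,000 operations/second workload used in Figure 1, and uses the textbook M/M/1 queueing formula, R = S / (1 - U), to show how response time grows as a drive is driven harder. It assumes, purely for illustration, that each operation costs one random disk IO, and it ignores replication, caching, and RAID overheads; the M/M/1 model is a standard approximation chosen here, not part of the Wikibon analysis.

```python
import math

# Figure quoted in the text: roughly 200 random IOPS per HDD at best.
HDD_MAX_IOPS = 200


def hdds_for_iops(target_iops: int, per_drive_iops: int = HDD_MAX_IOPS) -> int:
    """Minimum drive count if every drive runs at its IOPS ceiling.

    Assumes one random IO per operation (an illustrative simplification).
    """
    return math.ceil(target_iops / per_drive_iops)


def mm1_response_time_ms(service_time_ms: float, utilization: float) -> float:
    """Mean response time of an M/M/1 queue: R = S / (1 - U).

    Used only to show how queuing inflates latency near saturation.
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return service_time_ms / (1 - utilization)


if __name__ == "__main__":
    print(hdds_for_iops(800_000))  # 4000 drives, before any replication
    service_ms = 1000 / HDD_MAX_IOPS  # 5 ms per IO at 200 IOPS
    for u in (0.5, 0.8, 0.9, 0.95):
        print(f"utilization {u:.0%}: {mm1_response_time_ms(service_ms, u):.1f} ms")
```

With a 5 ms service time (the inverse of 200 IOPS), the formula gives 10 ms at 50% utilization and 50 ms at 90%, which is the latency growth under load that the paragraph describes.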

Wikibon has discussed the performance and cost issues of HDDs compared to solid state memory in a posting entitled “Designing Systems and Infrastructure in the Big Data IO Centric Era”. The choice of infrastructure solution is between two solid-state data in memory alternatives:

- Data-in-DRAM using databases such as Cassandra, Couchbase, MemStore or MongoDB.

- Data-in-Flash using databases such as Aerospike.

Data in Memory Solutions for Real-Time High-Performance Transaction Analytics

Data-in-DRAM solutions use databases such as Cassandra, Couchbase, MemStore, and MongoDB. In the usual implementation, the database is held in memory, with a replicated copy in memory on one or more other nodes. This solution is similar in concept to Oracle TimesTen or the SAP HANA appliance, but tuned for real-time transaction analytics. To reduce cost, these solutions usually do not include the memory protection features of the HANA appliance.

Couchbase and MemStore use a simple key value pair (KVP) API and have very low latency. In general, the MongoDB and Cassandra databases have richer APIs and database functionality: this allows fewer API calls, but much higher latencies.
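The trade-off between a simple KVP API and a richer API can be sketched by counting client round trips. The class below is a hypothetical, minimal in-memory store, not the API of any of the databases named above: `get` fetches one key per call, as a simple KVP interface would, while `get_many` stands in for a richer call that returns several values at once.

```python
from typing import Dict, Iterable, Optional


class CountingKVStore:
    """Hypothetical in-memory key-value store that counts client round trips.

    Illustrative only: this is not the API of Couchbase, MemStore,
    MongoDB, or Cassandra.
    """

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self.round_trips = 0

    def put(self, key: str, value: str) -> None:
        self.round_trips += 1
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        # Simple KVP style: one key per call.
        self.round_trips += 1
        return self._data.get(key)

    def get_many(self, keys: Iterable[str]) -> Dict[str, Optional[str]]:
        # Richer-API style: one call returns several values.
        self.round_trips += 1
        return {key: self._data.get(key) for key in keys}


if __name__ == "__main__":
    store = CountingKVStore()
    fields = ["user:42:cookie", "user:42:segment", "user:42:last_page"]
    for name in fields:
        store.put(name, "value-for-" + name)

    store.round_trips = 0
    one_by_one = {name: store.get(name) for name in fields}
    print("KVP gets:", store.round_trips)     # 3

    store.round_trips = 0
    batched = store.get_many(fields)
    print("batched get:", store.round_trips)  # 1
    assert one_by_one == batched
```

Fetching three profile fields costs three round trips with `get` and one with `get_many`; which is faster end to end depends on the per-call latency of each style, which is the trade-off the paragraph describes.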

The server hardware, power, and maintenance costs of data-in-DRAM solutions are shown in Table 1 below and are about $1.2 million over two years for a 2-terabyte replicated solution. The number of IOPS can be very high indeed, provided that the processor L2 and L3 caches are not flushed by problematic application data access patterns. For applications that avoid bad access patterns and require very high IOPS/terabyte with very low latency, Couchbase and MemStore offer good but expensive solutions.

Data-in-flash solutions such as the Aerospike KVP database use a combination of DRAM and NAND flash as persistent storage and provide high IOPS/terabyte at potentially much lower cost. Database updates are made to DRAM, with duplicate copies made on one or more other nodes, and each update is acknowledged when the DRAM on all those nodes has been updated. Large numbers of updates in DRAM are then batched and written to flash on the servers in very efficient large blocks, and the DRAM is freed.
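The write path described above can be sketched as a small simulation: each update goes to DRAM on every replica, is acknowledged once all replicas hold it, and is later written to flash as part of one large block, after which the DRAM is freed. Everything here is a hypothetical model for illustration, not Aerospike's implementation: the `Node` class, the `block_size` threshold, and the in-DRAM `index` used to locate records on flash are assumptions, and "flash" is simply a list of Python dictionaries.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    """One simulated server: a DRAM write buffer in front of a flash log."""
    name: str
    block_size: int  # bytes accumulated before one flash write (hypothetical)
    buffer: Dict[str, bytes] = field(default_factory=dict)
    buffered_bytes: int = 0
    flash_blocks: List[Dict[str, bytes]] = field(default_factory=list)
    index: Dict[str, int] = field(default_factory=dict)  # key -> flash block number

    def write_dram(self, key: str, value: bytes) -> None:
        # A small random update lands in DRAM only.
        old = self.buffer.get(key)
        if old is not None:
            self.buffered_bytes -= len(old)
        self.buffer[key] = value
        self.buffered_bytes += len(value)

    def maybe_flush(self) -> None:
        # Once enough updates have accumulated, write them to flash as one
        # large block and free the DRAM they occupied.
        if self.buffered_bytes < self.block_size:
            return
        block_no = len(self.flash_blocks)
        self.flash_blocks.append(dict(self.buffer))
        for key in self.buffer:
            self.index[key] = block_no
        self.buffer.clear()
        self.buffered_bytes = 0

    def read(self, key: str) -> Optional[bytes]:
        # The newest copy is in DRAM if present; otherwise one lookup on flash.
        if key in self.buffer:
            return self.buffer[key]
        block_no = self.index.get(key)
        return None if block_no is None else self.flash_blocks[block_no][key]


def replicated_put(replicas: List[Node], key: str, value: bytes) -> bool:
    """Update DRAM on every replica; acknowledge once all of them hold it."""
    for node in replicas:
        node.write_dram(key, value)
    acknowledged = True  # every replica now holds the update in DRAM
    # A real system would flush in the background after acknowledging;
    # it is done inline here to keep the sketch short.
    for node in replicas:
        node.maybe_flush()
    return acknowledged
```

Batching turns many small random updates into a few large writes, which is the efficiency the paragraph attributes to this design, while the DRAM index keeps a random read from flash to a single lookup.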

Data-in-flash solutions preserve the speed of DRAM and allow fast random reads from flash. Data-in-flash also allows much larger databases to be held in memory.

Figure 1 shows that these data-in-flash solutions can be dramatically lower in cost because of the potential ten-fold reduction in the number of servers and the higher cost of DRAM compared with NAND flash. This reduction, together with lower power and maintenance costs, leads to an overall 17X reduction in hardware costs, from $1.2 million to $73,000.

Figure 2 shows the cost/100,000 operations for a range of data requirements from 100 bytes/operation to 10,000 bytes/operation. At the lowest level, both solutions fully utilize the server capacity in the nodes. As the bytes/operation requirement increases, the data-in-flash nodes remain fully utilized until 5,000 bytes/operation. Figure 2 also shows that as bytes/operation increase, the data-in-DRAM nodes become less utilized, because the constraint is the amount of memory that can be attached to each server. The difference between the two solutions increases to a maximum of about 17 times. As the demand for and availability of Big Data grow, Wikibon believes that the amount of data required per operation will grow, and the data-in-flash architecture will become the only valid design option.
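The utilization argument behind Figures 1 and 2 reduces to a simple sizing rule: a cluster needs enough nodes for throughput and enough nodes for capacity, and pays for whichever number is larger. The sketch below encodes that rule. The per-node figures are hypothetical placeholders, not the inputs behind Table 1, and because the research does not spell out how bytes/operation maps to capacity, the sketch takes the active data size directly and assumes two copies of the data.

```python
import math
from dataclasses import dataclass

GB = 10**9
TB = 10**12


@dataclass(frozen=True)
class NodeType:
    """Per-node limits (the values used below are hypothetical)."""
    ops_per_sec: int   # operations/second one node can sustain
    usable_bytes: int  # DRAM (or DRAM plus flash) available for data


def nodes_needed(node: NodeType, ops_per_sec: int, data_bytes: int, copies: int = 2) -> int:
    """Nodes needed to meet both the throughput and the capacity constraint."""
    for_throughput = math.ceil(ops_per_sec / node.ops_per_sec)
    for_capacity = math.ceil(data_bytes * copies / node.usable_bytes)
    return max(for_throughput, for_capacity)


def throughput_utilization(node: NodeType, ops_per_sec: int, nodes: int) -> float:
    """Fraction of the cluster's throughput capacity actually used."""
    return ops_per_sec / (nodes * node.ops_per_sec)


def cost_per_100k_ops(nodes: int, cost_per_node: float, ops_per_sec: int) -> float:
    """Total cost divided by throughput expressed in units of 100,000 ops/second."""
    return nodes * cost_per_node / (ops_per_sec / 100_000)


if __name__ == "__main__":
    dram = NodeType(ops_per_sec=100_000, usable_bytes=64 * GB)      # hypothetical
    flash = NodeType(ops_per_sec=200_000, usable_bytes=1_500 * GB)  # hypothetical
    for name, node in (("data-in-DRAM", dram), ("data-in-flash", flash)):
        n = nodes_needed(node, 800_000, 2 * TB)
        print(name, n, f"{throughput_utilization(node, 800_000, n):.0%}")
```

With these placeholder values, the 800,000 operations/second, 2-terabyte replicated case needs 63 DRAM nodes running at about 13% of their throughput capacity, against 4 flash nodes running at 100%. The exact counts differ from Table 1 because the inputs differ, but the shape of the result is the same: capacity, not processing, drives the DRAM node count.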

Data-in-Flash Directions

Data-in-flash needs specific changes in database architecture to exploit the different characteristics of flash and DRAM. When implemented correctly, data-in-flash offers high IOPS for both reads and writes.

Offsite business continuance can be met with asynchronous copying, with some potential loss of data. Axxana technology could reduce the recovery point objective (RPO) to near zero by holding a synchronous copy of the data in flight locally on a special flash drive that is protected against fire, flood, and explosion and can be accessed over the Internet or a cellular network for data recovery.
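The data-loss exposure of asynchronous copying can be shown with a small model: updates are acknowledged at the primary site and queued for the remote site, so anything still in the queue when the primary site is lost is gone. The class and its `journal_survived` flag are hypothetical; the flag only stands in for a hardened local copy of in-flight data of the kind the text attributes to Axxana, and does not model that product.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class AsyncReplicatedStore:
    """Hypothetical model: writes are acknowledged locally, copied offsite later."""

    def __init__(self) -> None:
        self.primary: Dict[str, str] = {}
        self.remote: Dict[str, str] = {}
        self.in_flight: Deque[Tuple[str, str]] = deque()

    def put(self, key: str, value: str) -> None:
        # Acknowledged as soon as the primary site has the update.
        self.primary[key] = value
        self.in_flight.append((key, value))

    def ship(self, max_updates: int) -> None:
        # Background transfer of the oldest queued updates to the remote site.
        for _ in range(min(max_updates, len(self.in_flight))):
            key, value = self.in_flight.popleft()
            self.remote[key] = value

    def lost_on_disaster(self) -> List[Tuple[str, str]]:
        # Acknowledged but not yet offsite: the exposure the RPO describes.
        return list(self.in_flight)

    def recover(self, journal_survived: bool) -> Dict[str, str]:
        # State available after the primary site is lost. journal_survived
        # (hypothetical) models a hardened local copy of in-flight updates.
        recovered = dict(self.remote)
        if journal_survived:
            for key, value in self.in_flight:
                recovered[key] = value
        return recovered
```

Shipping the queue more often shrinks the backlog and therefore the RPO, but only a surviving local copy of the in-flight updates brings it close to zero.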

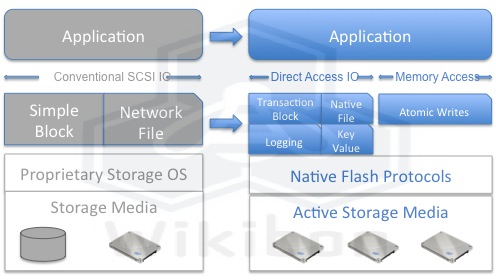

One of the current speed limitations is the SCSI protocol used to communicate between the processor and physical HDD/SSD drives. Figure 3 shows how traditional storage array-based architectures are migrating to software-led storage as part of a software-led infrastructure. The right-hand side of Figure 3 shows the expected technology improvements that will allow native access to flash. These will significantly reduce path length, latency, and cost/node, and will allow greater integration of storage software services with the application.

Source: Wikibon 2012

Earlier this year, Fusion-io announced a software development kit (SDK). Developers can use the application programming interfaces (APIs) and programming primitives included in the kit to communicate directly with PCIe flash. These APIs allow developers to create scalable apps faster by dramatically reducing latency, DRAM usage, and processor cycles, and make it possible to address business problems and opportunities that previously could not be solved. The proof demonstrations were discussed in the introduction.
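The sketch below does not use the Fusion-io SDK. As a loose analogy for the programming-model difference between block-style IO and directly addressed memory, it contrasts reading a fixed-size record with explicit `seek`/`read` calls against addressing the same bytes through Python's standard `mmap` module. On a conventional operating system a memory-mapped file still goes through the file system and block layer on page faults, so this shows the interface, not the latency gains the text describes; the record size and file layout are hypothetical.

```python
import mmap
import os
import tempfile

RECORD_SIZE = 64  # hypothetical fixed record size, in bytes


def block_io_read(path: str, record_no: int) -> bytes:
    """Block-style access: an explicit seek and read call per record."""
    with open(path, "rb") as f:
        f.seek(record_no * RECORD_SIZE)
        return f.read(RECORD_SIZE)


def mapped_read(mm: mmap.mmap, record_no: int) -> bytes:
    """Memory-style access: the record is addressed as a slice of the mapping."""
    start = record_no * RECORD_SIZE
    return mm[start:start + RECORD_SIZE]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "records.bin")
        with open(path, "wb") as f:
            for i in range(4):
                f.write(bytes([i]) * RECORD_SIZE)  # four 64-byte records
        with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as mm:
            assert mapped_read(mm, 2) == block_io_read(path, 2)
            mm[0:4] = b"new!"  # in-place update through the mapping
            mm.flush()         # ask the OS to write dirty pages back
```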

Many database vendors, including Aerospike and Couchbase, have active initiatives to exploit these APIs. The availability of APIs and SDKs from Fusion-io and other companies will enable database developers to dramatically improve the response times and latency of their products. This will allow Big Data transactional, analytic, and mixed databases in flash to reach petabyte size and beyond, with millions of operations/second/node. Big Data applications are freed from the tyranny of King Rust.

Conclusions & Recommendations

Wikibon concludes that data-in-DRAM implementations such as TimesTen from Oracle are a “flash in the pan” and will not support growing big data analytic or transactional analytic applications going forward. Wikibon believes that the current leading data-in-flash database for transactional analytic applications is Aerospike. Wikibon would recommend that Cassandra, Couchbase, MemStore and MongoDB implement data-in-flash technology to be competitive and grow the range of applications that can be addressed. Wikibon would strongly recommend that executives from ISVs and enterprises support a data-in-flash model, especially for high performance and big data systems. Data-in-DRAM should be bypassed.

Wikibon observes that IBM, Oracle, and Teradata need to radically change their architectures to embrace a true data-in-flash model, as their current implementations are uncompetitive from both a performance and a price/operation perspective. ISVs and enterprise IT executives should not assume that traditional vendors will compete quickly with data-in-flash, as doing so would impact their current revenues. To remain competitive, ISVs and enterprise executives should evaluate implementing available data-in-flash databases now, positioning themselves for dramatic improvements in database performance and price-performance.

Action Item: CIOs and CTOs should treat data-in-flash as a strategic technology, follow its evolution closely, and ensure that their organizations are in a position to exploit its business potential to the utmost. This applies not only to large enterprises but also to mid-sized companies. In a Wikibon case study, a small organization of 220 people was able to improve revenue by 10% with no increase in business cost by introducing a data-in-flash strategy for its core applications.

Footnotes: