
Storage Peer Incite: Notes from Wikibon’s February 2, 2010 Research Meeting

This week's Peer Incite focuses on the bleeding edge of network consolidation: Fibre Channel over 10Gb Ethernet (FCoE). This new, still-maturing technology promises to converge the SAN and the LAN, which could reduce cabling and port counts and simplify management with a single unified network across the data center. FCoE standards are now solidifying, and several vendors already have products based on them in the market, although interoperation between products from different vendors is still imperfect.

These technological challenges will be overcome over time, one way or another. The much larger problems are organizational. The SAN and the LAN are two very different creatures, managed by different organizations and optimized for very different goals, and those very real differences have to be resolved before the two can be consolidated.

Basically, the SAN is owned by the storage group and optimized first to handle very high volumes of data and large files with no data loss, and second for very high transmission speeds. The LAN is owned by the network group and optimized first for flexibility, and second for a transmission speed that meets business needs, often slower than the SAN's. It tolerates a certain degree of packet loss and handles the problem by resending dropped packets.

The storage group views the SAN as the center of the data universe, while the network group and CIO see the network as the epicenter of the computing infrastructure, with servers, desktop systems and storage as the peripherals. The two groups use very different management tools, reflecting these different views of the network. In most shops SAN management is handled with tools from the storage system vendors, while LANs and WANs today are managed using increasingly sophisticated tool sets that view the entire enterprise network, from data center to desktop, as a whole and focus on performance of the network and its peripherals.

Consolidating these two very different entities is a difficult challenge that goes far beyond mere technological issues. Certainly SAN managers can benefit from adding the sophisticated tools developed for LAN and WAN management to their toolkits. That is the easy part. But when the SAN becomes part of the overall corporate network, it still must be managed to ensure zero data loss and to handle very high-speed machine-to-machine transfers. Anything less will have an immediate negative impact on application performance. How this is to be balanced against the different optimization needs of the LAN and WAN is still an open question that promises to create issues for years to come.

G. Berton Latamore


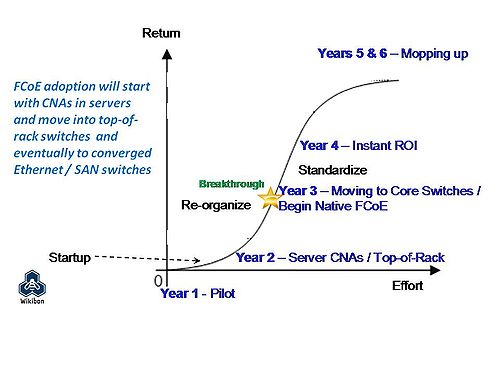

FCoE Adoption Curve

At the February 2, 2010 FCoE: Fact vs. Fiction Peer Incite, we discussed the adoption rate of Fibre Channel over Ethernet (FCoE), along with other myths and realities.

Figure 1 shows an S-shaped (ogive) curve that reflects how members of the Wikibon community believe FCoE will penetrate the marketplace. Early adoption is occurring in 2010 in the form of pilots. A key driver for FCoE adoption is virtualization and virtual I/O, which increase server heat densities by consolidating many smaller servers onto fewer, larger boxes. In addition, many customers are seeing port counts escalate dramatically, cable bulk is becoming unwieldy, and management complexity increases every year.
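An S-curve like the one in Figure 1 is commonly modeled with a logistic function. The Python sketch below is purely illustrative: the midpoint year (2012, loosely based on the late-2011 to mid-2012 critical-mass window predicted later in this note) and the steepness value are hypothetical assumptions, not Wikibon survey data.

```python
import math

# Hypothetical parameters: chosen only to illustrate the shape of an S-curve,
# not fitted to any survey or market data.
MIDPOINT_YEAR = 2012.0  # year by which half of eventual adopters have adopted
STEEPNESS = 1.5         # how sharply adoption accelerates around the midpoint


def adoption_share(year: float, midpoint: float = MIDPOINT_YEAR,
                   steepness: float = STEEPNESS) -> float:
    """Logistic S-curve: fraction of eventual adopters that have adopted by `year`."""
    return 1.0 / (1.0 + math.exp(-steepness * (year - midpoint)))


if __name__ == "__main__":
    for year in range(2010, 2016):
        # Slow start (pilots), rapid middle (critical mass), then saturation.
        print(year, f"{adoption_share(year):.0%}")
```

Changing the midpoint shifts the whole curve in time, while the steepness controls how abrupt the "breakthrough" looks.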

The three obvious candidates for FCoE are: 1) FC shops facing high growth; 2) customers building out new data centers; and 3) cloud service providers using FC. Early adoption will be in large-scale server deployments that adopt converged network adapters (CNAs). CNA technology will then migrate to top-of-rack switches, which will still provide separate connectivity out to individual Ethernet and FC switches. The technology will eventually move to converged switches and then to native end-to-end FCoE targets (e.g., storage).

However, this end-to-end vision will take several years to evolve and will occur in phases:

- Phase I will come in the form of CNAs used in new server deployments. CNAs will converge NICs and HBAs onto a single card but will still have separate Ethernet and FC connections exiting the system.

- Phase II will move CNA technology to top-of-rack (ToR) switches. Outbound CNA traffic will travel over a single converged connection to the ToR switch, which will in turn connect to separate Ethernet and FC switch infrastructure.

- Phase III will converge these separate switch resources onto a unified switch that supports both Ethernet and FC traffic exiting out to Ethernet clients and FC-based storage targets.

- Phase IV will see Ethernet and FC converged via FCoE end-to-end all the way to target devices.
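One way to read the four phases is as convergence advancing hop by hop along the path from server to storage. The Python sketch below encodes that reading; the four hop names are an illustrative simplification assumed here, not a standard taxonomy.

```python
from enum import IntEnum


class Phase(IntEnum):
    I = 1
    II = 2
    III = 3
    IV = 4


# Simplified server-to-storage path, ordered from the server outward.
# The hop names are an illustrative assumption, not a standard taxonomy.
HOPS = ("server adapter", "server-to-ToR link", "core switching", "storage target")


def converged_hops(phase: Phase) -> tuple:
    """Hops that carry converged Ethernet and FC traffic in a given phase."""
    return HOPS[: int(phase)]


def separate_hops(phase: Phase) -> tuple:
    """Hops that still run separate Ethernet and FC infrastructure."""
    return HOPS[int(phase):]


if __name__ == "__main__":
    for phase in Phase:
        print(f"Phase {phase.name}: converged={converged_hops(phase)}, "
              f"separate={separate_hops(phase)}")
```

In Phase I only the adapter is converged; by Phase IV no separate Ethernet and FC hops remain.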

We expect adoption to be slow at first, followed by a breakthrough when shops reach critical mass, CNA prices decline, and users are drawn by the savings from eliminating connections. Eventually the ROI will be virtually instantaneous, because the number of connections will be cut in half. Organizations that have not adopted FCoE by the 2012 timeframe will be at a disadvantage in cost, heat density, and overall environmentals.

Action item: FCoE adoption will be steady over the next five years. Eventually, the economics of halving connectivity costs will outweigh any near-term friction in the customer base today. Organizations with large investments in Fibre Channel should begin planning to converge Ethernet and FC technologies within the next two to five years.

Footnotes: Modified FCoE Adoption Curve

FCoE: Time to focus on Immediate Benefits

The most immediate driver for FCoE is the need to solve the cabling and adapter-card challenges in server racks and blade systems. With hundreds of servers in a rack supporting up to 1,000 virtual machines, the traditional approach of connecting each server with individual FC cards and NICs is unsustainable. Converged network adapters (CNAs) reduce the number of cables in the rack by about two-thirds. Two 20Gb cables from the CNAs connect each server to a top-of-rack (ToR) switch, which then connects to the existing Ethernet and FC networks.
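Where can a reduction of "about two-thirds" come from? If each server previously needed six cables and now needs only its two CNA cables, per-server cabling drops by two-thirds regardless of how many servers the rack holds. In the Python sketch below, the split of six cables into four NIC cables plus two FC cables and the 16-server rack size are hypothetical assumptions chosen for illustration.

```python
def cables_per_rack(servers: int, cables_per_server: int) -> int:
    """Total server-side cables in a rack (one cable per connected port)."""
    return servers * cables_per_server


# Hypothetical rack of 16 servers. Before convergence each server is assumed
# to use 4 NIC cables + 2 FC cables; after, 2 CNA cables to the ToR switch.
SERVERS = 16
before = cables_per_rack(SERVERS, cables_per_server=4 + 2)
after = cables_per_rack(SERVERS, cables_per_server=2)
reduction = 1 - after / before

print(f"{before} cables -> {after} cables ({reduction:.0%} fewer)")
```

Because the server count cancels out, the percentage depends only on the per-server cable counts before and after convergence.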

The benefits of this approach will be lower-cost blades and server racks that are easier to maintain, need less space and power, generate less heat, and are more reliable. The biggest single saving (about $100,000 per rack) comes from reduced cable and cabling costs.

Action item: CIOs and senior managers in installations with significant FC infrastructure should focus on positioning that infrastructure to take advantage of the lower-cost, lower-power servers and blades that server vendors will make available in volume in 2010. The immediate tasks are to test that these server configurations connect from the ToR switch to the existing FC and Ethernet networks, and to revise the operational and troubleshooting procedures written for FC cards and NICs to accommodate CNAs.

FCoE Technology Math Lesson

On the February 2 FCoE Fact vs. Fiction call, we discussed some of the potential technology advantages of Fibre Channel over Ethernet (FCoE). FCoE is the first major application to take advantage of the Ethernet enhancements defined by Data Center Bridging (DCB), and it is being implemented on a 10GbE technology base. This promises the ability to converge LAN and SAN technology, which suggests that CIOs should examine what can be done today and begin to plan for the future.

In a large data center, each server in a rack may have four, six, eight, or more 1GbE NIC ports and two to four 4Gb Fibre Channel (FC) ports. The NIC ports would probably be a combination of ports on the server motherboard and some number of single-, dual-, or quad-port NIC add-in adapters. The Fibre Channel ports would be on single- or dual-port add-in adapters. The NIC and FC ports would connect to different top-of-rack switches: the NICs to an Ethernet switch and the FC adapters to a Fibre Channel switch.

A typical rack of ten servers, each with six 1GbE NIC ports (two on the motherboard and two on each of two dual-port NICs) and one dual-port 4Gb FC adapter, then has:

- 140Gbps of total available I/O bandwidth,

- 80 cables (and switch ports consumed),

- 30 add-in adapters,

- 2 different top-of-rack switches.

In an FCoE design, the same rack of ten servers, with two dual-port 10GbE FCoE adapters in each server, would have:

- 400Gbps of total available I/O bandwidth,

- 40 cables (and switch ports consumed),

- 20 add-in adapters,

- 1 top-of-rack switch.

The number of cables is reduced by 50%, the number of adapters by 33%, and the number of switches in the rack by 50%. Meanwhile, total available I/O bandwidth increases by about 186%, from 140Gbps to 400Gbps (nearly three times the original). Power consumption also drops, and with it the heat generated and the air conditioning required. This example is a relatively simple one, with only ten servers in a rack. Consider the impact on a row of server racks, each holding more than ten servers.
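The rack arithmetic above can be checked with a short Python sketch. It encodes the two server configurations as described (six 1GbE ports plus two 4Gb FC ports on three add-in adapters, versus four 10GbE FCoE ports on two adapters), assumes one cable and one switch port per server port, and reports the percentage change for each metric. The class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    ports: int   # ports of this type per server
    gbps: float  # line rate of each port


@dataclass(frozen=True)
class ServerConfig:
    port_groups: tuple[PortGroup, ...]
    adapters: int      # add-in adapters per server
    tor_switches: int  # distinct top-of-rack switches the rack needs


def rack_totals(cfg: ServerConfig, servers: int = 10) -> dict:
    """Rack-level totals, assuming one cable (and one switch port) per server port."""
    ports = sum(g.ports for g in cfg.port_groups)
    return {
        "bandwidth_gbps": servers * sum(g.ports * g.gbps for g in cfg.port_groups),
        "cables": servers * ports,
        "adapters": servers * cfg.adapters,
        "tor_switches": cfg.tor_switches,
    }


def pct_change(before: float, after: float) -> float:
    return (after - before) / before * 100


# Six 1GbE NIC ports + two 4Gb FC ports; adapters = 2 dual-port NICs + 1 FC HBA.
legacy = ServerConfig((PortGroup(6, 1.0), PortGroup(2, 4.0)), adapters=3, tor_switches=2)
# Two dual-port 10GbE FCoE adapters per server.
fcoe = ServerConfig((PortGroup(4, 10.0),), adapters=2, tor_switches=1)

old, new = rack_totals(legacy), rack_totals(fcoe)
for metric in old:
    print(f"{metric}: {old[metric]:g} -> {new[metric]:g} "
          f"({pct_change(old[metric], new[metric]):+.0f}%)")
```

For bandwidth it reports an increase of about 186%: 400Gbps is roughly 2.9 times the original 140Gbps. Cables and ToR switches each fall by 50%, and adapters by 33%.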

Action item: As you plan new computing racks or new data centers, consider the long-term savings in equipment and expense by implementing a converged network using FCoE.

Organizational Imperatives for Converged Networks: Preparing for FCoE

Consolidation and convergence are popular themes in data centers constrained by budget, space, power, and cooling. Organizations hope that converged IT infrastructure will deliver a permanent step-function reduction in the cost of IT services, avoid or delay the need to build new data centers, and enable the company to live within energy consumption and cooling constraints. However, whether an IT organization is considering server, storage, or network consolidation, the consolidation typically requires a significant upfront investment, and early adopters will pay a price premium.

In that regard, the converged network delivered by FCoE is no different. As a result, many companies will delay decisions for a year or more until they determine that the pricing of FCoE justifies the upfront investment. Others will delay until the technology is determined to be sufficiently mature and until clear leaders emerge, since, despite the efforts of standards committees, mixed-vendor environments will almost certainly be more difficult to support, monitor, and maintain. As companies wait for prices to decline and leaders to emerge, however, it is imperative that they prepare themselves organizationally for converged networks.

Depending upon the size of the company and current organizational and reporting structures, converged networks may require changes in the budgeting process, change-management processes, management reporting structures, and chargeback systems. As an example, in most larger organizations, the storage administrator almost certainly has responsibility for the storage network infrastructure, requisitions additional FC switches and associated cables, and pays for the infrastructure out of a storage budget. The storage administrator most likely monitors and manages storage and storage-network performance and availability and has a charge-back or cost-allocation system for the storage capacity used by application owners. At the same time, the server or LAN administrator likely has responsibility for requisitioning the LAN infrastructure, including network adapters, LAN cabling, and Ethernet switches, owns the LAN budget, has a different application for monitoring LAN performance and health, and has a different chargeback system for allocating LAN costs to application owners.

In order for a company to gain the full benefit of FCoE, the departments, processes, and budgets must consolidate. And for the most effective and efficient implementation, the departmental, process, and budget consolidation should occur prior to the FCoE implementation. Because budgets are typically done on a calendar-year basis, and because these types of significant organizational changes are typically only done once a year, in concert with the budget cycle, the organizational changes required for FCoE could delay FCoE adoption by almost two years.

Action item: CIOs looking to achieve the maximum efficiency and cost savings should consider converged FCoE networks. However, as a prerequisite, they should first address the organizational and budgetary imperatives necessary to achieve a positive return. The primary areas to consider are budget ownership, charge-back or cost-allocation systems, staff reporting structure, and change-control processes. Failure to address these issues proactively will reduce the benefits of, and delay the return on, the FCoE investment.

Vendor Actions for FCoE

On February 2nd's FCoE Fact vs. Fiction Peer Incite, we discussed how one of the key benefits of FCoE is its robust vendor ecosystem, which includes server, operating system, network, and storage vendors. The technology draws on new standards in both storage (T11) and networking (IEEE and IETF). Some practitioners are concerned that FCoE could be plagued by the interoperability problems that have historically affected the FC market.

Vendors looking to move customers along the FCoE adoption curve would do well to consider both the industry's historical challenges and the long-term flexibility that customers are pursuing.

Action item: Vendors who are serious about FCoE will do more than pay lip service to the development of solutions. They should actively engage in the creation of standards that allow for vendor interoperability. They should also create robust documentation, including reference architectures.

Getting Rid of Stuff: 10 Gig E and FCoE

On the February 2, 2010 FCoE Fact vs. Fiction call, we discussed some of the benefits of FCoE and the move to 10 Gigabit Ethernet. The key value proposition is halving the number of connections and, consequently, connection costs. This means getting rid of cables, adapters, ports, and redundant switch infrastructure, and dramatically reducing communications costs.

Here's a video from @davegraham that clearly shows the opportunity to reduce cable bulk.

As the FCoE Adoption Curve shows, at some point in the mid-term (we predict between late 2011 and mid-2012) FCoE technology will reach a critical-mass breakthrough, and ROI will become virtually instantaneous. At that point FCoE will begin to have a meaningful impact by reducing cable bulk, cutting port costs, and eliminating redundant cards and switches.

Action item: For mission-critical storage applications, FCoE will allow practitioners to get rid of much unwanted and unnecessary infrastructure. But it won't happen overnight. Organizations should plan now to prepare for this trend and benefit from lower asset costs, less physical connection bulk, and less wasted space.