Software-Defined or Software-led storage infrastructure is a major trend we’ve been tracking at Wikibon. Pioneered by the hyperscale giants and slowly trickling into the traditional enterprise, the idea behind Software-led storage is to run rich storage function on commodity hardware and shift the point of control from proprietary storage arrays to a software- and compute-led model. The benefit of this approach, in theory, is simplified infrastructure built on inexpensive scale-out (versus scale-up) hardware. This in turn leads to easier scaling, increased automation and lower overall costs.

Also, as Wikibon member Nelson Nahum points out: "another advantage of using software defined storage (that is properly designed) is the levels of availability (uptime) you can reach are much higher than traditional storage arrays. This is because instead of having a dual controllers and a few drives in a configuration, you have many controllers where you can move workloads in case of failures. The idea is that instead of designing to avoid a single point of failures, you can design the software to handle multiple concurrent failures."

To date, however, broad-scale implementation of Software-Defined Storage has been limited in traditional Microsoft IT shops. With VMware’s marketing push toward the Software-Defined Data Center, Microsoft’s Windows Server 2012 launch was highly anticipated, and it offers some clues as to the direction of Software-led storage within Microsoft. Wikibon has been predicting for some time that ISVs would increasingly try to grab more storage function and pressure traditional storage models. In our view, however, specifically as it relates to Windows Server 2012, arrays that integrate with this new platform will provide better tactical ROI in the near term.

In particular, while Microsoft’s release of Windows Server 2012 is encouraging, its lack of robustness and maturity in certain storage functions leads us to believe that true Software-led storage from Microsoft is still a release cycle or two away. Specifically, array-based storage will continue to provide the best ROI for many small and mid-sized Microsoft shops over the next 18-24 months.

These were the findings of a recent study by Wikibon, published here in detail. Windows Server 2012 enables the offloading of certain storage functions to the array, for arrays that “speak” Windows Server 2012. Two positive features highlighted in the research are ODX (Offloaded Data Transfer), a capability designed to reduce server and network overhead, and Trim/Unmap, a capability that allows the reclamation of unused storage space.

In both of these cases, Wikibon’s research found that to the extent an array had the capability to exploit these new features, the value proposition of array-based storage was significantly better than relying solely on a Microsoft-led (Software-led) storage stack. While users we interviewed were encouraged by Hyper-V Version 3, two functions stood out as limiting within Windows Server 2012: Storage Spaces, a storage virtualization capability that pools storage; and de-duplication, which is intended to store data more efficiently.

As such, the array-based capability that Wikibon modeled to evaluate the business case demonstrated significantly better value than a Microsoft Software-led approach using commodity disks. Note: Wikibon modeled an EMC VNX, which had integration capabilities with Windows Server 2012. Practitioners should take care to ensure that new array purchases can exploit Windows Server 2012 capabilities.

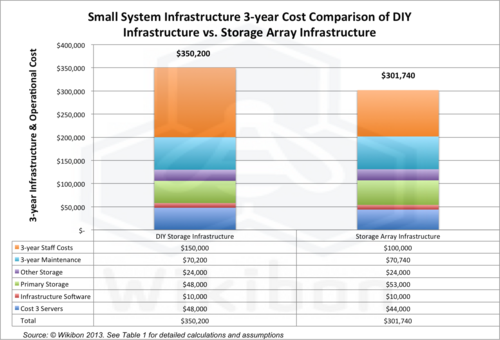

The data Wikibon presented shows two other important findings (see Figure 1):

- Spending 10% more on disk array hardware that can exploit Windows Server 2012 capabilities can lead to 14% lower overall costs relative to today’s Microsoft Software-led approach using JBOD;

- While server costs will be somewhat lower and largely offset more expensive array costs, the real savings come from infrastructure management costs (i.e. lower people costs).

While these numbers may not appear earth-shattering, the key message is that by utilizing array-based hardware that can integrate with and exploit Windows Server 2012 function, IT organizations will free up staff time and reduce management complexity by approximately 33%. This can lead to better IT staff productivity and a reduction in time spent doing non-differentiated heavy lifting for storage.
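As a rough illustration of how these component shifts combine into a single total-cost figure, the following Python sketch weights each cost category by its share of the baseline. The baseline mix and the 5% server reduction are hypothetical placeholders, not figures from the Wikibon model, and applying the roughly 33% management reduction directly to management cost is also an assumption; substitute your own numbers.

```python
def total_cost_change(baseline, changes):
    """Return the fractional change in total cost.

    baseline: dict mapping cost category -> baseline cost (any consistent unit)
    changes:  dict mapping cost category -> fractional change (+0.10 = 10% more)
    Categories missing from `changes` are treated as unchanged.
    """
    before = sum(baseline.values())
    after = sum(cost * (1 + changes.get(name, 0.0))
                for name, cost in baseline.items())
    return (after - before) / before


# HYPOTHETICAL baseline mix (percent of total spend), not Wikibon's data.
baseline = {"array_hardware": 20.0, "servers": 30.0, "management": 50.0}

# +10% array hardware is from the text; -5% servers is an ASSUMED stand-in for
# "somewhat lower"; -33% management ASSUMES cost tracks the complexity reduction.
changes = {"array_hardware": 0.10, "servers": -0.05, "management": -0.33}

print(f"Total cost change: {total_cost_change(baseline, changes):.1%}")
```

With this made-up mix the total falls by about 16%. The result is sensitive to how much of the baseline is management cost, which is why the people-cost line dominates the business case.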

Note that not all IT organizations, and CFOs in particular, will buy into this argument as the justification for a business case. Notably, "soft dollar" savings from redeployed staff often take a back seat to CAPEX reduction, especially when they amount to only a portion of an FTE. Indeed, we are arguing that spending additional CAPEX on infrastructure can lead to better IT productivity, which to us is a sensible business case. Nonetheless, IT practitioners making this argument should have a corollary business case that specifies how the freed-up staff time will lead directly to incremental business value.

Action Item: Microsoft’s Windows Server 2012 delivers some compelling function, but critical storage capabilities are lacking, such that true Software-Defined Storage from Microsoft remains elusive. In the near-to-mid term, to achieve maximum efficiency, IT organizations (ITOs) must either investigate alternative software-defined offerings or stick with array-based storage solutions that integrate with Windows Server 2012. Importantly, to the extent these traditional arrays exploit key new features in Windows Server 2012, their business value will likely exceed that of an all-Microsoft storage stack approach.
