Introduction

For several years Wikibon has been studying the benefits of virtualization and thin provisioning. Our research spans dozens of detailed customer interviews and studies of many hundreds of customers over several thousand storage volumes. As well, our information has been validated through Wikibon's Energy Lab, which produces studies designed to assist customers in understanding the degree to which a product contributes to energy efficiency.

Our objectives in conducting efficiency analyses are to identify not only the hardware impacts on issues related to utilization and energy consumption but more importantly the hard-to-quantify green software aspects of technologies. Wikibon Energy Lab Validation Reports are submitted to utilities such as Pacific Gas & Electric Company as part of an energy incentive qualification process where often outside engineering firms have validated our findings.

The Wikibon Energy Lab process defines and validates various testing procedures to determine, for example, energy consumed by specific products in various configurations. As well, Wikibon reviews actual customer results achieved in the field to validate the effectiveness of these technologies based on real-world field-data analysis. These proof points are mandatory for the utility company to qualify a specific vendor's technology for energy incentives. Thin provisioning and virtualization are two key technologies that we have studied.

Storage Systems 101

Typically, storage arrays consist of an array controller, which controls the major functions in the system, and the spinning disks in the array. Due to 50-year-old historical nuances in the way servers and storage communicate with each other, data must be placed on contiguous spaces within the disk system to be accessed by a specific server. Think of laying out data on a disk as laying out wood flooring of variable-sized planks without the benefit of a saw. Imagine if all the planks were coming to the builder in different sizes with very little predictability as to the size of the next plank that had to be laid on the floor. Soon the floor would have many open spaces. The same is true for the vast majority of disk devices installed today.

Imagine further the floor is populated with lots of open space and a large 15’ plank comes to the builder to be laid out. The builder sees there’s not a 15’ contiguous space in the room so in order to accommodate that plank, the builder either needs to free up space (which rarely happens) or the builder needs a new room. The same is true for disk arrays. To rip up the planks and re-organize them is prohibitively difficult and expensive so IT managers simply buy more disk space that in turn is wasted.
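The plank analogy can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 20-block disk, the block layout, and the function name `find_contiguous` are hypothetical and not drawn from any real array. It shows how a disk can have plenty of free blocks in total and still be unable to satisfy a request that needs them in one contiguous run.

```python
def find_contiguous(free_map, size):
    """Return the start index of the first run of `size` free blocks, or None."""
    run = 0
    for i, is_free in enumerate(free_map):
        run = run + 1 if is_free else 0
        if run == size:
            return i - size + 1
    return None


# Hypothetical 20-block disk: True = free, False = in use.
disk = [True] * 20
for start, length in [(0, 3), (5, 4), (12, 2), (17, 2)]:
    for block in range(start, start + length):
        disk[block] = False

free_blocks = sum(disk)                   # total free blocks on the disk
fits_three = find_contiguous(disk, 3)     # a 3-block "plank" still fits
fits_four = find_contiguous(disk, 4)      # a 4-block "plank" does not

print(f"{free_blocks} blocks free, 3-block request -> {fits_three}, "
      f"4-block request -> {fits_four}")
```

In this made-up layout nine blocks are free, yet a four-block request fails because no free run is longer than three blocks, which is the situation that pushes IT managers to buy more disk.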

Enter Storage Virtualization and Thin Provisioning

Storage virtualization breaks up the storage into small, evenly sized chunks that can be assembled in any pattern and viewed logically by the server as though it were contiguous. The capacity on physical drives is broken into small chunks that are re-assembled into logical disks according to the capacity needs of the applications running on the server. The server sees what are referred to as ‘Virtual Volumes’, which is storage that appears to the server as contiguous space on the disk. Storage virtualization software works with the array controller to map the physical storage that is placed on the disk (which is dispersed) to the virtual volume that the server sees. The server asks for a piece of data and as far as the server is concerned, the next piece of required data is contiguous to the previous data. The bottom line is virtualization can increase average utilization from 20% or 30% to perhaps as high as 40%-50%. But why isn’t utilization much higher?
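A minimal sketch of the mapping idea follows. It assumes a fixed chunk size and a simple per-volume list that maps each virtual chunk to a physical chunk; the names `CHUNK_SIZE`, `VirtualVolume`, and `translate` are hypothetical, and real array controllers are considerably more elaborate.

```python
CHUNK_SIZE = 4  # blocks per chunk (illustrative value only)


class VirtualVolume:
    """Presents scattered physical chunks as one contiguous address space."""

    def __init__(self, physical_chunks):
        # chunk_map[i] is the physical chunk that backs virtual chunk i.
        self.chunk_map = list(physical_chunks)

    def translate(self, virtual_block):
        """Map a virtual block address to a physical block address."""
        chunk_index, offset = divmod(virtual_block, CHUNK_SIZE)
        return self.chunk_map[chunk_index] * CHUNK_SIZE + offset


# Three physical chunks that are nowhere near each other on disk.
vol = VirtualVolume([7, 2, 11])

# The server reads virtual blocks 0..11 as if they were contiguous.
addresses = [vol.translate(block) for block in range(12)]
print(addresses)
```

The server only ever sees virtual blocks 0 through 11; the scattered physical locations are hidden behind the map.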

Virtualization in and of itself doesn’t allow extremely high storage utilization for two reasons: 1) if applications run out of storage, they crash and 2) moving data to new physical storage space to accommodate growth can take days or months of planning and preparation. So IT managers have developed the practice of simply over-allocating storage to applications, meaning they always provision more storage than is needed to account for growth. This approach is commonly referred to as “fat provisioning.” Space once allocated is very hard to reclaim.

Thin provisioning software allows IT managers to make the application think it has plenty of space (so it won’t crash) while in reality consuming only the physical space needed for the data written at that moment. Typically a spare pool of free space or chunks is available to allocate for any server that is connected to the storage array. Virtualization makes the implementation of this provisioning feasible, because additional individual blocks can be allocated to any volume from free space, and free space itself can be increased dynamically.
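The allocate-on-write behavior can be sketched as follows. This is a simplified illustration, not any vendor's implementation: `ThinPool`, `ThinVolume`, and their methods are hypothetical names, and chunk counts stand in for real capacities.

```python
class ThinPool:
    """Shared pool of free physical chunks for all volumes on the array."""

    def __init__(self, physical_chunks):
        self.total = physical_chunks
        self.free = list(range(physical_chunks))

    def allocate(self):
        if not self.free:
            raise RuntimeError("pool exhausted: add physical capacity")
        return self.free.pop()

    def grow(self, extra_chunks):
        # Free space can be increased without touching existing volumes.
        self.free.extend(range(self.total, self.total + extra_chunks))
        self.total += extra_chunks


class ThinVolume:
    """Advertises `virtual_chunks` but consumes a chunk only on first write."""

    def __init__(self, pool, virtual_chunks):
        self.pool = pool
        self.virtual_chunks = virtual_chunks
        self.chunk_map = {}  # virtual chunk -> physical chunk

    def write(self, virtual_chunk):
        if not 0 <= virtual_chunk < self.virtual_chunks:
            raise IndexError("write beyond advertised volume size")
        if virtual_chunk not in self.chunk_map:
            self.chunk_map[virtual_chunk] = self.pool.allocate()
        return self.chunk_map[virtual_chunk]


pool = ThinPool(physical_chunks=10)
vol_a = ThinVolume(pool, virtual_chunks=100)
vol_b = ThinVolume(pool, virtual_chunks=100)

vol_a.write(0)
vol_a.write(50)
vol_b.write(3)
vol_a.write(0)  # rewriting an existing chunk consumes no new space

advertised = vol_a.virtual_chunks + vol_b.virtual_chunks
consumed = pool.total - len(pool.free)
print(f"advertised {advertised} chunks, physically consumed {consumed}")
```

Both volumes believe they own 100 chunks, but only the three chunks actually written are taken from the shared pool, and `grow` models adding physical capacity later.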

The bottom line is the combination of virtualization and thin provisioning means less wasted space, less spinning disk, less energy consumed.

Quantifying the Impact of Virtualization and Thin Provisioning

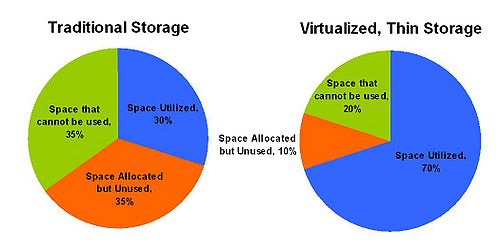

Historically, spinning disks within storage arrays are only 20-40% utilized (see Figure 1). There are three main reasons for this phenomenon with traditional storage systems:

- Storage must be allocated on contiguous disk space,

- Once allocated, storage is very hard to move,

- Because of the first two constraints, IT managers over-allocate space to accommodate future growth.

Other factors clearly play a role, including the need in certain use cases to under-utilize storage to reduce the amount of data under a disk's actuator, thereby increasing performance. However the inefficient way in which traditional storage systems lay storage out on the devices is a primary culprit in wasted space.

Virtualization and thin provisioning are two powerful storage optimization techniques that significantly improve effective storage utilization. Suppliers have leveraged these technologies to implement thin copying and automated tiered storage management to provide what Wikibon believes are crucial elements in efficient storage systems. The operational efficiencies derived from virtualization and thin provisioning have enabled storage administrators to store more data on less physical hardware while delivering substantial operational productivity and improved energy efficiencies.

Figure 1 illustrates the impact of virtualization and thin provisioning on storage utilization. The data have been derived by analyzing approximately 2,000 storage volumes at 125 customer sites over the past two years. Data were statistically analyzed based on metadata supplied to vendors through "phone home" service systems or other data supplied by customers downloaded directly from storage controllers. At no time was any user or customer data made available to Wikibon, only controller metadata. The data show the pre- and post-implementation metrics for virtualization and thin provisioning. No effort was made to isolate the effects of these technologies individually. The information has been summarized into three categories:

- Space utilized - actual customer data written to disk space that was allocated and used;

- Space allocated but unused - because of inefficiencies of the way data is laid out on traditional disk subsystems;

- Space that cannot be used - space that was not allocated due to operational, technical, or other constraints.

The bottom line is that, as a direct result of the combined benefits of virtualization and thin provisioning, customer data stored on the system increased from an average of 35% to 70% of capacity.
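To put the 35% and 70% figures in capacity terms, the short calculation below estimates the raw disk needed to hold a given amount of data at each utilization level. The 70 TB data set and the function name `raw_capacity_needed` are assumptions chosen for illustration; only the two utilization percentages come from the study.

```python
def raw_capacity_needed(data_tb, utilization_pct):
    """Raw capacity (TB) required to hold `data_tb` at a given utilization."""
    return data_tb * 100 / utilization_pct


data_tb = 70  # hypothetical amount of customer data, in TB

before = raw_capacity_needed(data_tb, 35)  # pre-implementation average
after = raw_capacity_needed(data_tb, 70)   # post-implementation average

print(f"before: {before:.0f} TB raw, after: {after:.0f} TB raw")
```

Under these assumptions the same data fits on half the raw capacity, which is where the savings in spinning disk and energy come from.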

Advice to Practitioners

Wikibon has found that the benefits of virtualization and thin provisioning will vary by use case, application, workload, and array manufacturer. As well, there are several caveats users should consider when evaluating thin provisioning. Nonetheless, our studies have shown dramatic results. In one cluster of customer examples we found:

- 97% of customers experienced an increase in effective storage capacity as a result of virtualization and thin provisioning;

- Over 75% of customers achieved a 50% or greater increase in effective space (i.e., they would have had to install 1.5 times the storage capacity using traditional arrays to achieve the same allocated space);

- 50% of customers achieved additional capacity benefits of 150% or more from virtualization and thin provisioning (i.e., they would have had to install 2.5 times the storage capacity with traditional arrays to achieve the same allocated space);

- 25% of customers achieved additional capacity benefits of 500% or more from virtualization and thin provisioning (i.e., they would have had to install six times the storage capacity with traditional arrays to achieve the same allocated space).
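The parenthetical multiples in the list above follow from one simple relationship: an increase of N% in effective capacity means a traditional array would need 1 + N/100 times the installed capacity. A small sketch of that arithmetic, with a hypothetical function name:

```python
def traditional_capacity_multiple(increase_pct):
    """Installed-capacity multiple a traditional array would need
    to match an `increase_pct` percent gain in effective capacity."""
    return 1 + increase_pct / 100


multiples = {pct: traditional_capacity_multiple(pct) for pct in (50, 150, 500)}

for pct, multiple in multiples.items():
    print(f"{pct}% increase -> {multiple} times the traditional capacity")
```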

As indicated, such results will vary dramatically, so practitioners are advised to understand their specific use case and to require manufacturers to help predict the benefits and stand by those predictions with contractual agreements and penalties if they are missed.