
Executive Summary

Wikibon is conducting extensive research into Software-Led Infrastructure and how it contributes both to lower IT costs and to improved application delivery and flexibility for the business.

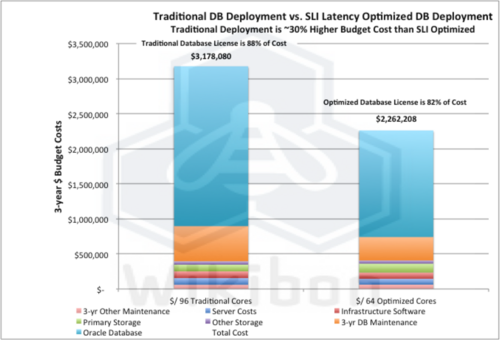

Applying Software-Led Infrastructure (SLI) principles allows databases to be run at significantly lower cost and with greater flexibility. For example, a typical traditional deployment of Oracle Enterprise Edition will be at least 30% more expensive to run than the same environment deployed on a Software-Led Infrastructure.

Figure 1 shows the overall hard-cost savings from moving a traditional physical deployment to an SLI deployment. In the traditional physical deployment, database licenses account for 88% of the cost.

We developed an economic model to assess the cost benefit of optimizing Oracle infrastructure over a three-year period. We compared two Oracle database environments running Enterprise Edition: 1) a traditional "roll-your-own" infrastructure, and 2) a "roll-your-own" infrastructure optimized for low latency through increased server memory and the use of flash within a tiered storage construct (a capability not currently available from Oracle). For the left-hand side of the chart, we assumed a modular storage array with no flash or tiered storage support; for the right-hand side, we assumed an EMC VMAX using FAST VP and flash within the array.

We chose to compare a cheaper modular array with a more expensive VMAX to assess the potential savings from optimizing infrastructure through the intelligent, balanced use of RAM and external flash. Importantly, while the storage and server CAPEX on the right is higher, the overall costs are significantly lower because fewer Oracle licenses are required.

In our example, an SLI deployment would reduce the number of cores required from 96 to 64, while requiring more spending on servers (more RAM and faster processors), storage components, and virtualization. On net, however, the overall cost can be reduced by about 30% through more efficient use of database licenses. Database licensing makes up 88% of costs in the traditional case vs. 82% in the SLI case. The SLI deployment also offers more high-availability options, greater flexibility to deploy and update, and easier automation and management.
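The percentages above can be cross-checked with a few lines of Python. This is a minimal sketch, not Wikibon's economic model: it assumes that license cost scales linearly with licensed cores and that the 88% and 82% figures are license shares of total three-year cost. All names are illustrative.

```python
# Hypothetical cross-check of the Figure 1 percentages.
# Assumptions: license cost is linear in licensed cores, and the 88% / 82%
# figures are the license share of total three-year cost in each case.

TRADITIONAL_CORES = 96
SLI_CORES = 64
TRADITIONAL_LICENSE_SHARE = 0.88  # license share of total cost, traditional case
SLI_LICENSE_SHARE = 0.82          # license share of total cost, SLI case


def total_cost(license_cost: float, license_share: float) -> float:
    """Back out total cost from the license bill and its share of the total."""
    return license_cost / license_share


def relative_savings() -> float:
    """Fractional reduction in total cost, SLI versus traditional."""
    traditional_license = 1.0  # normalize the traditional license bill to 1
    # Assumption: license cost scales linearly with licensed cores.
    sli_license = traditional_license * SLI_CORES / TRADITIONAL_CORES
    traditional_total = total_cost(traditional_license, TRADITIONAL_LICENSE_SHARE)
    sli_total = total_cost(sli_license, SLI_LICENSE_SHARE)
    return 1.0 - sli_total / traditional_total


if __name__ == "__main__":
    print(f"Core reduction: {1 - SLI_CORES / TRADITIONAL_CORES:.0%}")
    print(f"Total cost reduction: {relative_savings():.0%}")
```

Under these assumptions the script prints a 33% core reduction and a 28% total cost reduction, consistent with "about 30%". Equivalently, the traditional deployment costs roughly 40% more than the SLI one (1 / 0.715 ≈ 1.40), in line with the "at least 30% more expensive" claim.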

Setting up a Software-Led Infrastructure will also enable easier migration of suitable workloads into the cloud, which will itself have to be deployed in an effective Software-Led Infrastructure.

The bottom line: By applying SLI principles (virtualizing, and using more expensive servers and blazing-fast storage), the number of cores can be reduced by 33%. The resulting savings in Oracle Enterprise Edition license costs pay for the full cost of the enhanced servers and storage twice over. The hardware and a backup system come for free!
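The "twice over" claim can be sanity-checked with a short calculation. This is a hypothetical sketch under stated assumptions: license cost is proportional to cores, and everything other than licenses in the SLI case (the 18% not spent on licenses) is treated as the cost of servers, storage, and virtualization. The function name and parameters are illustrative.

```python
# Hypothetical check of "license savings pay for the hardware twice over".
# Assumptions: license cost is linear in cores; in the SLI case, licenses are
# 82% of total cost and the remainder is servers, storage, and virtualization.


def payback_multiple(trad_cores: int = 96, sli_cores: int = 64,
                     sli_license_share: float = 0.82) -> float:
    """License savings divided by total non-license SLI spend (normalized units)."""
    trad_license = 1.0                                   # normalize traditional license bill
    sli_license = trad_license * sli_cores / trad_cores  # assumed linear in cores
    license_savings = trad_license - sli_license
    sli_total = sli_license / sli_license_share           # back out SLI total cost
    sli_non_license = sli_total - sli_license            # servers, storage, virtualization
    return license_savings / sli_non_license


if __name__ == "__main__":
    print(f"License savings cover non-license SLI spend {payback_multiple():.1f}x")
```

With the default inputs the ratio is about 2.28, so under these assumptions the license savings cover the entire non-license SLI spend a little more than twice.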

SLI Database Challenges

The most costly IT budget line item is enterprise software, and the largest component of the enterprise software budget is database software (whether from IBM, Microsoft, or Oracle). Software licensing costs are high, complex, and tied to the power of the processor. For example, an Oracle Database Enterprise Edition license costs $47,500 per processor (see the footnote for the definition of a processor).

While database cost savings are a compelling argument for SLI, users need to carefully consider the impact on mission-critical application performance, as well as the best practices that mitigate these challenges. Software-Led Infrastructure has five fundamental characteristics, listed below in order of importance to database processing. The technical and management challenges of database environments are described for the first two.

- Pervasive Virtualization and Encapsulation:

  - Databases are often the key component of application systems and require good performance and availability. Virtualizing databases can hurt performance because of the processor overhead of virtualization (around 10%).

- Distributed High Performance Persistent Storage:

  - In a virtualized system, IO passes through the hypervisor, which imposes a virtualization IO “tax”: it blends the IO from multiple systems and makes the normal caching mechanisms of disk-based IO less effective (about a 15% impact on IO performance).

  - The biggest constraint on database performance is almost always storage performance, especially when IO goes to hard disk drives (HDDs), each with limited IOs per second and limited bandwidth. When a large number of IOs go to HDD, the result is high average IO times and very high variance. Wikibon research illustrates the difference: a good HDD array averages 5 ms with a variance of 169, while a good flash-only array (or a tiered storage environment with a very high percentage of flash) averages 2 ms with a variance of 1.

  - The sharing of virtualized system resources can lead to the “noisy neighbor” phenomenon, where a rogue job interferes with the performance of the central application. A frequent example is a long-running, badly written query that locks up the database, causing poor IO response times and increased variance for more important production work.

  - SQL databases are sensitive to variance because of their read and write locking mechanisms. Extended locking chains cause threads to time out, with subsequent major disruption to the end-user experience. This is especially true of virtualized databases, so processors and storage must be balanced for a virtualized environment.

  - Storage systems supporting virtualized databases need to be able to guarantee IO response time and variance, using a combination of secure multi-tenancy capabilities and upper and lower caps on IO rates (IOPS) for database environments.

- Intelligent Data Management Capabilities:

  - This enables management across the system as a whole. The key is ensuring that all metadata required for automated management is available from all system hardware and software components via industry-standard APIs.

- Unified Metadata about System, Application, & Data:

  - This is a longer-term SLI requirement: consistent, rich metadata that can be accessed rapidly.

- Software Defined Networking.

These negative impacts on database systems are why databases have been the last class of software to be virtualized. However, this research shows that following best practice allows all databases to be virtualized, assuming the ISVs have certified them for virtualization. Under these conditions, virtualization will reduce costs, provide alternative high-availability options, and improve business flexibility through shorter time to implement and time to change.
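The upper IOPS cap described under storage can be illustrated with a token bucket, one common rate-limiting technique. This is a hypothetical sketch, not how VMAX or any particular array implements quality of service: the class name `IopsCap` and its `max_iops` and `burst` parameters are illustrative, and a lower cap (a guaranteed minimum) would additionally need a scheduler across tenants, which is not shown.

```python
import time
from typing import Callable


class IopsCap:
    """Token-bucket limiter enforcing an upper IOPS cap for one tenant.

    Hypothetical illustration: max_iops is the sustained ceiling and burst is
    how many IOs may be issued back-to-back after an idle period.
    """

    def __init__(self, max_iops: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_iops = max_iops
        self.burst = burst
        self.clock = clock
        self.tokens = float(burst)  # start full so an idle tenant can burst
        self.last = clock()

    def try_issue(self) -> bool:
        """Return True (and consume a token) if one IO may be issued now."""
        now = self.clock()
        # Refill at max_iops tokens per second, never beyond the burst size.
        self.tokens = min(float(self.burst),
                          self.tokens + (now - self.last) * self.max_iops)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # over the cap: the caller queues or delays this IO


if __name__ == "__main__":
    fake_now = [0.0]  # simulated clock so the example is deterministic
    cap = IopsCap(max_iops=1000, burst=10, clock=lambda: fake_now[0])
    print(sum(cap.try_issue() for _ in range(50)))  # prints 10 (the burst)
    fake_now[0] = 0.005                             # 5 ms later
    print(sum(cap.try_issue() for _ in range(50)))  # prints 5 (refilled tokens)
```

Capping a rogue job this way bounds how much of the shared IO path it can consume, which is the mechanism by which caps limit “noisy neighbor” interference.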

SLI Best Practice for Database

Best practice for database infrastructure should include the following:

- Memory optimization on the servers to ensure that memory is not a constraint on system performance:

  - Optimize does not mean maximize! The number of cores should be optimized for the database environment.

  - Memory size should be optimized. Memories that are too large can hurt performance if L1/L2 cache hit rates are affected.

  - In Figure 1, a 30% cost uplift is assumed per optimized server core to increase memory and balance the power of each core in a virtualized environment. Overall, the number of cores is reduced from 96 to 64. The reduction comes from the increased power and memory of the servers, better utilization of server resources through lower multi-programming levels and more efficient locking, and reduced IO times (see lower-latency storage below).

- Virtualization of database systems onto physical servers grouped by database type (e.g., Oracle Enterprise Edition), with virtual databases spread across some or all of the physical servers (e.g., a virtual Oracle RAC instance on each physical server).

- Deployment of the lowest-latency storage possible, with minimal “noisy neighbor” interference, while ensuring that RPO and RTO SLA objectives can be met:

  - The average IO response time should be low (<2 ms).

  - The variance of IO response time should also be very low. For example, a variance target could require that significantly fewer than 1% of IOs exceed 8 ms and that no IO exceeds 64 ms. Better variance is worth trading for slightly longer average response times.

  - In Figure 1, the cost of storage is assumed to be twice that of traditional storage. The storage is flash-based, with more than 99% of IOs served from the flash tier.

  - The combination of more capable (and more expensive) servers, low-latency, low-variance IO performance, and the ability to share load across virtualized resources increases the efficiency of the server systems. This is key to reducing the number of cores in Figure 1 from 96 to 64 while maintaining the same database performance profile.

- Implementation of effective tools to identify the root cause of performance issues.

- A requirement that all components of the system be manageable (now or in the near future) by automation software via RESTful APIs.
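The example response-time targets in the storage best practice can be expressed as a simple check over a sample of measured IO latencies. This is a sketch under assumptions: latencies are in milliseconds, variance is population variance in ms², and "significantly less than 1%" is simplified to a strict 1% ceiling. The function names are hypothetical.

```python
from statistics import mean, pvariance


def latency_report(latencies_ms: list[float]) -> dict[str, float]:
    """Summarize a sample of IO response times measured in milliseconds."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    n = len(latencies_ms)
    return {
        "mean_ms": mean(latencies_ms),
        "variance_ms2": pvariance(latencies_ms),  # population variance
        "over_8ms_fraction": sum(x > 8.0 for x in latencies_ms) / n,
        "over_64ms_count": sum(x > 64.0 for x in latencies_ms),
    }


def meets_example_targets(report: dict[str, float]) -> bool:
    """Mean under 2 ms, under 1% of IOs above 8 ms, and no IO above 64 ms."""
    return (report["mean_ms"] < 2.0
            and report["over_8ms_fraction"] < 0.01
            and report["over_64ms_count"] == 0)


if __name__ == "__main__":
    flash_like = [1.0] * 198 + [3.0, 9.0]  # one slow IO in 200 (0.5%)
    hdd_like = [5.0] * 99 + [70.0]          # one IO beyond 64 ms
    for name, sample in (("flash-like", flash_like), ("hdd-like", hdd_like)):
        print(name, meets_example_targets(latency_report(sample)))
```

The synthetic flash-like sample passes and the HDD-like sample fails on both its mean and its single 70 ms outlier, showing why a variance target catches problems that an average alone would hide.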

The benefits shown in Figure 1 come from improving the utilization of Oracle licenses. This does not directly lead to lower costs, but it can help avoid increases in license fees, especially during enterprise agreement negotiations. This is a complex area, and Wikibon has tackled it in research entitled "Oracle Negotiation Myths" and "Understanding Virtualization Adoption in Oracle Shops". The negotiation should focus less on license savings and more on the better use and greater benefit the organization will get from its Oracle deployments, and on the greater likelihood that Oracle will be used in future projects.

Also not included in Figure 1 are the very real benefits of improved flexibility and better availability options. Applications whose RPO/RTO requirements are less stringent than those that justify a full Oracle RAC implementation may benefit from virtualization fail-over capabilities. Faster deployment and faster delivery of change will lower IT operational costs and increase the business value delivered to the line of business.

Future Wikibon Software-Led Research

Software-Led Infrastructure will continue to be a major research area for Wikibon in 2013, with a special focus on database environments. Wikibon will publish the detailed assumptions behind Figure 1, as well as in-depth case studies of successful implementations.

Action Item: CIOs should align objectives by making the IT operations budget responsible for some portion of database software license costs. Moving to a full Software-Led Infrastructure should be set as a clear objective, including the ability to migrate suitable workloads to the cloud when financially attractive. All database software that is certified for virtualization should run in an optimized environment with suitable virtualization tools and optimized servers and storage. In time, this should include automated operations.

Footnotes: The term "per processor" is defined with respect to physical cores and a processor core multiplier (for common processors, the number of processor licenses is 0.5 × the number of cores). For example, an 8-processor, 32-core server using Intel Xeon 56XX CPUs would require 16 processor licenses (32 cores × 0.5). Other Oracle database features are also charged per processor; for example, the Real Application Clusters (RAC) feature costs $23,000 per processor.
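The footnote's licensing rule translates directly into code. This is a minimal sketch using the list prices quoted in this article; it assumes fractional license counts are rounded up to the next whole license and ignores support fees and discounts. The function names are illustrative.

```python
import math

EE_PER_PROCESSOR = 47_500   # Enterprise Edition list price per processor (from the text)
RAC_PER_PROCESSOR = 23_000  # RAC option list price per processor (from the text)


def processor_licenses(cores: int, core_factor: float = 0.5) -> int:
    """Processor licenses = physical cores x core factor, rounded up (assumed)."""
    return math.ceil(cores * core_factor)


def license_cost(cores: int, core_factor: float = 0.5, rac: bool = False) -> int:
    """List license cost in dollars; excludes support fees and discounts."""
    per_processor = EE_PER_PROCESSOR + (RAC_PER_PROCESSOR if rac else 0)
    return processor_licenses(cores, core_factor) * per_processor


if __name__ == "__main__":
    print(processor_licenses(32))               # footnote example: 16
    print(license_cost(96) - license_cost(64))  # 96 vs 64 cores: 760000
```

`processor_licenses(32)` returns 16, matching the footnote's example. For 96 versus 64 cores, Enterprise Edition list licenses come to $2,280,000 versus $1,520,000, a $760,000 difference before RAC or any other per-processor option is added.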