Note: For the purposes of this article, the terms “SDDC” and “software-led infrastructure” are interchangeable.


Introduction

As an industry, we’re seeing more and more products released that don’t depend on proprietary underlying hardware. As I’ve mentioned before, this era really began in the early 2000s with the release of VMware’s ESX server, which brought into the mainstream the concept of hardware abstraction through virtual software constructs that we call virtual machines.

In the years since, VMware, along with the rest of the virtualization ecosystem, has massively progressed this concept to new heights. Today, automated mechanisms have the ability to migrate workloads between hosts and storage arrays when certain conditions warrant. Although these workloads are complete virtual machines, the applications inside these VMs benefit from availability mechanisms that weren’t available in an all-hardware world.

It’s fair to say that most IT pros these days are pretty aware of the benefits wrought from the virtualization era of computing.

Virtualization is just the beginning

However, server virtualization alone does not a software defined data center (SDDC) make. While the hypervisor provides the underlying foundation for SDDC, that resource is quickly becoming a simple commoditized abstraction layer on top of which more powerful orchestration and automation services reside. That said, this virtualized abstraction remains critical, as it provides a common layer that sits between compute resources and higher-level management functions.

The compute resource is but one of three key foundational elements of the data center, and that’s been handily “software-itized” already. Today, we’re seeing the next major steps toward full software definition in emerging networking and storage paradigms.

Software defined networking (SDN)

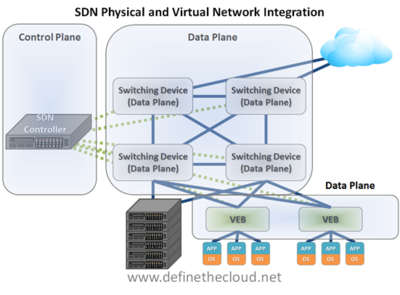

Legacy networking equipment contains distributed intelligence: individual switches and routers make their own decisions (traffic routing and prioritization, for example), often based on static rules. These decisions are often accelerated by custom ASICs or other hardware. In an SDN model, the traffic routing and prioritization intelligence moves to a central controller that has a view of the entire network. With information from across the entire network, this central controller can automatically manage communications pathways to, for example, route around errors, or make traffic decisions based on data priority or cost.
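
To make the central-controller idea concrete, here is a minimal sketch in Python. It is not how any particular SDN product works; the link table, the node names, and the `shortest_path` helper are all invented for illustration. It assumes the controller holds a global table of link costs and simply recomputes the cheapest path when a link is reported as failed.

```python
import heapq


def shortest_path(links, src, dst, failed=frozenset()):
    """Cheapest path over a global link table (Dijkstra's algorithm).

    links maps (node_a, node_b) -> cost and is treated as bidirectional.
    failed is a set of links the (hypothetical) controller knows are down.
    Returns (total_cost, path) or None if dst is unreachable.
    """
    graph = {}
    for (a, b), cost in links.items():
        if (a, b) in failed or (b, a) in failed:
            continue  # skip links reported as down
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))

    queue = [(0, src, [src])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, link_cost in graph.get(node, []):
            if neighbor not in visited:
                heapq.heappush(queue, (cost + link_cost, neighbor, path + [neighbor]))
    return None


# Hypothetical four-switch topology with two paths from A to D.
LINKS = {("A", "B"): 1, ("B", "D"): 1, ("A", "C"): 2, ("C", "D"): 2}

normal = shortest_path(LINKS, "A", "D")
rerouted = shortest_path(LINKS, "A", "D", failed={("B", "D")})
```

With the B-D link healthy, traffic takes the cheaper A-B-D path; once that link is marked failed, the same call returns the A-C-D path. The point is only that a single component with network-wide knowledge can make this decision, where individual switches with static rules could not.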

I’m not going to go in depth into how SDN works in this article, since I actually wrote about this very topic in this Wikibon article.

Software defined storage (SDS)

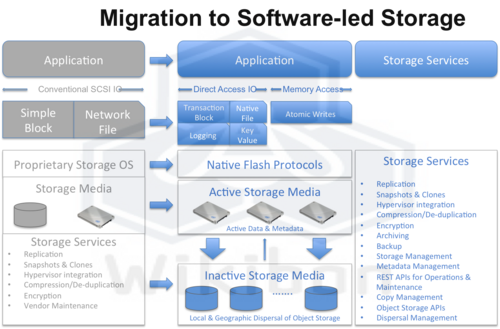

Storage is the third foundational component of the data center and is also being software-enabled in a number of ways in order to help realize a full SDDC vision. A lot of really smart people at companies such as Nexenta, Nutanix, Simplivity, and others, as well as many companies claiming that they are “SDS”, are working on this. As is to be expected, the terminology is sometimes watered down by overuse, but here, we’re talking not about pseudo-SDS but the real thing.

In a software-defined storage world, as is the case with servers and networking, abstraction is the key enabler of the service. However, storage introduces issues with ensuring that both capacity and performance goals are met. Storage has become a significant challenge for many organizations as they struggle to adapt legacy systems to meet emerging needs. This is primarily due to what has become known as the “I/O blender,” in which a mix of various kinds of storage I/O patterns is conglomerated into a single stream that must be effectively serviced by storage.
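
The “I/O blender” effect is easy to see in a toy model. The sketch below assumes four virtual machines that each read their own region of a shared array strictly sequentially, and a hypervisor that interleaves their requests round-robin. The block addresses, the round-robin policy, and the helper names are illustrative assumptions, not measurements from a real system.

```python
from itertools import chain, zip_longest


def vm_stream(base, length):
    """Block addresses for one VM reading `length` blocks sequentially from `base`."""
    return [base + i for i in range(length)]


def blend(streams):
    """Round-robin interleave of per-VM streams, as seen by the shared array."""
    merged = chain.from_iterable(zip_longest(*streams))
    return [addr for addr in merged if addr is not None]


def sequential_ratio(addresses):
    """Fraction of requests that hit the block immediately after the previous one."""
    if len(addresses) < 2:
        return 1.0
    hits = sum(1 for prev, cur in zip(addresses, addresses[1:]) if cur == prev + 1)
    return hits / (len(addresses) - 1)


# Four hypothetical VMs, each perfectly sequential within its own region.
streams = [vm_stream(base, 100) for base in (0, 10_000, 20_000, 30_000)]

per_vm = [sequential_ratio(s) for s in streams]
blended = sequential_ratio(blend(streams))
```

Each VM's own stream is fully sequential, yet in the blended stream no request follows its predecessor on disk. In this simplified model, the array sees what looks like a purely random workload even though every guest is behaving well.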

Wikibon’s David Floyer wrote an in-depth overview of software-led storage that provides a look at everything you ever wanted to know about the topic but were afraid to ask.

The eventual impact

Today, we’re only seeing the initial benefits of what will eventually be the full SDDC spectrum, particularly as these data centers are combined with hybrid cloud services. As mentioned, when conditions warrant, the management systems overseeing the hypervisor abstraction layer can shift workloads to other hosts.

As time goes on, expect to see more and deeper integration with individual applications. So, an application will be able to communicate directly with the data center management layer and provide to that layer a list of needs with regard to networking, storage, and compute. Or, the management layer will have enough insight into what is happening right now in the environment to, for example, automatically provision new storage when needed or create a specialized network route to handle traffic. The data center will be able to self-heal around issues in ways that require manual intervention today due to the rigid nature of hardware and the lack of centralized intelligence.
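
As a rough illustration of an application handing its needs to the management layer, consider the following sketch. The `Needs` structure, its field names, and the `shortfall` helper are hypothetical; no existing orchestration API is implied. The idea is simply that a declared request can be compared against what is free, leaving the management layer with a list of what it would have to provision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Needs:
    """A hypothetical declaration of compute, storage, and network requirements."""
    vcpus: int
    storage_gb: int
    network_mbps: int


def shortfall(needs, available):
    """Amount of each resource that would have to be provisioned to satisfy `needs`."""
    return {
        name: max(0, getattr(needs, name) - getattr(available, name))
        for name in ("vcpus", "storage_gb", "network_mbps")
    }


# What the application asks for versus what is currently free (made-up numbers).
request = Needs(vcpus=8, storage_gb=500, network_mbps=1000)
free = Needs(vcpus=16, storage_gb=200, network_mbps=1000)

gaps = shortfall(request, free)
```

Here compute and networking are already covered, so the only action left for the management layer is to provision 300 GB of additional storage.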

SDDC and the hybrid cloud will eventually be very closely related services as organizations start to trust the cloud more. Orchestration and automation systems will understand the full lifecycle of an application, including when it hits peaks and valleys from a resource need standpoint. For example, suppose that an application is created to support a specific, high volume marketing effort. The management layer will understand the resource need and will shift appropriate workloads to the hybrid cloud partner when scale needs outstrip resource supply in the private data center. This will be completely enabled by the ability of software to manage all three resource silos – compute, networking, and storage.
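
The bursting behavior described above can be sketched in a few lines. This assumes a single, abstract “capacity unit” that stands in for compute, networking, and storage together, and a made-up hourly demand curve for the marketing campaign; the `place` function is a hypothetical stand-in for whatever policy a real orchestration layer would apply.

```python
def place(demand_by_hour, private_capacity):
    """Split each hour's demand between the private data center and a cloud partner.

    Demand is served locally up to `private_capacity`; only the excess bursts out.
    """
    plan = []
    for demand in demand_by_hour:
        local = min(demand, private_capacity)
        plan.append({"private": local, "cloud": demand - local})
    return plan


# Hypothetical campaign: demand ramps up, peaks above local capacity, then falls.
demand = [40, 60, 120, 150, 80]
plan = place(demand, private_capacity=100)
```

Only the two peak hours spill over to the cloud partner (20 and 50 units respectively); the rest of the time the workload stays entirely in the private data center.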

This automation will come at the hands of more comprehensive data center orchestration technologies. For CIOs, this new level of orchestration and integration will require the tearing down of any remaining walls that may exist between resource-focused departments in IT. More knowledge will need to be integrated into a single team – or person – to create this centralized construct, but the end result will enable CIOs to provide business services without many of today’s constraints.

Action Item: CIOs don’t necessarily need to jump on the SDDC bandwagon right now, particularly since there is still a great deal of uncertainty. However, this is an area that CIOs and data center architects should carefully study for future replacement cycles and determine whether and how this paradigm will affect existing workloads and how it may be of benefit to the organization.
