Executive Summary

All-flash arrays use aggressive 6:1 inline data reduction (DRe) techniques. As a result, the cost of all-flash storage is reduced from $15,000/terabyte to $2,500/terabyte, very close to the cost of traditional arrays. All-flash arrays perform far better than traditional storage arrays, and their support staff are far more productive. Almost all the time DBAs and storage administrators spend managing traditional storage arrays can be eliminated by all-flash arrays.

Tier 1 storage array vendors in particular have traditionally offered great performance and great availability. The only way to improve traditional storage arrays to compete with the performance demands of modern applications is to increase the percentage of flash shipped. However, the data reduction techniques applied by traditional storage array vendors are either nonexistent or of poor quality. The cost of flash on traditional storage arrays is at least four times higher than the equivalent flash on all-flash arrays.

Therefore, applying state-of-the-art data reduction techniques to the flash portion of traditional storage arrays is a business imperative for all traditional storage array vendors, both for future sales and for protection of their installed base.

The questions addressed in this Wikibon research are whether modern inline DRe techniques can be applied to legacy storage arrays, how they should be applied, and the business case for deploying them. To illustrate the research, Wikibon looked at a case study of an application where a new storage array with 500TB of disk and 35TB of flash had been deployed nine months earlier, but the required performance is much higher than originally forecast. The DBAs and storage administrators believe that an additional 105TB of flash is required. Three options to meet the performance requirement are evaluated:

- Increasing the amount of flash on the traditional array;

- Replacing the traditional array with an all-flash array;

- Maintaining the same flash on the traditional array and using a DRe appliance to provide a logical five times increase in effective flash storage to the application.

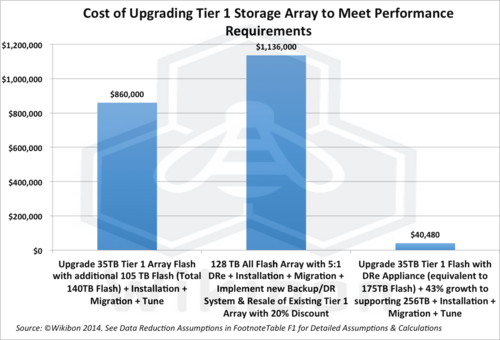

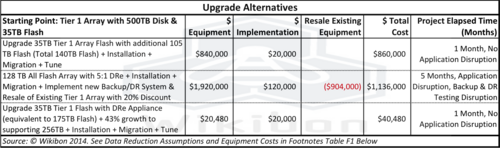

The results are shown in Figure 1. By taking the array as is and using a DRe appliance, the amount of flash storage seen by the application can be increased by a factor of five, from 35TB to 175TB. Wikibon estimates the cost of the appliance (based on $/GB used within an appliance) and deployment to be about $40,000. This is a low-risk, low-cost solution that can be installed with no application outage and no change to the current storage array backup and disaster recovery procedures.

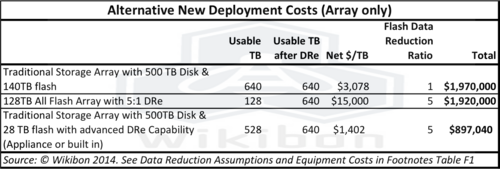

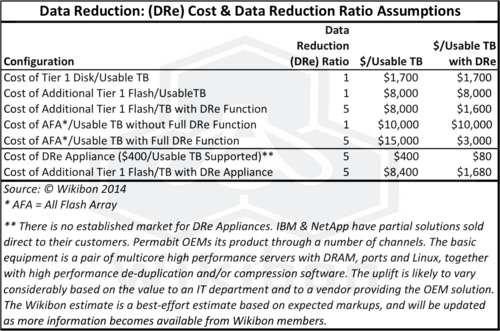

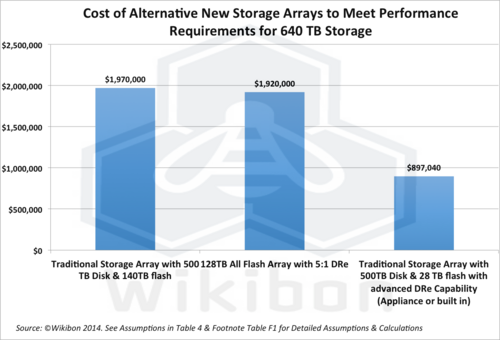

Clearly the original storage array was not configured correctly. Another question to ask is which options should have been considered to meet the true performance requirements from the beginning. Figure 2 below compares the costs of an all-flash array, a traditional storage array, and a traditional storage array with advanced DRe techniques applied to the flash. The impact of DRe is to reduce the cost of a traditional storage array to less than half the cost of the alternatives. This does not imply that all-flash arrays cannot be justified. The details of the case study below point out that in many cases the AFA can simplify storage management and reduce the number of copies of data required.

Source: © Wikibon 2014

See Data Reduction Assumptions and Equipment Costs in Footnotes Table F1 Below, together with data from Table 4 below

Wikibon continues to believe that all active data will migrate to flash. What is clear is that traditional storage arrays can be given a few extra years of life by providing advanced DRe techniques for the flash component. Wikibon’s research finds that inline data reduction appliances offer a powerful non-disruptive upgrade to installed legacy storage arrays and should be focused on improving flash storage on those arrays. Storage executives should ask their legacy vendors when this technology will be available on their installed storage arrays, and should not invest further in any array type that supports neither an inline data reduction appliance nor the equivalent function built into the legacy storage array controllers.

The only current DRe appliance vendor is Permabit, which recently announced its SANblox OEM solution. Wikibon members believe that Permabit should increase the number of channel partners that OEM this box to include resellers and VARs, to increase the pressure on traditional vendors to provide state-of-the-art DRe solutions for the flash component of traditional storage arrays.

Introduction to Data Reduction (DRe)

De-duplication techniques have been around for a long time on traditional storage arrays. However, they have never gotten much traction. Backup appliances such as EMC’s Data Domain have very efficient inline de-duplication, but for general purpose storage arrays de-duplication has for the most part not been inline (everything completed while the data is in flight). Instead, capacity is sized for the original file, and capacity reduction is done as a low-priority batch job. Sometimes only file-level “single-instance” de-duplication is executed on exact copies of files.
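The difference between inline and post-process de-duplication can be sketched in a few lines. The following is a minimal illustration only, assuming fixed 4K blocks and SHA-256 fingerprints; the class name `InlineDedupStore` and its methods are hypothetical and do not represent any vendor's implementation.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed fixed block size, for illustration only


class InlineDedupStore:
    """Toy block store that de-duplicates each block before it is stored."""

    def __init__(self):
        self.blocks = {}   # fingerprint -> block contents (stored once)
        self.volume = []   # logical block order, recorded as fingerprints

    def write(self, data: bytes) -> None:
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE]
            fingerprint = hashlib.sha256(block).hexdigest()
            # Inline: the duplicate check happens while the data is in
            # flight, so a repeated block never consumes capacity.
            if fingerprint not in self.blocks:
                self.blocks[fingerprint] = block
            self.volume.append(fingerprint)

    def read(self) -> bytes:
        return b"".join(self.blocks[fp] for fp in self.volume)

    def reduction_ratio(self) -> float:
        logical = sum(len(self.blocks[fp]) for fp in self.volume)
        physical = sum(len(block) for block in self.blocks.values())
        return logical / physical


store = InlineDedupStore()
payload = (b"A" * BLOCK_SIZE) * 3 + (b"B" * BLOCK_SIZE) * 2 + b"C" * BLOCK_SIZE
store.write(payload)
print(f"logical blocks: {len(store.volume)}, "
      f"stored blocks: {len(store.blocks)}, "
      f"ratio: {store.reduction_ratio():.1f}:1")
```

A post-process design would instead store every block at full size first and run the fingerprint comparison later as a batch job, which is why capacity has to be sized for the original data.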

Compression is a well-understood technology that takes significant processor resources to execute. Many applications already include compression, e.g., image, voice and video recordings, which are a major portion of the growth of unstructured data. Trying to compress already compressed data can be counterproductive! There is always a trade-off in compression between effort to execute, effort to restore, and the compression ratio.
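The point about already compressed data is easy to demonstrate. The sketch below uses Python's standard `zlib` module; the seeded pseudo-random bytes are an assumption standing in for compressed media, which looks statistically random to a general-purpose compressor.

```python
import random
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Return original size divided by zlib-compressed size."""
    return len(data) / len(zlib.compress(data, level))


# Repetitive, text-like data: compresses well.
text_like = b"customer_id,order_id,status,amount\n" * 2000

# Seeded pseudo-random bytes: a stand-in (assumption) for image, voice or
# video data that has already been compressed by the application.
rng = random.Random(42)
media_like = bytes(rng.getrandbits(8) for _ in range(64 * 1024))

text_ratio = compression_ratio(text_like)
media_ratio = compression_ratio(media_like)
print(f"text-like data:  {text_ratio:.1f}:1")
print(f"media-like data: {media_ratio:.3f}:1")
```

The media-like input gains nothing from the extra pass, yet it still costs processor cycles, which is the trade-off described above.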

Data reduction on data held on hard disk drives reduces the amount of data transferred but does not usually increase data access density to the disk. In fact, it usually decreases it. Data access density is usually the primary constraint of hard disks, and therefore reducing the data on the disk does not always allow more data to be stored on it. Data access density is decreasing over time on hard drives as drive capacity increases. Storage admins have learned the hard way about the challenges and overheads of data reduction and have shied away from it.

However, flash storage is completely different. Flash storage has high data access density, with data access coming out of its ears, and flash data access density is improving at the same speed as flash density and flash costs. The all-flash array (AFA) vendors have been aggressively using DRe techniques because flash is a more expensive medium, and it makes sense to use compute cycles to reduce storage costs.
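The economics follow directly from the reduction ratio. As a minimal sketch, using the $15,000/terabyte raw flash cost and 6:1 ratio quoted in the Executive Summary (the function name `effective_cost_per_tb` is illustrative):

```python
def effective_cost_per_tb(raw_cost_per_tb: float, reduction_ratio: float) -> float:
    """Cost per terabyte as seen by the application after data reduction."""
    if reduction_ratio <= 0:
        raise ValueError("reduction_ratio must be positive")
    return raw_cost_per_tb / reduction_ratio


RAW_FLASH_COST_PER_TB = 15_000  # figure quoted in the Executive Summary

for ratio in (1, 5, 6):
    cost = effective_cost_per_tb(RAW_FLASH_COST_PER_TB, ratio)
    print(f"{ratio}:1 reduction -> ${cost:,.0f} per effective TB")
```

At 6:1 this reproduces the $2,500/terabyte figure; at the more conservative 5:1 used later in the case study, the effective cost would be $3,000/terabyte.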

This research addresses these questions:

- Can modern inline DRe techniques be applied to legacy storage arrays?

- If so, how should they be applied, what DRe strategies should be deployed?

- What is the business case for deploying DRe?

A case study is used to illustrate the findings.

Case Study - The Problem

Application response time is a growing problem. The application team tells the storage admin that the storage system is the problem, and the storage admin says a combination of increased transaction rate and a new application software release is the problem. The business sponsor is telling the CIO, “A plague on all your houses”.

An all-flash-array (AFA) salesman is selling the DBA and application team on a total flash-only array. The message is simple - a “pure” flash array solves all the application performance problems and is cheaper than a properly configured EMC traditional storage array. Why? Because the all-flash array can de-duplicate and compress the data and can achieve 6:1 reduction in actual gigabytes required.

The AFA salesman offered his array as a better faster solution just nine months prior. However, IT went with the traditional tier 1 storage array instead, giving the salesman the opportunity to say “I told you so - why did you waste your money upgrading the past? You should have sold the existing tier 1 array while you could and then replaced it with a pure flash array. Join the future and get real work done.”

Case Study Problem Analysis & Solution - Current System

- The application is complex with a large number of configurable modules giving very high business value to the sponsoring line-of-business;

- The storage array supporting the application is 535TB in size, including 500TB of disk drives and 35TB in flash;

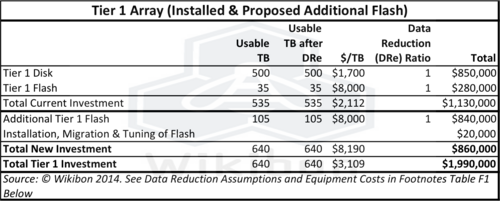

- The cost of the current tier 1 storage array was $1.13 million (see Table 1);

- The Read/Write ratio is 70:30;

- The IOPS Max is about 50,000;

- IO response times are very erratic, with an average of 12ms, but many IOs over 100ms and some over 250ms;

- The application IO and transaction rates have grown like Topsy;

- The previous and all future application releases are expected to include greater functionality and more database calls per transaction;

- The system is business critical, utilizing tier 1 storage with a sophisticated replication and recovery system designed for very low RPO & RTO;

- The server performance has been enhanced with tweaks such as additional server DRAM;

- The storage system is now the bottleneck for both read & write IO;

- The DBA and storage admin are practically living together; things improve for a few days then get even worse;

- Putting the additional flash drives on the server will break the current recovery system;

- The current storage array is a tiered system with flash, high-speed SAS drives and higher capacity drives;

- The working set sizes of the application modules are very large;

- CPU wait time is high on the servers;

- Less than 50% of IOs are being serviced by flash drives;

- The current storage array has no de-duplication or compression feature that will work in a high performance high availability tier 1 environment;

- The storage admin and DBA say they need two-to-three times as much flash for all the critical application tables;

- Adding additional flash is a major cost upgrade to the existing storage array of $840,000 for additional tier 1 flash. The costs of installation, migration of data and system tuning are estimated at $20,000 over a one month period.

- The DBA and application team are uncertain how much the additional flash will improve IO response time and total throughput;

- The best guess is that adding three times the existing flash (105TB) will raise the hit rate on flash to about 90%;

- If this is achieved, the DBA and application team believes that performance should be stable for this release and the next.
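The reasoning behind the flash upgrade can be sketched as a simple weighted average of service times. The flash and disk latencies below are hypothetical placeholders, not measurements from the case study; only the hit rates (under 50% today, about 90% targeted), the $840,000 upgrade price, the $20,000 implementation cost and the 105TB capacity come from the list above.

```python
def average_io_time_ms(flash_hit_rate: float, flash_ms: float, disk_ms: float) -> float:
    """Average IO service time when a fraction of IOs is served from flash."""
    return flash_hit_rate * flash_ms + (1 - flash_hit_rate) * disk_ms


FLASH_MS = 1.0   # hypothetical flash service time
DISK_MS = 20.0   # hypothetical disk service time under load

# 0.5 is an upper bound on today's hit rate; 0.9 is the team's best guess.
for hit_rate in (0.5, 0.9):
    avg = average_io_time_ms(hit_rate, FLASH_MS, DISK_MS)
    print(f"flash hit rate {hit_rate:.0%}: average IO time {avg:.1f} ms")

# Cost of the upgrade, from the figures in the list above.
FLASH_UPGRADE_USD = 840_000
IMPLEMENTATION_USD = 20_000
ADDED_FLASH_TB = 105

total_upgrade_usd = FLASH_UPGRADE_USD + IMPLEMENTATION_USD
flash_usd_per_tb = FLASH_UPGRADE_USD / ADDED_FLASH_TB
print(f"total upgrade cost: ${total_upgrade_usd:,}")
print(f"additional tier 1 flash: ${flash_usd_per_tb:,.0f} per TB")
```

The model ignores queuing, which is why real response times are far more erratic than a single average suggests; it is only meant to show why the hit rate dominates the outcome.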

Case Study Alternative Solution - All Flash Array

The “pure” all-flash array system is proposed as a replacement for the existing tier 1 storage array.

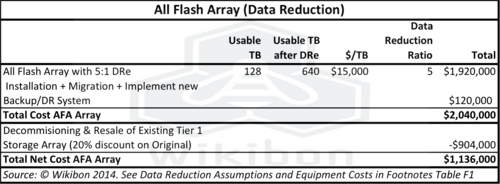

- The 6:1 reduction ratio looks reasonable overall. For application-heavy, mission-critical workloads, more would be expected (in general) from compression than from de-duplication. The normal assumptions are 3x from de-duplication and 2x from compression. Perhaps 6:1 can be achieved for this application, perhaps not. For this application it is agreed with some confidence that 2x could be achieved from de-duplication and 2.5x from compression, for an overall data reduction ratio of 5:1 (see Footnotes);

- The evidence-based approach to determining potential benefits is a welcome change in approach from the wild-west claims of 50:1 data reduction of a few years ago;

- The transfer of the total application to a new all-flash storage array will mean the design and testing of a completely new recovery system running in parallel to the current system;

- The replication system on the array and the management software ecosystem are untested for very high availability systems;

- The costs of AFA installation, migration of data to the AFA, implementation and parallel running of a new replication, backup and disaster recovery system are estimated as $120,000 with a four-month elapsed time.

- The resale value of the existing tier 1 storage array after decommissioning costs and loss of value is estimated as the original cost of the array ($1,130,000) less a 20% discount, or $904,000.
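The arithmetic in the list above can be checked with a short sketch. It assumes, as the text does, that de-duplication and compression ratios multiply; the function names are illustrative.

```python
def overall_reduction(dedup_ratio: float, compression_ratio: float) -> float:
    """Combined data reduction when compression is applied to de-duplicated data."""
    return dedup_ratio * compression_ratio


def resale_value(original_cost_usd: int, discount_pct: int) -> float:
    """Resale estimate as original cost less a percentage discount."""
    return original_cost_usd * (100 - discount_pct) / 100


print(f"normal assumption: {overall_reduction(3, 2):g}:1")
print(f"this application:  {overall_reduction(2, 2.5):g}:1")
print(f"tier 1 array resale estimate: ${resale_value(1_130_000, 20):,.0f}")
```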

An unexpected additional budget requirement of about $2 million, together with a difficult $0.9 million resale of equipment, is not an easy sell for IT to an already skeptical line-of-business executive, particularly for solutions that are not ideal and carry risk of system and personal failure. IT needs an alternative solution.

Case Study - Can Data Reduction Appliances Help?

The benefit of both solutions in the previous section is that all the storage is available to be managed and optimized under a single storage array. Three alternative appliance approaches are investigated for applying data reduction techniques to the relatively expensive but faster flash storage of a legacy storage array. These are the IBM SVC, the NetApp V-Series & a Data Reduction Appliance.

Using IBM SAN Volume Controller (SVC) to Virtualize Flash Storage with Data Reduction

- Virtualizing disk-based storage has mixed results especially when high performance is required, as disks in general have very low access density, and data placement needs a physical understanding of the disks;

- Flash storage has high access density, making storage virtualization techniques easier to apply with less storage admin time for monitoring;

- The IBM SVC is by far the most widely deployed storage virtualization platform, eclipsing deployments from EMC’s VPLEX, Hitachi’s USP/VSP Series and NetApp V-Series;

- The IBM SVC using IBM’s real-time compression (from the acquisition of Storwize) can provide a DRe ratio of 2:1 or better inline. A 2.5:1 DRe ratio is assumed;

- The IBM SVC does not support de-duplication, although IBM has indicated it is “working on it”;

- The IBM SVC virtualizes the data attached to it and provides its own set of data services to the virtualized data;

- The key data to be compressed would either have to be:

- Migrated to IBM flash storage behind the IBM SVC (sold combined with the SVC by IBM as the FlashSystem V840) - this would make the flash data independent of the disk data on the tier 1 storage array and make application-consistent snapshots, replication and backups very difficult to implement;

- OR the tier 1 storage array would have to be virtualized behind the SVC (this would enable consistent replication using consistency groups, which is important for aggressive RPO/RTO SLAs);

- Either way, the SVC would be responsible for the data services on the data behind it, including snapshot and replication services for the data;

- The SVC replication services are well established, but not in general used to replace Tier-1 storage array performance, functionality and levels of IBM service.

- The IBM SVC is not considered a viable solution for this application environment.

Using NetApp V-Series to Virtualize Flash Storage with Data Reduction

- NetApp V-Series virtualizes storage and uses NetApp’s ONTAP storage services;

- The NetApp V-Series using compression can provide a DRe ratio of 2:1 or better inline;

- NetApp only claims a 35% data reduction or more;

- The NetApp V-Series supports post-processing de-duplication - a fingerprint of each 4K block is recorded, and a batch post-process then reduces the data. However, this de-duplication feature is only supported on NetApp disk arrays;

- The NetApp V-Series virtualizes the data attached to it, and provides data services for the virtualized data;

- The key storage to be data reduced would either have to be:

- Migrated to NetApp flash storage behind the NetApp V-Series - this would make the flash data independent of the disk data on the tier 1 storage array and make application-consistent snapshots, replication and backups very difficult;

- OR the tier 1 storage array would have to be virtualized behind the NetApp V-Series (this would enable consistent replication using consistency groups, which is important for aggressive RPO/RTO SLAs);

- Either way, the V-Series would be responsible for the data services on the data behind it, including snapshot and replication services for the data;

- The NetApp replication services are well established but use a fundamentally different architecture, not in general used to either replace or mix with Tier-1 storage array functionality.

- The NetApp V-series is not considered a viable solution for this application environment.

Using a DRe Appliance to De-duplicate & Compress Flash Data

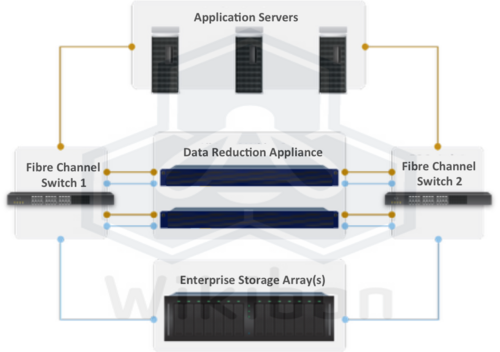

An alternative solution is a DRe Appliance that sits in the storage network in front of the array, as shown in Figure 3 below. An example is an OEM version of a DRe Appliance (the SANblox from Permabit) configured to support the existing flash with de-duplication and compression, without having to purchase any additional flash. The advantages of this approach are:

- The existing 35TB of flash is logically increased to 175TB of flash (5:1), an increase of 140TB;

- The existing backup, replication and recovery procedures are not changed at all - this reduces the cost and elapsed time of implementation to 1 month, and significantly reduces the likely impact of an outage;

- The cost of the solution is far lower than alternatives (see Table 3 below);

- The solution is easily extensible to additional flash or hard drive storage to increase capacity;

- The performance is sufficient to meet the requirements of three times the existing flash (in theory it achieves an additional four times the flash capacity), although not as high as an AFA solution;

- The implementation requires no application outage, as it sits in the FC channel network as shown in Figure 3 below;

- The risks of this solution are:

- The access density to the 35TB of existing flash may not be sufficient to support the increased IO activity to the flash. In theory this should be sufficient, but this is not yet proven;

- An OEM version of the Permabit SANblox may not be available for the tier 1 storage array in trouble;

- The team considered this approach a viable and low cost option.
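The capacity gain and the access-density risk described above can be put side by side in a short sketch. The 35TB, 5:1, 50,000 IOPS and 90% figures come from the case study; treating 90% of peak IOPS as landing on flash is an assumption made here purely for illustration.

```python
PHYSICAL_FLASH_TB = 35
REDUCTION_RATIO = 5          # 2x de-duplication times 2.5x compression
MAX_IOPS = 50_000            # approximate peak from the case study
TARGET_FLASH_HIT_RATE = 0.90 # assumed share of IOs served by flash

logical_flash_tb = PHYSICAL_FLASH_TB * REDUCTION_RATIO
added_flash_tb = logical_flash_tb - PHYSICAL_FLASH_TB
added_multiple = added_flash_tb / PHYSICAL_FLASH_TB

# All IO addressed to the logical capacity lands on the same physical flash,
# so the physical devices see REDUCTION_RATIO times the IO per terabyte.
flash_iops = MAX_IOPS * TARGET_FLASH_HIT_RATE
iops_per_logical_tb = flash_iops / logical_flash_tb
iops_per_physical_tb = flash_iops / PHYSICAL_FLASH_TB

print(f"logical flash: {logical_flash_tb}TB (+{added_flash_tb}TB, "
      f"{added_multiple:g}x the existing flash added)")
print(f"access density seen by the application: {iops_per_logical_tb:,.0f} IOPS/TB")
print(f"access density on the physical flash:   {iops_per_physical_tb:,.0f} IOPS/TB")
```

Whether the installed flash can sustain the higher physical access density is exactly the unproven risk noted in the list.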

Case Study - Solution Alternative Comparisons & Conclusions

The challenges with both the IBM SVC and the NetApp appliances in this case study are the levels of de-duplication/compression they can offer and the fact that they cannot be offered as a "seamless" appliance within the current infrastructure. They could be added as a separate box with the flash inside. However, this would create two arrays to manage, and a separate backup/recovery & DR solution to design, implement, and merge with the existing processes and procedures. The alternative use of these appliances is to migrate all the Tier-1 workload under these boxes. While possible, this would still be migrating to a traditional array box, and neither offers the same level of functionality, performance, or service as the traditional tier 1 storage arrays. In addition, it would impose a five-month implementation period for migration and test, which would be very disruptive. Neither solution is considered viable for this application environment.

The solutions that are available are shown in Table 3. Clearly the lowest-cost and fastest solution to implement is the DRe Appliance of the type offered as an OEM box by Permabit. The least-risk solution (assuming that the flash cures the performance problems) is the addition of traditional flash. The least risk from a total business point of view is to implement the DRe Appliance focusing on the flash storage. This solution is highly likely to be successful, the only open question being whether the required flash access density can be sustained with a DRe ratio of 5:1. Additional strategies to enhance this solution would be to add a smaller amount of flash to reduce the flash access density, or in extremis the migration to an all-flash array.
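As a rough cross-check on the two options that keep the existing array, the sketch below compares cost per terabyte of additional flash seen by the application. It uses only figures quoted in this research ($840,000 plus $20,000 for 105TB of added flash; $40,480 for the DRe appliance, which adds 140TB of logical flash at 5:1). The all-flash array is omitted because its full cost breakdown is in Table 3, which is not reproduced here. The `Option` class is illustrative.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    cost_usd: float        # purchase plus implementation, from the text
    added_flash_tb: float  # additional flash capacity seen by the application

    @property
    def cost_per_added_tb(self) -> float:
        return self.cost_usd / self.added_flash_tb


options = [
    Option("Add 105TB of tier 1 flash", 840_000 + 20_000, 105),
    Option("DRe appliance on existing 35TB of flash", 40_480, 35 * 5 - 35),
]

for option in sorted(options, key=lambda o: o.cost_per_added_tb):
    print(f"{option.name}: ${option.cost_usd:,.0f} total, "
          f"${option.cost_per_added_tb:,.0f} per added TB")

cheapest = min(options, key=lambda o: o.cost_usd)
print(f"lowest total cost: {cheapest.name}")
```

The comparison covers capacity cost only; it says nothing about whether the physical flash can sustain the resulting access density.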

Of course, one question would be what the cost of alternative arrays would have been if the DRe capability had been available at the time of the original storage array purchase. Table 4 below shows the comparative costs and shows that the traditional storage array could have been proposed at half the cost of an all flash array. This analysis does not mean that all flash arrays should not be deployed or cannot be justified. In many cases the reduced cost of support staff because of the simplicity of the AFA will more than justify the all-flash approach. In addition, the greater data access density of an all flash array can mean that data that previously would have to be copied can share the same storage (e.g., data to create a data warehouse need not be copied, data to create a test suite need not be copied). Wikibon continues to believe that all active data will migrate to flash. What is clear is that traditional storage arrays can be given a few extra years of life by providing advanced DRe techniques for the flash component.

Bottom Line: The DRe Appliance approach can be installed without any disruption, extremely quickly, and can be focused on increasing the number of volumes that can be supported by flash. It does not preclude the future use of either more flash on the existing array, or of migrating to an all-flash array. The DRe appliance is by far the cheapest solution ($40,480) and will take less than a month to install, and the risk is low. The team recommends the DRe appliance.

The biggest risk to this approach is the length of time for the Permabit SANblox to go through the OEM process with each of the vendors. Wikibon believes that Permabit should either make this solution available directly to customers, and/or allow distribution of its appliances through storage distributors and VAR channels.

Action Item: Wikibon’s research finds that inline data reduction appliances offer a powerful non-disruptive upgrade to installed legacy storage arrays. They are especially powerful in improving the cost-effectiveness of flash storage on those arrays. Storage executives should ask their legacy vendors when this technology will be available on their installed storage arrays, and should not invest further in any array type that supports neither an inline data reduction appliance nor the equivalent function built into the legacy storage array controllers.

Footnotes: Assumptions for Case Study.