

Summary

To support network computing applications effectively, IT management will increasingly rely on Ethernet networks to simplify storage infrastructure, drive increased efficiencies and lower costs. Specifically, over the next decade, the Wikibon community believes more than 80% of the Global 2000 will be on a path to converge significant portions of SAN and LAN traffic onto a single fabric using 10 Gigabit Ethernet (and subsequent technology turns).

A key component of this strategy will be the exploitation of Fibre Channel over Ethernet (FCoE) as a primary storage protocol. Users should note, however, that native FC continues to have a robust roadmap independent of FCoE (e.g. 16 Gig FC), and that moving to Ethernet will face constraints (e.g. organizational inertia). Storage architects should therefore continue to exploit FC’s incremental improvements on a tactical basis for many years to come. FCoE and FC will coexist with other protocols (e.g. iSCSI).

The converged network ecosystem is evolving steadily, and after years of standards efforts, storage architects are starting to take notice. According to InfoPro, the share of organizations with FCoE in their long-term plans jumped from 9% in 2007 to 25% in 2009.

Why is FCoE Important?

For years, the main issues with Ethernet-based storage have been performance and reliability. As a result, Fibre Channel has dominated high-performance data-center storage networks. Wikibon estimates that 85% of mission-critical block-based storage applications in large organizations run over Fibre Channel, and this is not likely to change for many years. Wikibon calculations suggest that $25B in FC assets remain on the books of organizations worldwide, and this installed base will influence storage purchase decisions for the next decade.

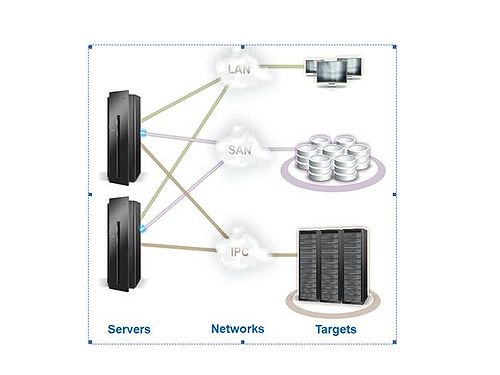

As shown in Figure 1, IT management has traditionally architected networks that separate LAN, storage, and other system-to-system traffic to accommodate the unique requirements of each network:

- LAN infrastructure supports connectivity to a very large number of users with relatively low performance and quality-of-service (QoS) requirements. If a connection becomes highly utilized, data is likely to be re-transmitted, but performance and throughput are not critical requirements.

- SAN infrastructure supports connectivity between fewer high-performance ‘nodes’ (server & storage targets) where performance requirements are high and data cannot be lost, ever. Re-transmission would create objectionable performance penalties and violate service level agreements.

- Inter-process communication (IPC) networks (e.g. system-to-system or backup servers) have very few links but extremely high performance and QoS requirements.

These three distinct network infrastructures require separate server adapters, network switches, cables, and, importantly, different management processes, increasing complexity and cost.

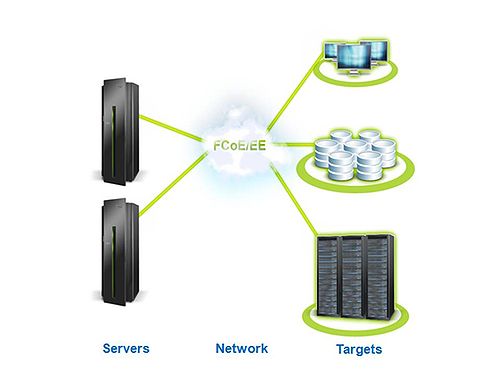

Enhancements to 10 Gigabit Ethernet, defined in the IEEE Data Center Bridging (DCB) standards (notably Priority-based Flow Control, IEEE 802.1Qbb), add a lossless option: when buffers fill, switches tell senders to pause rather than dropping frames, eliminating the need to re-transmit data and endure the resulting performance penalties. This fundamental change sets the stage to converge LAN and SAN traffic onto a single Ethernet-based network running FCoE (see Figure 2), essentially bringing the flexibility of Ethernet to SANs and the reliability of FC to Ethernet.
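The difference between classic and lossless Ethernet can be illustrated with a toy model of one congested switch queue. This is a simplified sketch, not IEEE 802.1Qbb: real Priority-based Flow Control pauses individual traffic classes and must budget headroom for frames already in flight, whereas here there is a single class and the parameter names (`line_rate`, `drain_rate`, `pause_threshold`) are illustrative assumptions.

```python
def simulate(offered, line_rate, drain_rate, buffer_size, pause_threshold=None):
    """Toy model of one congested switch queue fed by one sender.

    offered: frames the sender wants to transmit at each tick.
    line_rate: most frames the sender can put on the wire per tick.
    drain_rate: frames the congested output port forwards per tick.
    buffer_size: frames the switch queue can hold.
    pause_threshold: None models classic Ethernet (tail drop when full).
        Otherwise the switch tells the sender to pause while its queue is at or
        above this depth, a simplified stand-in for a PFC PAUSE. No frame is
        dropped as long as pause_threshold + line_rate <= buffer_size.

    Returns (delivered, dropped, queued_in_switch, waiting_at_sender).
    """
    queue = backlog = delivered = dropped = 0
    for new_frames in offered:
        backlog += new_frames
        paused = pause_threshold is not None and queue >= pause_threshold
        sent = 0 if paused else min(backlog, line_rate)
        backlog -= sent  # paused frames wait at the sender instead of being lost
        accepted = min(sent, buffer_size - queue)
        dropped += sent - accepted  # frames that found the buffer full are lost
        queue += accepted
        forwarded = min(queue, drain_rate)
        queue -= forwarded
        delivered += forwarded
    return delivered, dropped, queue, backlog


if __name__ == "__main__":
    burst = [10] * 20  # sender offers 10 frames/tick; the output drains only 4/tick
    print("classic :", simulate(burst, line_rate=10, drain_rate=4, buffer_size=32))
    print("lossless:", simulate(burst, line_rate=10, drain_rate=4, buffer_size=32,
                                pause_threshold=20))
```

Both runs deliver the same 80 frames, but the classic queue discards 92 frames that upper layers would have to re-transmit, while the pause-based queue holds excess traffic back at the sender and drops nothing.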

Three key technology enablers are at play in this scenario: the speed of 10 GigE, the lossless capability of 10 GigE, and FCoE. Combined, these technologies mean organizations can preserve their existing Fibre Channel infrastructure while essentially halving the number of adapters, switches, and cables.
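The halving claim is simple arithmetic at the server edge. The sketch below makes it concrete under a deliberately simplified, hypothetical model: each link uses its own single-port adapter, cable, and switch port, and every server has two redundant links into each fabric it must reach. The function name and the 40-server rack are illustrative assumptions.

```python
def edge_counts(servers: int, fabrics: int, links_per_server_per_fabric: int = 2) -> dict:
    """Count server-edge hardware under a hypothetical, simplified model.

    Assumes every link uses its own single-port adapter, its own cable, and its
    own switch port, and that each server has redundant links into each
    separate fabric (LAN, SAN, ...) it must reach.
    """
    links = servers * fabrics * links_per_server_per_fabric
    return {"adapters": links, "cables": links, "switch_ports": links}


# 40 servers (a hypothetical rack) reaching a LAN and an FC SAN separately...
separate = edge_counts(servers=40, fabrics=2)
# ...versus the same servers on one converged 10 GigE fabric through CNAs.
converged = edge_counts(servers=40, fabrics=1)
print(separate)   # {'adapters': 160, 'cables': 160, 'switch_ports': 160}
print(converged)  # {'adapters': 80, 'cables': 80, 'switch_ports': 80}
```

Under the same assumptions, also folding the IPC network in (`fabrics=3` before convergence) would cut the edge counts to a third rather than a half.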

How and When Will FCoE Happen?

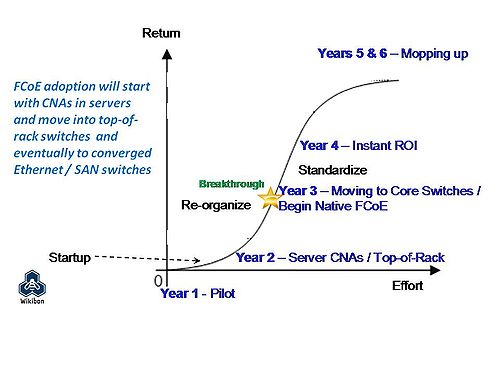

While the appeal of a single network pipe is compelling from a cost and simplicity perspective, the transition from today’s network silos to a unified network will take five to seven years once initiated. As shown in Figure 3, organizations should start with FCoE pilots and evolve converged networks over time. Initially, ROI will be poor, which will slow adoption; however, within three years of adoption, organizations will see dramatic reductions in network infrastructure complexity, and further convergence investments will pay back immediately (the breakthrough point).
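The poor-then-positive ROI curve can be expressed as a cumulative cash-flow calculation. The sketch below finds the first year in which cumulative savings cover cumulative spending; the cash-flow figures are entirely hypothetical and serve only to show the shape of the calculation.

```python
from itertools import accumulate
from typing import Optional, Sequence


def breakeven_year(net_cash_flows: Sequence[float]) -> Optional[int]:
    """Return the first year (1-based) in which cumulative net cash flow is >= 0.

    net_cash_flows[i] is savings minus spending in year i + 1. Returns None if
    the investment has not paid back within the horizon supplied.
    """
    for year, cumulative in enumerate(accumulate(net_cash_flows), start=1):
        if cumulative >= 0:
            return year
    return None


# Hypothetical figures in $K: pilots, CNAs, and new switches cost money up front;
# savings from fewer cards, cables, and switches arrive in later years.
flows = [-400, -50, 500, 600]
print(breakeven_year(flows))  # 3
```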

Specifically, discussions with Wikibon users suggest the evolution of converged networks will take the following course:

- **Startup phase** – Organizations will begin using converged network adapters (CNAs) in servers in place of separate Ethernet and SAN cards, building small converged networks in pilots. This will demonstrate that connectivity and management complexity can be reduced between the server and the top-of-rack switch, which will still have distinct connections to the SAN and Ethernet switches.

- **CNA** logic will eventually be integrated into top-of-rack switches, creating a single connection from the top-of-rack switch to the core Ethernet/SAN switches, which will be converged but will still direct traffic to separate SAN and LAN targets.

- **End-to-end convergence** will eventually take place from the server, through the top-of-rack switch, to converged Ethernet/SAN switches and out to native FCoE devices, all through a single fabric running on 10 GigE.
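The three phases can be summarized as data: each phase converges one more segment of the path. The sketch below encodes that progression and counts how many distinct physical link types (one if converged, two if LAN and FC stay separate) each phase needs along the path. The phase keys and segment names are illustrative labels, not industry terms.

```python
from enum import Enum


class Segment(Enum):
    SERVER_TO_TOR = "server to top-of-rack switch"
    TOR_TO_CORE = "top-of-rack switch to core switches"
    CORE_TO_TARGET = "core switches to storage/LAN targets"


CONVERGED = "converged"  # one lossless 10 GigE link carries LAN and FCoE traffic
SEPARATE = "separate"    # distinct Ethernet and FC links

# Illustrative labels for the three stages described in the list above.
PHASES = {
    "startup": {
        Segment.SERVER_TO_TOR: CONVERGED,
        Segment.TOR_TO_CORE: SEPARATE,
        Segment.CORE_TO_TARGET: SEPARATE,
    },
    "top_of_rack": {
        Segment.SERVER_TO_TOR: CONVERGED,
        Segment.TOR_TO_CORE: CONVERGED,
        Segment.CORE_TO_TARGET: SEPARATE,
    },
    "end_to_end": {segment: CONVERGED for segment in Segment},
}


def link_types(phase: str) -> dict:
    """Distinct physical link types needed on each segment in a given phase."""
    return {seg.value: 1 if kind == CONVERGED else 2 for seg, kind in PHASES[phase].items()}


for name in PHASES:
    print(name, sum(link_types(name).values()))
```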

The FCoE Ecosystem

The supplier ecosystem for FCoE technology has mobilized in response to pending 10 GigE adoption. As indicated, the gating technology is the CNA. These converged adapters are essentially an FC host bus adapter (HBA) and a network interface card (NIC) on a single card. CNAs combine FC and Ethernet chip sets and are necessary to enable host servers to talk FCoE.
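What "talking FCoE" means at the frame level is that a complete Fibre Channel frame rides inside an Ethernet frame marked with the FCoE EtherType (0x8906). The sketch below wraps and unwraps an FC frame following the FC-BB-5 layout as we understand it (untagged frame, version 0, Ethernet FCS left to the adapter hardware); verify field details against the standard before relying on them. The SOF/EOF values and MAC addresses in the usage lines are placeholders, not authoritative code points.

```python
import struct

FCOE_ETHERTYPE = 0x8906  # EtherType registered for FCoE


def encapsulate(dst_mac: bytes, src_mac: bytes, fc_frame: bytes, sof: int, eof: int) -> bytes:
    """Wrap a complete FC frame (FC header + payload + FC CRC) in an FCoE Ethernet frame.

    Layout assumed here (untagged; the Ethernet FCS is appended by the adapter):
      dst MAC (6) | src MAC (6) | EtherType 0x8906 (2) |
      version + reserved (13 bytes, all zero => version 0) | SOF (1) |
      FC frame | EOF (1) | reserved (3)
    """
    if len(dst_mac) != 6 or len(src_mac) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    ethernet_header = dst_mac + src_mac + struct.pack("!H", FCOE_ETHERTYPE)
    fcoe_header = bytes(13) + bytes([sof])
    fcoe_trailer = bytes([eof]) + bytes(3)
    return ethernet_header + fcoe_header + fc_frame + fcoe_trailer


def decapsulate(frame: bytes) -> tuple:
    """Inverse of encapsulate(): return (sof, fc_frame, eof)."""
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype != FCOE_ETHERTYPE:
        raise ValueError(f"not an FCoE frame (EtherType 0x{ethertype:04x})")
    sof = frame[27]  # after the 14-byte Ethernet header and 13-byte FCoE header
    eof = frame[-4]  # first byte of the 4-byte trailer
    return sof, frame[28:-4], eof


fc = bytes(36)  # placeholder FC frame contents
frame = encapsulate(b"\x02\x00\x00\x00\x00\x01", b"\x02\x00\x00\x00\x00\x02",
                    fc, sof=0x2E, eof=0x42)
print(len(frame))  # 68
```

Because a full-size FC frame is larger than the standard 1,500-byte Ethernet payload, FCoE networks are typically configured for larger ("baby jumbo") Ethernet frames.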

From a storage perspective, in order to exploit FCoE, users will need a converged switch that talks both FC and Ethernet. These devices are FCoE switches that also have pure FC ports as an option to accommodate existing FC infrastructure. Ultimately, storage targets will have native FCoE (see reference to NetApp announcement below).

The following is a brief rundown of the major suppliers in the ecosystem:

- Cards (CNAs) – Emulex and QLogic are the established and dominant players, with Brocade as a new entrant. QLogic is shipping a single-chip CNA (lower cost and energy) and has announced major CNA design wins with IBM and NetApp.

- Servers with CNAs – IBM and QLogic have jointly announced CNAs for IBM blade servers. HP has made some public statements indicating FCoE is coming, and we expect Dell and Sun will also make announcements in this area by the end of this year.

- Switches – Cisco is the dominant Ethernet switch vendor and also supplies FC switches. Brocade is the dominant FC switch supplier.

- Target devices (e.g. storage systems) – On August 11th, 2009, NetApp became the first storage systems manufacturer to announce availability of native FCoE targets. QLogic is the exclusive supplier of the CNA for NetApp’s FCoE target storage systems. EMC, the largest supplier of FC-based storage, has talked publicly about the importance of FCoE but has not yet made a formal announcement. Together with QLogic and its other ecosystem suppliers, NetApp is at the forefront of the FCoE market at this time.

- Device management software is embedded in the above devices and will be compatible with FCoE.

- SAN management software (e.g. CA, EMC, Hitachi, Symantec, etc.) will evolve. The key question here is whether SAN management and network management software will converge. In the near term the answer is probably not, and this part of the ecosystem may remain separate indefinitely.

How will the ecosystem change as a result of convergence?

A battle is looming between Ethernet and FC suppliers. On the switch side, Cisco is going after Brocade’s FC SAN base, and NetApp is extending Ethernet-based storage to attack EMC’s FC installed base. Strong FC storage players such as EMC, Hitachi, HP, and IBM will have to respond to protect their turf and try to exploit Ethernet.

A similar battle is taking place in the components business as NICs and HBAs converge. Broadcom and Intel are the two big NIC players and are undoubtedly eyeing entrance into this market (Broadcom recently made a failed bid to acquire Emulex). Emulex and QLogic are the dominant players in the FC business, and both companies are moving aggressively to exploit 10 GigE, with QLogic demonstrating early design wins with its single-chip CNA.

What is the Significance of NetApp’s FCoE Announcement?

NetApp is the first storage system vendor to announce native FCoE target devices. By using QLogic single-chip CNAs in its storage systems, NetApp is able to announce a unified, multi-protocol device (FCoE, iSCSI, NFS, CIFS), which can, in theory, dramatically reduce connectivity costs if fully deployed end-to-end.

This is an aggressive move by NetApp, a leader in Ethernet storage, to encroach on the Fibre Channel domains of traditional storage leaders such as EMC, Hitachi, IBM, and HP. NetApp’s unified storage message is strong and directly aimed at siloed infrastructure requiring different architectures (e.g. EMC’s V-Max, CLARiiON, Celerra approach).

NetApp uses a QLogic dual-port CNA at the storage target, running native FCoE, that can talk to a converged switch or a standard switch. To take full advantage (i.e. to have a converged network), customers will need a converged Ethernet switch, and NetApp is making Cisco FCoE switches such as the Nexus 5000, a top-of-rack switch, available. No vendor has announced core FCoE switches yet, so this announcement represents an early example of converged networks which, as indicated above, will be phased in over time.

The importance of this announcement is that NetApp is the first storage systems supplier that can support all major storage protocols using a single port on the same target. Competitors currently require multiple native ports, which increases expense. NetApp’s approach, if fully adopted, means less complexity, fewer cables, less power, lower costs, and investment protection. Because NetApp supports multiple protocols today, customers can use existing protocols, and, as they move to FCoE, they can keep existing storage devices.
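One port can carry all four protocols because each is distinguishable inside the frame: FCoE by its EtherType, and the IP-based protocols by their well-known TCP ports (iSCSI 3260, NFS 2049, CIFS as direct-hosted SMB on 445). The sketch below classifies untagged IPv4 or FCoE frames arriving at such a port. It is an illustration of the principle under those assumptions, not NetApp’s implementation; VLAN tags, IPv6, and NetBIOS-based CIFS are deliberately out of scope, and `ipv4_tcp_frame` is a hypothetical test helper.

```python
import struct

FCOE_ETHERTYPE = 0x8906
IPV4_ETHERTYPE = 0x0800
TCP = 6  # IP protocol number for TCP

# Well-known TCP ports; assumes CIFS runs as direct-hosted SMB on port 445.
TCP_PORTS = {3260: "iSCSI", 2049: "NFS", 445: "CIFS"}


def classify(frame: bytes) -> str:
    """Label an untagged Ethernet frame arriving on a unified storage port."""
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype == FCOE_ETHERTYPE:
        return "FCoE"
    if ethertype != IPV4_ETHERTYPE:
        return "other"  # IPv6, VLAN-tagged frames, etc. are out of scope here
    ip = frame[14:]
    if ip[9] != TCP:
        return "other"
    ip_header_len = (ip[0] & 0x0F) * 4  # IHL field counts 32-bit words
    (dst_port,) = struct.unpack("!H", ip[ip_header_len + 2:ip_header_len + 4])
    return TCP_PORTS.get(dst_port, "other")


def ipv4_tcp_frame(dst_port: int) -> bytes:
    """Build a minimal, zero-filled IPv4/TCP frame for exercising classify()."""
    ethernet = bytes(12) + struct.pack("!H", IPV4_ETHERTYPE)
    ip = bytes([0x45]) + bytes(8) + bytes([TCP]) + bytes(10)  # 20-byte header, no options
    tcp = struct.pack("!HH", 50000, dst_port) + bytes(16)
    return ethernet + ip + tcp


for port in (3260, 2049, 445, 80):
    print(port, classify(ipv4_tcp_frame(port)))
```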

The reality is that all major storage suppliers are moving in this direction; however, NetApp is first and is trying to move customers along the adoption curve sooner. In addition, its unified storage approach is unique in the industry and continues to reach further into the data center.

CIO considerations

IT management should recognize that lossless 10 Gigabit Ethernet technologies will bring changes with three impacts. Initially, cutting over to a unified network structure will bring additional expense and a slow ramp-up. Over time, substantial savings will be realized by reducing the numbers of cards, cables, and switches. Finally, network and storage organizations, which typically report to different IT management structures, will probably need to be reorganized. Wikibon practitioners in networking and storage groups have begun assessing these changes. Not surprisingly, the networking folks are taking a decidedly Ethernet approach, for example pushing an all-iSCSI direction, while storage admins with large FC installed bases are typically advocating FCoE or continued native FC.

Ultimately, Wikibon believes that, because these changes are being driven by 10 Gigabit Ethernet, network-centric management structures will predominate, with storage professionals responsible for ensuring quality-of-service and storage performance over the Ethernet network. In many organizations, CIOs should consider having storage and networking professionals report to the same direct management authority. In our view, this does not mean that iSCSI will ultimately replace FC or FCoE; rather, it means that these standards will coexist.

Action Item: While FCoE is early in the adoption phase, it will continue to grow steadily. Within three years, most new high-end data center infrastructure (hosts, switches, adapters, and storage) will support FCoE. Organizations with Fibre Channel SANs should ensure that organizational structures are in place to exploit 10Gb Ethernet and FCoE and that new server and storage gear is equipped with converged network adapters (CNAs). Such strategies will support a vision of a converged data center where the most practical path to unification is bringing together block and non-block storage infrastructure.
