Originating Author: David Floyer

The announcement by NetApp and IBM of general-purpose deduplication within a controller for file-oriented storage, rather than deduplication that only supports backup or virtual tape libraries, is a first among the major industry players. It is a sound placement of the function and will contribute to establishing a storage services infrastructure.

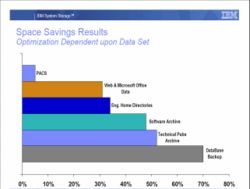

One of the challenges for CIOs and CTOs will be managing user expectations. Many vendors have proclaimed data reduction of 50:1 to 100:1. Those figures are restricted to environments where the same data is saved many times sequentially, such as virtual tape libraries and backup. Deduplication of production file systems offers more modest space reductions, in the 2:1 range, as the chart illustrates for different workloads.
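Reduction ratios and percentage savings are easy to confuse when setting expectations. As a minimal sketch (the function name `space_saved_fraction` is illustrative, not taken from any vendor tool), a ratio of N:1 frees 1 - 1/N of the original capacity:

```python
def space_saved_fraction(ratio: float) -> float:
    """Fraction of original capacity freed by a reduction ratio of ratio:1."""
    if ratio < 1:
        raise ValueError("ratio must be >= 1 (1 means no reduction)")
    return 1.0 - 1.0 / ratio


# Compare a production file system ratio with the backup-style ratios quoted above.
for ratio in (1.5, 2, 50, 100):
    print(f"{ratio}:1 -> {space_saved_fraction(ratio):.0%} of capacity freed")
```

A 2:1 ratio frees half the capacity, while 50:1 frees 98%. The gap in raw capacity recovered is therefore smaller than the headline ratios suggest.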

The following can serve as useful guidelines for getting started with deduplication:

- Start by understanding the benefits and costs of deduplication in your specific environment. Measure the data reduction, and measure the impacts on performance, particularly on reads.

- Create a deduplication pool of storage with minimal other functions running on the filer head, to minimize potential performance problems from competing filer functions.

- Consider dropping tape storage for anything other than data that must be physically moved off site. If there is no justification for keeping it on some sort of disk, it should probably be discarded.
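For the first guideline, one rough way to measure potential data reduction before committing to a product is to hash fixed-size blocks of a sample directory and count how many are unique. The sketch below is only that: it assumes fixed 4 KiB blocks and SHA-256 fingerprints, which is not necessarily how the NetApp or IBM controllers identify duplicates, and the function name `estimate_dedup_ratio` is hypothetical. It does not measure read performance, which still has to be tested on the real system.

```python
import hashlib
import os

BLOCK_SIZE = 4096  # assumed block size; actual controllers may use a different one


def estimate_dedup_ratio(root: str, block_size: int = BLOCK_SIZE) -> float:
    """Return total_blocks / unique_blocks for regular files under root."""
    seen = set()   # fingerprints of every distinct block encountered
    total = 0      # count of all blocks read
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            # Skip symlinks and anything that is not a regular file.
            if os.path.islink(path) or not os.path.isfile(path):
                continue
            try:
                with open(path, "rb") as fh:
                    while True:
                        block = fh.read(block_size)
                        if not block:
                            break
                        seen.add(hashlib.sha256(block).digest())
                        total += 1
            except OSError:
                continue  # unreadable file: leave it out of the estimate
    return total / len(seen) if seen else 1.0
```

Calling `estimate_dedup_ratio("/path/to/sample")` on a representative copy of the data returns a figure such as 2.0 for a 2:1 reduction. Holding every fingerprint in memory limits this to samples, not whole filers.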

The best candidates for deduplication are likely to be Tier 2 and Tier 3 applications with low read I/O activity and low volatility.

Action Item: IT management should assess deduplication for enterprise storage systems. However, user expectations should be managed, both for the amount of benefit (30%-50%) and for the range of applications it will suit (low volatility, low read I/O). Look hard at tape storage for long-term use and evaluate a strategy of eliminating tape except for moving data off site.