Storage Peer Incite: Notes from Wikibon’s July 26, 2011 Research Meeting

Long-term data storage presents several security challenges. One is disaster planning. The longer the data needs to be stored, the more likely the primary storage site will suffer a disaster, ranging from multiple disk failures to a regional event such as a major flood. If the data is to survive such an event, it needs to be stored in multiple locations -- the more the better, but two locations separated by at least 500 miles is a minimum.

Second, the risk of data corruption increases over time, particularly if the data needs to be encrypted or will be compressed to save on storage. In those cases the corruption of a single bit can render an entire file unrecoverable. The best solution for this is to divide the data file into numerous small, independent pieces so that the loss of a bit only impacts a portion of the data and then store multiple copies of each piece in different locations.

Third, over time technologies change, and the medium used to store the data, particularly in the case of inactive media such as tape, may become obsolete. When the time comes to read the data, there may be no machine available that can handle the format of the medium. The solution here is to store the data on active media such as hard disks and to migrate the data to new disks regularly.

The practical problem is that until recently the available solutions to these problems were expensive. Large financial traders, for instance, have adopted a three-data center strategy, with two primary centers close enough to each other to allow complete mirroring of data between them and a third far enough away -- usually across a continent or ocean -- that it will not be involved in a regional disaster at the location of one of the two primaries.

Recently a new solution, erasure coding, has appeared in the cloud. This is based on a mathematical construction and involves dividing the data into a large number of small segments, which are then encoded with redundancy and spread over multiple locations and in some cases multiple IaaS providers. The result, according to the math, is a virtual guarantee of complete data survival over very long periods of time. The one trade-off is that recovery will involve small lag times. This is therefore not suitable for currency trading, but it is a strong solution for the rest of us.

The articles in this newsletter take a look at the various aspects of this approach, which can solve the problem of secure data archiving, particularly of large files such as medical images, for long periods while providing on-demand access at a reasonable cost. G. Berton Latamore, Editor

Scaling Storage for the Cloud: Is Traditional RAID Running out of Gas?

Cloud computing practitioners led by Amazon, Facebook, Google, Zynga and others have architected object-based storage solutions that typically do not involve traditional storage networks from enterprise suppliers such as EMC, NetApp, IBM, and HP. Rather these Web giants are aggressively using commodity disk drives and writing software that uses new methods of data protection. This class of storage is designed to meet new requirements, specifically:

- Petabyte versus terabyte scale,

- Hundreds of millions versus thousands of users,

- Hundreds of thousands of network nodes versus thousands of servers,

- Self-healing versus backup and restore,

- Automated policy-based versus administrator controlled,

- Dirt cheap and simple to operate.

Traditionally, enterprises have justified a premium for RAID arrays and will often pay 10X or more on a per GB basis relative to raw drive costs. Practitioners are finding that traditional array-based solutions cannot meet the cost and data integrity requirements for cloud computing generally and cloud archiving specifically. New methods of protecting data using erasure coding rather than replication are emerging that enable organizations to dramatically lower the premiums paid for enterprise-class storage – from a 10X delta to perhaps as low as 3X.

These were the conclusions of the Wikibon community based on a Peer Incite Research Meeting with Justin Stottlemyer, Director of Storage Architecture at Shutterfly. Specifically, rapidly increasing disk drive capacities are elongating RAID rebuild times and exposing organizations to data loss. New methods are emerging to store data that provide better data protection and dramatically lower costs, at scale.

Shutterfly: Another Example of IT Consumerization

Shutterfly is a Web-based consumer publishing service that allows users to store digital images and print customized “photo books” and other stationery using these images. Shutterfly is a company with more than $300M in annual revenues, roughly one half of which is derived in the December holiday quarter, and a market value of around $2B (as of mid-2011). Its main competitors are services such as Snapfish (HP) and Kodak’s EasyShare.

A quick scan of Shutterfly’s Web site underscores its storage challenges. Specifically, the site offers:

- Free and unlimited picture storage,

- Perpetual archiving of these pictures – photos are never deleted,

- Secure image storage at full resolution.

Stottlemyer told the Wikibon audience that when he joined Shutterfly, 18 months ago, the organization managed 19 petabytes of raw storage and created between 7 and 30 TB daily. Costs were increasing dramatically, and drive failure rates were escalating. In that timeframe, Shutterfly had a near catastrophe when a 2PB array failed. The firm lost access to 172 drives, and while no data was lost, it needed three days to calculate parity and three weeks to get dual parity back across the entire system.

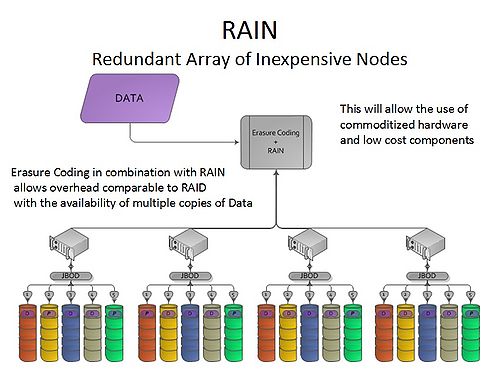

This catalyzed action, and after an extensive study of requirements and potential solutions, Shutterfly settled on an erasure code-based approach using a RAIN architecture (Redundant Arrays of Inexpensive Nodes). This strategy allows Shutterfly to leverage a commodity-style computing methodology and implement an N + M architecture (e.g. 10+6 or 8+5 versus a more limited RAID system of N+1, for example).
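
The trade-off in an N + M layout can be made concrete with a little arithmetic. The following is a minimal sketch, not Shutterfly's or Cleversafe's implementation: the `Layout` class is hypothetical, and the 8+1 width chosen to stand in for "N+1" RAID is an assumption for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    """An N + M layout: N data fragments plus M redundancy fragments."""
    data: int        # N
    redundancy: int  # M

    def raw_per_usable(self) -> float:
        # Raw capacity consumed for every unit of usable capacity.
        return (self.data + self.redundancy) / self.data

    def failures_tolerated(self) -> int:
        # Up to M fragments (drives or nodes) can be lost without data loss.
        return self.redundancy


layouts = {
    "10+6": Layout(10, 6),
    "8+5": Layout(8, 5),
    "8+1": Layout(8, 1),  # assumed width for an N+1 RAID group
}

for name, layout in layouts.items():
    print(f"{name}: {layout.raw_per_usable():.3f}x raw capacity, "
          f"survives {layout.failures_tolerated()} simultaneous failures")
```

The wider redundancy (M = 5 or 6) costs more raw capacity than N+1, but it is what lets the system keep running through several overlapping drive or node failures.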

The firm looked at several alternatives, including open source projects from UC Santa Cruz, Tahoe-LAFS, Amplidata and others. Shutterfly settled on a Cleversafe-based system using erasure coding and RAIN. Cleversafe was chosen because it was more mature and appeared to be the most appropriate system for Shutterfly’s needs. The Shutterfly system today takes in a write from a customer to a single array, which gets check-summed and validated through the application. This data is also written to a second array and similarly validated.

A key requirement for Shutterfly and one key consideration for users is the need to write software that can talk to multiple databases and protect metadata and maintain consistency. Shutterfly had to develop some secret sauce that maintains metadata in a traditional Oracle store as well as the file system and provides access to metadata from the application. This is not a storage problem per se. Rather it’s an architectural and database issue.

Key Data Points

- Shutterfly has grown from 19 PBs raw to 30 PBs in the last 18 months.

- The company is seeing 40% growth in capacity per annum.

- The increase in image size is tracking at 25% annually.

- There is no end in sight to these growth rates.

- Shutterfly typically experiences 1-2 drive failures daily.

Some Metrics on RAID Rebuild Times

- For a RAID level 6 array a rebuild on a 2TB drive could take anywhere from 50 hours for an idle array up to two weeks for a busy array.

- Each parity bit added at the back-end increases system reliability by 100X – so a 16+4 architecture will yield a 10,000X increase in reliability, relative to conventional RAID, at commodity prices.

- Rebuild times for a 12+3 array, for example, using erasure coding start at 1-2 hours for an empty/idle array. For a full array however the rebuild times could be longer than with a conventional array.

- However the level of protection is greater, so the elongation in rebuild times is not as stressful because data is still fully protected during the rebuild. With longer rebuild times due to larger hard drive capacities, drive failures on traditional arrays are reaching the point where data loss is increasingly likely.
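
The figures in this list can be checked with simple arithmetic. This sketch rests on two assumptions that the source does not state: that "conventional RAID" in the 16+4 comparison means a dual-parity (RAID 6) baseline, and that a 2TB drive holds 2 x 10^12 bytes. The function names are hypothetical.

```python
def reliability_gain(parity: int, baseline_parity: int,
                     gain_per_parity: float = 100.0) -> float:
    """Relative reliability when each extra parity fragment adds ~100X."""
    return gain_per_parity ** (parity - baseline_parity)


def rebuild_rate_mb_per_s(drive_bytes: float, hours: float) -> float:
    """Average throughput implied by rebuilding a whole drive in `hours`."""
    return drive_bytes / (hours * 3600) / 1e6


DRIVE_BYTES = 2e12          # assumed: decimal 2 TB
RAID6_PARITY = 2            # assumed baseline for "conventional RAID"

gain_16_plus_4 = reliability_gain(parity=4, baseline_parity=RAID6_PARITY)
idle_rate = rebuild_rate_mb_per_s(DRIVE_BYTES, hours=50)
busy_rate = rebuild_rate_mb_per_s(DRIVE_BYTES, hours=14 * 24)

print(f"16+4 vs dual parity: {gain_16_plus_4:,.0f}X")
print(f"idle rebuild: {idle_rate:.1f} MB/s, busy rebuild: {busy_rate:.1f} MB/s")
```

Under these assumptions, two extra parity fragments give the 10,000X figure, and the quoted rebuild windows imply that a busy array rebuilds at only a small fraction of a drive's sequential bandwidth.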

Shutterfly's primary driver was peace of mind during the drive rebuild process at a much more economical cost than would have been achievable using traditional RAID techniques. If a drive fails today, Shutterfly has multiple other parity bits that can protect the system, easing the pressure to recover quickly.

Object Storage and the Cloud

Organizations are increasingly under pressure to reduce costs and in particular cut capital expenditures. While the external cloud is alluring, at scale, organizations such as Shutterfly are finding that building an internal cloud is often more cost-effective than renting capacity from, for example, Amazon S3. Rental is always more expensive than purchase at scale, but rental offers considerably more flexibility, which is especially attractive for smaller organizations.

Another key issue practitioners will need to consider is the requirements of object storage. While object stores offer incredible flexibility, scale, and availability, they are new and as such harder to deploy. They also are not designed for versioning. While many small and mid-sized customers (through Amazon S3) are using object storage today, development organizations at large companies may be entrenched and not willing to make application changes in order to exploit the inherent benefits of object storage – which can include policy-based automation. Nonetheless, for firms scaling rapidly and looking toward cloud storage to meet requirements, the future of storage is object because of its scaling attributes and inherently lower cost of protecting data. Traditional RAID is increasingly less attractive at cloud scale.

What are the Gotchas?

In point of fact, RAID 5 and RAID 6 use erasure coding, but with limited flexibility on the granularity of protecting data. The Shutterfly case study shows us that with erasure coding, data reliability of 5 million years can be attained, i.e. astronomically low data-loss probabilities.

The downside of erasure coding such as the Reed-Solomon approach used by Cleversafe is that it is math-heavy and requires considerable system resources to manage. As such you need to architect different methods of managing data with plenty of compute resource. The idea is to spread resources over multiple nodes, share virtually nothing across those nodes and bet on Intel to increase performance over time. But generally, such systems are most appropriate for lower performance applications, making archiving a perfect fit.

The Future of Erasure Coding

As cloud adoption increases, observers should expect that erasure coding techniques will increasingly be used inside of arrays as opposed to just across nodes, and we will begin to see these approaches go mainstream within the next 2-5 years. In short, the Wikibon community believes that the trends toward cloud scale computing and the move toward commodity compute and storage systems will catalyze adoption of erasure coding techniques as a mainstream technology going forward. The result will be a more reliable and cost effective storage infrastructure at greater scale.

Action item: Increasingly, organizations are becoming more aware of exposures to data loss. Ironically, drive reliability in the 1980s and early 1990s became a non-factor due to RAID. However, as drive capacities increase, the rebuild times to recalculate parity data and recover from drive failures are becoming so onerous that the probability of losing data is increasing to dangerous levels for many organizations. Moreover, the economics of protecting data with traditional RAID/replication approaches are becoming less attractive. As such, practitioners building internal storage clouds or leveraging public external data stores should consider organizing to exploit object-based storage and erasure coding techniques. Doing so requires defining requirements, choosing the right applications, and involving developers in the mix to fully exploit these emerging technologies.

Footnotes: Is RAID Dead - a Wikibon discussion on the issues.

Erasure Coding and Cloud Storage Eternity

Storage bits fail, and as a result storage protection systems need the ability to recover data from other storage. There are currently two main methods of storage protection:

- Replication,

- RAID.

Erasure coding is the new kid on the block for storage protection. It started life in RAID 6, and is now poised to be the underpinning of future storage, including cloud storage.

An erasure code provides redundancy by breaking objects up into smaller fragments and storing the fragments in different places. The key is that you can recover the data from any combination of a smaller number of those fragments.

- Number of fragments the data is divided into: m

- Number of fragments the data is recoded into: n (n > m)

- The key property of erasure codes is that the stored object in n fragments can be reconstructed from any m fragments.

- Encoding rate: r = m/n (< 1)

- Storage required: 1/r

Replication and RAID can be described in erasure coding terms.

- Replication with two additional copies: m = 1, n = 3, r = 1/3

- RAID 5: m = 4, n = 5, r = 4/5

- RAID 6: m = n - 2
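
The m, n and r notation above maps directly onto a few lines of code. This is a minimal sketch: the `Scheme` type is hypothetical, and the RAID 6 width of n = 10 is an assumption chosen only to give m = n - 2 a concrete value.

```python
from fractions import Fraction
from typing import NamedTuple


class Scheme(NamedTuple):
    name: str
    m: int  # fragments needed to reconstruct the object
    n: int  # fragments actually stored

    @property
    def rate(self) -> Fraction:
        # Encoding rate r = m/n
        return Fraction(self.m, self.n)

    @property
    def storage_factor(self) -> Fraction:
        # Storage required per unit of data = 1/r
        return 1 / self.rate


schemes = [
    Scheme("Replication, two additional copies", m=1, n=3),
    Scheme("RAID 5", m=4, n=5),
    Scheme("RAID 6 (assumed n = 10)", m=8, n=10),
]

for s in schemes:
    print(f"{s.name}: r = {s.rate}, storage = {float(s.storage_factor):.2f}x, "
          f"tolerates {s.n - s.m} lost fragments")
```

Seen this way, replication is simply the erasure code with the lowest possible rate for its level of protection, which is why higher-rate codes can protect the same data with far less raw storage.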

When recovering data, it is important to know whether any fragment is corrupted. m is the number of verified fragments required to reconstruct the original data. It is also important to identify the data to ensure immutability. A secure verification hashing scheme is required to both verify and identify data fragments.
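
To illustrate how verification and reconstruction fit together, here is a deliberately tiny sketch using single XOR parity (m = 2, n = 3) and SHA-256 digests. It is an assumption-laden toy, not the Reed-Solomon style coding used by production systems, and the function names are hypothetical.

```python
import hashlib


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes) -> list[bytes]:
    """Split data into two halves and add one XOR parity fragment."""
    if len(data) % 2:
        raise ValueError("this sketch expects an even-length payload")
    half = len(data) // 2
    first, second = data[:half], data[half:]
    return [first, second, xor_bytes(first, second)]


def digest(fragment: bytes) -> str:
    return hashlib.sha256(fragment).hexdigest()


def decode(fragments: dict[int, bytes], digests: list[str]) -> bytes:
    """Rebuild the payload from any two fragments whose hashes verify."""
    good = {i: f for i, f in fragments.items() if digest(f) == digests[i]}
    if len(good) < 2:
        raise ValueError("fewer than m = 2 verified fragments available")
    if 0 in good and 1 in good:
        return good[0] + good[1]
    if 0 in good:  # second half = first half XOR parity
        return good[0] + xor_bytes(good[0], good[2])
    return xor_bytes(good[1], good[2]) + good[1]  # first half recovered


payload = b"archive!"
stored = encode(payload)
hashes = [digest(f) for f in stored]

# Fragment 0 is silently corrupted; fragments 1 and 2 still verify.
damaged = {0: b"XXXX", 1: stored[1], 2: stored[2]}
recovered = decode(damaged, hashes)
print(recovered == payload)
```

The hash check is what turns silent corruption into a detectable erasure: the corrupted fragment is discarded, and the remaining m verified fragments are enough to rebuild the object.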

It can be shown mathematically that the greater the number of fragments, the greater the availability of the system for the same storage cost. However, the greater the number of fragments that blocks of data are broken into, the higher the compute power required.

For example, if two copies (r = ½) provided 99% availability, 32 fragments with the same r (16/32) and therefore the same amount of storage would provide an availability of 99.9999998%. You can find the math in a paper by Hakim Weatherspoon and John D. Kubiatowicz.
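
The effect can be approximated with a simple binomial model. This sketch assumes that fragments sit on independent nodes, each reachable 90% of the time (the value that makes two copies come out at 99%); the cited paper's exact model may differ, and the function name is hypothetical.

```python
from math import comb


def unavailability(n: int, m: int, node_up: float) -> float:
    """Probability that fewer than m of n independent fragments are reachable."""
    return sum(
        comb(n, k) * node_up**k * (1 - node_up) ** (n - k)
        for k in range(m)
    )


NODE_UP = 0.9  # assumed per-node availability

two_copies = 1 - unavailability(n=2, m=1, node_up=NODE_UP)
loss_32 = unavailability(n=32, m=16, node_up=NODE_UP)

print(f"two copies: availability = {two_copies:.4f}")
print(f"16-of-32:   unavailability = {loss_32:.2e}")
```

Under these assumptions the 16-of-32 unavailability comes out on the order of 10^-9, the same order of magnitude as the figure quoted above, even though both schemes consume twice the raw storage of the data.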

Figure 1 below shows the topology of a storage system using erasure coding and RAIN, a Redundant Array of Inexpensive Nodes.

Wikibon believes that storage using erasure coding with a large number of fragments will be particularly important for cloud storage but will also come into use within the data center. Archiving will be an early adopter of these techniques.

Action item: All storage professionals will need to be familiar with erasure coding and the trade-offs for data center and cloud storage.

Footnotes: The ideas in this post are used in two other posts on erasure coding:

- Erasure Coding Revolutionizes Cloud Storage, Wikibon 2011;

- Reducing the Cost of Secure Cloud Archive Storage by an Order of Magnitude, Wikibon 2011.

Erasure Coding Revolutionizes Cloud Storage

In a previous post, Wikibon showed that slicing data up and spreading it across a network of 32 independent nodes can increase availability five million times over a traditional replicated copy, while using the same storage resources. Yes, five million times; the calculation is (1 - 99%) / (1 - 99.9999998%). In another post the availability is kept constant, and the cost of the secure highly available cloud system is reduced by an order of magnitude compared with traditional storage array-based topologies. At the same time, companies have extended the erasure technology to provide built-in encryption support.
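
The five-million figure is a ratio of unavailabilities, and it is easy to verify. The sketch below uses exact fractions rather than floating point so that the tiny denominator is not distorted by rounding; the variable names are illustrative only.

```python
from fractions import Fraction

# Unavailability = 1 - availability, using the two figures quoted above.
replicated_unavailability = 1 - Fraction("0.99")
dispersed_unavailability = 1 - Fraction("0.999999998")

improvement = replicated_unavailability / dispersed_unavailability
print(improvement)  # 5000000
```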

A simple healthcare example can illustrate the potential for cost reduction for archival systems. Patient records of x-rays, MRI scans, CT Scans, EKGs, MEGs, 2D and 3D scans all take up a large amount of space and have to be kept for up to 100 years. Over time a patient will have multiple doctors and multiple medical facilities in multiple locations, any or all of whom may need to access those images.

The key requirements of such a solution are similar to those of most archiving systems, with the difference that this would be a production system:

- Immutability of the stored objects;

- Preservation of provenance;

- The ability to dynamically change to new technologies over time;

- Extreme reliability (a bit lost is the object lost);

- Very high availability (lives may depend on access);

- Cost efficiency that improves over time;

- High level of security.

The most cost-effective solution is to use a logically central, geographically dispersed data store based on encrypted erasure codes, shared over many medical centers. Each center would have a cached copy, which does not need backup, as it can be reclaimed from the cloud. The cloud store does not need further copies made for disaster recovery. A low-cost rolling backup strategy and update logs would be necessary for recovery from catastrophic software or operator failure. A single dispersed copy would be more accessible and reliable than a best-of-breed three-data-center array-based synchronization topology, and be 9-to-25 times less expensive.

One of the constraints to adoption is the object nature of the resulting storage model. For developers who are familiar with object storage and can take advantage of it, this is good news. For traditional developers and ISVs, this approach can represent a challenge.

Action item: Erasure codes will allow highly available dispersed archival systems that are an order of magnitude less expensive than traditional systems. CTOs should be looking hard for opportunities to use these revolutionary topologies to design more cost effective systems that will be simpler to manage.

Footnotes:

- Additional information on erasure coding and very high availability can be found at Erasure Coding and Cloud Storage Eternity, Wikibon 2011;

- Additional information on different storage topologies and the cost difference for cloud storage can be found at Reducing the Cost of Secure Cloud Archive Storage by an Order of Magnitude, Wikibon 2011.

Large Orgs Should Take Targeted Approach to Object Storage

Object storage offers a number of benefits over block-and-file storage. For instance, object storage makes it possible to create and manage metadata related to specific objects. This allows administrators to easily develop policies, such as how long to store an object or how many local copies of an object must be stored, and apply them to stored objects.
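
As a rough illustration of metadata-driven policy, the sketch below attaches metadata to each object and evaluates a retention rule against it. Every name here (`StoredObject`, `RetentionPolicy`, the `type` metadata key) is hypothetical and does not correspond to any particular object store's API.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class StoredObject:
    key: str
    data: bytes
    metadata: dict[str, str] = field(default_factory=dict)
    created: date = field(default_factory=date.today)


@dataclass
class RetentionPolicy:
    """Hypothetical policy: keep matching objects for a minimum number of days."""
    match_key: str
    match_value: str
    retain_days: int
    local_copies: int

    def applies_to(self, obj: StoredObject) -> bool:
        return obj.metadata.get(self.match_key) == self.match_value

    def may_delete(self, obj: StoredObject, today: date) -> bool:
        return today >= obj.created + timedelta(days=self.retain_days)


scan = StoredObject("scan-001", b"pixels", {"type": "image"},
                    created=date(2011, 7, 26))
policy = RetentionPolicy("type", "image", retain_days=365, local_copies=2)

if policy.applies_to(scan):
    print("deletable on 2012-01-01:", policy.may_delete(scan, date(2012, 1, 1)))
    print("deletable on 2013-01-01:", policy.may_delete(scan, date(2013, 1, 1)))
```

Because the policy reads only the object's metadata, the same rule can be applied automatically to millions of objects without an administrator inspecting any of them.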

Such metadata management and policy management tasks are helpful when archiving data including images, audio, and video files but are difficult or impossible to execute with block and file storage methods.

Object storage is quickly becoming the de facto cloud storage standard, and in fact a number of smaller enterprises are already leveraging object storage on Amazon’s Simple Storage Service, or S3. However, migrating a large number of existing applications to object storage is a complex and time-consuming operation requiring a new approach to application architecture.

Action item: Small enterprises in greenfield environments should strongly consider object storage. Migrating to object storage at larger companies is a more difficult affair, however. It requires a complete change of mindset on the part of developers and administrators who may be reluctant to move away from block-and-file storage. Enterprises with large and complex webs of enterprise applications and storage devices, therefore, should pinpoint for migration just the applications best suited for object storage. Wikibon recommends enterprises take a “SWAT team” approach, creating small teams to target the best candidates for object storage, such as e-mail archiving and multi-media data.

The importance of standards for Cloud Data Management

With the advent of cloud storage as a storage-as-a-service paradigm, many companies are moving their data to the cloud. Each company has diverse reasons for this, but when talking about public clouds the concerns are almost always the same: security, availability, integrity, and accessibility of the data stored in the cloud. Cloud storage remains a fairly new paradigm; thus, each cloud storage provider has its own set of interfaces to store, access and delete the data stored in the cloud.

When using cloud as a provider for archiving services, this lack of standards can become an obstacle for inter-cloud data migration. Cloud archive service providers must store customer data for extended periods of time (generally years, including forever). However, relationships between providers and customers often do not last forever. Price, better service, legal issues, data security, or even providers going out of business are some of the reasons why customers might want to change their cloud archive provider. Some companies may also want to spread the archival of their data between several providers or migrate their data from private/hybrid clouds to public providers. Customers must have the ability to move their data seamlessly between providers, which is becoming more difficult every day due to the lack of standards for managing data in the cloud.

Some efforts to standardize the access to data stored in the cloud, like SNIA's Cloud Data Management Interface (CDMI), are in progress. CDMI defines a functional interface for both end-users and administrators to manage the data and metadata stored in the cloud and define containers for the data. CDMI also provides support for data retention, where data cannot be modified or deleted for a given period of time. Another main feature of CDMI is that clients can discover the capabilities of the cloud storage service offered by the provider.
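
The value of a common interface for migration can be sketched in a few lines. The code below is not the CDMI API; `ArchiveStore`, `InMemoryStore` and `migrate` are hypothetical names that only illustrate the idea of containers, capability discovery, and provider-independent data movement.

```python
from abc import ABC, abstractmethod


class ArchiveStore(ABC):
    """Hypothetical provider-neutral interface (not the CDMI API itself)."""

    @abstractmethod
    def capabilities(self) -> set[str]: ...

    @abstractmethod
    def put(self, container: str, name: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, container: str, name: str) -> bytes: ...

    @abstractmethod
    def names(self, container: str) -> list[str]: ...


class InMemoryStore(ArchiveStore):
    """Stand-in for a real provider, used only to exercise the interface."""

    def __init__(self, features: set[str]) -> None:
        self._features = features
        self._containers: dict[str, dict[str, bytes]] = {}

    def capabilities(self) -> set[str]:
        return set(self._features)

    def put(self, container: str, name: str, data: bytes) -> None:
        self._containers.setdefault(container, {})[name] = data

    def get(self, container: str, name: str) -> bytes:
        return self._containers[container][name]

    def names(self, container: str) -> list[str]:
        return sorted(self._containers.get(container, {}))


def migrate(source: ArchiveStore, target: ArchiveStore,
            container: str, required: set[str]) -> int:
    """Copy a container between providers after checking capabilities."""
    missing = required - target.capabilities()
    if missing:
        raise RuntimeError(f"target lacks capabilities: {sorted(missing)}")
    moved = 0
    for name in source.names(container):
        target.put(container, name, source.get(container, name))
        moved += 1
    return moved


old = InMemoryStore({"retention"})
new = InMemoryStore({"retention", "encryption"})
old.put("scans", "a.img", b"one")
old.put("scans", "b.img", b"two")
print(migrate(old, new, "scans", required={"retention"}))
```

When every provider exposes the same operations, the migration routine is written once; without a standard, the equivalent of `migrate` has to be rewritten for each pair of providers.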

Action item: The vendor community should support and adopt standards for cloud storage data management, like CDMI. A standardized interface for management of data stored in the cloud will be a huge differentiator between vendors in the near future. Forcing customers to write their own interfaces for each cloud provider will increase the cost and difficulty of cloud archiving adoption and drive customers away from providers with "proprietary" interfaces.

Reducing the Cost of Secure Cloud Archive Storage by an Order of Magnitude

Introduction

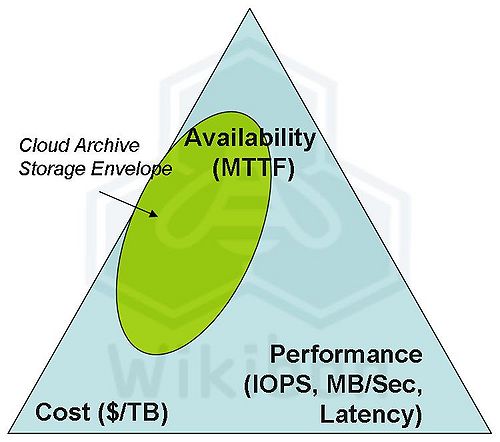

Storage architecture is always a trade-off between competing priorities. This is illustrated in Figure 1, where the green oval represents the design point envelope for high-availability secure cloud archive storage, where the storage is separated from the processing. Performance is not the most critical component; the objects are large, and users will tolerate a minute latency for an object. Local caching will provide better performance if necessary for repeated viewing.

Secure archive data systems require high data reliability for three reasons:

- When data is sensitive and must be encrypted, the loss of one bit of data will render an object unreadable;

- When data is compressed, the loss of one bit of data may render an object unreadable;

- Keeping data for a long time increases the risk of corruption, and decreases the ability to reconstruct data from other sources.

In Figure 1, fixing the requirements at high availability but lower performance means that cost does not have to be so high.

Several storage architectures would fit the green envelope shown in Figure 1. The focus will be to compare two: a 3-data center traditional array-based storage solution and an erasure coding-based cloud storage solution.

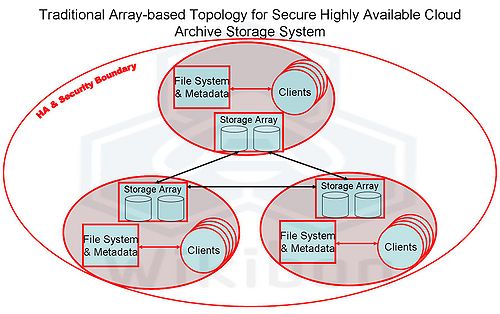

Traditional Array-based Storage Topology

Figure 2 illustrates a traditional three-data-center solution with replication among three data centers. The storage array system acts as a single file system across three data centers, with copies of the storage in any one data center in each of the other two data centers. The high-availability and security boundary has to include all the components of the system. RAID 6 protection is assumed within the storage arrays as a protection against the increasing problem of multiple disk failures, and the three distributed copies provide higher reliability and very high access availability and protection against disastrous loss at up to two locations.

There are many potential providers of array storage, and the topology is well known and understood. Less well understood are the risks of data corruption; storage vendors offer some features to mitigate the risk, but in general do their best to avoid this subject.

The topology shown in Figure 2 would have a minimum storage requirement of 3.6 times the amount of actual archived data. In reality, systems of this nature have a significant additional overhead in keeping copies of storage both for synchronization between the sites, and for assisting in providing copies of data in case of disastrous software or operator failures. A figure of six times the amount of actual archived data is more realistic.
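
The source does not show how the 3.6 figure is derived. One decomposition that is consistent with it, offered here purely as an assumption, is three full copies, each stored on RAID 6 groups of ten data drives plus two parity drives.

```python
from fractions import Fraction

COPIES = 3                # one copy per data center (from the text)
RAID6_DATA_DRIVES = 10    # assumed group width, not stated in the source
RAID6_PARITY_DRIVES = 2   # RAID 6 uses two parity drives per group

raid6_overhead = Fraction(RAID6_DATA_DRIVES + RAID6_PARITY_DRIVES,
                          RAID6_DATA_DRIVES)
minimum_factor = COPIES * raid6_overhead

print(float(raid6_overhead))   # 1.2
print(float(minimum_factor))   # 3.6
```

Narrower RAID 6 groups would push the minimum above 3.6, which is one more reason the more realistic figure of six times the archived data is plausible.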

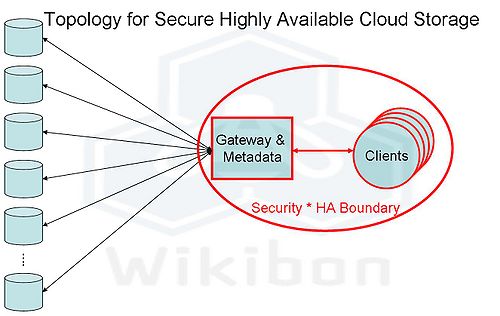

Erasure Coded Storage Topology

Wikibon has described erasure coding in a posting entitled Erasure Coding and Cloud Storage Eternity, which shows that very high levels of data availability can be achieved. A topology using erasure coded storage is shown in Figure 3; it achieves the same levels of availability and security as the traditional array-based topology shown in Figure 2. The topology stores the data fragments across multiple locations (and even multiple cloud storage providers). The loss or theft of individual data stores would not affect either the availability or security of the storage system.

The overhead of storage required would depend on the total number of fragments that the data is recoded into (n) and the minimum number of fragments that are needed to read the data (m). A typical value used in archive stores is n = 22 and m = 16. The total storage required in this environment is n/m = 1.375 times the actual amount of archived data. If greater levels of reliability and performance were required, then raising n/m to 1.6 would provide a significant improvement.

Comparing Storage Topologies

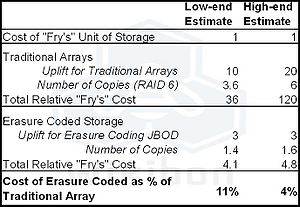

Table 1 shows a relative cost comparison between the two topologies shown in Figure 2 and Figure 3. The basis of comparison is the cost of a unit of storage (a SATA disk drive, for example) at the well-known Fry’s store.

Traditional arrays consist of software and hardware, and come in at between 5 and 20 times the base storage cost. For high-availability systems, the range is 10-20. The number of copies of the data is in the range 3.6 to 6.

Erasure coded-based storage would require the minimum amount of “Fry’s” overhead at each of the distributed nodes, as all of the protection is within the file system. Almost no storage software is required. A value of 3 times the base storage value is reasonable.

Table 1 shows a range of estimates for the comparison between the two topologies. The bottom line is that erasure coded storage is between 11% and 4% of the cost of a traditional array topology (an order of magnitude less). Putting it the other way round, a traditional array topology is between nine (9) and twenty-five (25) times more expensive than topologies based on erasure coding.
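
The range can be approximately reproduced from the multipliers given in the text. This sketch does not reproduce Table 1 itself; the pairing of low-end with low-end and high-end with high-end values is an assumption that happens to land close to the quoted 9-to-25 range.

```python
from fractions import Fraction

# Relative cost = (cost multiplier over base drive cost) x (storage copies).
traditional_low = Fraction(10) * Fraction("3.6")   # HA array, minimum copies
traditional_high = Fraction(20) * Fraction(6)      # HA array, realistic copies

erasure_low = Fraction(3) * Fraction("1.375")      # n/m = 22/16
erasure_high = Fraction(3) * Fraction("1.6")       # n/m raised to 1.6

low_ratio = traditional_low / erasure_low
high_ratio = traditional_high / erasure_high

print(f"traditional is {float(low_ratio):.1f}x to {float(high_ratio):.0f}x "
      f"the cost of erasure coded storage")
print(f"erasure coded cost is {float(1 / low_ratio):.1%} to "
      f"{float(1 / high_ratio):.1%} of traditional")
```

Most of the gap comes from the product of two factors: the array premium over raw drive cost, and the number of full copies a replicated topology must hold.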

Erasure Coding Solutions

Erasure coding as a science has been around for a long time. The technique has been used in traditional array storage for RAID 6. Its usage has historically been constrained by the high processing overhead of computing the Reed-Solomon algorithms. The recent introduction of the multi-core processor has radically reduced the cost of the processing component of erasure coding-based storage, and projected future increases in core counts will reduce it even further.

There are a number of storage projects and products using erasure coding for storage systems. These include:

- Tahoe-LAFS;

- Berkeley’s OceanStore technology, used in the Geoparity feature of EMC’s Atmos storage offering;

- Self-* systems project at Carnegie Mellon University (Self-* systems are self-organizing, self-configuring, self-healing, self-tuning and, in general, self-managing);

- Cleversafe, a winner of the Wikibon CTO award for Best Storage Technology Innovations of 2009 and currently the leading vendor of erasure encoded storage solutions.

Compared with traditional array topologies, topologies based on erasure encoding are newer and there is far less user experience in how to implement, manage and code in a practical environment. Wikibon expects to see significant adoption of erasure coding solutions over the next few years. However, users should temper the theoretical calculations in Table 1 and set initial expectations at a lower level.

Comparisons and Observations

The comparison given in Table 1 assumes that the topologies deliver the same availability and performance, and that the rest of the system around them is the same in cost, performance and availability. That assumption is clearly not completely true. Differences include:

- Storage array topologies are more numerous and are likely to be more robust than far newer solutions based on erasure coding - this is very likely to change over time;

- The perimeter for high-availability and security is much longer and more challenging for array-based solutions than for erasure coded solutions;

- The management and safeguarding of encryption and storage metadata become the most critical components in both topologies, as loss of this metadata would lead to access to all the data being lost (the data would be there but impossible to retrieve);

- A separate backup copy of data on a low-cost medium (e.g., tape) would be required periodically in case of metadata loss.

- The coding of applications systems using traditional file systems will be easier for most ISVs and application developers than coding using storage objects - additional training and architectural review would be required for erasure coding solutions;

- SATA disk storage will be the storage technology for the foreseeable future. Flash storage may play a part as a cache mechanism, rather than a tiered storage mechanism;

- The flash-caches will probably be part of a front-end user system, rather than the retrieval system;

- Erasure coding will be equally applicable to public clouds, private clouds and hybrid clouds;

- Neither system provides near-zero RPO (recovery point objective), i.e., data can be lost before it is secured;

- If near-zero RPO is required (most archive systems can just resend later to recover), an Axxana-like system would be appropriate for either system.

- This example specifically analyses a secure high-availability archive cloud system - the business case and calculations would need to be analysed separately for other types of storage systems.

The bottom line is that using erasure encoding for cloud storage systems is a new technology. The early adopters are using the technology in very large secure deployments at the moment, where the savings are highest. Wikibon believes that archive topologies based on erasure-coding will be adopted as best practice within two to three years.

Action item: CIOs and CTOs with large-scale archive requirements that need to be secure and highly available (for reliability and accessibility) should look long and hard at erasure encoded storage vendors, and include them on any RFP.

Footnotes:

- A practical example of archiving medical records can be found at Erasure Coding Revolutionizes Cloud Storage;

- Additional technical details on erasure coding can be found at Erasure Coding and Cloud Storage Eternity.