Business analytics is about turning large volumes of data, meaningless on its own, into valuable insights. But that’s not the whole story. Not by a long shot.

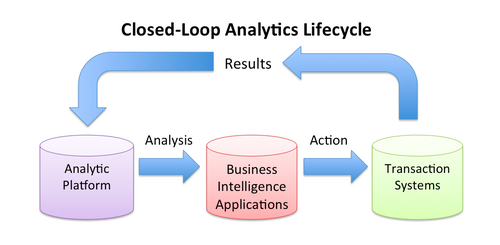

For analytics practices to reach their full, transformative potential, analytics platforms and tools need to make it easy for users to take action based on these new insights. They must also monitor and feed the results of actions taken back into the system in an automated way to inform and improve the predictive models that led to the insights in the first place.

You can think of this process as closing the Big Data analytics loop.

For example, a business user at a large retailer may use a self-service business intelligence tool to decide if and when to offer a new promotional campaign. Perhaps the user determines that a three-hour online-only sale that afternoon will likely result in significant revenue generation, based on intelligence produced by the underlying analytics engine. The user then must execute the campaign, track its performance, and make adjustments to future campaigns as warranted.

In most current environments, executing the campaign requires the user to exit the BI application and log in to a separate campaign management system to kick off the sale and related promotional efforts. The user then waits a day or more to receive a canned, static report with metrics on the revenue and profit generated by the campaign. Based on these metrics, the user might then suggest ways to adjust the analytic models, whether the results were positive or negative, to a central analytics team or maybe even to IT.

If the user is lucky, those suggestions might be acted upon, but usually not for weeks or months. More likely, the suggestions never make their way into the analytic process and the loop is never closed. Users continue to make decisions based on analytics and intelligence that are essentially frozen in time until someone higher up the food chain decides it’s time to “overhaul” the entire analytics process. Then the whole scenario repeats.

A Better Approach

In a truly closed-loop analytic environment, the user would be able to take the required action (kicking off the three-hour online-only sale) directly from within the same BI application that produced the related intelligence. From there, real-time results of the campaign, namely the revenue generated as a direct result of the sale, are fed back into the underlying analytic system. The user can track results in real time with the same BI application, while the analytics engine adjusts its models based on the actual results of the campaign to refine its future campaign predictions and recommendations.

The next time the user performs similar analysis, the recommendations generated will be informed by the relative success or failure of previous, real-world campaigns, and the entire process is repeated. With each successive iteration, the analytics and recommendations improve and revenue increases.
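As a rough illustration of the loop described above, here is a minimal Python sketch. Everything in it is hypothetical: `CampaignModel`, `run_closed_loop`, the learning rate, and the revenue figures are invented for this example, and the simple running-estimate update merely stands in for whatever predictive models a real analytics engine would use.

```python
from dataclasses import dataclass, field


@dataclass
class CampaignModel:
    """Toy stand-in for a predictive model: one revenue estimate per campaign type."""
    learning_rate: float = 0.5  # assumed value; how strongly new results shift the estimate
    estimates: dict = field(default_factory=dict)

    def predict(self, campaign_type: str) -> float:
        # Return the current revenue estimate (0.0 if this type has never been seen).
        return self.estimates.get(campaign_type, 0.0)

    def update(self, campaign_type: str, actual_revenue: float) -> None:
        # Move the estimate part of the way toward the observed result.
        prior = self.estimates.get(campaign_type, actual_revenue)
        error = actual_revenue - prior
        self.estimates[campaign_type] = prior + self.learning_rate * error


def run_closed_loop(model, campaign_type, observed_revenues):
    """Predict, observe the actual result, and feed it back, once per campaign."""
    history = []
    for actual in observed_revenues:
        predicted = model.predict(campaign_type)  # insight shown to the business user
        model.update(campaign_type, actual)       # result fed back automatically
        history.append((predicted, actual))
    return history


if __name__ == "__main__":
    # Hypothetical numbers: the model starts out underestimating a flash sale.
    model = CampaignModel(estimates={"flash_sale": 10_000.0})
    for predicted, actual in run_closed_loop(model, "flash_sale", [14_000.0] * 3):
        print(f"predicted {predicted:,.0f}, actual {actual:,.0f}")
```

In this toy setup the gap between predicted and actual revenue shrinks with each iteration because every result is fed back immediately. In the open-loop scenario described earlier, `update` would effectively never be called and the prediction would stay frozen at its initial value.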

Architecture and Culture

Closing the analytics loop requires significant forethought about how data and application infrastructures are architected. BI/data visualization applications, analytic engines, and transactional systems must be able to communicate bi-directionally in real time. BI and transactional applications must be tightly integrated, with transactional functionality built into BI apps. Ideally, non-relational databases should also play a role, to accommodate multi-structured data such as social and machine-generated data.

Cultural issues also must be overcome. “This is the way we’ve always done it” can no longer be an acceptable excuse from analysts, IT pros, or business users for clinging to outdated, inefficient, and misguided analytic processes.

Action Item: CIOs should carefully review current analytic processes and technologies and make adjustments or wholesale changes where needed to ensure closed-loop analytics environments. This will likely mean evaluating and investing in three areas: next-generation BI platforms that integrate transactional functionality directly in the UI, such as MicroStrategy’s mobile platform with transaction services; new methods of data storage, namely flash and in-memory data stores from vendors like Fusion-io and Aerospike, to allow for real-time data exchange between analytic and transactional systems; and NoSQL databases and platforms such as Hadoop, HBase, Accumulo and Cassandra to accommodate large volumes of multi-structured data. From a people and processes perspective, reluctant users must be trained and incentivized to use closed-loop analytics tools and processes, lest related technology investments go for naught. And data architects need to adjust their preconceived notions regarding siloed analytic and transactional systems and adopt a more integrated approach.
