Originating Author: David Floyer


Enterprise Data Recording

Axxana have introduced a technology that is simple in concept and profound in its implications. They have named it Enterprise Data Recording (EDR). It delivers the nirvana of disaster recovery: zero data loss at unlimited distance with only two nodes.

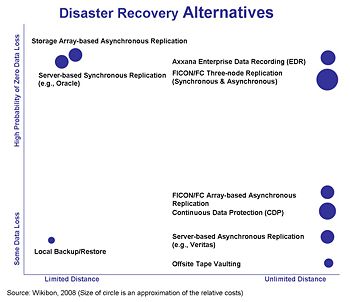

Figure 1 shows the alternatives for disaster recovery that are currently available. Only two solutions provide zero data loss at extended distances: three-node replication and now EDR.

Speed of light problem

To ensure zero data loss (zero RPO), each IO has to be written at both sites (nodes) before data processing continues, a technique called synchronous replication. The longer the distance, the greater the time required to complete the write and send back the acknowledgment. At 25-50 miles between nodes, the elongated IO response time usually brings the application to its knees.
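To give a rough sense of the distance penalty, the sketch below estimates only the propagation delay that a synchronous write adds. It assumes light covers about 200 km per millisecond in optical fiber and that each write needs a fixed number of round trips; the function name and sample distances are illustrative. Real links add switching, protocol, and routing overhead on top of this lower bound.

```python
# Assumption: light in optical fiber travels roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0
KM_PER_MILE = 1.609344


def sync_write_penalty_ms(distance_miles: float, round_trips: int = 1) -> float:
    """Propagation delay added to one synchronous write, in milliseconds.

    Counts only signal travel time: out to the remote site and back,
    multiplied by the number of round trips the protocol needs (assumed).
    """
    distance_km = distance_miles * KM_PER_MILE
    one_way_ms = distance_km / FIBER_KM_PER_MS
    return 2 * one_way_ms * round_trips


if __name__ == "__main__":
    # Illustrative distances only.
    for miles in (25, 50, 500, 3000):
        penalty = sync_write_penalty_ms(miles)
        print(f"{miles:>5} miles: +{penalty:.2f} ms per write (propagation only)")
```

Because the delay grows linearly with distance and is paid on every write, no amount of extra bandwidth removes it.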

The alternative technology is asynchronous replication, which allows unlimited distance by buffering the IOs and writing them to the remote site asynchronously. A buffer of IOs is held at the primary site (usually 5-10 minutes' worth of IOs). In a disaster this buffer is lost, and with it the last 5-10 minutes of enterprise data. This is not acceptable for (say) financial organizations that may transfer millions of dollars in a single transaction. It is increasingly unacceptable to organizations with highly integrated sets of applications, where any data loss means significant problems for IT and business recovery.
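The exposure can be put into rough numbers. The following sketch estimates how much data sits in the asynchronous buffer, and would therefore be lost if the primary site were destroyed. The sustained write rate is a hypothetical figure chosen for illustration, not a measurement.

```python
def data_at_risk_mb(write_rate_mb_per_s: float, lag_minutes: float) -> float:
    """Data held only in the primary-site buffer, in megabytes.

    Assumes a constant write rate and a constant replication lag.
    """
    return write_rate_mb_per_s * lag_minutes * 60


if __name__ == "__main__":
    HYPOTHETICAL_WRITE_RATE = 50.0  # MB/s, assumed for illustration
    for lag in (5, 10):  # the 5-10 minute buffer described above
        at_risk = data_at_risk_mb(HYPOTHETICAL_WRITE_RATE, lag)
        print(f"{lag} min lag at {HYPOTHETICAL_WRITE_RATE} MB/s: {at_risk:,.0f} MB at risk")
```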

Until EDR, the only technology that could provide this level of data protection for disaster recovery was extremely expensive three-node remote replication from EMC, Hitachi & IBM, usually with mainframes. It requires IT equipment in each of the three nodes and expensive high-speed WAN communication between the sites.

EDR technology

Axxana’s EDR combines three technologies:

- Airplane “Black Box” technology to protect the data during a disaster

- Highly resilient cellular broadband technology to extract and transfer the data to the secondary site

- Ability to record the buffered IOs at high speed and integrate them into the asynchronous remote replication array hardware and software

This combination of technologies allows the critical data buffer to survive a disaster (direct flames at 2,000ºF for one hour followed by 450ºF for an additional 6 hours, pressure of 5,000 lb, the pierce force of a 500 lb rod dropped from 10 ft, 30 ft of water pressure, and a shock of 40G) and then be transmitted to the second site.

Business value of zero data loss

Wikibon has written extensively about the problems of recovering from data loss. In the early days of IT, business departments could go back to paper records and re-enter the data. Today’s IT applications are so integrated within an organization and between organizations that the regeneration of that lost data is very expensive, and often impossible.

Zero data loss replication solutions simplify both application design and business process design for an organization. For the IT department they simplify the recovery process and significantly reduce recovery time objectives (RTO). Zero data loss also significantly reduces the complexity of disaster recovery testing.

Asynchronous vs. Synchronous Replication

Synchronous replication is operationally easy to use. It gives the ability to switch workloads between data centers, and minimizes recovery time (RTO). Testing of recovery systems is significantly simplified. However, there are a significant number of drawbacks to synchronous replication:

- Distance of second site: Synchronous "B" sites have to be within a 25-50 mile radius to ensure reasonable IO response times; asynchronous backup sites can be any distance away

- Cost of telecommunication lines: The lines in synchronous replication have to be up all the time. Any short glitch in line quality or availability will close down the synchronous link, and recovery will be operationally complex. With asynchronous replication, both the redundancy and the size of the communication lines can be significantly relaxed

- IO response times: Applications that run with synchronous replication see significantly elongated IO response times. The longer the distance, the greater the impact on IO response time. At best, the impact is an increase in user response time and a potential reduction in productivity. At worst, the elongation of IO response time can become the bottleneck that limits the number of users and the number of transactions the application can sustain

- Cost of application development/maintenance: Elongated IOs constrain the number of database calls that can be made by all parts of an application, and as a result can limit application functionality, limit performance and response time, and increase the design complexity of applications

- Qualification of application packages: Because of the issues above, not all packaged applications (particularly outside the financial industry) are qualified for synchronous replication
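The response-time drawback in the list above can be illustrated with a simple bound. This is a minimal sketch, assuming a single stream of dependent writes in which each write must complete before the next is issued; the local write latency and the added replication delays are hypothetical values.

```python
def max_serial_writes_per_sec(local_ms: float, added_ms: float) -> float:
    """Upper bound on writes per second for one strictly serial write stream.

    local_ms: time to complete a write at the primary site (assumed).
    added_ms: extra delay per write from synchronous replication (assumed).
    """
    return 1000.0 / (local_ms + added_ms)


if __name__ == "__main__":
    LOCAL_MS = 0.5  # hypothetical local write latency
    for added in (0.0, 0.5, 2.0, 10.0):  # hypothetical replication delays
        rate = max_serial_writes_per_sec(LOCAL_MS, added)
        print(f"+{added:4.1f} ms per write -> at most {rate:7.1f} serial writes/s")
```

Parallel streams raise total throughput, but any part of an application that must issue its database calls in sequence is held to this kind of ceiling.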

The bottom line is that using asynchronous replication can be a significant advantage to an IT shop even over short distances. The EDR technology enables this for organizations that need a high probability of zero data loss.

Axxana’s route to market

Axxana have chosen to bring their technology to market by partnering with multiple array vendors, rather than developing their own asynchronous remote replication solution. This makes adoption of the technology much easier for sites that already have asynchronous solutions installed.

Action Item: Large data center CIOs & CTOs with zero RPO requirements should determine which storage vendors have unambiguous plans to integrate EDR technology into their asynchronous replication solutions. The EDR technology should be verified with an installation of one of the early solutions available. If it works as advertised and there are not too many tie-ins with unnecessary array software, EDR should be evaluated for adoption as the platform of choice for the disaster protection of high-value transactional data.

Footnotes: Zero data loss should be interpreted as a high probability of zero data loss. There is no solution that guarantees zero data loss from all disasters.