Software is Eating the World - Will Amazon Eat Enterprise Infrastructure?

From Wikibon

Storage Peer Incite: Notes from Wikibon’s April 23, 2013 Research Meeting


On April 23 the Peer Incite community gathered to discuss the impact of Amazon's dominant, disruptive, fast-growing cloud service on internal IT organizations, their data centers, and the rest of the cloud vendor community. Jason Mendenhall, EVP of Cloud for Switch Communications, which is known for its huge SuperNAP colocation center (itself an AWS competitor and host to several major cloud service providers), gave a strong presentation on the current state and likely evolution of cloud computing, why AWS will not be the 600-pound gorilla of cloud business services forever, and why CIOs should leverage cloud and colocation services. The articles below present commentary on various aspects of this multifaceted discussion and provide Wikibon's view on the future of IT and cloud services.

In this issue of the Peer Incite Newsletter, Wikibon's analysts look at the implications of this trend for users and vendors, and discuss the ever-important task of getting rid of stuff.

Bert Latamore, Editor

Software is Eating the World: Will Amazon Eat Enterprise IT Infrastructure?

Amazon is a formidable force, but one cloud size does not fit all, especially within the traditional enterprise IT marketplace. It's no longer about 'why cloud' but rather 'which cloud.' This was the premise put forth by Jason Mendenhall, Executive Vice President of Cloud at Switch. Switch is the creator of the SuperNAP, an enormous colocation facility (see Wikibon's Largest Data Center Infographic) that also delivers networking services and hosts a diverse cloud community. Switch hosts more than 500 vendors, and Mendenhall has visibility into some of the most forward-thinking cloud activities in the world.

Switch is developing an advanced infrastructure, which Mendenhall claims is "the world's best data center." Switch is a unique technology ecosystem that hosts multiple clouds and, in essence, is a platform that lets Amazon's competitors participate in the market at scale. It is built on a robust fiber-optic network that began with Switch's acquisition of Enron's broadband peering arbitrage data center. The Switch facilities can expand to 2.2M square feet of space, and the company's strategy is to consolidate and aggregate multiple CSPs in one secure facility, enabling multiple providers to compete and add unique value in the marketplace by leveraging scale and cross-pollinating selected cloud services.

The main cloud competition for virtually all of Switch's tenants, and traditional IT suppliers, is Amazon's AWS. Amazon is expected to generate roughly $3B in AWS sales in 2013. Its attack on traditional IT suppliers has been well-documented, and the posture AWS has taken toward incumbent infrastructure vendors has been extremely aggressive. Amazon is getting serious about enterprise IT-as-a-Service (ITaaS), and traditional IT infrastructure suppliers and competing cloud service providers are being forced to respond.

On the one hand, this is great for customers, because Amazon has popularized the cloud concept and is creating more competition. On the other, Amazon is pressuring IT organizations to respond; and while some Wikibon practitioners are moving to Amazon, others say they don’t want to put all their data in AWS because it’s often too expensive and too risky.

The conundrum for the IT industry at large is that Amazon is both incumbent and disruptor. It has the largest share of the public cloud marketplace, but at the same time it is moving faster than competitors, cutting prices and adding function at lightning speed. Switch believes that, in aggregate, its tenants represent the second-largest cloud installed base in the market, pitting it and its ecosystem head-to-head with AWS.

Where is Cloud Adoption Today?

According to Mendenhall, cloud today is at a point in its development similar to where VHS was in the early days of home video. From an enterprise standpoint, we're still in Cloud 1.0. While many companies are experimenting with cloud, and many have cloud strategies, most IT organizations, other than the early Web 2.0 companies that started life in the cloud, are really just getting started. They may have virtualized internal data centers and begun to develop the orchestration and automated management capabilities that constitute so-called private clouds, but it's early days for true hybrid cloud strategies that leverage a public cloud component.

Further, Mendenhall feels the most interesting angle on cloud will be the hybrid model, in which internal and external clouds are combined. He sees two models of cloud emerging: the operating model, and the technology model, in which customers build new applications on cloud technologies to enable new business models. The first model is "my mess for less," according to Mendenhall, but the more interesting developments are based on strategies that leverage public cloud platforms and autoscaling for applications such as mobile, Big Data and analytics. Innovative enterprises are looking beyond hosted virtualization to leverage cloud as a technology that transforms the way applications are developed and deployed, making it an agile strategic weapon.

When asked for transformative examples, Mendenhall cited a healthcare case in Switch's ecosystem. Healthcare is a heavily regulated, security-intensive environment, and here service providers and customers created a small interchange of clouds with layered services unique to their needs. The customers created a multi-vendor platform within their own secure environment that could provide different levels of service and capabilities for back-office apps, emerging Web apps, storage workloads at scale, etc. By leveraging different cloud technologies and applying them in tailored ways, these customers were able to bring cloud technology to an industry that generally is perceived as anti-cloud.

He also cited Big Data as a transformative area, describing two models: mining public sources such as Google, Facebook or Twitter, and mining internal data. Switch sees the latter as where much of the action is. Because customers struggle to build the high-performance infrastructure that Big Data requires, they are building cloud infrastructure to do the analysis and bringing the data back into their internal organizations once the analysis is done. This type of data movement is often problematic for cloud infrastructure, but Mendenhall says Switch's approach is advantageous because its clouds are physically close to one another, with very high-bandwidth links between installations.

The bottom line on adoption, according to Mendenhall, is that it's a spectrum in which a variety of use cases and workloads are emerging, which ties into the premise of the call: one cloud size does not fit all needs.

Is Amazon Unstoppable?

As indicated, Amazon the gorilla is also Amazon the disruptor. When asked whether his tenants and other IT providers can compete, Mendenhall acknowledged Amazon's prominence but argued that, while Amazon will grow and its services will continue to evolve, the AWS platform is not for everyone. He cited several issues that customers should evaluate with Amazon AWS, or any cloud, including:

- The network, often overlooked when data has to be moved around;

- Hybrid model challenges, specifically with respect to control and management across diverse cloud platforms;

- Security;

- Location of data placement and transparency of where the data resides;

- Cost;

- Support;

- Performance, and,

- Control.

His angle on Amazon is that while AWS has a place in the enterprise, there needs to be choice in the market. Multiple types of competitors to Amazon are emerging, both within Switch and elsewhere, that will give customers choice.

Wikibon practitioners who have evaluated these parameters have reported mixed results. On the one hand they indicate that as a single service, Amazon is the most comprehensive and complete. They believe Amazon security is often better than their own internal security. Its support model and ecosystem are evolving to provide layers of support that extend AWS' own offerings.

However, many practitioners remain concerned about Amazon's willingness to go "belly-to-belly" and partner by providing greater transparency and control for customers. While Amazon has begun to acknowledge private and hybrid cloud strategies, much of its marketing has been somewhat negative on these concepts.

The bottom line on the competitive landscape is that it is in flux, but, as in all markets, no one vendor will sustain a solely dominant position indefinitely. Amazon is unmatched in certain areas, such as the ease of accessing its SLAs and other information about its cloud. Competitors often claim better SLAs, but many do not even make their SLAs publicly available.

Amazon, on the other hand, is dogmatic in certain ways and is often unwilling to provide customized solutions. It is building up its ecosystem of partners, and this capability will evolve over time. As such, there are clearly openings for competitive cloud offerings. In general, however, Wikibon believes that on the issues of pricing, functional rollout, and business models, while firms like Switch are providing critical infrastructure, most so-called cloud service providers are still ramping up and learning how to compete with Amazon.

Having stated this, clear alternatives are emerging. VMware's recent cloud announcement and the momentum behind the OpenStack movement are two examples that have impressive potential. The Wikibon community expects that open source software initiatives like OpenStack (with HP's commitment, for example) and extensions of VMware's private clouds into hybrid models will grow to provide formidable alternatives to AWS. In particular, both OpenStack and the VMware/EMC strategies are building out extremely diverse and robust ecosystems that Wikibon believes will gain critical mass and represent strong alternatives to AWS. What's more, Microsoft Azure has done an excellent job of building out infrastructure to provide a competitive alternative to AWS, and the Google threat always looms large.

How Should CIOs Approach Cloud Generally and Amazon Specifically?

For the past decade, CIOs have been under pressure to cut costs, do more with less, and improve efficiencies. Infrastructure management has increasingly become non-differentiated heavy lifting. Importantly, Amazon and other cloud service providers have been innovating, investing, and growing, not shrinking their budgets. This raises a critical question for CIOs: "How can I continue to innovate with technology while at the same time running my own infrastructure?" For certain mission-critical applications, the answer has been that the risks associated with outsourcing to the cloud warrant continued investment to 'control' infrastructure internally. However, the fact remains that for many workloads, infrastructure management and running an infrastructure-as-a-service operation is a "race to zero." It's virtually impossible for the vast majority of IT organizations to continue to 'compete' with cloud service providers at scale.

As such the writing is on the wall. Wikibon believes that by 2018, 80% of IT organizations will be pursuing a strategy that involves a public or hybrid cloud approach with a major emphasis on outsourcing infrastructure provisioning and management. Key considerations include choosing which applications can go to the cloud, processes for getting data in and out of the cloud, true costs of rental versus owning infrastructure, leveraging cloud for Big Data analytics and transforming skill sets.

Action item: Amazon has changed infrastructure management permanently. Its aggressive entrance into the enterprise is creating competition and momentum that will drive costs down and improve processes globally. While outsourcing to Amazon lock, stock, and barrel may make sense for many mid-sized and smaller companies and Web startups, most CIOs should view AWS as another arrow in the quiver of IT options. CIOs must recognize that cloud should not be a strategy simply to lower CAPEX but rather a way to transform organizational processes to improve speed and integrate IT more transparently into the business. By making infrastructure 'invisible' and frictionless, CIOs can focus resources on creating competitive advantage with technology rather than chasing the tail of keeping the lights on.

The CIO and the Cloud: Embrace, Extend, Innovate

Introduction

On April 23rd at 12 noon Eastern time, the Wikibon community gathered to discuss cloud strategies generally and how enterprises should specifically evaluate AWS and alternative approaches. Our guest was Jason Mendenhall, Executive Vice President of Cloud at Switch, the creator of the SuperNAP, an enormous colocation facility (see Wikibon's Largest Data Center Infographic) that also delivers networking services and hosts a diverse cloud community.

The word “cloud” became a hot addition to the popular lexicon a few years ago and quickly took on eye-rolling status as vendors tripped over themselves to include the new term in all of their marketing collateral. At the same time, lofty cloud promises left CIOs and IT staff sweating bullets as they worried that senior management would believe the promises and put internal staff out of their jobs. Over the ensuing years, the hype factor has worn off a bit and some very public cloud provider outages have taken some of the sheen off the rhetoric, bringing back to earth the reality of running a complex IT organization.

Skepticism is turning into opportunity

In addition, CIOs are less skeptical of cloud, and IT departments no longer see it as the threat they once did. People generally realize that their jobs remain relatively safe (for now), particularly as views of the cloud have evolved. No longer is the cloud seen as an immediate replacement for IT. Instead, the cloud is seen as one more arrow in the CIO’s ever-expanding quiver. It’s a formidable arrow, capable of unlocking new kinds of innovation that simply aren’t possible or feasible in the traditional on-premises data center model.

The cloud as a compute tier: Innovative business models

One important and emerging use case for the cloud is Big Data analytics. Previously, meeting the significant processing and storage challenges inherent in Big Data may have required CIOs to make significant infrastructure investments designed to accommodate peak load scenarios. Today, CIOs can build systems that push the analytical work into the cloud, which offers practically unlimited compute capacity, and bring just the results back on-premises. Under this model, the CIO pays for resources on demand rather than constantly paying for excess capacity. This enables a degree of agility that was heretofore extremely difficult to achieve, at a fraction of the cost.
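The pay-for-peak versus pay-on-demand argument can be made concrete with a little arithmetic. The sketch below is purely illustrative: the node counts, the 720-hour month, and both hourly rates are hypothetical assumptions, not Wikibon or vendor figures. It assumes owned capacity must be sized for the peak and is paid for every hour, while rented capacity is billed only for node-hours used. Under those assumptions owning wins only when average utilization (relative to peak) exceeds the ratio of the owned cost to the rented price.

```python
# Illustrative comparison: owning capacity sized for peak vs. renting it on demand.
# Every price and demand figure below is a hypothetical assumption, not a vendor quote.

def owned_cost(hourly_demand: list[int], cost_per_node_hour: float) -> float:
    """Owned capacity must be sized for the peak and is paid for every hour."""
    return max(hourly_demand) * len(hourly_demand) * cost_per_node_hour

def on_demand_cost(hourly_demand: list[int], price_per_node_hour: float) -> float:
    """On-demand capacity is billed only for the node-hours actually consumed."""
    return sum(hourly_demand) * price_per_node_hour

def break_even_utilization(cost_per_node_hour: float, price_per_node_hour: float) -> float:
    """Average-to-peak utilization above which owning becomes cheaper than renting."""
    return cost_per_node_hour / price_per_node_hour

# A 720-hour month: 100 nodes for a 40-hour analytics run, 5 nodes the rest of the time.
demand = [100] * 40 + [5] * 680
owned = owned_cost(demand, cost_per_node_hour=0.50)
rented = on_demand_cost(demand, price_per_node_hour=1.00)
utilization = sum(demand) / (max(demand) * len(demand))

print(f"owned: ${owned:,.0f}, on demand: ${rented:,.0f}")
print(f"utilization {utilization:.0%} vs. break-even {break_even_utilization(0.50, 1.00):.0%}")
```

With these made-up inputs, renting costs about a fifth of owning even at twice the hourly rate, because the peak-sized cluster sits at roughly 10% average utilization. Bursty workloads like periodic Big Data runs are exactly where this gap is largest; steady, highly utilized workloads can tip the result the other way.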

This is just one example of how the cloud can be leveraged. Countless other potential use cases are waiting to be discovered by innovative CIOs attempting to solve complex business problems.

Cloud is but one arrow in the quiver

While cloud is but a single arrow, CIOs have at their disposal many different providers from which to choose, including Amazon, VMware, HP, IBM, Joyent, Savvis, OpenStack providers, and more. Different providers will have different sets of skills and services, and it may not be possible for a CIO to adopt a one-size-fits-all approach.

In the old days, the term “server sprawl” was adopted to describe the phenomenon of physical servers spreading throughout the organization without oversight, resulting in all manner of inventory, cost, and workload difficulties. The CIOs of today and tomorrow may find it necessary to battle “cloud sprawl” as IT and various business units engage cloud providers at different levels.

To combat this situation, CIOs need to ensure that the organization’s IT governance processes are well-established and operational. Of course, some level of shadow IT is almost inevitable in complex organizations, so CIOs need to be proactive in engaging their peers to ensure that appropriate technology decisions are made. As part of this process, the CIO, in concert with peers and governance groups, needs to ensure that approved providers are relevant to the company’s industry and customers. This will produce a much higher level of success than doing heavy lifting with the wrong provider or providers.

Prepare teams for the future

Finally, the CIO must ensure that his team is prepared for a paradigm shift from technology provider to a combination of technology provider and technology customer. IT organizations of the future will continue to provide some services directly to the business while also acting as the IT services broker for the organization. This will require different kinds of thinking from CIOs and their staff members as people move from a “break/fix” mentality to a “leveraging” one. In other words, rather than writing scripts to manage storage, IT staff members will be working with providers to create business solutions and will need a more business-focused mindset to be successful.

Action item: For CIOs, the emerging reality is this: the cloud is here to stay. It’s your job to embrace it and develop strategies that will get you onto the platform in a way that meets current and future business needs and then, over time, to keep iterating while aligning the services provided with strategic objectives.

Keep Your Own Data Close and Your Competitors’ Data Closer

Cloud Provider ISVs (CPIs) have become essential IT providers for many organizations, providing excellent applications ranging from sales force optimization (e.g., Salesforce.com) to individual productivity tools (e.g., Microsoft Office 365, Google Apps) to IT workflow management (e.g., ServiceNow). The number of applications available from CPIs is expanding dramatically; with or without IT involvement, cloud is becoming a significant part of shadow or budgeted IT.

By using economies of scale, hyperscale architectures based on scale-out commodity hardware that is expected to fail, open source software, and a DevOps organization, CPIs can lower the barriers to adoption and provide application value to the business at an accelerated rate. In addition, low-latency storage is removing the barriers to integrated, higher-function systems that can adapt to the workflow an organization requires, rather than the traditional practice of changing the workflow to fit the ISV package.

The migration to any new IT platform is never easy, and it is impossible to predict what CPI applications will be available at a given time in the future. However, some clear principles can be extracted to guide how topologies of legacy and CPI applications should co-exist, and how to take advantage of the massive volume of big data and data streams that the universes of people and things will generate. Ten guiding principles are:

- Active data and metadata will migrate from the SAN to the server, and data latency will improve from milliseconds to microseconds to nanoseconds (from hundreds of miles, to hundreds of yards to just feet away). Keep all active data as close as possible together on flash and the servers as close as possible to the data.

- CPIs will offer the majority of innovative applications. Move your legacy systems to the same mega-datacenter as your most important CPIs. Back-hauling data within the same datacenter will reduce cost, increase security, and increase the value of combined data. Cloud-bursting within a datacenter may make sense; cloud-bursting between datacenters is moronic.

- Choose a mega-datacenter that supports an in-house ecosystem of CPIs relevant to the industry served, including competitors.

- Choose mega-datacenters that offer business continuity and disaster recovery as a service, including synchronous protection of new data as well as geographic asynchronous protection. This service should be integrated across legacy systems and cloud service providers.

- Choose mega-datacenters that offer multiple competing telecommunication vendor services to all major organizational hubs.

- Choose a mega-datacenter that also has cloud data and stream providers for the relevant industry.

- Overall, focus on where the data is, and always bring processing to the data where possible. Avoid data sprawl and CPI sprawl; if a CPI does not offer cloud services in your mega-datacenter, choose a CPI that does.

- Ensure that data sources about your competitors are as close as possible, even closer than internal data.

- A very high proportion of data should flow from active to passive; expansive metadata in active storage should mitigate transfers in the opposite direction. Movement of large amounts of data over networks is a sign of a fundamentally weak data infrastructure architecture and should be avoided like the plague.

- Passive data should be placed on the lowest cost magnetic media with appropriate geographic distribution to ensure security, immutability, provenance and integrity. The metadata should allow creation of multiple logical views of data (e.g., a backup view, an eDiscovery view, etc.) without physical data movement.
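The tenth principle, multiple logical views of passive data created from metadata alone, can be illustrated with a small catalog. This is a minimal sketch under stated assumptions: `ObjectRecord`, `Catalog`, the tags, and the archive location names are all hypothetical and do not represent any particular product. Each view is simply a predicate over metadata, and asking for a view returns object IDs (references) rather than copies, so no data moves.

```python
from collections.abc import Callable
from dataclasses import dataclass, field
from datetime import date

@dataclass(frozen=True)
class ObjectRecord:
    """Metadata for one passive object; the object's bytes never move."""
    object_id: str
    location: str  # where the immutable copy lives, e.g. an archive tier (illustrative)
    created: date
    tags: frozenset[str] = field(default_factory=frozenset)

class Catalog:
    """Hypothetical metadata catalog: views are predicates evaluated over metadata."""

    def __init__(self) -> None:
        self._records: dict[str, ObjectRecord] = {}
        self._views: dict[str, Callable[[ObjectRecord], bool]] = {}

    def add(self, record: ObjectRecord) -> None:
        self._records[record.object_id] = record

    def define_view(self, name: str, predicate: Callable[[ObjectRecord], bool]) -> None:
        self._views[name] = predicate

    def view(self, name: str) -> list[str]:
        """Return matching object IDs (references), never copies of the data."""
        predicate = self._views[name]
        return sorted(r.object_id for r in self._records.values() if predicate(r))

catalog = Catalog()
catalog.add(ObjectRecord("mail-001", "archive-a", date(2012, 5, 1), frozenset({"email", "legal-hold"})))
catalog.add(ObjectRecord("db-backup-17", "archive-b", date(2013, 4, 1), frozenset({"backup"})))
catalog.add(ObjectRecord("mail-002", "archive-a", date(2013, 1, 15), frozenset({"email"})))

# Two logical views over the same physical objects.
catalog.define_view("backup", lambda r: "backup" in r.tags)
catalog.define_view("ediscovery", lambda r: "email" in r.tags)

print(catalog.view("backup"))      # ['db-backup-17']
print(catalog.view("ediscovery"))  # ['mail-001', 'mail-002']
```

Adding a new view (say, a compliance view) is a metadata-only operation here: define another predicate, and the passive objects stay on their low-cost media untouched.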
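The first principle ties data latency to distance: milliseconds at hundreds of miles, microseconds at hundreds of yards, nanoseconds at a few feet. A quick propagation-delay calculation shows why that progression holds. This sketch assumes light travels through optical fiber at roughly 2×10^8 m/s (about two-thirds of its vacuum speed), and it deliberately ignores switching, protocol, and device latency, so the figures are a physical floor, not a prediction of real storage response times. The 300-mile, 300-yard, and 3-foot distances are illustrative choices.

```python
# Propagation delay only: a lower bound that ignores switching, protocol stacks,
# and device (flash/disk) latency. The fiber speed is an approximation.
FIBER_SPEED_M_PER_S = 2.0e8  # roughly two-thirds of the speed of light in a vacuum

METERS_PER_MILE = 1609.344
METERS_PER_YARD = 0.9144
METERS_PER_FOOT = 0.3048

def one_way_delay_s(distance_m: float) -> float:
    """Seconds for a signal to travel distance_m through fiber, one way."""
    return distance_m / FIBER_SPEED_M_PER_S

def human(seconds: float) -> str:
    """Format a duration in the largest convenient unit."""
    if seconds >= 1e-3:
        return f"{seconds * 1e3:.3f} ms"
    if seconds >= 1e-6:
        return f"{seconds * 1e6:.3f} us"
    return f"{seconds * 1e9:.3f} ns"

distances = {
    "300 miles": 300 * METERS_PER_MILE,
    "300 yards": 300 * METERS_PER_YARD,
    "3 feet": 3 * METERS_PER_FOOT,
}

for label, meters in distances.items():
    print(f"{label}: one way {human(one_way_delay_s(meters))}")
```

The results come out at roughly 2.4 ms, 1.4 µs and 4.6 ns one way, and a request/response round trip doubles each. No amount of faster hardware removes the first figure, which is why the principle puts servers and active data physically together.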

The migration to data movement minimalism will require compromises and pragmatism along the way. Adherence to the guiding principles should be unwavering.
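The ninth principle's warning that moving large amounts of data over networks signals a weak architecture comes down to simple arithmetic: transfer time equals bits divided by effective bandwidth. The sketch below is illustrative only; the 100 TB data set, the 1 and 10 Gb/s links, and the 80% efficiency factor (standing in for protocol overhead and contention) are all assumptions, not measurements.

```python
def transfer_time_days(data_bytes: float, link_bits_per_s: float, efficiency: float = 1.0) -> float:
    """Days to move data_bytes over a link; efficiency < 1 models overhead and contention (assumed)."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    seconds = data_bytes * 8 / (link_bits_per_s * efficiency)
    return seconds / 86_400  # seconds per day

TB = 10**12  # decimal terabyte

for gbps in (1, 10):
    days = transfer_time_days(100 * TB, gbps * 10**9, efficiency=0.8)
    print(f"100 TB over {gbps} Gb/s at 80% efficiency: {days:.1f} days")
```

Under these assumptions, 100 TB takes about 11.6 days on a 1 Gb/s link and still more than a day at 10 Gb/s, before any per-gigabyte transfer charges. Bringing processing to the data avoids paying that time and cost again every time an analysis runs.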

Action item: CIOs and CTOs should ensure that data architects are the main drivers of infrastructure strategy, and that expensive and slow data movement over distance is minimized.

ITOs Must Reorganize Around Services or be Left Behind

One of the main lessons from the April 23rd Peer Incite with Jason Mendenhall, Executive VP of Cloud at Switch Communications, builder of the huge SuperNAP colocation center in Las Vegas, is that cloud services are here to stay and are growing because they offer business advantages. One area where that is true is Big Data analysis, where much of the data often comes from cloud services and resides in the cloud, and which usually requires major compute resources to run the analysis in a reasonable amount of time.

However, cloud services also are a long-term threat to ITOs. Every time a cloud service, like Salesforce.com, displaces an internal application like CRM, the ITO shrinks. Given that the cloud is here to stay, ITOs have to adapt or die.

The concept of running IT as a service organization is not new – Gartner was talking about it as early as 1990. Back then the argument was that business executives see IT as a set of business services, not a collection of infrastructure, software, etc. CFOs and CEOs have always wanted IT to manage itself as a service provider, but the constant war to keep the lights on, waged in large part in terms of a constant series of minor tweaks, scripts, and other highly technical adjustments, punctuated by major system crashes and application migrations to new hardware, forced a tech mindset that quickly devolved into silos – server, network, storage. That made it nearly impossible for IT professionals to see across the entire system, much less manage how something as complex as ERP ran, and what it cost, end-to-end.

Virtualization, which moves management up to the software layer and out to the edge of the system and promises a near future in which entire data centers will be managed from a “single pane of glass”, is changing that, along with IaaS and network-based colocation services like Switch that allow ITOs to turn much of the hardware layer over to someone else to run. Together they are replacing the traditional detailed technical challenges with a new set of higher-level, business-oriented issues. These include managing a growing stable of cloud service providers (SaaS and IaaS), deciding where different data sets need to reside and how much control the enterprise really needs over them, and leveraging new kinds of applications coming from the cloud that were never in the data center.

At the same time, SaaS and the new mobile cloud services are changing user expectations. If Salesforce can provide CRM as a set of services on a utility pricing model, CFOs are asking, why can't my ITO do the same? It is not a long step from that to a decision that either the ITO does take a services approach or the CFO and CEO will replace it with providers that do.

However, reorganizing around services is not just a technology transition; it is a complete organizational change. It must be driven from the top, by the CIO or above, rather than created from the bottom. And it will mean the retraining or replacement of many IT employees, not all of whom will make the cut. That includes CIOs themselves.

Action item: The bad news is that the process will be wrenching at times. The good news is that this is not going to happen tomorrow. ITOs have time to make this huge transition. But CIOs need to kick the process into gear now. The longer they wait, the shorter the time they will have and the more ground they will lose.

The Vendor Survival Guide for Competing Against Amazon's Cloud

While the wave of cloud computing has been part of the IT dialogue for the last five years, the ecosystem is still in the early phases of maturation. On the April 23, 2013 Peer Incite, Jason Mendenhall of the Vegas-based Switch cloud colocation facility said that the industry is still in the “VHS phase” (1.0 version) of cloud.

While Amazon does not break out its cloud revenues, it is generally understood that AWS is the leader in cloud with estimates that it could bring in over $3B in revenue for 2013. Amazon is not only the leader, but is a disruptor to other cloud services through frequent and rapid deployment of new features and services. Technology suppliers must not only mimic Amazon’s speed and ease, they must rapidly put forth the business case for an alternative that differentiates and clearly communicates why and how they are different.

Jason Mendenhall spoke of how Switch brings together an inter-cloud exchange that delivers a broad ecosystem of cloud providers plus cross-connect capabilities. As the suppliers that Switch hosts look to win business away from Amazon, they should focus on things that Amazon can’t or won’t do. There is also immense opportunity to deliver cloud offerings aimed at specific vertical needs.

Here are four areas that vendors should look to for differentiation from Amazon AWS:

Performance: While Amazon continues to add options, not all clouds are created equal. Locality, network design and how compute and storage communicate are all important. Clouds and services need to compete on performance, both in overall design and in the types of workloads best suited to the environment.

Cost: Amazon and Google are racing each other down in price, but it is still very difficult to determine what real costs will be over time. Vendors can help customers choose the right solutions by offering transparency (it’s been said that Amazon is only cheap until you use it). The cost of moving data out of Amazon is colossal. Clarity of costs is key.

Control: Privacy, security, compliance, and alignment with governance requirements and/or corporate edicts are challenging. Vendors need to deliver appropriate reporting and a new wave of toolsets to monitor the environment. Visibility into application performance and control is absolutely crucial.

Support: SLAs must be transparent (easy to find on a public website) and better than Amazon's. Vendors should offer 24x7x365 phone support. Meanwhile, Amazon is growing its channel to reach deeper into the enterprise.

Action item: Amazon’s market position in cloud, rapid growth, speed of innovation and low margins make it a daunting competitor. Technology vendors and service providers can successfully compete, especially when they are part of an ecosystem such as the one Switch has built. CIOs are looking for deeper relationships to find new value by using the cloud beyond simply lowering costs.

Switch SuperNAP Cloud Infrastructure Service Does the Heavy Lifting for IT

As the cloud service provider space matures, it has become apparent to organizations of every size and industry that building and managing a data center is best left up to the experts. Despite concerns about security, data loss, availability, and response time, companies are increasingly adopting cloud solutions of various forms and for a variety of reasons including cost and freeing up valuable IT resources to focus on higher value projects for their organization.

During Wikibon’s April 23, 2013 Peer Incite, Jason Mendenhall, EVP of Cloud for Switch Communications, spoke about how Switch’s 400,000 square foot (and rapidly growing) SuperNAP colocation center in Las Vegas is transforming the IT industry’s perception of cloud services. “Today, the question is ‘which cloud?’ not ‘if’ a company should adopt a cloud strategy. Customers tell us ‘I need an alternative to Amazon Web Services (AWS)’. They come to Switch because we offer them more transparent and competitive pricing, better security and scale, live customer service, flexibility of service, and control in the form of tools that monitor application performance or support compliance requirements.” Switch has hundreds of clients, ranging from large government agencies, Fortune 100 companies, hospitals and Internet start-ups to dozens of cloud service providers.

Mendenhall believes cloud service providers need to differentiate themselves and in doing so provide their customers with the means to transform their own internal IT organizations. “The IT executives of the future will be much more business focused, with skills for managing multiple vendor relationships and development environments, not technologies. These new IT leaders will manage strategy and direct a service oriented organization, and the cloud will help them get there.”

Goodbye Non-Differentiated Tasks and Services

The move to cloud involves “moving up the value stack” – getting rid of mundane infrastructure management that adds little or no business value. Unless your organization is selling IT services, managing infrastructure is often not a value generator. Indeed, many cloud-based application service providers themselves leverage Switch, AWS and other cloud services as part of their value proposition.

Benefits of moving to the cloud include:

- Resource Re-allocation: Moving lower value-creation tasks such as infrastructure management to the cloud frees up key resources to place on mission critical or other projects that help an organization innovate or grow its business.

- Recruitment and Retention Improvement: Moving IT away from non-differentiated tasks to projects that drive revenue or help transform the business provides incentive for existing IT employees and helps recruit additional talent.

- Expense Redirection: Moving to the cloud shifts capital expenditures to operational expenses, an advantage for many companies, and frees additional cash to spend on higher-value projects.

- Technology Refresh Avoidance: Moving to the cloud avoids investing in soon-to-be obsolete hardware and network infrastructure as well as the task of updating system software and maintenance contracts.

- Instantaneous Scalability: The cloud allows a company to add storage or compute power at will. An organization can also carve out temporary cloud space for testing applications, managing projects or meeting seasonal requirements.

- IT Simplification: Moving to the cloud reduces IT infrastructure complexity and most likely the number of vendors an organization needs to manage. Older systems can be retired and the data from those systems hosted in the cloud.

Bottom Line

The benefits of cloud are clear and compelling for just about every IT shop. But as the cloud space matures, IT leaders need to determine which cloud services will best suit their unique requirements and decide whether to simplify their IT infrastructure or get rid of it altogether.

Action item: CIOs and other IT leaders need to take a portfolio approach and decide which processes and skills to keep, what technology or services to invest in, what to re-write, and what portion of their existing portfolio is either too costly or time consuming to continue to support in-house. CIOs should also consider conducting a value analysis, preferably with existing internal tools (ROI, TCO, workflow, etc.), to determine where to put resources and to assess the go-forward scenario for using cloud services to transform their business.
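As a starting point for the TCO portion of such a value analysis, the sketch below compares cumulative in-house and cloud costs over a planning horizon. The cost categories, field names, and every dollar figure are hypothetical assumptions for illustration, not Wikibon data or vendor pricing, and the model deliberately ignores discounting, tax treatment, and the value of redeployed staff.

```python
from dataclasses import dataclass


@dataclass
class OnPremCosts:
    """Hypothetical in-house cost model (all figures are illustrative)."""
    upfront_capex: float       # initial hardware, network gear, installation
    annual_opex: float         # power, space, support contracts, admin staff
    refresh_every_years: int   # hardware replaced at the end of each cycle
    refresh_capex: float       # cost of each hardware refresh


@dataclass
class CloudCosts:
    """Hypothetical cloud cost model (not real vendor pricing)."""
    monthly_fee: float         # subscription and usage charges
    migration_one_time: float  # one-time cost of moving workloads


def on_prem_tco(c: OnPremCosts, years: int) -> float:
    # Count refreshes that fall due before the horizon ends
    # (a refresh at the very end of the final year is not counted).
    refreshes = (years - 1) // c.refresh_every_years
    return c.upfront_capex + c.annual_opex * years + c.refresh_capex * refreshes


def cloud_tco(c: CloudCosts, years: int) -> float:
    # Cloud spend is operational: a one-time migration plus recurring fees.
    return c.migration_one_time + c.monthly_fee * 12 * years


# Illustrative inputs only; substitute figures from your own internal tools.
on_prem = OnPremCosts(upfront_capex=500_000.0, annual_opex=150_000.0,
                      refresh_every_years=3, refresh_capex=400_000.0)
cloud = CloudCosts(monthly_fee=20_000.0, migration_one_time=100_000.0)

horizon = 5
print(f"{horizon}-year on-prem TCO: ${on_prem_tco(on_prem, horizon):,.0f}")  # → $1,650,000
print(f"{horizon}-year cloud TCO:   ${cloud_tco(cloud, horizon):,.0f}")      # → $1,300,000
```

Keeping the two cost models separate makes it easy to vary one assumption at a time (for example, the refresh cycle or the monthly fee) and see where the break-even point moves.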

Weighing the Costs and Benefits of Big Data in the Cloud

The public cloud is gaining traction as a popular platform on which enterprise developers and data scientists can explore novel Big Data use cases and perform small proofs-of-concept. These often involve relatively small subsets of data extracted from enterprise-owned data sets, and projects are often brought back in-house when they move to production-level status.

This occurs for a number of reasons, from data security and privacy concerns to data movement and network constraints. By bringing Big Data projects back into corporate data centers, however, enterprise developers and data scientists are forgoing one of the major benefits of the cloud with respect to data-centric applications and analytics: simplified and cost-effective access to third-party data.

Significantly more value can be realized from Big Data projects when internal data sets, such as customer transactional data, are merged with third-party data; analyzing the combined data can reveal insights that internal data alone cannot. By bringing these data sources into the equation, business analysts and data scientists are more likely to discover game-changing insights that positively impact the bottom line.

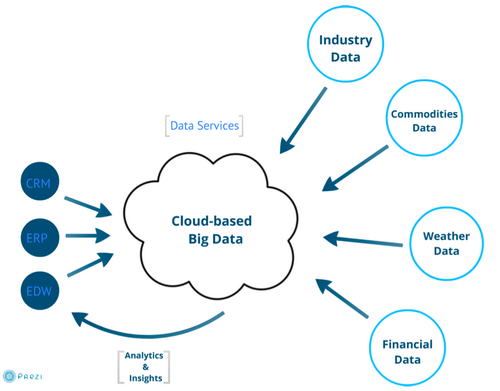

By definition, third-party data is created and stored outside any given enterprise’s data center. Social media data, such as Facebook “likes”, Tweets and Pinterest comments, gets the lion’s share of attention, but third-party data sets such as financial markets data, weather data, and aggregated industry-specific data are just as valuable or more so. Because these data sets “live” in the cloud to begin with, it sometimes makes more economic sense to access and integrate them with internal data via cloud-based Big Data deployments.

Put another way: It costs significantly less money and takes a lot less time to move a few terabytes of internal structured data from a corporate data center to the cloud than it does to move several petabytes of multi-structured data from the cloud to a corporate data center. The resulting high-value analytics and insights can then be brought back into corporate data centers.
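To put rough numbers on that asymmetry, the sketch below computes idealized transfer times over a single network link. The 3 TB and 3 PB volumes and the 1 Gbit/s link are illustrative assumptions standing in for "a few terabytes" and "several petabytes"; the calculation ignores compression, parallel links, physical disk shipment, and per-gigabyte transfer fees.

```python
def transfer_time_seconds(data_bytes: float, link_bits_per_sec: float,
                          efficiency: float = 1.0) -> float:
    """Idealized time to move data_bytes over one link.

    efficiency < 1.0 is an assumed factor for protocol overhead and
    contention; real-world throughput varies widely.
    """
    if link_bits_per_sec <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and 0 < efficiency <= 1")
    # Convert bytes to bits, then divide by the effective bit rate.
    return data_bytes * 8 / (link_bits_per_sec * efficiency)


TB = 10**12          # decimal terabyte, in bytes
PB = 10**15          # decimal petabyte, in bytes
GBIT_PER_S = 10**9   # 1 gigabit per second

few_tb = transfer_time_seconds(3 * TB, 1 * GBIT_PER_S)
several_pb = transfer_time_seconds(3 * PB, 1 * GBIT_PER_S)

print(f"3 TB over 1 Gbit/s: {few_tb / 3600:.1f} hours")      # → 6.7 hours
print(f"3 PB over 1 Gbit/s: {several_pb / 86400:.0f} days")  # → 278 days
```

Because transfer time scales linearly with volume, the petabyte case takes 1,000 times longer on the same link, which is why moving the smaller internal data set to where the third-party data already lives is usually the cheaper direction.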

Further, cloud service providers and data brokers have begun offering packaged data services that make it relatively simple to access and integrate new data sets with cloud-based Big Data projects (see Figure 1).

CIOs must still seriously consider the security and privacy implications of performing Big Data analytics in the public cloud. In particular, when third-party data is brought to bear in Big Data projects, the resulting analysis and insights often take the form of new data sets that are themselves significantly more sensitive than what came before. (Consider the recent controversy over research from the University of Cambridge that illustrated how traits such as sexual orientation can be determined by analyzing seemingly unrelated Facebook likes.)

Further, some enterprises for which Big Data analytics serves as a primary source of competitive differentiation (and this will be the case at more and more enterprises across vertical industries as Big Data projects mature) will likely determine that the benefits of investing in and developing an internal Big Data core competency (both technology and people) outweigh the benefits of outsourcing to the cloud.

Action item: CIOs should think seriously about deploying production-level Big Data projects in the public cloud when significant amounts of third-party data are involved. Perform a thorough cost-benefit analysis that takes into account the time and financial costs of identifying, integrating, and analyzing third-party data in both internal and cloud-based Big Data deployments. Do not, however, overlook the security and privacy implications of Big Data in the cloud, and push cloud service providers to document their security policies and capabilities in detail in order to de-risk cloud deployments.