Research done in collaboration with Stuart Miniman


Introduction

The release of the first beta of VMware’s VSAN product has spurred a surge of interest in the hyperconverged infrastructure space. VSAN is VMware’s software solution, which converts server-based storage into a Virtual SAN and is intended to replace traditional networked storage arrays. Hyperconverged infrastructure takes an appliance-based approach to convergence using, in general, commodity x86-based hardware and internal storage rather than traditional storage array architectures. Hyperconverged appliances are purpose-built hardware devices.

Although VSAN alone does not constitute hyperconvergence, it is the software-defined storage component of an overall virtual environment that, taken as a whole, does converge compute and storage into a single solution. This is why VSAN is of particular interest to companies such as Scale Computing, Nutanix, and SimpliVity. These three companies have planted their flags firmly in the convergence market and have made waves with their ability to simplify data center architecture at prices far lower than those of more established players that provide single-resource solutions. This paper compares and contrasts VSAN with these technologies. This high-growth area can expect even more attention as VMware releases VSAN into the marketplace.

All of these solutions are designed to simplify the data center. They accomplish this by leveraging software (and, in most cases, flash) to bring storage close to the compute resource, eliminating the standalone storage arrays that currently occupy much of the data center. Rather than use an expensive, proprietary monolith (the SAN), these solutions aim to harness less expensive but very capable hardware across all of the hosts in the cluster and manage that storage with a robust software layer.

VMware VSAN

As of this writing, VMware VSAN remains in beta and is subject to some beta limitations that are expected to be lifted before the product enters general availability.

For the companies that operate in the hyperconverged space, VMware’s VSAN is a major validation of their direction as they combine storage and compute into ever-smaller appliances designed to tame what has become the data center beast. Despite having "SAN" in its name, calling VSAN a SAN is a misnomer. As with all the products in this space, the storage component is made up of commodity storage devices brought together as a software-based storage array. In VSAN, the individual hard drives and flash devices (SSD or PCIe) present in vSphere hosts form the basis of this storage array, as long as the hardware is on VMware’s hardware compatibility list. Each host that runs virtual machines also becomes a node in the storage pool. Because of its VMware-centric nature and the fact that VSAN doesn’t present typical storage constructs such as LUNs and volumes, some describe it as a VMDK storage server.

Ease of use

Ease of use is one of the principles of the software-defined data center, and here VSAN doesn’t disappoint. To use VSAN, an administrator needs only to enable the service at the cluster level, a task handled through vCenter. Note that, while VSAN is easy to enable, it is not intended to be free for existing vSphere users, and pricing has yet to be announced. CIOs are increasingly interested in solutions that help tame what has, in many cases, become an IT beast. VSAN’s simplicity, along with its integration with an already familiar data center tool, vSphere, will make it an ideal choice for those looking for ways to simplify. On the cost front, VMware claims that VSAN can support the same number of VDI endpoints as an all-flash array at 25% of the overall cost. This is primarily because VSAN uses a small number of solid state disks to accelerate a much less expensive hard disk tier, whereas an all-flash array is built entirely from expensive flash. Further, VSAN is a software-only solution, so customers pay only for the software itself plus the solid state disks and hard disks they select. With an all-flash array, the customer pays for the chassis, the controllers, and the power supplies, as well as a full complement of solid state disks.

Scalability

In beta, VSAN clusters include three to eight nodes. At least three nodes are required to establish baseline data protection. One can surmise that the eight-node limit during beta is a restriction designed to limit use cases for testing. The production version is expected to support up to 32 nodes, the maximum size of a vSphere cluster, but at this point that is just an assumption. Even with an eight-node limit, today’s large disks allow organizations to build an array with significant capacity. Since VSAN has yet to exit beta, it’s still uncertain whether the eight-node limit will be lifted. Even if it isn’t, VSAN is an option that SMB and midmarket CIOs should consider in their planning. Depending on capacity needs, enterprise CIOs may also find utility in an eight-node VSAN but will likely be more interested in the potential to scale beyond this limit.

Different disks, different purposes

In the world of VSAN, different kinds of disks are used for different purposes. Solid state disks serve as a distributed read cache and write-back buffer with a fixed split: 70% of SSD capacity is used as read cache, and the remaining 30% serves write caching needs. To protect against data loss, writes can be mirrored between solid state disks in different nodes. It’s important to note that solid state storage in VSAN does not add to the cluster’s storage capacity; it is used only for caching.
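The fixed split is simple arithmetic. A minimal Python sketch, assuming a hypothetical 400 GB SSD in one node (the function name is illustrative, not a VMware tool), shows how much of the device lands in the read cache versus the write buffer:

```python
# Fixed read-cache share of each SSD, as described for the VSAN beta
READ_CACHE_FRACTION = 0.70


def split_ssd_capacity(ssd_gb: float) -> tuple[float, float]:
    """Return (read_cache_gb, write_buffer_gb) for one SSD under the fixed 70/30 split."""
    read_cache_gb = ssd_gb * READ_CACHE_FRACTION
    write_buffer_gb = ssd_gb - read_cache_gb  # the remainder serves write caching
    return read_cache_gb, write_buffer_gb


# Hypothetical example: a 400 GB SSD in one node
read_gb, write_gb = split_ssd_capacity(400)
print(f"read cache: {read_gb:.0f} GB, write buffer: {write_gb:.0f} GB")
```

Because the ratio is fixed, sizing the SSD is the only lever an administrator has over how much read cache and write buffer a node gets.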

Here’s specifically how it works. Modern virtual environments suffer from what has become known as the “I/O blender”: the I/O streams of many virtual machines are interleaved into a largely random mix, which makes it very difficult for storage arrays to keep pace with the myriad I/O patterns hitting the controller. In VSAN, the flash tier caches writes and converts them into more sequential I/O patterns that can be written to the hard disk tier much more efficiently, thus improving performance.
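The effect of this write buffering can be illustrated with a toy model. This is a sketch of the general write-back technique, not VMware’s implementation, and the class and method names are hypothetical. Writes arrive in random logical block address (LBA) order, are absorbed by a flash buffer, and are destaged to the hard disk tier in ascending LBA order so the disks see a mostly sequential stream:

```python
class WriteBuffer:
    """Toy flash write buffer: absorbs random writes, destages them in LBA order."""

    def __init__(self, capacity_blocks: int) -> None:
        self.capacity_blocks = capacity_blocks
        self.pending: dict[int, bytes] = {}  # LBA -> most recent data for that block

    def write(self, lba: int, data: bytes) -> list[int]:
        """Buffer one block write; if the buffer is now full, destage it."""
        self.pending[lba] = data  # rewriting a buffered LBA just replaces it
        if len(self.pending) >= self.capacity_blocks:
            return self.destage()
        return []

    def destage(self) -> list[int]:
        """Empty the buffer, returning LBAs in the ascending order they hit the disks."""
        order = sorted(self.pending)
        self.pending.clear()
        return order


# Random-looking writes from several VMs, as produced by the "I/O blender"
buf = WriteBuffer(capacity_blocks=4)
for lba in (90, 10, 50, 20):
    flushed = buf.write(lba, b"x")
print(flushed)  # [10, 20, 50, 90]
```

A rewrite of a block that is still in the buffer never reaches the hard disks at all, which is a further benefit of write-back caching.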

VSAN facts

In addition to using a fixed read/write caching ratio, VSAN carries with it a number of other requirements and limitations. Each storage node can have up to eight disks. With VSAN, the use of both kinds of disks — hard disks and solid state disks — is required. As such, there must be at least one hard disk and at least one solid state disk in each node.
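These minimum configuration rules lend themselves to a simple pre-deployment check. A minimal sketch, assuming the beta limits described in this paper (three to eight nodes, at least one SSD and one hard disk per node); the `Node` type, `validate_cluster` function, and host names are hypothetical and not part of any VMware tool:

```python
from dataclasses import dataclass

MIN_NODES = 3       # minimum for baseline data protection
BETA_MAX_NODES = 8  # beta-era limit; expected to change at general availability


@dataclass
class Node:
    name: str
    ssd_count: int
    hdd_count: int


def validate_cluster(nodes: list[Node]) -> list[str]:
    """Return a list of rule violations; an empty list means the layout passes."""
    problems = []
    if not MIN_NODES <= len(nodes) <= BETA_MAX_NODES:
        problems.append(
            f"cluster has {len(nodes)} nodes; {MIN_NODES}-{BETA_MAX_NODES} required"
        )
    for node in nodes:
        if node.ssd_count < 1:
            problems.append(f"{node.name}: at least one SSD is required")
        if node.hdd_count < 1:
            problems.append(f"{node.name}: at least one hard disk is required")
    return problems


cluster = [Node("esx01", 1, 4), Node("esx02", 1, 4), Node("esx03", 0, 5)]
for problem in validate_cluster(cluster):
    print(problem)  # esx03: at least one SSD is required
```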

As one would expect, VSAN, by virtue of its creator and the fact that it is tied deeply into the vSphere kernel, is a vSphere-only product. It is not and probably never will be a hypervisor-agnostic product. Given vSphere’s market position for now and the foreseeable future, this will not be a serious limitation of the solution.

VSAN’s tight coupling with vSphere brings some benefits but also carries some risk. Any time a service tightly bound to the kernel experiences a problem, the entire host may be negatively affected. Bear in mind that VSAN is still a beta product undergoing testing, so not all possible use cases and interactions have been tested yet.

VSAN limitations

VSAN also carries with it what could be considered some technical limitations. For example, data is statically assigned to a node. In the current incarnation of VSAN, data placement occurs when data is initially written, after which the data doesn’t move. As other objects, such as virtual machines, move between nodes, this can become a performance limitation, because data may no longer be local to the node on which it is actively being used. This adds latency to storage operations and additional load on the network. As clusters grow, data locality becomes increasingly important for maintaining good performance.

From a pure features perspective, VSAN doesn’t add much beyond storage aggregation, tiering, and policies; it relies on vCenter and vSphere to provide the bulk of its data services, such as snapshots, linked clones, replication, vSphere High Availability, and the Distributed Resource Scheduler.

In this first iteration, VSAN is very much a way to aggregate host-based storage and divide it into a storage tier and a caching tier. Dividing storage in this way gives customers better storage performance than hard disks alone. As in some hybrid storage systems, VSAN can accelerate the I/O operations destined for the hard disk tier, providing many of the benefits of flash storage without all of the costs. This kind of configuration is particularly well suited to VDI scenarios with a high degree of duplication among virtual machines, where the caching layer can provide maximum benefit. Similarly, organizations that run many virtual machines with the same operating system can achieve comparable performance gains. However, in organizations that gain little from cached data (those with highly heterogeneous, very mixed workloads), the overall benefit would be much smaller.

There are already signs that VMware is working on eliminating some of VSAN’s limitations. At the time the beta was announced, a server could only support six disks per disk group and a maximum of five disk groups. As of the end of November, 2013, the latest beta refresh of VSAN increased the number of disks in a group to seven, and it is widely expected that VMware will increase the number of hosts that can take part in a VSAN cluster, which is currently limited to eight.

VSAN policies

With regard to policies, there are three important terms to understand in VSAN:

- Capabilities: These are things that VSAN can offer, such as performance or capacity (e.g., flash read cache reservation percentage).

- Policy: A combination of capabilities that can be applied to an object.

- Object: An object is usually a VMDK file and any associated snapshots.

So, with VSAN, an administrator can, for example, apply a policy based on flash read cache to an individual VMDK file. As such, VSAN can provide an organization with fine-grained policies to make sure that mission-critical systems get the resources they need.
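The relationship among capabilities, policies, and objects can be modeled as plain data structures. This is a hypothetical sketch for illustration only; the type names, the `apply_policy` helper, and the `flash_read_cache_reservation_pct` key are assumptions, not VMware’s API:

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Capability:
    """Something the storage layer can offer, e.g. a flash read cache reservation."""
    name: str
    value: float


@dataclass
class Policy:
    """A named combination of capabilities that can be applied to an object."""
    name: str
    capabilities: tuple[Capability, ...]

    def get(self, capability_name: str) -> Optional[float]:
        """Look up one capability's value, or None if the policy doesn't set it."""
        for cap in self.capabilities:
            if cap.name == capability_name:
                return cap.value
        return None


@dataclass
class StorageObject:
    """Usually a VMDK file together with any associated snapshots."""
    vmdk: str
    snapshots: list[str] = field(default_factory=list)
    policy: Optional[Policy] = None


def apply_policy(obj: StorageObject, policy: Policy) -> None:
    """Attach a policy to a single object (here, one VMDK)."""
    obj.policy = policy


gold = Policy("sql-gold", (Capability("flash_read_cache_reservation_pct", 10.0),))
db_disk = StorageObject("sql01/sql01_data.vmdk")
apply_policy(db_disk, gold)
print(db_disk.policy.get("flash_read_cache_reservation_pct"))  # 10.0
```

Because the policy attaches to the object rather than to a LUN or volume, two VMDKs belonging to the same virtual machine can carry different policies.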

VSAN: The bottom line

As mentioned, VSAN is well-suited to VDI scenarios, but given the fact that it’s being built into the kernel and that vSphere supports so many general purpose workloads, VSAN will also be more than suitable for most mainstream server virtualization workloads and will likely be a contender for other I/O intensive needs as well. However, given that VSAN is just a software-only feature bundled with vSphere, it seems that there would be ample opportunity for customers to discover hardware combinations that either don’t work or that don’t meet expectations.

VSAN is very much a “build your own” approach to the storage layer and will, theoretically, work with any hardware on VMware’s Hardware Compatibility List. However, not every hardware combination is tested and validated. This will be one of the primary drawbacks of VSAN and one of the biggest draws of alternative solutions, including Scale Computing, Nutanix, and SimpliVity, which deliver predictable performance on standard hardware configurations.

Scale Computing

Unlike VSAN, Scale Computing, Nutanix, and SimpliVity sell solutions that combine hardware and software. While their software could, in theory, be sold on its own, bundling it with pre-architected hardware into hyperconverged appliances allows these vendors to provide better support and to be sure that the total package delivers a predictable level of performance and capacity. Supporting a predefined hardware set is certainly much easier than supporting whatever customers decide to pull off the shelf. It’s also easier for the customer, since it avoids the need to choose, test, and optimize the environment, work that would otherwise lengthen time-to-value. A “roll your own” environment can be considered “undifferentiated heavy lifting” because of the amount of work it takes to make sure everything is operating as expected.

Ease of use and time to value

As is the case with all of the solutions in this space, Scale Computing’s solution is an integrated appliance that features ease-of-use capabilities claimed by all hyperconverged solutions. In fact, simplicity and ease of use are defining characteristics of hyperconverged appliances.

In a traditional environment, deploying even a three- or four-node highly available cluster can take days. Between establishing the shared storage environment and then configuring each node individually, full deployment can be a long undertaking. Further, such environments (ones that include a SAN) are often quite expensive, and the need to manage compute and storage resources separately adds to both the cost and the complexity of the solution.

With Scale Computing's HC3 and HC3x platforms, an administrator needs only to rack and cable each appliance, assign IP addresses to each node and initialize the system. Because the hardware is already present and the management layer handles the rest, this is the entirety of the initial configuration, which can be accomplished in just an hour. When considering the increasing importance of time-to-value — the amount of time that it takes a new solution to provide direct value to an organization — this decrease in deployment time is important. It also addresses the “simplicity” angle that is important to CIOs and businesses.

This initial configuration also provides an environment in which any deployed virtual machines are highly available. Because the storage layer is a fully integrated component of the overall system, the administrator doesn’t need to create clusters, data stores or any of the other structures that have become common in today’s virtual environments. The software-based availability mechanisms that run the cluster keep virtual machines running regardless of disk or hardware failures.

Data center costs and economies of (small) scale

In addition to enabling simplicity even for complex scenarios, hyperconverged infrastructure appliances are also supposed to improve data center economics. Beyond simply choosing commodity x86-based hardware, Scale has made some other decisions intended to tip the cost benefit in its favor and to differentiate itself from the competition. Specifically, Scale has adopted the open source KVM as the sole underlying hypervisor for its platform and has customized the surrounding tools to give KVM the enterprise-class features most customers need. Scale didn’t choose KVM just because of cost, though. The company chose KVM because it runs well on top of the platform and provides a solid workload foundation. KVM also eliminates the need for a VSA; combined with Scale’s object-based storage layer, this gives the storage layer direct access to the hypervisor.

The hypervisor and related services

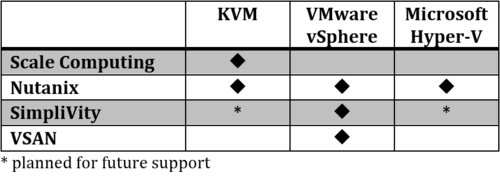

Scale Computing considers the selection of KVM a key differentiator for this virtual platform: coupled with the management layer Scale has built, KVM provides the full breadth of features customers need, without the licensing costs found in other solutions. This allows Scale to eliminate licensing fees and include all features in its pricing. The other players in the space have all opted to support commercial hypervisors, although Nutanix supports KVM as well. Scale’s selection of KVM will be of most interest to organizations that are particularly price-sensitive, don’t have a substantial investment in other hypervisors, and don’t need the solutions found in the mainstream ecosystem. Table 1 provides a look at the hypervisors supported by each of these platforms.

Data center economics: Step size

When it comes to hyperconvergence, one of the key considerations faced by organizations is the overall “step”: what it takes to add infrastructure to an existing data center. The larger the incremental step, the more likely it is that an organization expanding its data center will be left with excess resources that may take a long time to grow into. When it comes to providing a step size that adds noticeable resources without adding too much, Scale wins the day. The largest unit, the HC3x, adds just a single six-core processor, 64 GB of RAM, and between 2.4 TB and 4.8 TB of raw disk space to a cluster. This small step carries a small incremental unit cost as organizations seek to expand. Given Scale’s target market, this step size is well considered.
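The idle-capacity effect of step size is easy to quantify. A minimal sketch, assuming a hypothetical cluster with 192 GB of RAM that must grow to 300 GB; it compares a 64 GB per-node step (the HC3x figure above) with a hypothetical 256 GB step, and the function name is illustrative:

```python
import math


def nodes_to_add(current: float, needed: float, step: float) -> tuple[int, float]:
    """Return (units to add, unused headroom) to reach `needed` in fixed increments."""
    shortfall = max(0.0, needed - current)
    units = math.ceil(shortfall / step)  # partial units can't be bought
    headroom = current + units * step - needed
    return units, headroom


# Hypothetical: a cluster with 192 GB of RAM must grow to 300 GB
for step_gb in (64, 256):
    units, spare = nodes_to_add(192, 300, step_gb)
    print(f"{step_gb} GB step: add {units} unit(s), {spare:.0f} GB left idle")
```

The smaller step needs more purchases but leaves 20 GB idle instead of 148 GB, which is the "growing into it" cost the paragraph describes. The same calculation applies to cores or raw disk capacity.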

This also makes the Scale HC3 and HC3x among the most affordable appliances in terms of initial acquisition. Organizations can buy a three-node starter system that includes:

- 12 processing cores,

- 96 GB of RAM,

- 6 TB to 24 TB of raw capacity.

Ongoing implementation and management, in the interest of simplicity and supportability, is another key differentiator for Scale’s solution. Regardless of platform, VMware and Microsoft environments still require a lot of planning and configuration. From an ease-of-use standpoint, Scale believes it has a competitive edge because its system was designed with simplicity and high availability built in rather than added as an afterthought. Further, the company has developed its own patented technology for the administrative systems that support KVM and its hardware.

Data protection

In any environment, data protection is a critical function. In a Scale environment, the management layer uses a Storage Protocol Abstraction Layer, which helps in this effort. This layer packages all file or block I/O requests into 256 KB chunks wherever possible; any remainder is allocated in 8 KB chunks. Two copies of each chunk are then written to separate locations in the storage pool, providing data protection roughly equivalent to a RAID 10 configuration. When a disk fails, the management layer detects the failure and routes I/O operations to a secondary copy of the data. In addition, the system proactively regenerates another copy of any lost data to maintain a high level of protection. If an entire node is lost, virtual machines are automatically restarted on other nodes using the secondary copies of the data.
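The chunking and two-copy scheme can be sketched as follows. The 256 KB and 8 KB sizes come from the description above; rounding the remainder up to whole 8 KB chunks, choosing locations round-robin, and always placing the second copy on a different node are assumptions, and the function names are hypothetical rather than Scale’s code:

```python
import math

LARGE_CHUNK = 256 * 1024  # preferred chunk size
SMALL_CHUNK = 8 * 1024    # granularity for remainders


def chunk_sizes(request_bytes: int) -> list[int]:
    """Split an I/O request into 256 KB chunks, with any remainder in 8 KB chunks."""
    large_count, remainder = divmod(request_bytes, LARGE_CHUNK)
    small_count = math.ceil(remainder / SMALL_CHUNK)  # assumption: round up
    return [LARGE_CHUNK] * large_count + [SMALL_CHUNK] * small_count


def place_two_copies(
    chunk_id: int, disks: list[tuple[str, str]]
) -> tuple[tuple[str, str], tuple[str, str]]:
    """Choose primary and secondary (node, disk) locations on different nodes."""
    primary = disks[chunk_id % len(disks)]
    for offset in range(1, len(disks)):
        candidate = disks[(chunk_id + offset) % len(disks)]
        if candidate[0] != primary[0]:  # never put both copies on one node
            return primary, candidate
    raise ValueError("two-copy protection needs disks on at least two nodes")


sizes = chunk_sizes(600 * 1024)
print(sizes.count(LARGE_CHUNK), "x 256 KB +", sizes.count(SMALL_CHUNK), "x 8 KB")

pool = [("node1", "disk1"), ("node1", "disk2"), ("node2", "disk1"), ("node3", "disk1")]
print(place_two_copies(0, pool))  # (('node1', 'disk1'), ('node2', 'disk1'))
```

Keeping the copies on different nodes is what allows virtual machines to restart elsewhere from the secondary copy when a whole node is lost.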

Well-thought-out performance

To improve overall cluster performance, Scale implements a technique known as wide striping, in which I/O is aggregated across all of the disks in the cluster. In this way, the cluster doesn’t have to wait for an individual disk to complete a large operation. Scale also includes a per-VM feature known as VIRTIO, which enables multiple small write operations to be cached before committing them as a single, larger write operation, thereby reducing overall disk I/O.
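Both ideas in this paragraph, wide striping and coalescing small writes into larger ones, can be illustrated in a few lines. This is a generic sketch of the two techniques under simple assumptions (round-robin striping, writes described as byte offset and length), not Scale’s implementation; the disk names and function names are hypothetical:

```python
from collections import Counter


def stripe(num_chunks: int, disks: list[str]) -> list[str]:
    """Wide striping: assign consecutive chunks round-robin across every disk."""
    return [disks[i % len(disks)] for i in range(num_chunks)]


def coalesce(writes: list[tuple[int, int]]) -> list[tuple[int, int]]:
    """Merge (offset, length) writes that overlap or touch into larger writes."""
    merged: list[tuple[int, int]] = []
    for offset, length in sorted(writes):
        if merged and offset <= merged[-1][0] + merged[-1][1]:
            start, prev_length = merged[-1]
            end = max(start + prev_length, offset + length)
            merged[-1] = (start, end - start)
        else:
            merged.append((offset, length))
    return merged


# 8 nodes x 4 disks = 32 disks; a 64-chunk operation touches every disk twice
disks = [f"node{n}-disk{d}" for n in range(8) for d in range(4)]
print(set(Counter(stripe(64, disks)).values()))  # {2}

# Four small 4 KB writes become two larger writes
print(coalesce([(8192, 4096), (0, 4096), (4096, 4096), (20480, 4096)]))
```

With striping, a large operation is limited by the slowest of many disks doing a little work each, rather than by one disk doing all of it.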

Scale uses a copy-on-write methodology for snapshots in order to reduce the amount of space they consume. In this methodology, the snapshot holds only the changes that have been made since the snapshot was taken.
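A copy-on-write snapshot can be sketched in a few lines. In this hypothetical model (not Scale’s code), taking a snapshot costs nothing; the first time a block is overwritten afterward, its old contents are saved into the snapshot, so each snapshot stores only the blocks that have changed since it was taken:

```python
from typing import Optional


class Volume:
    """Toy block volume with copy-on-write snapshots."""

    def __init__(self, blocks: dict[int, bytes]) -> None:
        self.blocks = dict(blocks)  # live data: block -> contents
        self.snapshots: list[dict[int, Optional[bytes]]] = []

    def take_snapshot(self) -> int:
        """Create a snapshot; it starts empty because nothing has changed yet."""
        self.snapshots.append({})
        return len(self.snapshots) - 1

    def write(self, block: int, data: bytes) -> None:
        """Overwrite a live block, first preserving its old contents where needed."""
        for snap in self.snapshots:
            if block not in snap:  # first change since this snapshot
                snap[block] = self.blocks.get(block)
        self.blocks[block] = data

    def read_snapshot(self, snap_id: int, block: int) -> Optional[bytes]:
        """Read a block as it was when the snapshot was taken."""
        snap = self.snapshots[snap_id]
        if block in snap:
            return snap[block]  # block changed: use the preserved copy
        return self.blocks.get(block)  # unchanged: share the live block


vol = Volume({0: b"boot", 1: b"data-v1"})
snap = vol.take_snapshot()
vol.write(1, b"data-v2")
print(vol.read_snapshot(snap, 1), vol.blocks[1])  # b'data-v1' b'data-v2'
print(len(vol.snapshots[snap]))  # 1
```

Unchanged blocks are shared between the snapshot and the live volume, which is where the space savings come from.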

Small scale, bigger opportunities

Perhaps the most controversial element in Scale’s architecture is the lack of flash, which is a cornerstone element for the other solutions in this space. The flash tier in those solutions provides the architecture with a way to support even the most I/O intensive applications while still supporting capacity needs through the use of hard drives.

However, Scale’s solution is not targeted at the large enterprise; topping out at eight nodes and up to 200 virtual machines per cluster, Scale is very much aimed at the SMB and small midmarket segments, which may not need expensive solid state storage in the infrastructure. A fully equipped HC3x cluster would have eight nodes, each with up to four 15K RPM SAS disks, for a total of 32 such disks. Assuming that each 15K RPM SAS disk provides about 200 IOPS, a full cluster delivers roughly 6,400 IOPS, which is sufficient to meet the needs of many SMBs. Scale’s system uses a modular architecture that enables feature additions as customers demand new capabilities; it is this modularity that will allow Scale to add flash to the system if customer demand requires it.
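The estimate is a straightforward product of node count, disks per node, and per-disk IOPS:

```latex
\text{IOPS}_{\text{cluster}} \approx N_{\text{nodes}} \times d_{\text{disks/node}} \times \text{IOPS}_{\text{disk}}
  = 8 \times 4 \times 200 = 6{,}400
```

This is a raw aggregate. Because Scale writes two copies of each chunk, every front-end write consumes two back-end disk writes, so sustained write throughput would be lower than this figure.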

Scale: The bottom line

Scale’s HC3 and HC3x platforms are best suited to SMBs with general purpose server virtualization needs that don’t require high-I/O applications, and to environments that require little or no maintenance. Scale is also well suited to remote office/branch office deployments and to departmental units within larger organizations. Scale is very much the “value play” among the solutions described here. That should not be viewed as a negative against the product in any way; Scale provides a very capable platform.

Nutanix

Nutanix took the hyperconvergence market by storm and has been releasing regular product updates to extend its portfolio and expand its reach to a wider swath of the market. Nutanix sells solutions based on commodity server appliances. Each appliance contains one to four independent server nodes. A fully fault-tolerant cluster can be built with three or more of these nodes.

Solution breadth and depth

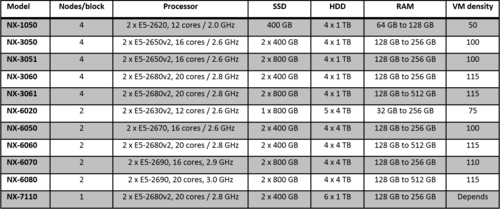

At present, Nutanix offers the widest product selection in the space. As the company continues to grow, it is providing different solutions to help customers best match individual needs and step size, so that growth is economical as customers add nodes and appliances. Table 2 outlines Nutanix's product lineup as of this writing.

Perhaps the biggest challenge to having such a broad product selection is that it can be a bit daunting for customers to choose how to grow. The ability to differentiate is a positive when it comes to step size but may come at the cost of simplicity. However, the broad product line, particularly as Nutanix expands it, also allows the company to address additional market needs. When Nutanix initially hit the market, it was viewed as an excellent virtual desktop solution, and that use case was often associated with the company name. As it grows, however, the company is able to support needs beyond these VDI roots and the lineup is more than capable of handling general purpose server virtualization needs. In addition, Nutanix has released a Hadoop Reference Architecture, demonstrating the appliance’s ability to support big data analysis.

NDFS and VSA: Scale out

In the hyperconverged space, scale-out is king and is the only way solutions grow. Nutanix’s scaling method uses what amounts to a virtual storage appliance (VSA): a virtual machine that runs on each node and presents to that host an aggregation of the resources available in the cluster. This VSA approach is in stark contrast to VSAN’s kernel integration approach, which isn’t really an option for Nutanix because it does not own the hypervisor kernel. However, while the VSA approach may introduce some performance overhead, the loose coupling it provides, combined with data replication, ensures that a failed VSA doesn’t leave data inaccessible.

Nutanix’s VSA is really more than just a VSA. It also intelligently leverages the SSD-based storage available in each node as a distributed global cache, giving the user a storage layer composed of both solid state and rotational storage that can support tens of thousands of IOPS. The SSD layer also helps absorb the VSA component’s I/O overhead.

In some circles, this VSA approach has been derided as Nutanix attempting to just hide the SAN inside the box rather than replacing it. However, this does not seem to be a wholly accurate statement. The user is never exposed to the storage component, and the underlying file system takes care of storage operations, which are shielded from the user. Frankly, on the outcomes side of the technology house, Nutanix achieves the same goals that are achieved with VSAN, Scale, and SimpliVity: easier administration and lowered costs.

Replica-based data protection

As is the case with Scale, Nutanix takes a replica-based approach to data protection and the whole system is underpinned by Nutanix’s own distributed file system, dubbed NDFS. NDFS enables the system to scale storage and other resources in a linear fashion as new nodes are added to the system.

Data locality as a performance differentiator

Data locality is an important component in Nutanix’s vision. To keep data local with minimal impact, Nutanix has implemented the ability for storage to move lazily between nodes. In short, as a virtual machine moves, its storage files will eventually move, but blocks are moved only as they are accessed rather than just moving all at once. In using this method, the idea of data locality is maintained, but in a way that doesn’t place a massive load on the network by performing a full storage migration operation.
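The difference between the static placement described for the VSAN beta and this lazy approach can be sketched with a toy model. The class below is hypothetical and ignores replicas and background migration; it only counts how many reads have to cross the network after a virtual machine moves:

```python
class BlockPlacement:
    """Toy model contrasting static placement with lazy, access-driven locality."""

    def __init__(self, block_owner: dict[int, str], lazy: bool) -> None:
        self.block_owner = dict(block_owner)  # block id -> node holding the block
        self.lazy = lazy
        self.remote_reads = 0

    def read(self, vm_node: str, block: int) -> None:
        """Read a block on behalf of a VM running on vm_node."""
        if self.block_owner[block] != vm_node:
            self.remote_reads += 1  # this read crosses the network
            if self.lazy:
                # Localize only the block that was actually touched
                self.block_owner[block] = vm_node


# A VM whose four blocks live on node-a has just moved to node-b
initial = {block: "node-a" for block in range(4)}
access_pattern = [0, 1, 0, 1, 0]

static = BlockPlacement(initial, lazy=False)
lazy = BlockPlacement(initial, lazy=True)
for block in access_pattern:
    static.read("node-b", block)
    lazy.read("node-b", block)

print(static.remote_reads, lazy.remote_reads)  # 5 2
```

Blocks 2 and 3 are never read after the move, so under the lazy scheme they stay on node-a and generate no network traffic at all, which is the point of avoiding a full storage migration.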

Nutanix: The bottom line

In addition to continuing to provide new products, Nutanix continues to add features to its underlying software. One such feature, deduplication, was added in version 3.5 of the company’s operating system. At present, however, Nutanix’s deduplication services support only the high-performance storage tier; the capacity tier does not yet support deduplication, although this is expected to be addressed in a future OS release. Nutanix has said in the past that it doesn’t add a feature until it has lost enough deals for lack of it; this is its prioritization mechanism. That said, deduplication across the entire system should be a very high priority for Nutanix. Implementing it will eliminate a check mark in the “con” column as customers compare Nutanix with other solutions in this space, most notably SimpliVity.

SimpliVity

VSAN, Scale Computing, and Nutanix are all products that deftly combine storage and compute and present them as a complete infrastructure solution. Each is based on fully commoditized x86 server hardware.

Not all goodness is software

SimpliVity and Nutanix share many characteristics, particularly in addressing the need to simplify the data center, but SimpliVity differs from Nutanix in some very fundamental ways. First, whereas Nutanix is fully software-based, SimpliVity’s magic (its performance and capacity optimization functions) is handled by proprietary, dedicated hardware, the OmniCube Accelerator Card, which is installed in the host to support the rest of SimpliVity’s tools. Customers are not required to use this accelerator card; its functionality can be replicated in software, but with reduced performance. Today, SimpliVity uses this software option (via Amazon Web Services) for remote sites where it may not be feasible to deploy custom hardware.

Hardware as a true commodity

Although SimpliVity provides pre-built hardware (outlined below), one of the company’s differentiators is its willingness to work with different compute platforms than the standard Dell offering on which its products are generally based. At present, this is a service reserved for larger deployments where it makes sense for SimpliVity to test and validate a different platform, but having the ability to choose hardware is one of the baseline principles in the software-defined data center and in software-defined storage. In this way, SimpliVity is ahead of Nutanix and Scale Computing but still not quite on par with VSAN from a pure hardware choice standpoint.

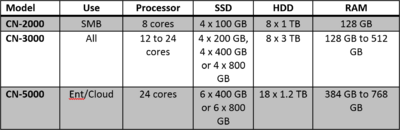

SimpliVity takes a similar approach to the other vendors, but with a smaller set of products than Nutanix and with enough customizability within each product to provide solutions across the full market spectrum. SimpliVity’s product lineup is outlined in Table 3.

The Data Virtualization Engine

SimpliVity’s product — known as OmniCube — is powered by what the company calls the SimpliVity Data Virtualization Engine (DVE). According to SimpliVity, “DVE deduplicates, compresses, and optimizes all data (at inception, in real-time, once and forever), and provides a global framework for storing and managing the resulting fine-grained data elements across all tiers within a single system (DRAM, Flash, HDD), across the data lifecycle phases (primary, backup, WAN, archive), across geographies, data centers and the cloud.”

Although that is one long sentence, it is the basis for SimpliVity’s claim that a single OmniCube appliance can replace more than a dozen discrete data center components, including servers, storage, and specialty appliances such as WAN accelerators.

In addition to the DVE, SimpliVity also runs a virtual machine known as the OmniCube Virtual Controller (SVC), inside which resides all of the intelligence of the system. This SVC component talks directly to the hardware accelerator (the OmniCube Accelerator Card) and handles all of the system’s write functionality. As such, all of the storage across the cluster is virtualized and presented as an aggregated pool, just as should be the case in a software-defined storage system.

Data replication is becoming the norm for data protection

On the data protection front, SimpliVity uses data replication inside the cluster, the same approach used by the other solutions in this space. Data replication is less costly in terms of raw I/O than most RAID levels. So, even though replication substantially reduces the usable capacity in the cluster, disk capacity is less expensive than disk performance, and the tradeoff of using replicas for data protection makes economic sense.
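The tradeoff can be made concrete with rough arithmetic. A sketch assuming two-way replication versus single- and double-parity RAID across eight disks, using the commonly cited random-write penalties (2, 4, and 6 back-end I/Os per front-end write); these are textbook figures, not vendor measurements, and the function names are hypothetical:

```python
# Back-end disk I/Os per front-end random write (commonly cited textbook penalties)
WRITE_PENALTY = {"2 replicas": 2, "RAID 5": 4, "RAID 6": 6}


def usable_fraction(scheme: str, disks: int) -> float:
    """Fraction of raw capacity left for data under each protection scheme."""
    if scheme == "2 replicas":
        return 1 / 2
    if scheme == "RAID 5":
        return (disks - 1) / disks  # one disk's worth of parity
    if scheme == "RAID 6":
        return (disks - 2) / disks  # two disks' worth of parity
    raise ValueError(f"unknown scheme: {scheme}")


def backend_write_iops(scheme: str, frontend_write_iops: int) -> int:
    """Disk I/Os needed to absorb a given rate of front-end random writes."""
    return WRITE_PENALTY[scheme] * frontend_write_iops


for scheme in WRITE_PENALTY:
    print(f"{scheme}: {usable_fraction(scheme, 8):.1%} usable, "
          f"{backend_write_iops(scheme, 1000)} back-end IOPS per 1,000 writes")
```

Replication gives up the most capacity (50% usable versus 87.5% for RAID 5 on eight disks) but needs half the back-end write I/O of RAID 5 and a third of RAID 6, which is the trade the paragraph argues is worth making.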

System wide deduplication

SimpliVity maintains a globally federated cache that tracks deduplicated files and their location. It should be noted that the SimpliVity solution provides full deduplication for both solid state and capacity storage — everything in the data path supports deduplication, putting SimpliVity in the lead (for now) in this feature. This is an important advantage to note for SimpliVity as global deduplication can be a big money saver.
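Deduplication of this kind is typically built on content fingerprints. A minimal sketch of the general technique, assuming SHA-256 fingerprints over whole blocks and a single index shared by every tier; this is not SimpliVity’s DVE, and the class name is hypothetical:

```python
import hashlib


class DedupStore:
    """Toy inline deduplication: one stored copy per unique block fingerprint."""

    def __init__(self) -> None:
        self.blocks: dict[str, bytes] = {}  # fingerprint -> stored data
        self.refcount: dict[str, int] = {}  # fingerprint -> logical references

    def write(self, data: bytes) -> str:
        """Store a block if it is new; either way, return its fingerprint."""
        fingerprint = hashlib.sha256(data).hexdigest()
        if fingerprint not in self.blocks:
            self.blocks[fingerprint] = data  # first occurrence: store it once
        self.refcount[fingerprint] = self.refcount.get(fingerprint, 0) + 1
        return fingerprint

    def read(self, fingerprint: str) -> bytes:
        return self.blocks[fingerprint]


store = DedupStore()
os_block = b"\x00" * 4096  # the same OS block written by three VMs
refs = [store.write(os_block) for _ in range(3)]
refs.append(store.write(b"unique application data"))
print(len(refs), "logical blocks,", len(store.blocks), "physical blocks")
```

Because the index is global, a duplicate is caught no matter which virtual machine or tier writes it, which is why system-wide deduplication saves more than deduplicating a single tier.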

SimpliVity delivers simplicity by making vCenter the center of the appliance universe. The entire system is managed through a vCenter plug-in, giving administrators a familiar context in which to carry out their responsibilities. This is a huge advantage for organizations that have already standardized on vSphere.

Replace storage even for legacy hosts

SimpliVity has taken an approach that enables virtual machines to run inside its own cluster, but the storage component can also be exposed to legacy servers. This allows a great deal of solution flexibility as SimpliVity can supplant a legacy SAN even for environments that maintain both physical and virtual servers.

Just VMware… for now

At this time, SimpliVity is a VMware-only product, like VSAN. The company does plan to support other hypervisors in the future. At present, this shouldn’t be a major concern since VMware continues to enjoy major market share. However, as time goes on, SimpliVity needs to consider support for, at a minimum, Hyper-V, in order to enable customer choice for particular use cases.

SimpliVity: The bottom line

For particularly large organizations that want to run their own hardware but still want the benefits that come with SimpliVity, SimpliVity is the one company among the three hyperconverged vendors here that is willing to go beyond its own hardware, but the deal must be large enough to make the testing worth the cost. For most SMB and midmarket organizations, the SimpliVity-built OmniCube will be more than sufficient for most general purpose workloads as well as for VDI and other I/O-intensive needs. For large enterprises, custom hardware may be an option, but the OmniCube may be more than sufficient there, too.

Summary

CIOs considering products in this evolving market segment should weigh several high-level items. Each of these will help the CIO ensure that:

- A hyperconverged solution best meets the needs of the business.

- The right hyperconverged solution is selected.

Action 1: Make sure application support needs are considered

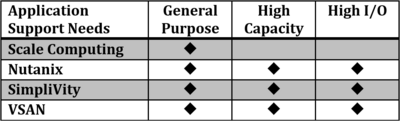

First comes an overall look at the application support landscape in the IT department. For those that are supporting relatively mainstream applications with reasonable I/O requirements, any of the discussed solutions will be a good fit as they are all well suited to general purpose virtualization (see Table 4).

Once the business deviates from this path, though, things start to change. Not all of the solutions presented are suitable for high-I/O applications, particularly as scale becomes a concern. Specifically, Scale’s solution, which, to be fair, is intended to be an SMB solution, will not support high-I/O applications as well as some of the other players mentioned in this paper. This is primarily due to the lack of a solid state storage option in Scale’s architecture. Again, bear in mind that Scale aims to bring simplicity and cost-effectiveness to the data center, and flash adds significant cost.

Action 2: Match solution “step size” with overall needs and assess budget impact

As mentioned, “step size” — the amount of incremental infrastructure necessary to move to the next level — is an important consideration for CIOs considering this Lego-like approach to the data center. A small step size carries lower “sunk costs” while a larger unit carries higher costs but may still be a fit. Here, it really comes down to organization size and overall needs. In terms of step size, from smallest to largest, it goes:

- VSAN: Just add drives.

- Scale Computing: Very small step size, lowest appliance cost.

- SimpliVity: Single appliance.

- Nutanix: Single hardware device with multiple possible nodes.

That said, Nutanix is the most hardware-differentiated of the appliance vendors and has a variety of options from which to choose.

For CIOs considering one of these options, match the step size with your overall needs.

Action 3: Implement solutions that are easy to implement and use

Gone are the days of building everything from scratch, particularly as organizations demand more from IT. More and more CIOs are demanding solutions that are easier to implement and manage. When it comes to ease of implementation, VSAN is at the bottom of the stack since it isn’t a pre-built appliance like all of the others. Any of the native hyperconverged solutions — Scale, Nutanix, SimpliVity — will meet this need.

Action Item: VMware VSAN is a technology feature that CIOs managing vSphere shops should carefully evaluate as part of their data center strategy. However, as the hyperconverged market continues to mature and expand to encompass a growing number of use cases, CIOs should consider whether the simplicity, budget-friendliness, and performance opportunities introduced by hyperconverged solutions outweigh the perceived "roll your own" benefits (choice, flexibility) of VSAN.

Footnotes: See Wikibon's Software-led Infrastructure landing page for much more on Converged Infrastructure.