
Storage Peer Incite: Notes from Wikibon’s August 25, 2009 Research Meeting

The utility computing model -- that computer power is delivered out of a plug in the wall and charged according to the amount used month-to-month -- goes back at least to the Science Fiction of the 1950s. Today that model has a new name, "Cloud Computing," and it is fast becoming a reality with the rapid development of Software-as-a-Service (SaaS) and Infrastructure-as-a-Service (IaaS).

The advantages of this new model are huge: It can move large parts of IT out of the enterprise, essentially converting CAPEX investments in hardware and software into OPEX monthly expenses, easing the pressure on CAPEX in today's economic market. It also allows IT to cut expensive staff -- not good for the staff but beneficial to companies hard pressed by the recession. It eliminates the need to guesstimate what the business will need three or more years into the future, a constant issue in sizing hardware and committing to multi-year software licensing, and provides turn-on-a-dime response to unexpected changes in demand. This is particularly attractive to companies saddled with large, expensive computer installations that they now under-utilize because their business has decreased. And, speaking of under-utilization, for many companies Cloud Computing can hugely increase overall resource utilization by sharing resources among multiple user organizations, thereby cutting the cost of access to computing resources.

The main disadvantage of the Cloud is that it is outside the corporate firewalls and delivered over the Internet. This raises both performance and security concerns. A slowdown on the Internet, a denial-of-service attack, or just a cut cable in the last mile at either end of the connection can decrease service levels or cause an outage of vital services. And while the Cloud vendors usually have excellent security at their sites, your corporate data is being handled by people you do not know and have not vetted. Also, even the most secure Internet connection adds extra risk to data in transit.

G. Berton Latamore

How strategic sourcing changes information technology

Originating Author: David Vellante

Peer Incite Guest: Dave Robbins of NetApp

The Premise

On August 25th 2009, the Wikibon community gathered for a Peer Incite Research Meeting with Dave Robbins, the former head of Global Infrastructure for NetApp. Robbins put forth a strong premise and message to the Wikibon community, specifically:

The current economic downturn should not be wasted by IT professionals. By challenging current assumptions and doing things differently, organizations can take fixed costs out of the business and modernize infrastructure to reduce operating expenses.

To pay for this transformation, users should consider strategic sourcing as a way to move CAPEX costs to the OPEX budget and move toward a model more weighted toward variable costs. This was generally considered by the call participants to be an early step on the cloud computing maturity model.

Metrics that Matter

Two key statistics came out of the call today:

- Server utilization rates in test and dev environments average between 5% and 8%.

- Typically, IT infrastructure costs are 70-80% oriented toward fixed costs.

Cloud computing promises to alter this dynamic. Organizations are on a multi-year journey to reduce the fixed cost percentage of expenditures and variabilize costs, with a 50/50 mix as a reasonable target that will vary by organizational size, business emphasis, and other factors. The Wikibon community discussed a three-step process to achieve this transformation, involving:

- Cut projects that aren’t essential and implement a prioritization and demand management system.

- Use sourcing as a strategic weapon across the portfolio to eliminate fixed costs.

- Develop and introduce metrics and sustainable processes to govern continuous improvement.

The End Game

In taking such an approach, Robbins aims not only to reduce fixed costs at NetApp but also to improve business flexibility. The starting point for NetApp was to figure out which pieces of IT get outsourced and which stay in house. Robbins conveyed to the Wikibon audience a simple model that: outsources more generic infrastructure (e.g., test/dev and corporate Web site apps); identifies applications that are standalone and pushes those to outsourced infrastructure where possible (e.g., small data marts); and identifies applications that support specific business processes that can be pushed to the cloud (e.g., HR and CRM).

What’s left are strategic applications with high degrees of integration that are core to the business and will remain in-house indefinitely.

The fact is, however, that we’ve heard similar stories and predictions over the past decade. The key question that came out of the call is the following:

Is this a permanent shift in the mindset of business technology executives, or will things normalize (i.e., revert to a fixed cost model) once the economy rebounds?

Five Reasons the Change is Permanent

Robbins and the Wikibon community cited five reasons that lead us to believe this is a more permanent shift:

- The quality and ubiquity of networked computing is much greater today than in the past.

- IT complexity continues to escalate, and IT response rates continue to be too slow as compared to external cloud solution providers; there continues to be a slow and steady movement toward the consumerization of IT.

- Customers are demanding cost transparency, metering, and pay-as-you-go pricing.

- Technologies including virtualization and software-as-a-service are maturing in support of this trend.

- The severity of the economic downturn will have long-term effects on corporate behavior vis-à-vis over-investing in fixed asset bases that are continuously depreciating.

Action item: Technology executives are being forced by business conditions to modernize infrastructure, better utilize assets, and provide equal or improved service levels. To pay for this transformation, organizations must use sourcing as a strategic weapon to drive fixed costs out of the business and improve responsiveness. The skill sets required to support this new reality will increasingly emphasize business and not technology optimization.

Are fossilized process and ideas damaging your data center efficiency?

Fossilized processes are created by doing the same thing over and over again without asking the question why! Fossilized ideas are like trying to solve today’s problems with last year’s process or solutions; it just does not make sense.

Few of us have the discipline to challenge the status quo meaningfully; we justify our indolence with a focus on the immediate task of just getting things done. However, the confluence of the current recession and evolving IT technologies has created an environment rich in opportunity to break from business as usual.

An excellent example of such thinking was offered by Dave Robbins, CTO/IT at NetApp, at the Aug. 25, 2009 Wikibon Peer Incite. Dave asked the question, “Why do we have raised floors?” Originally, it was to accommodate the large Bus & Tag cabling (remember them?), and it makes no sense to run cooling through the floor. So, other than in some mainframe shops, why do we still need raised floors? The data center has many such examples. Do you know that on average 20% of the servers in a data center are not being used, or no one knows what they are being used for? Do you know your storage utilization? How many expensive storage resources are creating cost without contributing appropriate business value? How about your suite of applications? Is it sensible to continue to host all applications internally, or would it make more sense to outsource some? There are many more examples, but these illustrate the point well.

Probably the most defining technology trend in recent years has been the significant advance in Cloud technology. This has driven a strong resurgence in outsourcing, with a growing base of disparate service providers delivering a potpourri of Cloud-based services such as the increasingly popular SaaS.

Thanks to advanced Cloud-based technologies, outsourcing in its many flavors is becoming the enabler of a key data center attribute: agility. The enablers are:

- Reducing capital expenditures and moving to a pay-as-needed plan. This allows data centers to respond more quickly to unpredictable workload demands.

- Reducing fixed budget costs from the nominal 65% to 80% of total budget to something closer to 50%, which increases the data center manager's freedom to respond to dynamic circumstances.

- Outsourcing to free data center resources such as facilities, equipment and specialized skill sets for reallocation or budget relief.
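The fixed-versus-variable arithmetic behind the second enabler can be sketched in a few lines. This is a minimal illustration, not a budgeting method from the meeting: the function names and the sample budget (75 units fixed, 25 units variable) are hypothetical, and the sketch assumes the total budget stays constant while spend is converted from fixed to variable.

```python
def fixed_cost_share(fixed: float, variable: float) -> float:
    """Return fixed costs as a fraction of the total budget."""
    total = fixed + variable
    if total <= 0:
        raise ValueError("total budget must be positive")
    return fixed / total


def amount_to_convert(fixed: float, variable: float, target_share: float = 0.5) -> float:
    """Fixed spend that must become variable spend to reach target_share.

    Assumes the total budget is unchanged by the conversion.
    """
    total = fixed + variable
    return max(0.0, fixed - target_share * total)


# Hypothetical budget: 75% fixed, inside the 65% to 80% range cited above.
share = fixed_cost_share(75.0, 25.0)
shift = amount_to_convert(75.0, 25.0)
print(f"fixed share: {share:.0%}, convert to variable: {shift:.1f}")
```

Under these assumptions, a shop at 75% fixed would need to move a quarter of its total budget from fixed to variable spend to reach the 50/50 mix.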

Action item: Always question the status quo, identify and eliminate the fossilized processes that inhibit rather than contribute to efficiency, look for those loosely integrated applications that could be efficiently outsourced, and increase variable versus fixed budget expenses. These actions should drive efficiency, optimize budget expenditures, and increase agility within the data center.

How to Determine which Apps to Outsource to the Cloud

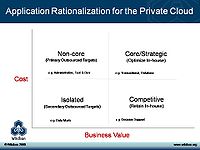

Organizations looking at cloud-sourcing strategies generally, and so-called private cloud strategies specifically, need to align the perspectives of the CIO, application owner, and infrastructure management. The decision to outsource or retain applications in house should be based on an analysis of cost, business value, and risk (to the business).

The private Cloud or hybrid Cloud encapsulates both internal and external infrastructure and provides a common security, privacy, governance, and compliance framework as applications are moved. In essence, the private cloud includes a highly virtualized in-house infrastructure and an external cloud infrastructure, both optimized to move applications around the organization based on demand and supply.

Rationalizing the Outsourcing Decision

Business value is derived from making the business more productive, more responsive, or more risk averse. Other factors involved include generating revenue and lowering costs. Application owners need to evaluate their portfolios on a relative value basis, which is a starting point to the outsourcing decision. Costs are then assessed as well on a relative basis using a simple scoring method.

Specifically, organizations should start by selecting possible application candidates for outsourcing to the cloud by doing the following:

- Group applications into 'suites' aligned by business process (e.g. HR enrollment or sales fulfillment).

- Perform a relative analysis of cost and business value for each application suite.

- Start with those applications that are lower in perceived value (and business risk) and are relatively expensive to deploy and maintain -- these are likely candidates for outsourcing because they aren't strategic and they are too expensive to run in-house.

As shown in Figure 1, this exercise allows the visualization of applications on a grid that can be used as a communications and decision-making tool. Applications that are core to the business and highly integrated (with high IP content) should remain in-house, while applications that are relatively standalone (e.g. fewer users, less strategic) are good candidates for outsourcing.
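The relative scoring exercise described above can be sketched as a simple grid classification. This is only an illustration under stated assumptions: the 1-to-5 scales, the midpoint of 3, the quadrant labels, and the sample scores are all hypothetical, and treating a high value score as equivalent to "core to the business" is a simplification of the criteria in the text.

```python
from dataclasses import dataclass


@dataclass
class AppSuite:
    name: str
    cost: int   # relative cost to deploy and maintain, 1 (low) to 5 (high)
    value: int  # relative business value and risk, 1 (low) to 5 (high)


def classify(suite: AppSuite, midpoint: int = 3) -> str:
    """Place a suite on the cost/value grid and return a rough recommendation."""
    high_cost = suite.cost >= midpoint
    high_value = suite.value >= midpoint
    if high_value:
        return "retain in-house"        # treated here as core or strategic
    if high_cost:
        return "outsourcing candidate"  # low value, expensive to run
    return "review"                     # low value, low cost: no clear signal


# Hypothetical scores for suites named as examples in the text.
suites = [
    AppSuite("HR enrollment", cost=4, value=2),
    AppSuite("sales fulfillment", cost=3, value=5),
    AppSuite("small data mart", cost=2, value=1),
]
results = {s.name: classify(s) for s in suites}
for name, decision in results.items():
    print(f"{name}: {decision}")
```

The scores are relative, so the output is only as good as the agreement among the CIO, application owners, and infrastructure management on how each suite is rated.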

Dave Robbins of NetApp advised the Wikibon community to look for opportunities to dis-integrate applications where possible and outsource portions of what may appear to be highly integrated and immovable apps. The NetApp example given was choosing between upgrading a highly integrated Siebel CRM application or dis-integrating the app and moving to a SaaS CRM.

Action item: Cloud computing is coming to a data center near you. The message to practitioners is to outsource the low-value, low-risk applications first, gain experience, and virtualize in-house resources to enable optimization of more strategic systems.

Cloud computing mandates IT organizational change

Today’s silo-oriented IT organizations are ill-suited for adopting Cloud Computing. The traditional structure of application development, operations, technical infrastructure, finance, and help desk has been very effective at centralizing IT and reducing cost, but it will need to move closer to the business as cloud computing matures.

Organizations have taken large, multifaceted applications (SAP, Siebel, and many others) and implemented modules across different business processes. For example, sales modules may be included with a manufacturing package. Cloud computing will provide alternative solutions where, for example, it may be more effective to use a higher-function SaaS package to manage sales and channels and provide feeds into centralized manufacturing and distribution systems.

These kinds of decisions dictate that the business accept a higher degree of responsibility for IT, and that choices of applications, infrastructure, and support be pushed down closer to the business.

The most difficult aspect of this IT organizational evolution will be to ensure adequate cross-functional governance, compliance, risk management, and security. These IT functions will need to be strengthened to ensure, for example, that risks taken by one function do not impact the organization as a whole. The meltdown of financial organizations because of lack of central governance and risk-management should provide a salutary example to all.

Action item: Organizational executive teams will need to take a hard look at current IT organizations and start to move overall responsibility for IT closer to the core business functions. IT should start untangling applications that integrate across business processes. However, managing the IT architecture for overall governance, compliance, risk, and security management should remain a strongly centralized function, integrated with key senior business professionals such as the chief security officer.

Will the cloud strangle IT vendor margins?

As Cloud Computing matures over the next decade, service providers will take an increasing share of the IT market. Non-strategic functions and large chunks of IT will be etherized. Wikibon predicts that over this time, total spending on external IT services will exceed the declining traditional IT budget in most shops.

Users have needed the support of the IT vendors and have paid premiums for software and services that ensure project success. IT infrastructure vendors are living off the reality that users always buy more than they really need and under-utilize it. Margins have been good, and the IT vendor community has continued to be robust.

Selling to Service Providers

Service providers, however, have a 'coin operated' relationship with their customers, who just pay for what is used. IT is core to a service provider's business, expertise is high, and they consume IT equipment in large quantities. Service providers are astute negotiators and will not pay a premium for software or services. For vendors who supply to service providers, volumes are high but margins are thin – sometimes very thin.

The key strategic decisions for software and hardware vendors are:

- How to sell to service providers;

- Whether to become one.

Selling to service providers means creating relationships with large services companies and creating products that will be effective. Becoming a service provider means changing the product, competing with your traditional sales channel near-term and, increasingly over the next decade, your customers. Either way, the current model of large upfront software costs with big maintenance fees will not be sustainable when dealing with service providers. In general, the philosophy of variabilizing costs will ripple through to software and maintenance models, mandating new ways of pricing and generally changing business practices.

Action item: The traditional model of selling to IT organizations will be a declining market. It would be imprudent to throw away this cash cow, but it will be essential to develop deep relationships with services companies and create a set of service company solutions. IT vendors should consider developing ‘sandbox’ service offerings with two objectives: 1) Learn about the requirements of this new marketplace; 2) Hedge your bets in case you can’t sell to service providers. For all vendors the strategic imperative will be to drive down product cost and increase volume.

Technology Risk Management for Virtualized Sourcing Strategies

The perfect storm of an economic recession, the advent of enabling technology in the form of unlimited bandwidth, virtualization, ever-increasing computing and storage power, and goals to variabilize IT costs may mean the time for Cloud Computing has come -- in one form or another.

Trading Technology for Business Risk

CIOs are faced with trading traditional technology risks and controls for new business and enterprise risks when sourcing strategies move technology infrastructure and responsibilities out of the data center and to the external Cloud. This in essence causes a shift away from traditional enterprise technology control thinking (e.g., access management, change control, data security, infosec, DR) to service provider relationships, capabilities, and accountabilities, or in a nutshell, to business risk management.

For example, if the enterprise can no longer touch the mainframes, servers, or storage devices, in theory it is no longer necessary to maintain datacenter controls for physical access management, environmental protection, and back-up/recovery (see Cloud Solves Security). However, new monitoring, auditing, compliance, and certification requirements are needed to maintain a business relationship with the Cloud service provider and provide the transparency, control, and trust necessary to support the business. Or, if the specific Cloud service allows or requires the enterprise to accept digital identities established by end-users in the cloud, there should be a corresponding shift in controls and investments by the enterprise away from identity provisioning capabilities and toward methods that allow the enterprise to trust identities provisioned externally. Or finally, if the new virtualized environment requires sensitive information to reside outside the enterprise, new information management controls – business-data-centric – are necessary to compensate for the lack of physical control.

CIOs and business management must address the advent of a virtualized technology risk environment when sourcing decisions include external cloud services. To understand the requirements of this new risk environment, the enterprise can start with four basic steps for determining the impact of sourcing decisions on its existing technology risk program:

1. Understand the business value of the application or business function being sourced (see How to Determine which Apps to Outsource to the Cloud). Determine criticality to the business, the risk inherent in supporting the application or business function, the control environment (e.g., application and data integrity controls, compliance capabilities, service level requirements) and its efficiencies, and the residual risks.

2. Understand the trust model of service providers, and assess the following capabilities from the perspective of transparency, control, and separation:

- Identity vetting, provisioning, and enrollment;

- Data protection, erasure, and retrieval for structured and unstructured content;

- Software assurance, patch and change management;

- Client platform security;

- End-to-end network security;

- Personnel screening;

- Platform hardening, multi-tenancy protections – compartmentalization, security, and performance assurance;

- Service resiliency – failover, recoverability, RPOs and RTOs;

- Real-time auditing, monitoring, and compliance reporting;

- Support for forensic and legal investigations;

- Infrastructure provisioning and configuration management;

- Administrative and privileged user access;

- Event and incident management;

- Third-party assessments;

- Security standards compliance.

3. Do the gap analysis. The delta between the risk and control capabilities determined in 1 and 2 above will give the enterprise a clear picture of the activities and investments required to virtualize technology risk management.

4. Focus on transparency, compliance/control, and separation. Capabilities, features, and services for on-demand and continuous visibility into the virtualized infrastructure and operations, the need to satisfy internal and external compliance and control requirements in real time (not only point-in-time), and the separation and ongoing protection of enterprise resources in the cloud are top priorities.
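The gap analysis in step 3 can be sketched as a comparison of two scored lists. This is a minimal illustration under stated assumptions: the 0-to-3 maturity scale, the function name, and the sample scores are hypothetical, and only the capability names come from the list in step 2.

```python
def control_gaps(required: dict[str, int], provided: dict[str, int]) -> dict[str, int]:
    """Return each capability where the provider falls short, with the shortfall.

    A capability missing from `provided` is treated as level 0.
    """
    return {
        capability: need - provided.get(capability, 0)
        for capability, need in required.items()
        if provided.get(capability, 0) < need
    }


# Hypothetical levels (0 = none, 3 = strong) the enterprise needs (step 1).
required = {
    "Personnel screening": 2,
    "Real-time auditing, monitoring, and compliance reporting": 3,
    "Service resiliency": 3,
}
# Hypothetical levels assessed for a cloud service provider (step 2).
provided = {
    "Personnel screening": 2,
    "Real-time auditing, monitoring, and compliance reporting": 1,
}

gaps = control_gaps(required, provided)
for capability, shortfall in sorted(gaps.items(), key=lambda item: -item[1]):
    print(f"{capability}: short by {shortfall}")
```

Sorting by shortfall gives a rough ordering of where compensating controls or contract terms would be needed first; a real assessment would weight each capability by the criticality determined in step 1.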

Action item: CIOs must proactively assess technology risks when making sourcing decisions, particularly when considering external cloud services. Understanding the impact of the cloud provider's security and trust models on current risk practices is the most important first step.