
Premise

Backup infrastructure is expensive insurance that fundamentally delivers no business value beyond protecting against data loss. While vital, this function can be improved to protect data more efficiently and at the same time deliver new business value to organizations. By better leveraging space-efficient snapshots and using a general-purpose catalog that transcends the backup infrastructure, our research shows practitioners can lower backup-related costs by 15% to more than 40% and support new use cases that deliver incremental business value.

Disk Based Backup: Not a Cure All

Last decade, many organizations began to move away from tape as the primary backup medium and shift tape’s role to long-term retention. While most shops still use tape (e.g. for compliance and deep archiving), some have completely eliminated tape from their technology portfolios.

Regardless, the move to disk-based backup was fairly rapid and spurred by the introduction of data de-duplication techniques, which lowered the cost of disk-based backup. Value came from the significantly less complicated and much faster recovery from disk rather than tape.

Notably, the company that created the so-called purpose-built backup appliance market, Data Domain, succeeded in many respects because it allowed organizations to keep their current backup processes in place—requiring no changes in backup application and procedures. By inserting a target hardware device directly into the backup stream, IT organizations created a capability that could restore data much more quickly than with tape.

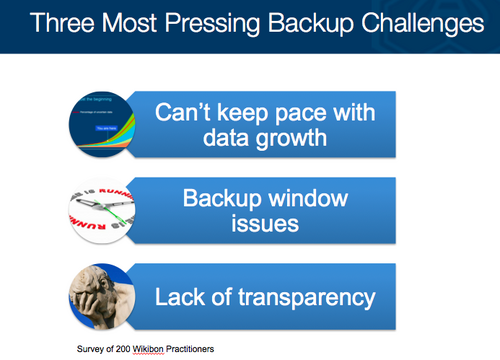

Unfortunately, many backup problems were not entirely solved by purpose-built backup appliances. Wikibon practitioners indicate that several factors continue to plague them. The top three reported are: 1) data growth continues to outpace the backup infrastructure and its ability to accommodate new requirements; 2) as a result, backup windows are difficult to meet; and 3) there is a lack of transparency about the efficacy of backups.

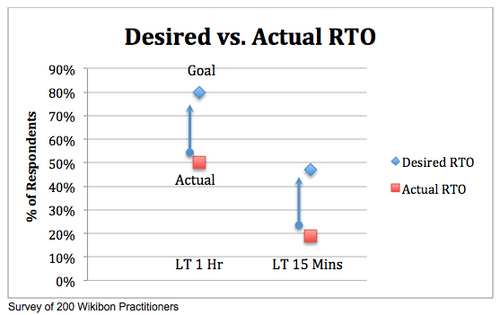

In particular, despite the steady move to disk-based backup, a gap remains between desired and actual recovery time objectives (RTO) that customers report. Consider the following data:

- Fifty percent of Wikibon practitioners indicate they can restore lost data for their most critical applications within one hour. However, nearly 80% say their desired restoration time is within one hour.

This gap between actual and desired RTO is even more acute for those customers with more stringent RTO requirements. To wit:

- Nineteen percent of Wikibon practitioners say they can restore data from their most critical application within fifteen minutes. However, 47% indicate their desired RTO is within fifteen minutes.

The dissonance between the business requirement and what IT can deliver is an age-old theme in business technology circles. Several organizations we’ve spoken with are beginning to attack this problem and re-think how they approach backup infrastructure. In particular, they are combining space-efficient, application-consistent snapshots with a centralized catalog that tracks not only backups but also all copy data.

Snapshots: What You Need to Know

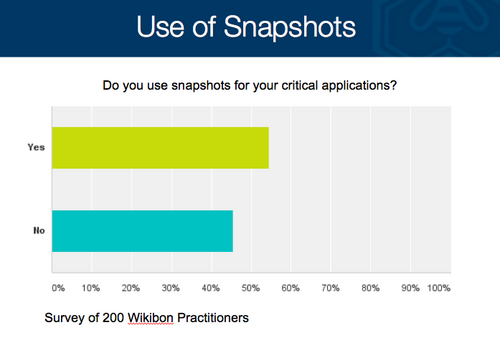

A recent survey of the Wikibon practitioner community showed that more than half the organizations in the community use snapshots for their critical business applications.

A snapshot is a copy of data at a given point in time. In the early days of snapshot technology, a full copy of target data was created and kept in sync with the master during the copy period. Once completed, the snapshot could be used separately from the production system for offsite vaulting, populating data warehouses, test and development, etc. While effective, this process became cumbersome and expensive as data growth exploded.

Subsequently, space-efficient snapshots hit the market. Space-efficient snapshots create a virtual copy of a volume and allow an administrator to quickly copy data by changing pointers to data. This technique avoids copying the entire volume. Changes to that volume are then applied to the virtual copy via an indexing scheme where only the changes are recorded. A prerequisite for snapshots is a metadata capability that allows the data to be indexed and re-used. This approach requires less capacity, is faster and can be repeated many times within a short period.
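The pointer-based idea can be illustrated with a minimal sketch. This is a toy model, not any vendor's implementation: the `Volume` class, its method names and the in-memory block map are all assumptions made for illustration. A snapshot copies only the block map (the pointers), and a later write redirects the live pointer while the snapshot keeps referencing the old data.

```python
class Volume:
    """Toy volume (hypothetical): a block map plus pointer-based snapshots."""

    def __init__(self, blocks):
        self.block_map = dict(blocks)  # live view: block number -> data
        self.snapshots = {}            # snapshot name -> frozen block map

    def snapshot(self, name):
        # Copy only the pointers (the map), never the data they refer to.
        self.snapshots[name] = dict(self.block_map)

    def write(self, block_no, data):
        # Redirect the live pointer; existing snapshots keep the old pointer.
        self.block_map[block_no] = data

    def read(self, block_no, snapshot=None):
        source = self.block_map if snapshot is None else self.snapshots[snapshot]
        return source[block_no]

    def shared_blocks(self, name):
        # Count blocks the snapshot and the live volume still share.
        snap = self.snapshots[name]
        return sum(1 for n, d in snap.items() if self.block_map.get(n) is d)


vol = Volume({0: b"alpha", 1: b"bravo", 2: b"charlie"})
vol.snapshot("noon")
vol.write(1, b"BRAVO-v2")

print(vol.read(1))                   # current data for block 1
print(vol.read(1, snapshot="noon"))  # point-in-time data for block 1
print(vol.shared_blocks("noon"))     # blocks consuming no extra capacity
```

In this model only the changed block consumes additional capacity, which is why such snapshots can be taken frequently.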

Modern-day snapshots should not only be space efficient but also application consistent. In other words, when a copy is made, the system should flush the buffers at a point in time to eliminate outstanding IOs. Application-consistent snapshots are enabled by capabilities within the operating system (e.g. VSS in Microsoft Windows), and the snapshot technology works in conjunction with this feature. Application-consistent snapshots alleviate the need for admins to slog through logs and manually assure consistency when recoveries are performed.

Space Efficient Snapshots Expand System Capabilities

New use cases are made possible by space-efficient snapshots. For example, a snapshot can be taken at noon and another 15 minutes later. In this example, an administrator now has access to any changes made between 12:00 and 12:15. This data can be stored very quickly on premises for fast recovery (a short RTO) and a very low RPO (fifteen minutes at most), and it can be moved offsite to dramatically improve disaster tolerance. This process can continue throughout the day without consuming too much capacity.
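As a rough sketch of the noon and 12:15 example, the snippet below compares two snapshot block maps and extracts only the blocks that changed in between. The dictionaries, block contents and the `changed_blocks` helper are hypothetical stand-ins for whatever metadata a real snapshot system exposes.

```python
def changed_blocks(earlier, later):
    """Return block numbers whose contents differ between two snapshot maps."""
    all_blocks = earlier.keys() | later.keys()
    return sorted(n for n in all_blocks if earlier.get(n) != later.get(n))


# Hypothetical block maps captured at 12:00 and 12:15.
snap_1200 = {0: "a1", 1: "b1", 2: "c1"}
snap_1215 = {0: "a1", 1: "b2", 2: "c1", 3: "d1"}

delta = changed_blocks(snap_1200, snap_1215)

# Only the delta needs to be stored locally or shipped offsite.
to_replicate = {n: snap_1215[n] for n in delta if n in snap_1215}
print(delta, to_replicate)
```

Because only the differential is moved, the fifteen-minute cycle can repeat all day with modest capacity and bandwidth, under the assumption that the change rate between snapshots is small relative to the volume.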

The problem with this approach is “copy creep.” When virtual copies are easy to make, many get made. This is an issue because the risk of “copy confusion,” copy error and lack of provenance increases. Keeping track of all these copies manually is very difficult and risky.

Automating this process requires a catalog.

What is a Catalog?

In conventional terms, a catalog is simply a list of items. Backup catalogs are files that hold information about what has been backed up. These catalogs are important when restoring data because they allow an administrator to see a list of what’s been protected that can actually be restored.

A backup catalog contains important information about which files have been backed up, when they were backed up, where they are located and other critical metadata. Typically, this metadata applies only to a single vendor’s backup solution and is locked inside of a backup system to be used exclusively by the system that stored the data.

A general-purpose catalog can apply not only to backups, but also any copies that are made and can be used to automate recovery from snapshots. For example, when snapshots are created, a general-purpose catalog will keep track of when the snaps were made, if and when the snap differential data was copied and which snapshot is most current. As such, when there’s a problem, the catalog can adjudicate and automate which files should be restored, dramatically reducing the probability of sys admin error on recoveries.
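To make the idea concrete, here is a minimal sketch of how a general-purpose catalog might choose a restore point. The `CopyRecord` fields, the `Catalog` class and the sample data are assumptions for illustration only and do not describe any specific product.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class CopyRecord:
    """One catalog entry (hypothetical fields)."""
    copy_id: str
    source: str               # application or volume the copy came from
    created: datetime         # when the snapshot was taken
    app_consistent: bool      # taken with application buffers flushed?
    replicated_offsite: bool  # was the differential copied offsite?


class Catalog:
    def __init__(self) -> None:
        self.records: List[CopyRecord] = []

    def register(self, record: CopyRecord) -> None:
        self.records.append(record)

    def best_restore_point(self, source: str, before: datetime) -> Optional[CopyRecord]:
        # Most recent application-consistent copy taken at or before the incident.
        candidates = [
            r for r in self.records
            if r.source == source and r.app_consistent and r.created <= before
        ]
        return max(candidates, key=lambda r: r.created, default=None)


catalog = Catalog()
catalog.register(CopyRecord("snap-1200", "erp-db", datetime(2024, 1, 1, 12, 0), True, True))
catalog.register(CopyRecord("snap-1215", "erp-db", datetime(2024, 1, 1, 12, 15), True, False))
catalog.register(CopyRecord("snap-1230", "erp-db", datetime(2024, 1, 1, 12, 30), False, False))

best = catalog.best_restore_point("erp-db", before=datetime(2024, 1, 1, 12, 40))
print(best.copy_id if best else "no usable copy")
```

The selection rule here (newest application-consistent copy before the incident) is one plausible policy; a real catalog would likely weigh additional factors such as copy location and verification status.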

Importantly, many current backup systems exploit snapshots within their platforms using their own internal catalogs. However, those catalogs cannot be extended beyond the system that owns them.

Business Impact

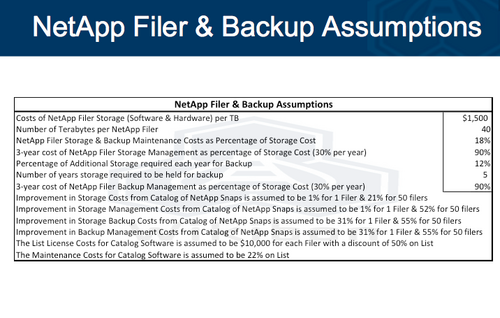

For several years now Wikibon has been advocating a new approach to backup using snapshot technology as a lynchpin of a transformed backup strategy. To this end, Wikibon modeled the economics of using a general-purpose catalog in conjunction with space-efficient snapshots to understand the potential savings in CAPEX and OPEX, relative to a traditional backup approach (e.g. a disk-based purpose-built backup appliance). We used NetApp infrastructure as a reference point because it is a leading example of an architecture with very good, space-efficient snapshots.

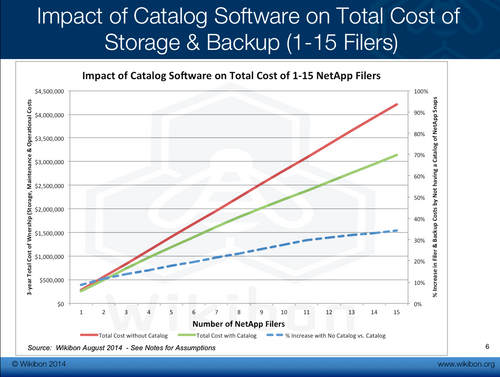

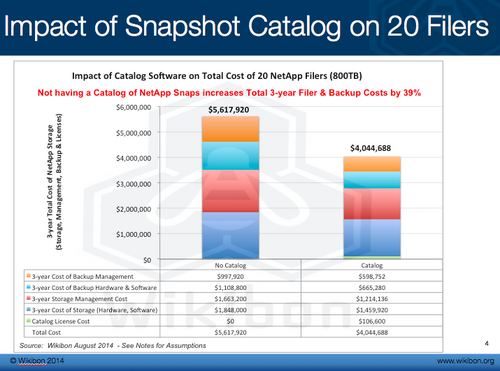

The analysis in the two charts shows the following over a three-year period:

- The crossover point is about 2-3 filers. In other words, as you scale beyond a few systems, continuing to add traditional purpose-built backup hardware and software becomes significantly more expensive (nearly 40% more expensive in the 20-filer example).

- Backup infrastructure costs are dramatically reduced. A catalog/snapshot approach costs less than two-thirds as much as a traditional backup infrastructure.

- Cost savings on primary storage are an added benefit as wasted space is reclaimed through automation of copy reduction.

- Ripple effects are seen on staff costs as both backup and primary storage infrastructure are simplified.

- The cost of the catalog is assumed at just over $100,000 in the example shown, which will be less than adding new backup infrastructure.

- This analysis excludes the business benefits of new use cases (e.g. Test/Dev).

The Strategic Roadmap

It’s not advisable to rip-and-replace existing backup infrastructure. Organizations installed purpose-built backup infrastructure to improve recovery times and simplify backup, and despite some challenges, the systems are working better than their tape-based alternatives. However, “PBBA Creep” is an issue in many organizations, and practitioners have an opportunity to stem the tide of escalating costs.

Specifically, most backup infrastructure is “one-size-fits-all” today, meaning the system is designed to accommodate the 10%-20% of applications that are high value and business critical. Because it’s generally the only system in place, companies often over-spend by applying it to applications that could be backed up with less stringent RPO and RTO requirements. Start with those less critical applications, and start small to be safe.

Wikibon recommends the following roadmap steps to improve backup over time and extend new business value:

- Avoid adding new backup capacity and instead use snapshots and a general-purpose catalog. Compare this approach with adding traditional backup infrastructure and avoid over-spending on disk-based backup.

- Start getting rid of data and unwanted copies. Expired work-in-process, test/dev copies, older unused copies and other data that can be defensibly deleted should be removed via automated policies driven from the catalog.

- Fully transform backup. Continue to migrate backup through attrition, protecting more and more data with the new snapshot-based approach.

- Target new use cases. Drive new business value with use cases such as test/dev, compliance, records management, auditing, archiving, disaster recovery, etc.

Test and Development Example

This last point is critical and worth an example. Test and development is a killer app for snapshots with a general-purpose catalog. Consider the following example:

A developer is rolling out version 2.x.2 of new software. Developers used to use so-called “dummy data” as a test base, running it against version 2.x.1 and then against the new 2.x.2 release to compare the outputs. The dummy data would have to be created – either manually or from an older copy – and then tested. This could be both time-consuming and error prone.

With a snapshot approach, a developer can test a new version of software against the latest set of data from the production system that came in near real-time and check that the output is the same as today’s production data. The developer can create, very quickly, an exact copy of today’s data, then take a snapshot of the input and the output and compare the results of 2.x.1 with 2.x.2 in near real time.

When mistakes are made and corrections completed, developers can re-test very quickly by creating copies, creating copies of copies, and then tearing them down, testing and re-testing iteratively to ensure that the performance of the virtual copy is consistent with the production run.

Developer productivity in this scenario skyrockets because, for devops, it’s all about how fast changes can be implemented. With a snapshot-based approach, developers can change/test/change/test and get answers back in a super-fast time-frame.

Importantly, when it’s all said and done, the many copies can be reclaimed and disposed of easily because the catalog enables policy-based automation to include deleting files that are no longer needed.
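A simple sketch of such policy-based cleanup is shown below. The record layout, the purpose labels and the retention limits are all hypothetical; the point is only that the catalog's metadata is enough to decide which copies can be reclaimed without manual tracking.

```python
from datetime import datetime, timedelta


def expired_copies(records, now, max_age_by_purpose):
    """Return IDs of copies older than the retention limit for their purpose.

    Copies whose purpose has no policy entry are never selected.
    """
    expired = []
    for rec in records:
        limit = max_age_by_purpose.get(rec["purpose"])
        if limit is not None and now - rec["created"] > limit:
            expired.append(rec["copy_id"])
    return expired


# Hypothetical catalog entries and retention policy.
records = [
    {"copy_id": "td-001", "purpose": "test/dev", "created": datetime(2024, 5, 1)},
    {"copy_id": "td-002", "purpose": "test/dev", "created": datetime(2024, 5, 28)},
    {"copy_id": "bk-001", "purpose": "backup", "created": datetime(2024, 5, 25)},
    {"copy_id": "cp-001", "purpose": "compliance", "created": datetime(2020, 1, 1)},
]
policy = {"test/dev": timedelta(days=14), "backup": timedelta(days=30)}

to_delete = expired_copies(records, now=datetime(2024, 6, 1), max_age_by_purpose=policy)
print(to_delete)
```

Leaving copies without a policy untouched is a deliberately conservative choice in this sketch, so that compliance or archive copies are not reclaimed by accident.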

Action Item: Continuously adding purpose-built hardware and software infrastructure to a hardened backup infrastructure will perpetuate current backup challenges and create diseconomies of scale. As data continues to grow, IT practitioners should research and test new ways of using space-efficient, application-consistent snapshots, in conjunction with a general-purpose catalog, to improve backup efficiencies, lower costs and create new business value. This strategy should proceed in a step-wise fashion, starting with less risky application data and transforming backup over time through attrition. Simultaneously, organizations should exploit this new approach to find use cases that drive business value beyond backup, such as test/dev, compliance, archiving and other applications that require data transparency and provenance.
