Executive Summary of XIV Performance and Availability Envelope

Wikibon classifies the XIV as a tier 1.5 array. The unique single-tier architecture of the XIV spreads I/O activity for all the volumes very evenly across all drives in the array. The result is very consistent performance for all volumes while using much lower cost SATA 1TB drives instead of FC drives. The ideal application environments for the XIV array are:

- Well-mannered applications with reasonable I/O activity and cache hit rates,

- A good number of applications using a significant number of volumes,

- No single application requiring extensive tuning.

Contraindications for the use of XIV arrays include:

- The XIV array is dedicated to a few performance-critical applications,

- A large application has high I/O rates and very low cache hit rates,

- Applications in general, or specific applications, must have very low I/O response times,

- The very highest levels of availability and recoverability are required.

The benefits of the XIV architecture include lower costs, lower storage administrator costs and “good enough” consistent performance.

The drawbacks of the XIV array are that it provides no ability to tune the array for a specific workload, and that adding volumes when the array is near its I/O limit can have a big impact on the I/O performance of all the volumes on the array. Close attention must therefore be paid as the array nears its limits.

Traditional LUN-savvy storage administrators will feel frustrated that they cannot use their skills. However, many CIOs will find that the XIV offers an opportunity to avoid over-paying for traditional LUN skills.

There have been voluminous writings on the blogs and in competitive presentations about the potential availability deficiencies of the XIV architecture. Particular reference has been made to the impact of dual drive failures. Wikibon considers some of the analysis in these blogs to be incomplete and inaccurate, and not reflective of the positive experience of institutions deploying the XIV. The availability design of the XIV is different from traditional arrays but is appropriate for the specific architecture of the XIV and results in similar availability to competing arrays. The detailed Wikibon analysis within the section “XIV Availability Characteristics” concludes that the probability of having to reconstruct an array because of a dual drive failure is less than 0.5% over the five-year life of an XIV and is considerably lower than other risks that can result in array reconstruction.

Wikibon does not find the claims of IBM that the XIV is greener than other arrays to be credible. The references quoted by IBM refer to the benefits of XIV over older generations of storage arrays.

Wikibon believes that the availability and performance of the XIV array is similar to that of other 1.5-class storage arrays, and other arrays have specific beneficial features. For example, the 1.5 arrays from 3PAR will offer similar benefits to the XIV with a broader range of disk configurations that support a wider range of workloads. Compellent offers a lower entry point and additional software capabilities.

Wikibon concludes that when the XIV is used within its performance and availability envelope (which is more closely defined below), it offers tremendous value with very low administrative costs. This is true when compared with traditional arrays or other 1.5 arrays.

Introduction to the XIV Performance and Availability Envelope

IBM’s XIV architecture is revolutionary, and very simple. It provides great performance and great price-performance for some workloads. If the XIV is used for workloads outside its performance envelope, the result will be a disaster. The good news is that you can determine the performance envelope easily. The following is required to understand the performance envelope of the XIV:

- XIV Design,

- XIV Performance Characteristics,

- Characteristics of Workloads that work well on XIV,

- Workloads that are outside the performance envelope of XIV,

- XIV Price/Performance Characteristics,

- XIV Availability Characteristics.

Horses for Courses

Like every storage array in the marketplace, there are courses where the XIV runs well, and courses that will bring the XIV to its knees. At the end of this article, you will know when to deploy the XIV horse, how to avoid deploying it on unsuitable workloads, and how to keep it within its performance envelope.

XIV Design

XIV supports Block-based Storage

The XIV is a block-based array. It could be used behind a NAS head to support file-based storage, but that is not the design point.

XIV utilizes Standard Volume Components

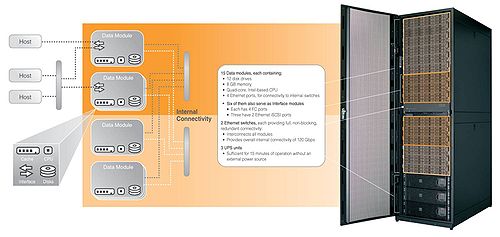

The architecture of the XIV is illustrated in Figure 1 below.

Source: IBM http://www.xivstorage.com/images/ibm_xiv_system_diagram.jpg downloaded 12/20/2009

The XIV consists of an array of 180 SATA drives. Each drive is controlled by one of 15 data modules. Each data module holds 12 drives. The data modules are standard Intel-based quad processors with 8GB of RAM. The modules are connected by three Ethernet switches. There are three UPS components with batteries that allow 15 minutes of uptime and ensure that the metadata and data in flight are written to disk safely in a power failure. There are no special ASICs or other components; everything is made of standard volume components.

A Virtualized Single-Tier Architecture

The XIV has a virtualized architecture, which means that the logical volume has no knowledge or understanding of the physical layout. Each data volume is broken up into 1MB chunks and distributed evenly across all 180 drives. Each chunk is written onto drives on two separate data modules. The result of this architecture is that reading or writing to any volume involves nearly all 180 drives. The I/Os are spread evenly over the 180 drives. All data volumes get an equal amount of resources. It is a simple 'single-tier architecture', which assigns the same resources to every volume, and does not favor the performance of any specific volume.
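The effect of this kind of layout can be illustrated with a small sketch. The placement function below is hypothetical: it is not IBM's algorithm, which this article does not describe. It simply hashes each 1MB chunk to a primary drive and forces the mirror copy onto a different data module, using the 15 modules and 12 drives per module described above.

```python
import hashlib
from collections import Counter

MODULES = 15            # data modules (from the article)
DRIVES_PER_MODULE = 12  # drives per data module (from the article)


def place_chunk(volume: str, chunk_index: int):
    """Return ((module, drive), (module, drive)) for the two copies of a chunk.

    Hypothetical placement for illustration only: a hash picks the primary
    location, and the mirror is forced onto a different data module.
    """
    digest = hashlib.sha256(f"{volume}:{chunk_index}".encode()).digest()
    h = int.from_bytes(digest[:8], "big")
    primary_module = h % MODULES
    h //= MODULES
    primary_drive = h % DRIVES_PER_MODULE
    h //= DRIVES_PER_MODULE
    # An offset of 1..14 guarantees the mirror lands on another module.
    mirror_module = (primary_module + 1 + h % (MODULES - 1)) % MODULES
    h //= MODULES - 1
    mirror_drive = h % DRIVES_PER_MODULE
    return (primary_module, primary_drive), (mirror_module, mirror_drive)


def drive_usage(volume: str, size_mb: int) -> Counter:
    """Count how many chunk copies of a volume land on each drive."""
    usage = Counter()
    for i in range(size_mb):
        primary, mirror = place_chunk(volume, i)
        usage[primary] += 1
        usage[mirror] += 1
    return usage


if __name__ == "__main__":
    usage = drive_usage("vol-a", 20_000)  # a 20,000MB volume (hypothetical)
    print("drives touched:", len(usage))
    print("min/max copies per drive:", min(usage.values()), max(usage.values()))
```

Even a modest volume ends up with chunks on every drive, which is why a single busy volume draws on the I/O capability of the whole frame rather than a handful of spindles.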

XIV Storage Capacity

A single XIV rack has 180TB of raw capacity, of which 22TB are required for spare capacity (in case of drive failures) and metadata. The dual write protection system leaves 79TB of available storage capacity ((180-22)/2 = 79). As all data volumes are spread evenly over all the drives, the array can be filled completely if the performance characteristics of the workload are suitable. There is no requirement to reserve capacity for reorganization.
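The capacity arithmetic can be restated as a few lines of Python. The function and constant names are illustrative; the figures are the ones quoted in this section.

```python
RAW_TB = 180      # 180 x 1TB SATA drives
RESERVED_TB = 22  # spare capacity and metadata
COPIES = 2        # every chunk is written to two data modules


def usable_capacity_tb(raw_tb=RAW_TB, reserved_tb=RESERVED_TB, copies=COPIES):
    """Usable capacity after reserving spare space and mirroring the rest."""
    return (raw_tb - reserved_tb) / copies


print(usable_capacity_tb())  # 79.0
```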

Characteristics of Workloads that work well on XIV

The single-tier architecture of the XIV spreads the I/O activity for all the volumes very evenly across all the drives. The result is very consistent performance for all volumes while using much lower cost SATA 1TB drives. The ideal application environments for the XIV array are:

- Well-mannered applications with reasonable I/O activity and reasonable cache hit rates:

Most applications are well mannered. The data and I/O design is conservative, and the data has a natural distribution that results in locality of reference and good cache hit rates.

- A good number of applications using a significant number of volumes:

The more applications that are accessing a number of volumes, the smoother the I/O activity will be and the better the XIV architecture will respond.

- No single application requires extensive tuning:

One example of an application that does need such tuning is one with a very high access rate on a volume that requires very fast I/O response times. Database applications which generate high locking rates are particularly sensitive, because the overall throughput through the system is constrained by I/O response times.

Workloads that are outside the performance envelope of XIV

The XIV is not suitable for all workloads and all environments. Contraindications for the use of XIV arrays include:

- The XIV array is dedicated to a few performance-critical applications:

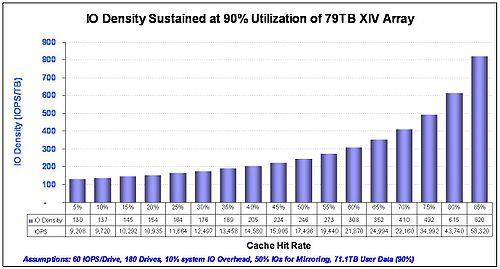

Applications that have very high I/O densities will not be optimal. The gate in such applications is the I/O rate of the drive. The maximum average I/O rate to each disk is about 60 IOPS. This is a much higher level than traditional I/O systems can sustain on SATA disk technology, but it can still be a constraint. The overall maximum application I/O rate to disk is 60 x 180 x ½ (mirroring) x 90% (other disk overhead) = 4,860 IOPS. If the cache hit rate is 85%, only 15% of I/Os reach disk, so this represents an overall I/O rate of 4,860/0.15 = 32,400 IOPS. I/O density, measured as IOPS/TB, is calculated by taking a maximum utilization of 90% of the 79TB (71.1TB) and dividing it into 32,400 IOPS (32,400/71.1 ≈ 456), which gives roughly 450 IOPS/TB. Many single applications have much higher access densities. The result of a high I/O density is that the capacity of the XIV array cannot be fully utilized. When a large number of applications are combined, the access density is usually reduced and the capacity of the XIV is much more likely to be in balance with the I/O.

- A large application with high I/O rates and very low cache hit rates:

Again the analysis of the previous point is pertinent. The maximum I/O rate to disk is 4,860 IOPS. If the cache hit rate is 50% for a large application, the maximum overall I/O rate that can be sustained is less than 10K IOPS (4,860/0.5 = 9,720). This again means that the capacity of the box cannot be utilized.

- Where applications in general or specific applications have to have very aggressive (low latency) I/O response times:

Examples of this include highly tuned database systems which generate high locking rates. In this case, the overall throughput through the system is constrained by I/O response times, and the only way to increase system throughput is to reduce I/O response times. This can especially occur in batch systems that may have stringent elapsed time requirements. The XIV architecture improves response times for all applications and gives overall “good-enough” response times. But there are no tools available that will give specific volumes or parts of a volume better I/O response times. Even if the architecture could accept higher performance drives or very high performance solid state drives, the impact would be diluted by being spread over all the I/O.

Chart 1 shows the I/O density performance envelope for the XIV. I/O densities that are higher than the chart shows for a particular IOPS or cache hit rate will result in poor utilization of the XIV. Other constraints within the XIV architecture are likely to kick in above an 85% sustained cache hit rate.
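The envelope arithmetic in this section can be sketched in Python as follows. The function names are illustrative, and the model is only the simple one used in the text (60 IOPS per drive, halved for mirroring, with 90% left after other disk overhead, and the array filled to 90% of 79TB). It is not a substitute for Chart 1 or for measurement.

```python
DRIVES = 180
IOPS_PER_DRIVE = 60     # maximum average I/O rate per SATA drive
MIRROR_FACTOR = 0.5     # every write goes to two drives
OVERHEAD_FACTOR = 0.9   # allowance for other disk overhead
USABLE_TB = 79
MAX_FILL = 0.9          # maximum capacity utilization assumed in the text


def max_disk_iops():
    """Maximum application I/O rate that can reach the disks."""
    return DRIVES * IOPS_PER_DRIVE * MIRROR_FACTOR * OVERHEAD_FACTOR


def max_host_iops(cache_hit_rate):
    """Maximum overall I/O rate when only cache misses go to disk."""
    if not 0 <= cache_hit_rate < 1:
        raise ValueError("cache_hit_rate must be in [0, 1)")
    return max_disk_iops() / (1 - cache_hit_rate)


def max_io_density(cache_hit_rate):
    """Maximum I/O density (IOPS/TB) at which the array can still be filled."""
    return max_host_iops(cache_hit_rate) / (USABLE_TB * MAX_FILL)


if __name__ == "__main__":
    for hit_rate in (0.50, 0.70, 0.85):
        print(f"hit rate {hit_rate:.0%}: "
              f"{max_host_iops(hit_rate):,.0f} IOPS, "
              f"{max_io_density(hit_rate):,.0f} IOPS/TB")
```

A workload whose I/O density sits above the value returned for its cache hit rate will run out of IOPS before it runs out of capacity.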

- Where the very highest levels of availability and recoverability are required and can be justified:

If Tier 1 storage is defined as providing the highest levels of availability and recovery, the XIV does not meet this definition. Some workloads cannot be run on the XIV at all, and other workloads will run very inefficiently at low utilization rates. The availability characteristics of the XIV are considered below in more detail, but again the XIV does not have the very robust availability characteristics of (say) the IBM DS8000. The XIV uses the space-efficient copy technique, and the export of this copy to a remote site, for its asynchronous remote copy. Although efficient, this does not offer the same RPO characteristics as more robust IBM and other offerings. The XIV does not have the range of zero data loss options for 3 data center recovery offerings, and does not have the years of experience and stability required to be a credible partner in the ultra-high availability marketplace.

- The IBM XIV is not very green compared with most other modern storage arrays:

The XIV has some green features (e.g., thin provisioning, space efficient copy and good exploitation of SATA disk drives), but this is more than offset by the heat output of 15 servers, the availability of only mirroring, and the lack of any spin-down or AutoMAID capability. All the disks are accessed each time a volume is accessed, making spin-down and AutoMAID an oxymoron for XIV in particular and 1.5 tier arrays in general.

The references given by IBM marketing to support claims of lower power consumption have on deeper investigation turned out to be comparisons against much older array technologies.

XIV Price/Performance Characteristics

The XIV is constructed from low-cost volume components. The use of SATA disks allows the cost of disks and the cost of the array to be very competitive with any other array offering 79TB of usable storage. The performance is good enough and consistent when within the performance window indicated in Chart 1 above. The availability is also good enough for almost all tier 2 and tier 1.5 applications.

The XIV has a reasonable list of software functions. This includes thin provisioning, local mirroring, and remote mirroring. Perhaps the most sophisticated set of features is built around the space efficient software.

When applications are within the performance and availability envelope, the overall price performance as reported in the marketplace is very good, and sometimes outstanding.

XIV Availability Characteristics

Some misinformation has been published in blogs and by vendors about the reliability characteristics of the XIV system. The recovery design is different from traditional arrays but is appropriate for the XIV design.

The XIV recovery mechanisms from component failure are as follows:

- The XIV system’s switches and UPS units are redundant in an active-active N+1 scheme.

- In the case of a lost data module, the 12 drives are reconstructed on the 14 other data modules before the data module is replaced. If a second data module is lost before the first has been replaced, a rebuild of all the data would be required.

- In the case of a lost drive, the rebuild time is a maximum of 40 minutes, as the data can be recovered from all the remaining drives simultaneously.

- The fast recovery of a drive reduces the probability of a dual disk failure by a large factor compared with traditional RAID 5 systems. However, in the case of a double drive failure, all the volumes on the XIV frame will need to be recovered, instead of just the volumes within the traditional RAID 5 group.

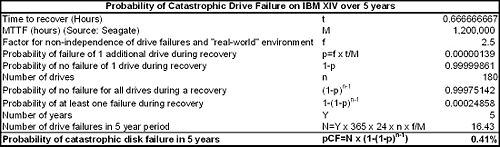

The probability of a double drive failure happening during recovery is shown in Table 1 below:

- Assume a drive recovery time of 40 minutes, a drive MTTF of 1,200,000 hours (manufacturer’s claim), an amended drive MTTF of 480,000 hours that takes into account the "real-world" environment and the non-independence of drive failures, and 180 drives. The probability of a catastrophic failure during the five-year life of an XIV array is then 0.41%.

- The probability of a double controller failure (even with a 24-hour replacement time) is much smaller than the probability of a double drive failure and can be ignored.

- Note 1: This is not the total risk of a complete array failure. It is only the risk of a complete array failure caused by a double drive failure. The risks of other failures due to human error (e.g., erasing all volumes), loss of an array due to water sprinklers, microcode failures and application failures are far, far greater.
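The 0.41% figure can be reproduced with a simple model. The sketch below is an assumption about how such a number can be derived, not necessarily the method behind Table 1: it multiplies the expected number of drive failures over the array's life by the chance that any of the remaining 179 drives fails inside the 40-minute rebuild window, treating failures as independent at the amended MTTF.

```python
import math


def double_failure_probability(drives=180,
                               mttf_hours=480_000,
                               rebuild_hours=40 / 60,
                               life_years=5):
    """Probability of at least one double drive failure over the array's life.

    Assumed model: failures arrive at a constant rate of 1/MTTF per drive,
    and a second failure is fatal only if it occurs during a rebuild.
    """
    life_hours = life_years * 365 * 24
    expected_first_failures = drives * life_hours / mttf_hours
    p_second_during_rebuild = (drives - 1) * rebuild_hours / mttf_hours
    expected_double_failures = expected_first_failures * p_second_during_rebuild
    return 1 - math.exp(-expected_double_failures)


if __name__ == "__main__":
    amended = double_failure_probability()
    claimed = double_failure_probability(mttf_hours=1_200_000)
    print(f"amended MTTF (480,000h):   {amended:.2%}")
    print(f"manufacturer MTTF (1.2Mh): {claimed:.2%}")
```

Because the rebuild window enters the result linearly, the short rebuild time is what keeps the probability low despite the large number of drives at risk.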

All arrays can suffer from catastrophic failure which results in the rebuild of the entire array. In traditional arrays this can be caused by the failure of both controllers, failure of microcode, failure of batteries or many other circumstances. These events are very rare but have to be part of the data recovery plan. If the business loss from very long recovery time because of loss of an array is significant, local and/or remote replication solutions should be considered to mitigate the risk of loss.

Practical Issues for Deploying XIV arrays

It is important that the I/O density requirements for the array are understood and included in any RFP for the XIV. Field reports show that the IBM XIV is easy to install and deploy. Storage administrators who are experienced with the management of traditional LUN-based systems may be a little frustrated at the lack of ability to tune the performance of individual volumes and applications. Senior management should direct them to ensure that the characteristics of the workloads migrated to the XIV are compatible with its architecture, and that the overall I/O density is within the performance envelope. I/O response times should be monitored as the box is loaded, and headroom should be allowed for peak accesses to data. If the XIV is over-committed, all the volumes and applications supported will be affected by poor response times.
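A planning check along these lines can be sketched as follows. The `Workload` fields, the example applications and the 20% headroom default are all hypothetical; the two limits are the 4,860 disk IOPS and 71.1TB figures derived earlier in this article. Real sizing should rely on measured peak IOPS and cache hit rates.

```python
from dataclasses import dataclass

MAX_DISK_IOPS = 4860     # maximum application I/O rate to disk (from the article)
MAX_CAPACITY_TB = 71.1   # 90% of 79TB usable (from the article)


@dataclass
class Workload:
    name: str
    capacity_tb: float
    peak_iops: float
    cache_hit_rate: float  # fraction of I/Os served from cache


def envelope_check(workloads, headroom=0.2):
    """Check a proposed workload mix against the simple envelope model.

    headroom is the fraction of disk IOPS held back for peaks (an assumption).
    """
    disk_iops = sum(w.peak_iops * (1 - w.cache_hit_rate) for w in workloads)
    capacity_tb = sum(w.capacity_tb for w in workloads)
    iops_budget = MAX_DISK_IOPS * (1 - headroom)
    return {
        "disk_iops": disk_iops,
        "iops_utilization": disk_iops / MAX_DISK_IOPS,
        "capacity_utilization": capacity_tb / MAX_CAPACITY_TB,
        "fits": disk_iops <= iops_budget and capacity_tb <= MAX_CAPACITY_TB,
    }


if __name__ == "__main__":
    mix = [
        Workload("mail", capacity_tb=20, peak_iops=8000, cache_hit_rate=0.85),
        Workload("file-serving", capacity_tb=30, peak_iops=6000, cache_hit_rate=0.80),
    ]
    print(envelope_check(mix))
    # Adding a cache-unfriendly application pushes the mix outside the envelope.
    mix.append(Workload("random-oltp", capacity_tb=10, peak_iops=5000, cache_hit_rate=0.50))
    print(envelope_check(mix))
```

Because all volumes share the same drives, the check is deliberately made on the aggregate: one badly behaved workload consumes headroom for every application on the frame.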

Conclusions

Wikibon believes that the availability and performance of the XIV array is similar to that of other 1.5 class storage arrays. There are specific features that are beneficial in other 1.5 arrays.

Wikibon concludes that when the XIV is used within its performance and availability envelope as defined in this article, it offers tremendous value with very low administrative costs. This is true when compared with traditional arrays or other 1.5 arrays.

Action Item: For the right workloads, the IBM XIV offers solid performance and availability, as well as very good and sometimes outstanding price performance. By understanding the data density and cache friendliness of application data, IT will be able to ensure that the XIV is used for suitable applications, and can be loaded up to a maximum efficiency. Wikibon strongly recommends that the IBM XIV be included in an RFP for tier 1.5 and tier 2 workloads.

Footnotes: This article was inspired by an earlier blog entitled 8 reasons to pay attention to XIV and a subsequent article entitled 8 Reasons to Not Jump at XIV. In particular, the comments to these excellent contributions were very valuable indeed.
