Data Protection as a Service

How Virtualization and Cloud Computing are Changing Backup and Recovery

First, Some Random Thoughts

I usually don't write in the first person on the Wiki, but I'm making an exception for this post.

To me, Google retrieving lost emails from tape is like Derek Jeter going to the Red Sox.

I was wondering recently: if Oracle can do cloud-in-a-box, does that mean Amazon can summarily raise prices and start charging enormous maintenance fees?

LinkedIn and Pandora – What the Hell?

Did anyone else notice that Facebook is moving into Sun’s former Menlo Park HQ with a valuation 7X what Oracle paid for Sun?

“Big Data” is cool…it gives the cloud something to do.

Sometimes it’s not about knowing the answers but about knowing which questions to ask…

If only 15% of IT organizations do chargebacks, are their private clouds really clouds?

If security and management are the “evil twins of the cloud,” what’s backup? The paperboy?

Question: How do you back up 2 petabytes? Answer: You don’t.

Speed Read

If you don’t have time to read this whole blog post, read this section and you’ll catch my drift.

- Backup is broken – duh. Data protection is not one-size-fits-all… it’s not unisex. The time to eliminate the concept of a backup window is now. Data protection can’t just keep getting more and more expensive; it’s stupid to keep throwing good money after bad on overpriced insurance. Geico has it right.

- Does anybody remember data value?

- Fred Moore said it best: “Backup is one thing, recovery is everything.”

- Megatrend – virtualization, cloud, cloud, cloud, cloud, cloud…

- Virtualization changes everything… but server virtualization doesn’t equal storage virtualization. Nor does it equate to desktop virtualization. Virtualization breaks storage and it breaks backup.

- Archiving is a good thing – especially for backup windows. Think about how the cloud can help backup and archive by providing a new low cost storage tier.

- Big Data is not just big – it’s all about data value and speed to decisions.

- Here are ten trends impacting backup and recovery and some scenarios to consider going forward:

#1 Backup is Broken

Why is it that I’m always saying backup is broken? There are five reasons:

- Backup delivers no tangible business value. No one profits from backup except backup vendors. Backup delivers no real competitive advantage. You can have the best backup system in the world and it won’t make you better than the competition.

- Backup is too expensive. I’ve seen some ludicrous numbers, like for every $1 spent on primary storage, $4 is spent on backup and recovery. Sounds high, but someone from IDC told me that, so it must be true. Much of the spend goes to CAPEX, but the real bummer is annual maintenance. Companies like Symantec, IBM and EMC live off of backup software maintenance contracts. Want to extend backup to remote offices? Ha ha – pay up, friends. Want disaster recovery? Yeah – cha-ching!

- Backup is way complicated. There are backup agents everywhere, virtualization eliminates physical resources and creates IO bottlenecks, and there are a zillion application nuances (e.g., backing up Oracle databases or SAP). Backup is part science, part art, and it’s way too confusing to people. Confusion = cash for vendors.

- Data growth is outpacing backup. Why does EMC fund the IDC Digital Universe study each year? To remind everybody how much pain they’re in and that EMC is the aspirin. IDC says 1.2M petabytes were created and replicated last year, mostly unstructured data. That means more files, videos, wikis, SharePoint docs, email attachments, etc. All different formats, different versions, different frameworks… an unmanaged mess.

- Shortening RPOs and RTOs. Businesses are demanding less data loss and shorter recovery times. The consumerization of IT and globalization are driving speed, 24X7 business capabilities, agility… all the buzzwords that break traditional backup.

Industry Implications: The four-decade-old backup model is outdated.

#2 Most Backup is Architected as One-size-fits-all

Historically, backup has been architected as a one-size-fits-all endeavor. Specifically, most organizations have one approach to backing up data:

- Daily incremental

- Weekly full

Sometimes there’s consideration for a nightly full on critical systems like email, but generally that’s a one-off. Or maybe there’s an incredibly expensive replication solution like SRDF: good for banks but hardly a fungible, elastic asset. Moreover, backup is a big, honking batch job requiring tons of processing power. When we were running at 10% server utilization, that wasn’t so problematic, but virtualization changed all that and now we’re actually becoming resource-constrained for backups.

Here’s the cold, hard truth. For decades we’ve been marching to the continuous cadence of the backup window – and vendors have loved it. Data grows, backup windows get pressured – throw more technology at the problem. Have you ever wondered, for example, why data deduplication hasn’t solved the backup problem? It wasn’t designed to fix backup – its goal was to eliminate tape as the primary backup target.

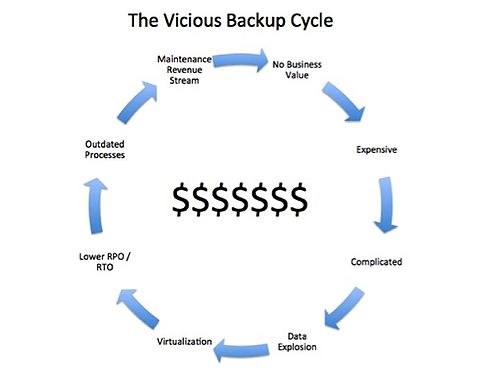

Despite all the technology, we’re still faced with having to backup more data in less time with faster recovery on flat or declining budgets. Do you see the cycle? Here’s the picture:

Industry Implications: This model is not sustainable. It doesn’t scale for today’s virtualization and cloud-based businesses. There are changes coming that are much simpler and more cost-effective.

#3 Data Value is not One-size-fits-all

While Data Domain’s slogan, “tape sucks,” was true, the fact is that backup, not just tape, is the real culprit. Organizations we support in the Wikibon community are starting to have more conversations about data value. Consider recovery, for example.

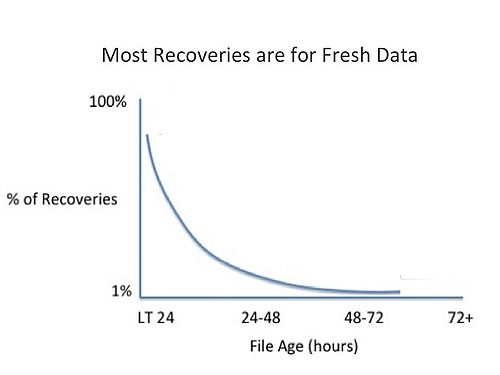

The vast majority of recovery is done on data that is less than 24 hours old. Maybe 10% of recoveries are 24-48 hours old and a very tiny percentage of recoveries are for data that is more than a few days old. So from a recovery standpoint, data age is a primary consideration of value. The curve is a steep one that looks something like this:

The point is that different data and different applications have different value. Order entry systems might be more valuable than a marketing video. Compliance is not one-size-fits-all; some data have higher risk profiles. So why do we treat everything as one-size-fits-all?

Industry Implications: Expect to have more conversations about information value. Why only perform a business impact analysis (BIA) for disaster recovery – why not for mainstream data protection? Begin to architect data protection solutions based on value (e.g. recovery value or application value).
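To make “architect based on value” a bit more concrete, here is a minimal Python sketch that maps datasets to protection classes using application value and data age. The class names, the high/medium/low labels and the 48-hour cutoff (loosely based on the recovery curve above, where nearly all restores are for data under 48 hours old) are hypothetical illustrations, not a Wikibon standard.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    name: str
    app_value: str             # hypothetical business rating: "high", "medium" or "low"
    hours_since_change: float  # age of the newest data in the set


# Hypothetical cutoff: most restores are for data less than 48 hours old.
RECENT_HOURS = 48


def protection_class(ds: Dataset) -> str:
    """Return a hypothetical protection class based on application value and data age."""
    if ds.app_value == "high":
        return "continuous"   # e.g. order entry: CDP or very frequent snapshots
    if ds.hours_since_change < RECENT_HOURS:
        return "snapshot"     # fresh data is what people actually restore
    if ds.app_value == "medium":
        return "nightly"      # conventional backup is good enough
    return "archive"          # cold, low-value data goes to the cheapest tier


for ds in [Dataset("order_entry", "high", 0.5),
           Dataset("team_wiki", "low", 6),
           Dataset("hr_records", "medium", 200),
           Dataset("marketing_video", "low", 720)]:
    print(ds.name, "->", protection_class(ds))
```

The point of the sketch is that the policy is explicit and per-dataset, so a BIA can set the inputs instead of every dataset inheriting the same daily-incremental, weekly-full schedule.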

#4 Server Virtualization Changes Everything

What’s the #1 reason IT organizations virtualized servers so aggressively? To take cost out by reclaiming underutilized processing power. What’s the #2 reason? To increase flexibility and agility.

The problem with reason #1 is that backup cycles used to be “free,” because organizations could run their backup jobs on underutilized servers. But virtualization increases storage demand by 4X (the metric here is IOs per MIP). The good news is that reason #2 means everything can be “containerized” and virtual machines can be moved very easily from point A to point B within the data center. This simplifies recovery because you don’t have to recover on the same machine where the backup was performed.

Fewer physical servers combined with virtualization means you’re in for an “IO storm.” This increases backup complexity and pressures the backup window.

Industry Implications: Organizations must begin to take an application focus for data protection. Applications live in virtual machines. Back up high-value systems as VMs that can be recovered elsewhere. Use the lowest-cost backup (e.g. VTL and cloud) for the rest of the applications.

#5 Storage Virtualization Enables New Backup Models

Traditional backup technologies march to the cadence of the backup window, which is increasingly an impediment to service levels demanded by business lines. Array-based replication technologies provide solid SLAs but are costly and difficult to manage. Most current backup technologies do not meet the requirements of many parts of the business and are a barrier to establishing IT as a service or cloud-like infrastructures. The requirement is a common application data protection model that can be tuned to the specific line-of-business budgets and departmental SLAs. Specifically, this capability must meet the protection and recovery requirements of a virtualized environment.

Some important technologies have allowed the previous batch backup model to be replaced by a continuous backup approach. These include:

- The ability to take application-consistent copies of data almost instantaneously and highly efficiently. Virtualized storage arrays have been the natural design point for this capability, because the virtualized architecture allows just the pointers to the blocks, rather than the blocks themselves, to be copied. Some applications also provide this snapshot capability.

- Operating system services, such as Microsoft VSS, that allow storage volumes to reflect a consistent set of data at a point in time.

- De-duplication and compression functionality within the storage array or application to minimize backup storage space and (optionally) to minimize line costs for remote replication.

- Backup suites which take advantage of the new technologies and provide integrated backup and restore capabilities.
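To illustrate why copying “just the pointers” makes snapshots nearly instantaneous, here is a toy Python model of a redirect-on-write volume. The `Volume` class and its methods are hypothetical and not any vendor’s API; the sketch ignores application consistency (the job VSS or the application does before the snapshot is taken) and the cataloguing a real backup suite adds.

```python
from typing import Dict, List, Optional


class Volume:
    """Toy volume showing pointer-based (redirect-on-write) snapshots.

    Data blocks sit in an append-only store; the volume keeps a table that
    maps each logical block number to a slot in that store. Taking a
    snapshot copies the table (the pointers), never the data blocks.
    """

    def __init__(self, nblocks: int) -> None:
        self.store: List[bytes] = [b"\0"]    # slot 0: shared all-zero block
        self.table: List[int] = [0] * nblocks
        self.snapshots: Dict[str, List[int]] = {}

    def write(self, lba: int, data: bytes) -> None:
        # New data always lands in a fresh slot, so snapshots that still
        # point at the old slot keep seeing the old contents.
        self.store.append(data)
        self.table[lba] = len(self.store) - 1

    def read(self, lba: int, snapshot: Optional[str] = None) -> bytes:
        table = self.table if snapshot is None else self.snapshots[snapshot]
        return self.store[table[lba]]

    def snapshot(self, name: str) -> None:
        # Cost is proportional to the number of pointers, not the data size.
        self.snapshots[name] = list(self.table)

    def changed_blocks(self, older: str, newer: str) -> List[int]:
        # A pointer that differs between two snapshots marks a block written
        # in between; only these blocks need to be shipped off site.
        a, b = self.snapshots[older], self.snapshots[newer]
        return [lba for lba, (x, y) in enumerate(zip(a, b)) if x != y]


vol = Volume(8)
vol.write(0, b"v1")
vol.snapshot("09:00")
vol.write(3, b"new")
vol.write(0, b"v2")
vol.snapshot("09:15")
print(vol.changed_blocks("09:00", "09:15"))  # [0, 3]
print(vol.read(0, "09:00"))                  # b'v1'
```

The `changed_blocks` comparison is what makes the incremental, off-site step cheap: only blocks whose pointers moved since the last snapshot need to travel.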

Snapshots and replication do not in themselves provide a secure backup system or a robust recovery system. The backup data has to be catalogued, integrated, and managed to provide these services. Some systems provide these services through agents in the servers. A key is to have a rich set of restore capabilities, including application and bare-metal recovery, physical-to-virtual and virtual-to-virtual recovery for VMware environments, file/object recovery and the recovery of a backup dataset as a VM. Ideally, all the functionality is managed from a single console.

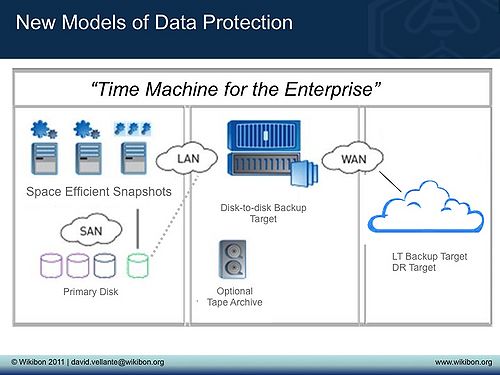

Wikibon believes this is the new model of data protection that is emerging. It is based on underlying snapshot and CDP technologies, i.e., consistent snapshots will be taken on a regular basis, and the incremental data from the last snapshot moved to a separate site. The level of service can be adjusted by varying how often the snapshots are run, how quickly they are transferred (mainly determined by telecommunication line speed), and how quickly the data can be recovered (quality of recovery system and amount of recovery data held locally and remotely). This allows the service to be tailored to meet the business recovery time, recovery point and budget requirements. Most important of all, it eliminates the backup window. This model offers a far more flexible and cost-effective strategy to meet infrastructure requirements for cloud computing and is more effective, in our view, than the traditional one-size-fits-all backup window model.
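The two service-level knobs in that paragraph, snapshot frequency and line speed, combine into a worst-case recovery point. Here is a back-of-the-envelope Python sketch under simplifying assumptions (a dedicated link, decimal units, no compression or protocol overhead, each delta finishing before the next snapshot); the function name and figures are illustrative only.

```python
def worst_case_rpo_minutes(interval_min: float, delta_gb: float, link_mbps: float) -> float:
    """Worst-case data loss, in minutes, for periodic snapshots shipped off site.

    Assumptions (hypothetical, for illustration): each snapshot's changed data
    (delta_gb, decimal gigabytes) is sent over a dedicated link of link_mbps
    megabits per second with no compression or protocol overhead, and the
    transfer finishes before the next snapshot is taken.
    """
    transfer_min = delta_gb * 8_000 / link_mbps / 60   # GB -> megabits -> seconds -> minutes
    if transfer_min >= interval_min:
        raise ValueError("link cannot keep up; replication backlog would grow without bound")
    # Worst case: disaster strikes just before the next snapshot finishes
    # arriving off site, losing a full interval plus its transfer time.
    return interval_min + transfer_min


print(worst_case_rpo_minutes(15, 9, 100))   # 27.0
print(worst_case_rpo_minutes(60, 9, 100))   # 72.0
```

Tightening the interval only helps while the link can drain each delta in time; past that point, buying bandwidth (or shrinking deltas with dedup and compression) is the lever that moves the RPO.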

The analogy we use for this model is Apple Time Machine for the enterprise.

Constraints to Adoption

Wikibon sees four major constraints to adoption of a service model for backup:

- The availability of applications and operating systems that support consistent snapshots. This is currently easiest in a Windows environment with VSS.

- The understandable reluctance to make major changes to complex data protection processes and procedures that work. Movement is likely to take place on an application-by-application basis, as new versions of software are implemented.

- The desire to see this model supported by the major backup software providers before adopting it.

- Reluctance to change within the organization: Wikibon observed that organizations that have adopted the new model did so because of severe pain in one area. CIOs need to offer lines of business selectively higher or lower levels of functionality, or watch them turn to cloud alternatives that provide either better service levels or lower cost.

Wikibon believes that all these constraints will be reduced in importance as this model takes hold as best practice over the next few years.

The future of data protection is space-efficient snapshots. This approach enables new tools for shorter RPOs (i.e. less data lost on a recovery). The technique organizations are using is to take a fast, space-efficient copy locally at a point in time and, once that’s done, shoot a copy off site. Then, at a convenient point in time, take another copy to a DR site and/or archive to tape.

Industry Implications: This approach uses the idea of continuous data protection (CDP). It’s the Apple “Time Machine for the Enterprise” model with CDP and storage virtualization as the underpinnings. Suppliers that can support heterogeneity get bonus points.

#6 Backup and Archiving: Strong Use Cases for the Cloud

Backup and archiving have different business objectives (e.g. fast recovery vs. compliance), different processes and often serve different business lines. While these disciplines are not one and the same, they are related. Backup and archive data tend to be inactive data, and archiving can cut well over 50% of an organization’s backup window. Why? Because it removes tons of data from the weekly full.

Most data (roughly two-thirds) is tier 3 or tier 4, meaning it should be archived or deleted. One Wikibon client in the pharmaceutical industry found that 50% of its file and print capacity was .pst files.
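The backup window arithmetic behind that claim is simple: a full backup takes roughly its size divided by sustained throughput, so whatever leaves the weekly full leaves the window. A minimal Python sketch, where the 60 TB full and 1,000 MB/s throughput are hypothetical and the archived share is the two-thirds tier 3/4 figure above:

```python
def backup_window_hours(full_tb: float, throughput_mb_s: float) -> float:
    """Hours to stream a full backup at a sustained throughput (decimal TB and MB)."""
    return full_tb * 1_000_000 / throughput_mb_s / 3600


full_tb = 60.0          # hypothetical weekly full
throughput = 1_000.0    # hypothetical sustained MB/s to the backup target
archived_share = 2 / 3  # tier 3/4 data moved to an archive (cloud or otherwise)

before = backup_window_hours(full_tb, throughput)
after = backup_window_hours(full_tb * (1 - archived_share), throughput)
print(f"before: {before:.1f} h, after: {after:.1f} h, saved: {1 - after / before:.0%}")
```

This assumes throughput stays constant and that the archived data was actually part of the weekly full; in practice the savings depend on how much cold data the full really contains.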

The cloud is often a good target for archiving. For many organizations, the cloud has better security than internal processes, and archiving doesn’t typically require high performance. The cloud may also be a good platform for certain DR use cases. There’s a battle going on right now where CEOs want to outsource everything to the cloud and CIOs rightly want to protect their companies from disastrous decisions.

Most organizations we talk with in the Wikibon community are pursuing private clouds. Surprisingly, about 37% of our members have no clear cloud strategy or think cloud is a meaningless buzzword. About 50% of our members are pursuing private clouds, with just 9% pursuing hybrid cloud strategies. A very small minority (~5%) are relying predominantly on public clouds.

Industry Implications: Cloud computing is still in its infancy. Backup and archiving are great use cases for the cloud. Key issues are choosing the right apps, seeding the cloud, getting the cloud on-ramps in place, having the right software (backup and archiving), and setting up policy engines to facilitate automation and classification based on data value (an “uber manager” for the cloud). Much work needs to be done, but organizations are saving 35-50% on infrastructure projects by using the cloud.

#7 Customers are Freaking out About Security - Privacy is Next

High-profile hacks against Sony, Sega, RSA, Lockheed Martin and many others are escalating. As more and more data moves to the cloud, the risks grow exponentially. Data is migrating quickly to external clouds, but are backup and recovery processes being updated at a concomitant pace? I don’t think so.

And what about privacy? Security and privacy are different. Security is about ensuring that data access is given only to the authorized person, machine, application or process, and that if unauthorized people get in, a clear trail can be recreated to figure out what happened. Privacy is the other side of the same coin. It’s all about how I want my data to be exposed, or not.

Organizations spend lots of time on security but privacy further complicates the matter. Who owns privacy? How does it relate to security? How will cloud security and increased awareness on privacy impact backup and recovery? These are serious questions that must be answered by IT organizations.

Industry Implications: Start thinking about data value and offering security and privacy services for different classes of data. This is the right mindset and data-protection-as-a-service dovetails with this philosophy.

#8 Big Data is Stressing Backup and Recovery

Big Data is all the rage these days. Big data is not only big; it’s unstructured, distributed across multiple locations and increasingly valuable, and it brings a new key: metadata. Protecting this data and metadata requires new data protection mindsets, as it may not be physically possible to back up, let alone restore, petabytes of data.

Organizations need to begin to understand new file system capabilities and metadata management approaches that can help with big data protection. For example, these approaches should provide mechanisms that allow metadata to be queried remotely and subsets of data to be brought to a central repository to be analyzed and protected. This repository will act as an analytics data store and often as an archive, and increasingly these archives will have corporate value. Protecting them will be fundamental, but a one-size-fits-all approach won’t suffice.

Industry Implications: Again – a mindset around data-protection-as-a-service with varying levels of protection, cost, performance and extensibility is fundamental to this future.

#9 Disk-based Data Protection is the Norm

Data protection has rapidly moved from a tape-based to a disk-based process as the price of disk has dropped and software technologies have evolved to accommodate data deduplication and both client- and target-based backup to disk. This has allowed organizations to keep more data online longer, which accelerates recovery and can deliver other business value through off-site replication and potentially long-term archiving.
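As a rough illustration of why deduplication made disk affordable as a backup target, here is a minimal Python sketch of fixed-size chunk deduplication using content hashes from the standard `hashlib` module. It is a simplification: many commercial products use variable-size (content-defined) chunking, and the same logic can run on the client (source side, before data crosses the network) or on the target. The function names and the 4 KB chunk size are assumptions for illustration.

```python
import hashlib
from typing import Dict, List


def dedupe(data: bytes, store: Dict[str, bytes], chunk_size: int = 4096) -> List[str]:
    """Add data to a shared chunk store; return the recipe (ordered digests) to rebuild it.

    Each chunk is keyed by its SHA-256 digest, so a chunk already seen in
    any earlier backup is not stored again.
    """
    recipe: List[str] = []
    for i in range(0, len(data), chunk_size):
        chunk = data[i:i + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)   # duplicate chunks are stored only once
        recipe.append(digest)
    return recipe


def rehydrate(store: Dict[str, bytes], recipe: List[str]) -> bytes:
    """Rebuild the original stream from the chunk store and a recipe."""
    return b"".join(store[d] for d in recipe)


# Two hypothetical nightly fulls that are mostly identical.
monday = b"A" * 4096 * 3 + b"B" * 4096
tuesday = b"A" * 4096 * 3 + b"C" * 4096
store: Dict[str, bytes] = {}
mon = dedupe(monday, store)
tue = dedupe(tuesday, store)
print(len(mon) + len(tue), "chunks backed up,", len(store), "chunks stored")
```

Repeated fulls of mostly unchanged data collapse to a handful of new chunks, which is what lets organizations keep more restore points online on disk.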

Tape is being relegated to a compliance medium, and disk-based systems are providing superior recovery for most use cases. This trend is not going to stop. Higher-capacity devices and flash-based systems are extending the storage hierarchy, as hybrid devices that perform both primary and secondary storage functions are hitting the market. In addition, software to manage the placement of data throughout the hierarchy is becoming a key innovation for organizations. Importantly, this software enables automation, which reduces costs and human error.

Industry Implications: Tape is not dead; however, its role is increasingly narrow and confined to 'last resort' and compliance use cases. The most cost-effective, high-bandwidth way to move data remains loading tapes on a truck (CTAM, the Chevy Truck Access Method, for you mainframers), but it certainly is not the preferred approach. Because most recoveries are for fresh data, recoveries are increasingly being done from local disk-based snapshots, not tape.

#10 The Time for Data Protection as a Service is Now!

Many IT practitioners I speak with roll their eyes when discussions about “the cloud” come up. They often hate the term. But universally, organizations are moving toward IT-as-a-service. Why is that?

Pressure from the corner office.

IT spending has gone from 5-7% of revenue in the late 1990s to 2-3% today. The drivers are:

- The consumerization of IT

- The quest for increased operational efficiency

- The drive for agility

- The rise of the service provider as a disruptor to traditional IT models

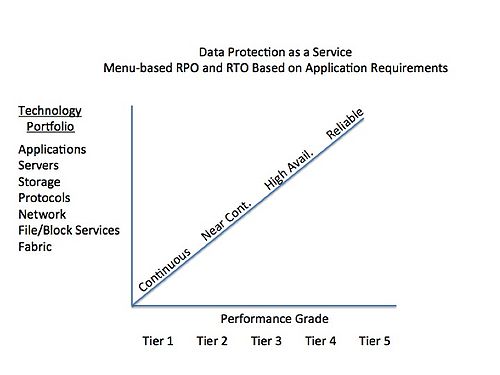

This is the CEO mandate to the CIO: Cut costs and get agile or I’ll find someone who will. This mandate is trickling down to data protection. There’s a shift from one-size-fits-all to a services catalogue. Here’s my interpretation of a three-dimensional model that my friend John Blackman, an IT practitioner proposes:

The way the model works is that the IT organization maintains a technology portfolio that is aligned with the strategic objectives of the business. Each layer in the portfolio has a primary and approved vendor, which is reviewed periodically. The IT organization offers a menu of services to the business based on its application requirements. The intersection of the technology portfolio, the performance grade and the protection level determines the cost per month to the business. Everything is offered as a service with an up-front (one-time) setup fee and a negotiated monthly price and contract term.

Not every combination across the three dimensions is supported, but enough are to provide a full range of options for the business.

This is data protection as a service.
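One way to picture the catalogue is as a lookup across the three dimensions, with some cells deliberately left empty. The Python sketch below is a hypothetical encoding of that idea: every tier name, price, multiplier and excluded combination is made up for illustration and does not come from John Blackman’s model.

```python
# All prices and multipliers below are hypothetical placeholders.
PORTFOLIO_BASE = {"standard": 100.0, "premium": 180.0}   # $ per TB-month
PERFORMANCE = {"bronze": 1.0, "silver": 1.5, "gold": 2.5}
PROTECTION = {"archive": 0.3, "nightly": 1.0, "snapshot": 1.6, "continuous": 3.0}

# Not every combination is offered; these exclusions are illustrative.
UNSUPPORTED = {("bronze", "continuous"), ("gold", "archive")}


def monthly_price(portfolio: str, performance: str, protection: str, tb: float) -> float:
    """Monthly charge at the intersection of the three catalogue dimensions."""
    if (performance, protection) in UNSUPPORTED:
        raise ValueError(f"{performance}/{protection} is not in the service catalogue")
    return PORTFOLIO_BASE[portfolio] * PERFORMANCE[performance] * PROTECTION[protection] * tb


def contract_total(setup_fee: float, monthly: float, months: int) -> float:
    """One-time setup fee plus the negotiated monthly price over the contract term."""
    return setup_fee + monthly * months


m = monthly_price("standard", "silver", "nightly", tb=10)
print(m, contract_total(5_000, m, 12))
```

A multiplicative model keeps the catalogue small and easy to explain to a business line; a real IT organization might instead price each supported cell individually.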

A key to this approach, at one end of the spectrum, is the ability to use space-efficient snapshots and continuous data protection to ‘tune’ RPO (recovery point objective) to business requirements. RPO refers to the amount of data the business is willing to lose, as measured in time. A theoretical RPO of zero would mean no data loss. This level could be dialed up in, say, fifteen-minute increments depending on the business requirements. The price would drop as the RPO increased, because less storage and simpler processes would be required to support the business objective.

The spectrum would move through virtual tape libraries (VTL), Big Data solutions and other data protection approaches that could all be offered as a service to the business.
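The RPO dial described above can be sketched as a price curve. In this hypothetical Python example, RPO is sold in 15-minute steps, price is highest at RPO zero and falls as RPO grows, with a floor for the cheapest tiers; the curve shape, base price and floor are assumptions chosen only to show the mechanism.

```python
def rpo_price(rpo_minutes: int, base: float = 1_000.0, floor: float = 50.0) -> float:
    """Hypothetical monthly price for a protection tier with the given RPO.

    RPO is sold in 15-minute steps. The price is highest at RPO zero
    (theoretical no-data-loss, e.g. synchronous replication) and falls as the
    RPO grows, never dropping below a floor. The curve shape is an assumption.
    """
    if rpo_minutes < 0 or rpo_minutes % 15:
        raise ValueError("RPO must be a non-negative multiple of 15 minutes")
    return max(floor, base / (1 + rpo_minutes / 15))


for rpo in (0, 15, 45, 240, 1440):
    print(f"RPO {rpo:>4} min: ${rpo_price(rpo):,.2f}/month")
```

Any monotonically decreasing curve would do; what matters for the service model is that each step on the dial has a published price the business can choose from.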

This takes the notion of backup from complicated, expensive insurance with no value to a more cost-effective, aligned and optimized data protection service that can be tailored to application requirements and quickly scaled to meet growing business demands.

The Services Angle

As organizations move toward virtualization, backup becomes increasingly problematic. The reason, as stated, is clear: Backup is a resource-intensive job, and consolidation through virtualization creates backup window bottlenecks. Organizations often discover that virtualization is an opportunity to re-architect backup processes, which is not something organizations wish to do every year. Nonetheless, chasing the ever-shrinking backup window is not a good answer either. Services organizations that understand the VMware and Hyper-V roadmaps, as well as backup software capabilities and the degree of integration required, can help minimize backup challenges.

Users should identify those service organizations with the following:

- Deep knowledge of data protection

- An understanding of not just backup but recovery and disaster recovery

- An understanding of de-duplication, both source and target side

- A track record of establishing backup processes and procedures

- A strong services background with the ability to design, deploy and manage infrastructure

Conclusions

As IT organizations move toward virtualization, cloud computing and IT-as-a-service, data protection will undergo a fundamental shift. The underpinnings of this transformation include a change from one-size-fits-all backup to a data protection offering that matches service levels with application requirements.

IT organizations would be wise to bring in outside help to navigate through this transition. There are several issues that an outside consultant can help manage, including:

- ROI. Building the business justification for data protection as a service. Data protection is still viewed as insurance, and a quality risk assessment and business impact analysis from an outsider can have a meaningful impact on upper management.

- Chargebacks. Most organizations (85%) don’t do chargebacks. Yet IT-as-a-service, by definition, must include some type of chargeback or showback mechanism.

- Processes. Core backup processes were largely developed decades ago for serial tape: pre-virtualization, pre-cloud. New complexities related to recovery, compliance and the like have been bolted on over time, and these processes need to be revisited and optimized for IT-as-a-service.

- Training and Education. Organizations have an opportunity to re-skill staff and gain increased leverage by developing data protection approaches that free up existing personnel. As discussed, however, new approaches will require new mindsets and existing staff will have to be educated and in some cases re-deployed on other tasks.

- Architecture. Data protection is not trivial. Virtualization complicates the process and creates IO storms. Architecting data protection solutions and a services-oriented approach that is efficient and streamlined can be more effectively accomplished with outside help. Don’t be afraid to ask.

The bottom line is that data protection is changing from a one-size-fits-all exercise viewed as expensive insurance to a more service-oriented solution that can deliver tangible value to the business by clearly reducing risk at a price aligned with business objectives. Understanding data protection holistically, spanning backup, recovery, disaster recovery, archiving and security, and as part of IT-as-a-service, is not only good practice; it can be good for your bottom line.

Action Item:

Footnotes: