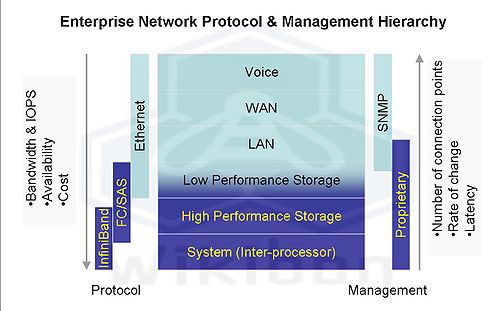

Ethernet offers improved capabilities for connecting storage and servers. iSCSI is increasingly offered on lower-end storage arrays, and the extensions to Ethernet that allow lossless connections have made Fibre Channel over Ethernet (FCoE) viable as a protocol. This has encouraged the vision of a single Ethernet fabric carrying all the connections within an organization:

- Voice connections;

- WAN connections;

- LAN connections;

- Low performance storage connections;

- High performance storage connections;

- Inter-processor connections.

The list above is ordered so that, moving down it:

- The number of connection points tends to decrease;

- The rate of change tends to decrease;

- The bandwidth requirements tend to increase;

- The availability requirements tend to increase;

- The maximum acceptable latency tends to decrease;

- The cost of the connected equipment tends to increase.

Ethernet is the protocol of choice where there are many connection points, and switching Ethernet traffic is easy and cheap. Changes are easy to make, and bandwidth has increased significantly, to 10Gb. The ability to oversubscribe the network further reduces the cost of Ethernet networks. The latest SNMP standards enable effective monitoring and management of the whole network with tools such as WhatsUp Gold.
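As a rough illustration of what oversubscription means, the sketch below computes the ratio of total host-facing bandwidth to total uplink bandwidth on a single switch. The function name and the port counts are hypothetical, chosen only to show the arithmetic; they do not describe any particular product.

```python
def oversubscription_ratio(edge_ports: int, edge_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Ratio of total edge (host-facing) bandwidth to total uplink bandwidth."""
    if uplinks <= 0 or uplink_gbps <= 0:
        raise ValueError("uplink capacity must be positive")
    return (edge_ports * edge_gbps) / (uplinks * uplink_gbps)


# Hypothetical access switch: 48 x 1Gb host ports sharing 2 x 10Gb uplinks.
ratio = oversubscription_ratio(48, 1.0, 2, 10.0)
print(f"{ratio:.1f}:1")  # 2.4:1
```

A ratio above 1:1 is acceptable when hosts rarely transmit at full rate at the same time, which is typical of general-purpose LAN traffic; it is exactly this statistical sharing that high-performance storage traffic tolerates poorly.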

In low-performance storage environments, Ethernet connections are both suitable and lower in cost. In many file-and-print environments, the Ethernet-based CIFS and NFS protocols provide adequate performance and improved connectivity to very large file systems.

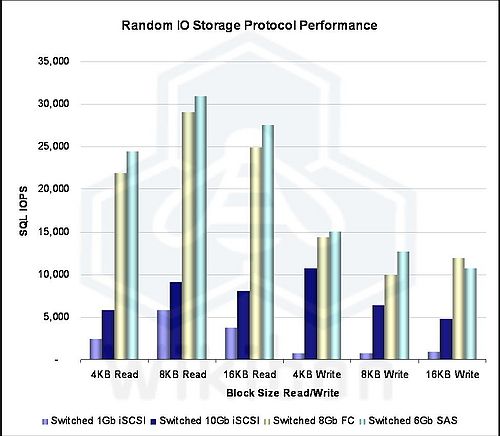

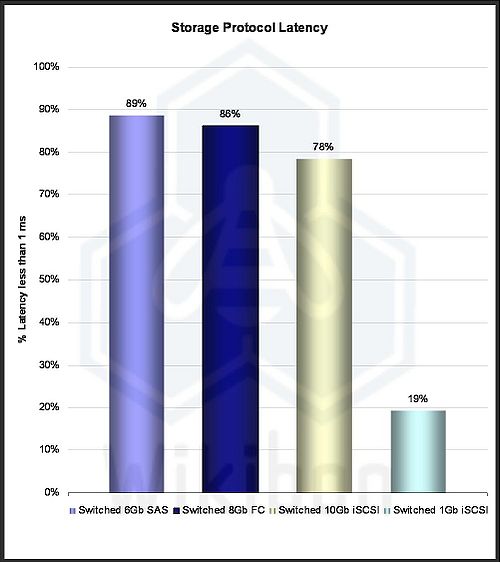

However, Ethernet does not work so well in database environments, where random IO performance for small block sizes and latency are the key requirements. Latency is the time taken to retrieve the data, and it matters most when tasks are serialized, as they often are in database systems and other high-performance systems. Dennis Martin gave a presentation at the 2011 Flash Summit comparing the IOPS performance and latencies of different storage protocols, which clearly shows that neither 10Gb nor 1Gb iSCSI is well suited to high-performance storage environments. Figures 1 and 2 are based on this presentation.

Source (Figures 1 and 2): Wikibon 2011, based on measurements by Dennis Martin presented at the 2011 Flash Summit, “Measuring Interface Latencies for SAS, Fibre Channel and iSCSI”, downloaded 9/20/2011
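Why latency matters so much for serialized work can be sketched with Little's law (throughput = outstanding work / latency). With only one IO outstanding, the best achievable IOPS is simply 1 / latency, and a chain of dependent IOs takes the sum of their latencies. The latency values below are hypothetical round numbers for illustration only; they are not taken from Dennis Martin's measurements.

```python
def max_iops(latency_us: float, outstanding_ios: int = 1) -> float:
    """Upper bound on IOPS from Little's law: throughput = concurrency / latency."""
    return outstanding_ios / (latency_us / 1_000_000)


def serialized_time_ms(dependent_ios: int, latency_us: float) -> float:
    """Elapsed time when each IO must wait for the previous one to complete."""
    return dependent_ios * latency_us / 1000.0


# Hypothetical per-IO latencies in microseconds (illustrative, not measured).
for name, lat in [("lower-latency protocol", 100.0), ("higher-latency protocol", 400.0)]:
    print(f"{name}: at most {round(max_iops(lat))} IOPS at queue depth 1, "
          f"{serialized_time_ms(20, lat):.1f} ms for 20 dependent reads")
```

With these assumed figures, quadrupling the per-IO latency cuts serialized throughput by a factor of four, no matter how much bandwidth the link has; this is why bandwidth alone does not make a protocol suitable for database workloads.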

Virtualization, erasure coding and flash storage are all changing the storage landscape. Virtualization is putting pressure on IO performance and making IO patterns more random. Erasure coding is enabling much lower costs for distributed storage. Flash storage is reducing or eliminating the long latencies caused by hard disk mechanics, so the storage network protocol now accounts for a relatively higher proportion of total IO time and is of increasing importance.
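The shift in where IO time is spent can be shown with simple arithmetic. The sketch below assumes a fixed network/protocol overhead and compares its share of total service time when paired with a slow mechanical device and a fast flash device. All three timings are hypothetical round numbers, not measurements.

```python
def network_share(device_us: float, network_us: float) -> float:
    """Fraction of total IO service time spent in the storage network/protocol."""
    return network_us / (device_us + network_us)


# Hypothetical figures: the same 100 us network/protocol overhead paired with
# a ~5 ms hard-disk access and a ~100 us flash access.
NETWORK_US = 100.0
for device, device_us in [("hard disk", 5000.0), ("flash", 100.0)]:
    share = network_share(device_us, NETWORK_US)
    print(f"{device}: {share:.0%} of IO time in the network")
# hard disk: 2% of IO time in the network
# flash: 50% of IO time in the network
```

Under these assumptions the network overhead goes from a rounding error to half of every IO, so choosing the storage network protocol becomes a first-order performance decision once flash is involved.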

For system interconnects, Ethernet can work for lower-performance systems but lacks the bandwidth and latency needed for high-performance systems. Of the top 100 supercomputers, only one (position 45 as of June 2011) uses 10Gb Ethernet as its processor interconnect fabric. Very low-latency InfiniBand from Mellanox and QLogic dominates this space.

Some networking companies and networking professionals have argued that Ethernet now has the potential to meet all the interconnect requirements of an organization. Wikibon believes that this is not the case, and that inter-system and high-performance storage connections require different protocols.

Very importantly, these system networks should be managed by the system side of the house (system, database and high-performance storage), not by the LAN and WAN networking groups. The availability requirements for storage networks are much higher, the performance requirements (especially latency) are more demanding, the rate of change is lower and the requirements for change control are more stringent. These requirements align better with the tools and mindset of the server and database management groups.

Because SNMP standards for storage are lacking, Wikibon advises, as a general rule, keeping high-performance storage networks and storage devices separate from general-purpose networks. However, the systems and storage side has an important role in helping the networking group by ensuring that the latest levels of SNMP are available on all server and storage equipment.

Action Item: Wikibon recommends that CTOs and CIOs continue to separate the management of high-performance storage and system networks from that of general-purpose LAN and WAN networks. Equally, Wikibon recommends that IT organizations work with networking vendors for general-purpose networking, and with system and high-performance storage vendors for high-performance, low-latency networks. This will help minimize (but not eliminate) the unproductive religious wars that can break out between the different mindsets the two environments require.
