Introduction

In a May 2010 study, IDC forecast that 1.2 million petabytes (1.2 zettabytes) of storage in all forms would be shipped in 2010. About 75% of this would be created by consumers and 25% by enterprises; however, IDC also forecast that 80% would be touched, hosted, managed, or secured by enterprise services. By 2020, about 50 times this amount of data could be shipped. This tsunami of data is both an opportunity and a challenge for CIOs and IT practitioners. The opportunity is that more data is available to help businesses become more proactive and effective in providing goods and services to clients. The challenge is that traditional ways of storing, managing, processing, and analyzing data are no longer adequate. New thinking is required to accommodate the directions that storage will take in the era of Big Data. Underpinning this data wave are three mega-trends:

- The simplification of IT infrastructures through convergence,

- Massive cost reductions through virtualization,

- New business models enabled by cloud computing.
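The capacity figures above are easier to compare once the units are explicit. The following Python sketch assumes decimal (SI) prefixes, the convention IDC and drive vendors use, and treats the 50x multiplier as the rough figure it is. It checks that 1.2 million petabytes equals 1.2 zettabytes and scales the consumer/enterprise split and the 2020 projection accordingly.

```python
# Decimal (SI) units, the convention IDC and drive vendors use.
PB = 10**15  # bytes per petabyte
ZB = 10**21  # bytes per zettabyte

shipped_2010_pb = 1.2e6   # "1.2 million petabytes" from the IDC forecast
consumer_share = 0.75     # share created by consumers
growth_by_2020 = 50       # "about 50 times" (approximate multiplier)

shipped_2010_zb = shipped_2010_pb * PB / ZB
print(f"2010 shipped:     {shipped_2010_zb:.1f} ZB")
print(f"  by consumers:   {shipped_2010_zb * consumer_share:.2f} ZB")
print(f"  by enterprises: {shipped_2010_zb * (1 - consumer_share):.2f} ZB")
print(f"2020 (x{growth_by_2020}):     {shipped_2010_zb * growth_by_2020:.0f} ZB")
```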

Infrastructure Management at a Crossroads

IT infrastructure has reached a crossroads, as new models of computing challenge existing IT management practices. The traditional practice is to create bespoke configurations that meet the projected performance, resilience, and space requirements of an application. This is wasteful: projections are rarely correct and are often over-specified, and the resulting over-provisioned resources are impossible to reclaim.

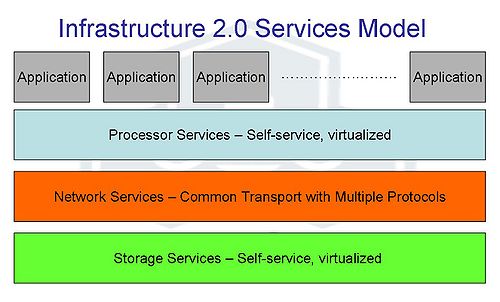

The modern paradigm is to build flexible self-service IT services from volume components that can be shared and deployed on an as-needed basis, with the level of use adjusted up or down according to business need. These IT service building blocks can be delivered as services from public cloud and SaaS providers or by the IT department itself (private clouds).

The components of private clouds could be self-assembled, but that model can lead to the same issues as the current one: specifying and maintaining all the parts requires a large staff overhead. Increasingly, vendors are providing a complete stack of components, including compute, storage, networking, operating system, and infrastructure management software.

Creating and maintaining such a stack is not a trivial task. It will not be sufficient for vendors or systems integrators to create a marketing or sales bundle of component parts and then hand over maintenance to the IT department; such a model saves little over traditional approaches. The stack has to be completely integrated, tested, and maintained by the supplier as a single SKU, and the resulting stack has to be simple enough that a single IT group can manage all of it and resolve any issue.

Equally important is that the cost of the stack is reasonable and that it scales out efficiently. Service providers are effectively using open-source software and focused specialist skills to lower the cost of their services. Internal IT will not be able to compete with service providers if its software costs are out of line.

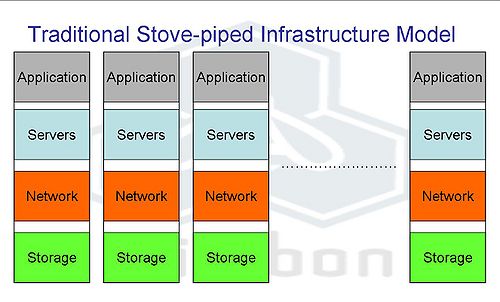

Infrastructure 2.0 is an IT world without stovepipes

Today procurement occurs in stovepipes, with networks, storage, servers, middleware, and applications each vying for budget and control. Application and database managers specify or strongly influence the equipment their applications will run on.

The benefit of this approach is a tailor-made infrastructure that exactly meets the needs of applications, and for the few applications that are critical to revenue generation, this will remain a viable model. However, 90% or more of applications do not need this approach, and Wikibon's financial models indicate that an Infrastructure 2.0 environment will cut operational costs by more than 50%.

The key to Infrastructure 2.0 is tackling the 90% long tail by taking common technology building blocks and aggregating them into a converged infrastructure. There are two major objectives in taking this approach:

- Drive down operational costs by using an integrated stack of hardware, operating systems, and middleware:

  - The current system of bespoke stacks fitted to individual applications results in inefficient maintenance and operating procedures.

  - The major challenges are selecting one or more infrastructure stacks, making the necessary organizational changes (flattening the operational organization with fewer, more generalized, and higher-quality staff), and managing the business issues of migrating to this new environment.

- Accelerate the deployment of applications:

  - Currently, IT shops spend 70% or more of the IT budget on maintaining the status quo, 25% on growing the business, and only 5% on transforming the business. A major objective of the transformation to Infrastructure 2.0 is to reduce the IT costs of maintaining the current application suite and increase the percentage of spend on business transformation.
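To illustrate the budget shift, the Python sketch below moves money from the maintenance share to the transformation share. The assumption that the 50% operational saving applies to the entire maintenance share, with a flat total budget, is hypothetical and optimistic; in practice only part of maintenance spend is infrastructure operations, so the real shift would be smaller.

```python
def rebalance(split: dict[str, float], maintain_savings: float) -> dict[str, float]:
    """Return a new budget split (fractions summing to 1.0) after cutting the
    'maintain' line by `maintain_savings` and moving the freed money to
    'transform'. The total budget is assumed to stay flat."""
    freed = split["maintain"] * maintain_savings
    new = dict(split)
    new["maintain"] -= freed
    new["transform"] += freed
    return new


today = {"maintain": 0.70, "grow": 0.25, "transform": 0.05}
# Hypothetical: apply the ~50% operational saving to the whole 'maintain' share.
after = rebalance(today, maintain_savings=0.5)
for line_item, share in after.items():
    print(f"{line_item:9s} {share:.0%}")
```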

Conclusions for Storage

Storage will become a key part of a converged infrastructure stack. In the previous decade, storage software functions migrated out of the server and down to the storage array, which became the main data-sharing point within the data center. New technologies and topologies are bringing some storage and storage functions back much closer to the server, and moving some further away into cloud storage. Storage functions will be distributed inside and outside the data center, in internal and external clouds. Last but most important, storage will be a key enabler of new business process and business intelligence applications that will be able to digest and present orders of magnitude more data than current applications.

Cloud Storage Services

Cloud services in all forms are providing instant compute services at competitive rates. Service providers are offering storage services such as Amazon S3, Microsoft Azure, and Google Storage for Developers. These use self-service models, which make buying and consuming the services easy and fast. The services use APIs to put and get data, which means that existing applications would need to be converted to take advantage of them. Not all workloads will be appropriate for cloud storage services: applications needing very low latency (e.g., heavy database applications) would probably be affected by network latency and are not candidates for external storage, and security and management of external storage are still works in progress.
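To illustrate why existing applications need conversion, here is a minimal Python sketch of a put/get interface. `InMemoryObjectStore` and `write_at_offset` are hypothetical names, not the API of S3, Azure, or Google Storage. The sketch only assumes, as is typical for object stores, that objects are written and read whole rather than updated in place, so a file-style write at an offset becomes a read-modify-write of the entire object.

```python
class InMemoryObjectStore:
    """Hypothetical stand-in for a cloud object store: a flat key space with
    whole-object put and get, and no in-place partial writes."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = bytes(data)  # replaces any existing object

    def get(self, key: str) -> bytes:
        return self._objects[key]         # raises KeyError if absent


def write_at_offset(store: InMemoryObjectStore, key: str, offset: int, patch: bytes) -> None:
    """Emulate a file-style write at `offset` using only get and put.
    Because objects are replaced whole, even a tiny update costs a
    read-modify-write of the entire object."""
    try:
        current = bytearray(store.get(key))
    except KeyError:
        current = bytearray()
    if len(current) < offset:
        current.extend(bytes(offset - len(current)))  # zero-fill any gap
    current[offset:offset + len(patch)] = patch
    store.put(key, bytes(current))


store = InMemoryObjectStore()
store.put("reports/q3.txt", b"revenue=100")
write_at_offset(store, "reports/q3.txt", 8, b"250")
print(store.get("reports/q3.txt"))
```

The adapter keeps the application's file-style call pattern, but every small write now moves the whole object across the network, which is one reason applications are usually rewritten around put/get rather than wrapped.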

IT is responding to these services by using them when appropriate and by providing its own set of storage services that need to be cost competitive with the external services, with a similar self-service model.

Conclusions for Storage

The public and private cloud infrastructure, rather than single arrays, will become the sharing point for data. Compute and storage management systems will ensure performance, mobility, and security of data across the cloud networks. IT will provide the business with a set of self-service storage services which will be delivered from a variety of internal and external sources.

The Impact of System Technology Changes on Storage

Technology trends that matter are the result of large volumes bringing prices down; large volumes usually come from consumer use. The most important technology trends within the data center are being driven by consumerism, and the large volumes of chips and devices being used in consumer electronics.

Multi-Core Processors

Processor cycle time used to be the main determinant of system speed, both for consumer PCs and for the data center servers derived from the same technologies. Moore's Law, in its popular form that cycle time would be cut in half and system performance would double every 18 months, remained king. That simple view has been complicated by the advent of multi-core systems. The latest processors are shipping with six cores, and processors with many more are in the pipeline. These cores communicate with each other over system buses and through L3 caches. Adding cores is a less efficient way of improving performance than reducing cycle time: each additional core increases performance by only about 50% of the previous core. Overall, however, the combination of faster cycle times and additional cores has accelerated Moore's Law.

These trends have two important side effects:

- PCs and servers are getting smaller, and heat density is increasing; you only have to look at the cooling systems of Intel Nehalem processors to appreciate the impact. Servers that previously took 2U of rack space now take only 1U, and the trend is toward multiple servers per 1U of rack space.

- The space for additional cards in servers is becoming extremely limited. This has led to a convergence of card and motherboard technologies that allows cards to become multi-functional. For example, the collection of cards required to support iSCSI, FC, 1GbE, and 10GbE can now be replaced by a single CNA (Converged Network Adapter). An important side effect has been to increase the bandwidth requirements of systems.

Conclusions for Storage

The key impact of processor technologies on storage is that the bandwidth and I/O requirements of future servers will be much higher, and that processors will be capable of handling any type of I/O thrown at them. Storage systems will need much higher throughput. Part of that throughput will come from increased integration of servers and storage (e.g., blades using virtual I/O) and part from flash storage.

Flash (NAND) Storage

Consumers are happy to pay a premium for flash memory over disk because of its convenience. For example, the disk drives in early iPods were replaced by flash because flash requires very little battery power and has no moving parts. The results were much smaller iPods that would work for days without recharging and would still work after being dropped. Because of huge consumer volumes, flash storage costs dropped dramatically.

In the data center, system and operating system architectures have had to contend with the volatility of processors and high-speed RAM storage: if power was lost to the system, all data in flight was lost. The solutions were either to protect the processors and RAM with complicated and expensive battery backup systems or to write the data out to disk storage, which is non-volatile. The difference between the speed of disk drives (measured in milliseconds, 10⁻³ s) and processor speed (measured in nanoseconds, 10⁻⁹ s) is huge and is a major constraint on system speed. However fast the processor, every system waits for disk I/O at the same speed. This is especially true for database systems.

Flash storage is much faster than disk drives (measured in microseconds, 10⁻⁶ s) and is persistent: when the power is removed, the data is not lost. It can provide an additional memory level between disk drives and RAM. The impact of flash memory is already being seen in the iPad effect. The iPad is always on, and its application response time compared with traditional PC systems is amazing. Applications are being rewritten, and operating systems changed, to take advantage of this additional layer. iPads and similar devices are forecast to have a major impact on portable PCs, and the technology transfer will have a major impact within the data center, both at the infrastructure level and in the design of all software.
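To put the latency gap in perspective, the Python sketch below converts access times into processor cycles spent waiting. The access times and the 3 GHz clock are assumed order-of-magnitude values for illustration, not measurements.

```python
CLOCK_HZ = 3e9  # hypothetical 3 GHz processor core

# Assumed order-of-magnitude access times, for illustration only.
ACCESS_TIME_S = {
    "DRAM": 100e-9,   # ~100 nanoseconds
    "flash": 100e-6,  # ~100 microseconds
    "disk": 5e-3,     # ~5 milliseconds for a random read on a spinning drive
}

for tier, seconds in ACCESS_TIME_S.items():
    cycles = seconds * CLOCK_HZ
    print(f"{tier:6s} {seconds * 1e6:8.1f} µs  ~{cycles:>12,.0f} cycles waiting per access")
```

Under these assumptions, a random disk read idles the core for millions of cycles, while flash cuts the wait by a factor of about 50, which is why it fits naturally as a layer between RAM and disk.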

Conclusions for Storage

Flash will become a ubiquitous technology, used in processors as an additional memory level, in storage arrays as a read/write “flash cache”, and as a high-speed disk device. Systems management software will place high-I/O “hot spots” and low-latency I/O on flash and allow high-density disk drives to store the less active data.
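A minimal Python sketch of hot-spot placement, assuming equal-sized extents and a flash tier measured in extent slots. The `Extent` type and `place_extents` function are hypothetical; real tiering software also weighs extent size, the read/write mix, and how recently the I/O occurred.

```python
from dataclasses import dataclass


@dataclass
class Extent:
    extent_id: int
    iops: float  # observed I/O rate for this chunk of data


def place_extents(extents: list[Extent], flash_slots: int) -> dict[str, list[int]]:
    """Greedy hot-spot placement: the `flash_slots` busiest extents go to flash,
    all remaining extents go to high-density (SATA) disk."""
    ranked = sorted(extents, key=lambda e: e.iops, reverse=True)
    return {
        "flash": [e.extent_id for e in ranked[:flash_slots]],
        "sata": [e.extent_id for e in ranked[flash_slots:]],
    }


# Hypothetical I/O rates for six equal-sized extents.
extents = [Extent(i, iops) for i, iops in enumerate([5, 900, 12, 450, 3, 60])]
placement = place_extents(extents, flash_slots=2)
flash_iops = sum(e.iops for e in extents if e.extent_id in placement["flash"])
share = flash_iops / sum(e.iops for e in extents)
print(placement)
print(f"{share:.0%} of I/O served from flash")
```

Because I/O is usually skewed toward a small fraction of the data, a flash tier holding a third of the extents here absorbs most of the I/O.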

Overall within the data center, flash storage will pull storage closer to the processor. Because of the heat density constraints mentioned above, it is much easier to put low power flash memory rather than disk drives very close to the processor.

As more storage moves closer to the processor, some storage functionality will also move away from storage arrays and filers toward the processor, a trend made easier by multi-core processors that have cycles to spare. The challenge for storage management will be to share a much more distributed storage resource between processors. Future storage management will have to handle storage inside servers as well as traditional SANs and filers outside them.

Beyond flash, other exciting persistent memory technologies include Memristor from HP and PCM (phase-change memory) from IBM. Memristor offers the possibility of combining non-volatile memory with logic functionality, which could allow the development of “smart” storage. Non-volatile storage has significant funding and research momentum behind it and is of major interest for both consumer devices and enterprise computing.

Hypervisors and Virtualization

Volume servers derived from the consumer space could typically run only one application per server. The result was very low server utilization, usually well below 10%. Specialized servers that can run multiple applications achieve higher utilization rates, but at much higher system and software costs.

Hypervisors such as VMware, Microsoft Hyper-V, Xen, and Oracle VM have changed the equation. Hypervisors virtualize the system resources and allow them to be shared among multiple operating systems. Each operating system believes that it controls a complete hardware system, while the hypervisor is actually sharing those resources among multiple operating systems.

The result of this innovation is that volume servers can be driven to much higher utilization levels, 3-4 times that of stand-alone systems. This makes low-cost volume servers that are derived directly from volume consumer products such as PCs much more attractive as a foundation for processing and much cheaper than specialized servers and mainframes. There will still be a place for very high-performance specialized servers for some applications such as some performance critical databases, but the volume will be much lower.
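A back-of-the-envelope consolidation calculation in Python, assuming identical servers, CPU as the only constraint, and hypothetical utilization figures (8% stand-alone, 30% virtualized, roughly 3.75 times higher). Memory, I/O, and peak-load headroom would raise the real host count.

```python
import math


def hosts_needed(servers: int, avg_util: float, target_util: float) -> int:
    """Identical hosts needed to carry the same total CPU load when each host
    runs at `target_util` instead of the stand-alone `avg_util`."""
    total_load = servers * avg_util  # in units of "one fully busy server"
    return math.ceil(total_load / target_util)


standalone_servers = 100
util_standalone = 0.08   # hypothetical: one application per server
util_virtualized = 0.30  # hypothetical: about 3.75x higher after virtualization

n = hosts_needed(standalone_servers, util_standalone, util_virtualized)
print(f"{standalone_servers} stand-alone servers -> {n} virtualized hosts")
```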

The impact of server virtualization on storage is profound. The I/O path to a server will serve many different operating systems and applications, so the access patterns seen by the storage devices will be much less predictable and much more random. Higher server utilization (and the multi-core processors mentioned above) means that I/O volumes (IOPS, I/Os per second) will be higher, much higher. There will be fewer spare processor cycles for housekeeping activities such as backup.
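The shift from predictable to random access patterns (often called the "I/O blender" effect) can be demonstrated with a small Python simulation. The sketch assumes, hypothetically, four VMs that each read their own virtual disk strictly sequentially and a hypervisor that interleaves their requests round-robin; the sequentiality measure is a simple one chosen for illustration.

```python
def sequential_fraction(lbas: list[int]) -> float:
    """Fraction of requests whose block address immediately follows the
    previous request's address (a simple measure of sequentiality)."""
    if len(lbas) < 2:
        return 1.0
    hits = sum(1 for prev, cur in zip(lbas, lbas[1:]) if cur == prev + 1)
    return hits / (len(lbas) - 1)


def vm_stream(base_lba: int, length: int) -> list[int]:
    """One VM reading its own virtual disk strictly sequentially."""
    return [base_lba + i for i in range(length)]


# Four VMs whose virtual disks live in different regions of the same array.
streams = [vm_stream(base, 100) for base in (0, 10_000, 20_000, 30_000)]

# Hypothetical hypervisor behavior: requests are interleaved round-robin
# onto the shared I/O path to the array.
interleaved = [lba for batch in zip(*streams) for lba in batch]

print(f"one VM's stream:   {sequential_fraction(streams[0]):.0%} sequential")
print(f"seen by the array: {sequential_fraction(interleaved):.0%} sequential")
```

Each VM is perfectly sequential on its own, yet the merged stream the array receives contains no sequential runs at all.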

In addition, virtualization is changing the way storage is allocated, monitored, and managed. Instead of defining LUNs and RAID levels, virtualized systems define virtual disks and expect array information to be presented in terms of the virtual machines, their virtual disks, and the applications they are running.

Conclusions for Storage

Storage arrays will have to be able to serve much higher volumes of random read and write I/Os from applications using multiple protocols. In addition, storage arrays will need to work with virtualized systems and speak the language of virtualization administrators. Newer storage controllers (often implemented as virtual machines) are evolving that will completely hide the complexities of traditional storage (e.g., LUNs and RAID structures), replacing them with autonomic storage focused on providing the metrics that enable virtual machine operators (e.g., VMware administrators) to monitor performance, resource utilization, and Service Level Agreements (SLAs) at the business application level.

Storage networks will have to adapt to providing a shared transport for the different protocols. Adapters and switches will increasingly use lossless Ethernet as the transport mechanism, with the different protocols running over it.

Backup processes will need to be re-architected and linked to the application. Application-consistent snapshots and continuous backup are among the technologies that will be important.

Overall, there is a strong trend towards a converged infrastructure, where storage function placement is more dynamic, being placed optimally in arrays, in virtual machines or in servers/blades.

Server Racks and Blades

The technology trends discussed above are leading to the deployment of large numbers of small volume servers in racks and blades. One of the challenges of such a high density of servers is connecting them to each other, to storage, and to users. The days of making changes within a rack, or of physically wiring up a rack, are numbered.

Blade systems now provide virtual I/O, such as HP Virtual Connect technology. This provides a central point for connecting all the servers to each other and to the outside networks. There is always a trade-off between latency, bandwidth, connectivity, and distance when it comes to networks. High-performance processor-to-processor networks require high bandwidth and low latency but don't need distance or communication flexibility; InfiniBand is such a solution. High-performance storage networks require much longer distances, point-to-point communication, and medium levels of bandwidth and latency. For user networks, communication flexibility is the most important factor. Technologies such as Virtual Connect can be dynamically changed to provide the optimum transport, protocol, and connectivity for different parts of the system.

Conclusions for Storage

The storage networks will need to be virtualized and dynamically modifiable. Storage resources within the rack/blade systems (flash and disks) and outside the servers (storage arrays) will need to be interconnected and managed as a seamless unified storage system.

Disk Drive Technologies

The design of disk drives has not changed much in the last 50 years. Advances in silicon-based head technology allow higher areal densities to be read from and written to the platters. At a certain point in the technology cycle, it becomes more cost-effective to reduce the size of the disk, which improves environmentals, space, and cost of manufacture.

2.5 inch disks are now becoming cheaper than 3.5 inch disks for performance drives. Even with its distance limitation of 8 meters, SAS is a better back-end protocol than FC (actually FC-AL, Fibre Channel Arbitrated Loop, which is not point-to-point). As a result, performance drives are migrating rapidly from 3.5 inch FC to 2.5 inch SAS drives, which gives much better environmentals and better performance.

The one exception is SATA drives, which are still more cost-effective in a 3.5 inch form factor and will probably continue to ship in this size for some time. As a result, many arrays are being designed with the ability to mix 2.5 inch and 3.5 inch drives.

The front-end protocols remain mainly iSCSI and FC. For short distances (8 meters) and small numbers of drives (32), SAS is emerging as an attractive high-speed alternative (6 Gb/s) for connecting to storage within a rack or in an adjacent rack, or for small integrated systems.

Solid-state drives (SSDs) were the first introduction of flash into storage. They were simple to implement because they used the same software as disk; no application code or system algorithms had to be changed. The introduction of flash caches (usually as separate read and write caches) in the array or server provided a more efficient way of using flash, with less protocol overhead for most workloads. Because flash is persistent, well-mannered workloads can use it for active data, with SATA drives storing the inactive data. High-performance SAS drives will become less useful. As a result, 2.5 inch drive technology will probably be the last form factor change for the next decade or longer.
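A minimal Python sketch of a flash read cache in front of SATA capacity, assuming a least-recently-used (LRU) eviction policy; real array caches use more sophisticated policies and handle writes separately. The class name and the synthetic workload, in which nine of every ten reads go to a small hot set, are hypothetical.

```python
from collections import OrderedDict


class FlashReadCache:
    """LRU read cache: a small fast tier (standing in for flash) in front of a
    large slow backing store (standing in for SATA disk)."""

    def __init__(self, capacity_blocks: int, backing: dict[int, bytes]) -> None:
        self.capacity = capacity_blocks
        self.backing = backing
        self.cache: OrderedDict[int, bytes] = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, block: int) -> bytes:
        if block in self.cache:
            self.cache.move_to_end(block)     # mark as most recently used
            self.hits += 1
            return self.cache[block]
        self.misses += 1
        data = self.backing[block]            # slow path: fetch from disk
        self.cache[block] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)    # evict least recently used block
        return data


backing = {i: bytes([i % 256]) for i in range(2000)}
cache = FlashReadCache(capacity_blocks=50, backing=backing)

# Synthetic "well-mannered" workload: 9 of every 10 reads hit a small hot set
# (block numbers below 40); the 10th read touches a cold block only once.
for r in range(1000):
    block = r % 40 if r % 10 else 100 + r
    cache.read(block)

print(f"hit ratio: {cache.hits / (cache.hits + cache.misses):.1%}")
```

A workload like this, whose active data fits in the cache, is what the text calls well-mannered: after warm-up, only the one-off cold reads go to SATA.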

Conclusions for Storage

Storage needs will be met by a different hierarchy than before, with flash-caches and SATA taking a predominant role as the most cost effective combination for most “well-mannered” workloads. Smaller 2.5 inch SAS drives will be used where the workload is “ill-mannered”. Storage professionals and executives should be educating application developers about designing applications to take best advantage of emerging flash technologies together with the densest available SATA technology.

Storage Efficiency Technologies

Storage efficiency is the ability to reduce the amount of physical data on the disk drives required to store the logical copies of the data as seen by the file systems. Many of the technologies have become or are becoming mainstream capabilities. Key technologies include:

- Storage virtualization:

  - Storage virtualization allows volumes to be logically broken into smaller pieces and mapped onto physical storage. This allows much greater efficiency in storing data, which previously had to be stored contiguously. The earliest examples were the NetApp WAFL file system, the HP EVA virtual system, and 3PAR storage virtualization. This technology also allows dynamic migration of data within arrays, which can be used for dynamic tiering systems. Sophisticated tiering systems, which allow small chunks of data (sub-LUN) to be migrated to the best place in the storage hierarchy, have become a standard feature in most arrays.

- Thin provisioning:

  - Thin provisioning was introduced by 3PAR (acquired by HP in 2010) and is the ability to provision storage dynamically from a pool of storage that is shared between volumes. This capability has been extended to include techniques for detecting zeros (blanks) in file systems and using no physical space to store them. This again has become a standard feature expected in storage arrays.

- Snapshot technologies:

  - Space-efficient snapshot technologies store just the changed blocks and therefore reduce the space required for copies. This provides the foundation of a new way of backing up systems, using periodic space-efficient snapshots and replicating these copies remotely.

- Data de-duplication:

  - Data de-duplication was initially introduced for backup systems, where many copies of the same or nearly the same data were being stored for recovery purposes. This technology is now extending to inline production data and is set to become a standard feature on storage controllers.

- Data compression:

  - Originally, data compression was an offline process used to reduce the amount of data held. Data compression is used in almost all tape systems, is now being extended to online production disk storage systems, and is set to become a standard feature in many storage controllers. The standard compression algorithms are based on LZ (Lempel–Ziv) and typically give compression ratios between 2:1 and 3:1. Compression is not effective on files that already have compression built in (e.g., JPEG image files and most audio-visual files).
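Three of these space-saving techniques can be sketched at the block level in a few lines of Python. This is an illustration under stated assumptions, not any vendor's implementation: a hypothetical fixed 4 KiB block size, SHA-256 fingerprints for de-duplication, zero-block detection in the spirit of thin provisioning, and the standard-library `zlib` module (DEFLATE, a Lempel–Ziv derivative) for compression. The synthetic sample is deliberately repetitive, so its compression ratio is far higher than the 2:1 to 3:1 typical of production data.

```python
import hashlib
import zlib

BLOCK = 4096  # hypothetical fixed block size in bytes


def physical_footprint(data: bytes) -> dict[str, int]:
    """Estimate bytes stored after three block-level steps: zero-block
    detection (as in thin provisioning), hash-based de-duplication, and
    compression of the remaining unique blocks."""
    blocks = [data[i:i + BLOCK] for i in range(0, len(data), BLOCK)]
    zero_blocks = 0
    unique: dict[str, bytes] = {}  # SHA-256 fingerprint -> block contents
    for block in blocks:
        if not any(block):         # all-zero block: no physical space needed
            zero_blocks += 1
            continue
        unique.setdefault(hashlib.sha256(block).hexdigest(), block)
    return {
        "logical": len(data),
        "zero_blocks": zero_blocks,
        "after_dedup": sum(len(b) for b in unique.values()),
        "after_compression": sum(len(zlib.compress(b)) for b in unique.values()),
    }


# Synthetic sample: three identical data blocks, two zero blocks, a short tail.
record_block = b"customer record " * 256       # exactly one 4096-byte block
sample = record_block * 3 + bytes(BLOCK) * 2 + b"unique tail data"
print(physical_footprint(sample))
```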

Conclusions for Storage

These storage efficiency technologies can have a significant impact on the amount of storage saved. However, they do not affect the number of I/Os and the bandwidth required to transfer the I/Os.

Storage efficiency techniques will be applied to whichever part of the converged infrastructure is most appropriate. The market momentum is to apply these technologies end-to-end to avoid rehydrating the data as it moves through the system. This will allow savings throughout the stack, particularly in the data that has to be stored and transmitted, and will improve both system and storage efficiency.

Big Data

Big data is data that is too large to process using traditional methods. It originated with Web search companies, which had the problem of querying very large distributed aggregations of loosely structured data. Google developed MapReduce to support distributed computing on large data sets on computer clusters. Apache Hadoop is an open-source software framework, based on the MapReduce model, that supports data-intensive distributed applications; it is integrated and distributed commercially by companies such as Cloudera.
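The MapReduce programming model can be sketched in plain Python as three phases: map, shuffle, and reduce. This shows the model only; it does not use Hadoop's API, and the in-memory shuffle stands in for what a cluster does across nodes.

```python
from collections import defaultdict
from collections.abc import Iterable
from itertools import chain


def map_phase(doc: str) -> list[tuple[str, int]]:
    """Map: emit (word, 1) for every word in one input split."""
    return [(word.lower(), 1) for word in doc.split()]


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all emitted values by key."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine all values for one key."""
    return key, sum(values)


splits = ["big data on big clusters", "move code to the data", "the data stays put"]
mapped = chain.from_iterable(map_phase(s) for s in splits)
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(counts)
```

On a real cluster, each map task runs on the node holding its input split, and the shuffle routes each key to the reducer responsible for it.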

Big data has the following characteristics:

- Very large distributed aggregations of loosely structured data, often incomplete and inaccessible:

  - Petabytes/exabytes of data,

  - Millions/billions of people,

  - Billions/trillions of records;

- Loosely structured and often distributed data:

  - Often including time-stamped events,

  - Often incomplete;

- Flat schemas with few complex interrelationships,

- Often including connections between data elements that must be probabilistically inferred.

Applications that involve big data can be either transactional (e.g., Facebook, PhotoBox) or analytic. Big data projects are driven by business people looking to extract every ounce of value from data. The projects are risky, and much shorter but more intense than traditional data warehouse projects. Data warehouses can be used as a source of data, but the net is usually cast much wider: into the organization, suppliers, partners, and the Web itself. When the projects pay off, the returns are very high, but many projects are abandoned.

The very large amounts of data involved in big data projects make it infeasible (because of the cost and elapsed time of transferring the data) to use the traditional model of bringing data to a central data warehouse. For big data problems, the code is usually moved to the data, which is held in a simple shared-nothing model (often distributed, but possibly local for transactional systems), and subsets of the data are extracted and returned to a single location. This shared-nothing approach is used both for distributed data and for processing data centrally.
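The shared-nothing, move-the-code-to-the-data pattern can be sketched in Python as local partial aggregation followed by a small merge. The node count, records, and round-robin partitioning below are hypothetical; the point is that each node returns a handful of partial totals instead of shipping its rows to a central location.

```python
from collections import Counter

NODES = 4  # hypothetical cluster size

# Hypothetical (region, sale_amount) rows. In a shared-nothing system each node
# owns a disjoint partition of the rows on its own local storage.
records = [(f"region-{i % 3}", i % 100) for i in range(10_000)]


def partition(rows: list, nodes: int) -> list[list]:
    """Give each row to exactly one node (round-robin by position)."""
    parts: list[list] = [[] for _ in range(nodes)]
    for i, row in enumerate(rows):
        parts[i % nodes].append(row)
    return parts


def local_aggregate(rows: list) -> Counter:
    """Runs where the rows live: total sales per region for this partition."""
    totals: Counter = Counter()
    for region, amount in rows:
        totals[region] += amount
    return totals


# Ship the code to each partition; only the small per-node results come back.
partials = [local_aggregate(p) for p in partition(records, NODES)]
result = sum(partials, Counter())

rows_if_centralized = len(records)
items_returned = sum(len(p) for p in partials)
print(dict(result))
print(f"centralized: move {rows_if_centralized} rows; "
      f"shared-nothing: return {items_returned} partial totals")
```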

New database systems using this shared-nothing approach have been developed by Aster Data Systems (acquired by Teradata), Greenplum (acquired by EMC), Netezza (acquired by IBM), and Vertica (acquired by HP). For transactional big data systems, shared-nothing databases such as Clustrix and NimbusDB can be used. This approach is more cost-effective and facilitates an iterative approach to solving problems by enabling far faster methods of loading data.

Conclusions for Storage

As a result of the requirements of big data, storage systems have had to adapt. Shared-nothing, distributed models result in processors and storage being more tightly integrated in the infrastructure. Management of metadata (information about the data) is key to understanding where data resides. High-capacity, high-bandwidth storage systems are needed centrally to manage single file systems with potentially billions of files and metadata records.

Network Directions

Networks are converging and flattening. The most ubiquitous protocol is Ethernet, and the addition of lossless extensions to Ethernet expands the options for storage and network convergence. There is still a place in IT for InfiniBand, which has higher bandwidth and much lower latency than Ethernet but limited distance and connectivity. For many server-to-server protocols, and for high-end storage systems, InfiniBand is increasingly preferred as the interconnect.

Conclusions for Storage

For storage networks, the main protocols are FC, NFS, and iSCSI. Native FC will still be used in large, very high-performance systems, but because of the space limitations on servers referenced above, converged network adapters (CNAs) will be used increasingly to run multiple protocols over the Ethernet transport. QLogic recently announced a rich set of functions for its third generation of CNAs, which are likely to set the standard for the next few years. The converged storage protocols will be Fibre Channel over Ethernet (FCoE) and IP, with iSCSI sitting on top of IP (together with CIFS and NFS for file access).

Milestones for Infrastructure 2.0 Implementation

Some key milestones are required for Infrastructure 2.0 implementation in general, and for storage within Infrastructure 2.0 in particular:

- Sell the vision to senior business managers,

- Create an Infrastructure 2.0 team, including cloud infrastructure,

- Set aggressive targets for Infrastructure 2.0 implementation and cost savings, in line with external IT service offerings,

- Select a stack for each set of application suites, choosing between two approaches:

  - Choose a single-vendor Infrastructure 2.0 stack from a large vendor that can supply and maintain the hardware and software as a single stack. The advantage of this approach is that the cost of maintenance within the IT department can be dramatically reduced if the software is treated as a single SKU and updated as such, and the hardware firmware is treated the same way. The disadvantages are a lack of choice for components of the stack and a higher degree of lock-in.

  - Choose an ecosystem Infrastructure 2.0 stack of software and hardware components that can be intermixed. The advantage of this approach is greater choice and less lock-in, at the expense of significantly increased costs of internal IT maintenance.

- Reorganize and flatten IT support by stack(s), and move away from an organization supporting stovepipes. Give application development and support groups the responsibility to determine the service levels required, and the Infrastructure 2.0 team the responsibility to provide the infrastructure services to meet the SLA.

- Create a self-service IT environment and integrate charge-back or show-back controls.

From a strategic point of view, it will be important for IT to compete with external IT infrastructure suppliers where internal data proximity or privacy requirements dictate the use of private clouds, and use complementary external cloud services where internal clouds are not economic.

Overall Storage Directions and Conclusions

The storage infrastructure will change significantly with the implementation of Infrastructure 2.0. A small percentage of application suites will require a bespoke stack and large scale-up monolithic arrays, but the long tail (90% of application suites) will require standard storage services. These storage services will be more distributed within the stack, with increasing amounts of flash, and distributed across private and public cloud services. Storage software functionality will become more elastic and will reside in, or migrate to, the part of the stack that makes the most practical sense: in the array, in the server, or in a combination of the two.

The I/O connections between storage and servers will become virtualized, with a combination of virtualized converged network adapters (CNAs) and other virtual I/O mechanisms. This approach will save space, drastically reduce cabling, and allow dynamic reconfiguration of resources. The transport fabrics will be lossless Ethernet, with some use of InfiniBand for inter-processor communication. The storage protocols will be FC, FCoE, iSCSI, SAS, and NAS, and storage will become protocol agnostic. Where possible, storage will follow a scale-out model, with metadata management a key component.

The storage infrastructure will allow dynamic transport of data across the network when required, for instance to support business continuity, and with some balancing of workloads. However, data volumes and bandwidth are growing at approximately the same rate, so large-scale movement of data between sites will not be a viable strategy. Instead, applications (especially business intelligence and analytics applications) will often be moved to where the data is (the Hadoop model) rather than data being moved to the applications. This will be especially true of “big data” environments, where vast amounts of semi-structured data will be available within the private and public clouds.
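A quick Python calculation shows why bulk movement of data between sites does not scale. The sketch assumes decimal units and a hypothetical 70% effective utilization of the link; protocol overhead and competing traffic could lower that further.

```python
def transfer_days(data_bytes: float, link_bits_per_s: float, efficiency: float = 1.0) -> float:
    """Days needed to move `data_bytes` over a link of `link_bits_per_s`,
    where `efficiency` is the fraction of line rate actually achieved."""
    seconds = data_bytes * 8 / (link_bits_per_s * efficiency)
    return seconds / 86_400


PB = 10**15  # bytes per petabyte (decimal)
for link_gbps in (1, 10, 40):
    days = transfer_days(1 * PB, link_gbps * 1e9, efficiency=0.7)  # hypothetical 70%
    print(f"1 PB over {link_gbps:>2} Gb/s: {days:6.1f} days")
```

Even on a dedicated 10 Gb/s link, a single petabyte takes around two weeks under these assumptions, which is why shipping the analysis code to the data is usually the cheaper option.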

The criteria for selecting storage vendors will change with Infrastructure 2.0. Storage vendors will have significant opportunities for innovation within the stack. They will have to take a systems approach to storage and be able to move storage software functionality to the optimal place within the stack. Distributed storage management will be a critical component of this strategy, together with seamless integration into backup, recovery, and business continuance. Storage vendors will need to forge close links with the stack providers, so that there is a single support system (e.g., remote support), a single update mechanism for maintenance, and a single stack management system. Currently, the companies in a position to provide Infrastructure 2.0 hardware and software stacks are Cisco, HP, IBM, and Oracle. There will be strong stack ecosystems around Microsoft operating systems, databases, and applications. Other ISVs such as SAP may also create stack ecosystems.
