Storage Peer Incite: Notes from Wikibon’s March 23, 2010 Research Meeting

This week's Peer Incite meeting was research in progress. The hour-long discussion started with the question: Should extra functionality be embedded at the application layer or in infrastructure, where it can be used by multiple applications? Rather than reaching a definite conclusion, however, the discussion evolved into a recognition that the answer is conditional, depending on the functionality involved and, most of all, on the best way to provide value to the business.

One conclusion of the discussion is that storage decisions and storage functionality can no longer be considered in isolation from the rest of the infrastructure. Storage is part of an increasingly integrated infrastructure that encompasses the entire IT architecture from the application layer down. And as automation continues its inexorable climb up through functionality layers, storage decisions will have increasingly important impacts on other parts of the infrastructure. Today, automated tiering is becoming a reality; the next step will undoubtedly be automated dynamic tiering. In this environment, storage decisions will have an increased impact on application performance, while application, network, and server decisions will impact storage.

Managing this environment therefore requires a holistic approach. And in an environment driven by very tight resources, and particularly tight capital, all decisions concerning IT infrastructure must be driven by business need. Every decision must be justified on the basis of the value it will deliver to the business in terms of competitive advantage.

G. Berton Latamore

Tie Infrastructure Decisions to Business Value

When the conversation turns to storage and devolves into speeds and weeds, CIOs' eyes glaze over. That was one of the important conclusions of the March 23, 2010 Peer Incite. One CIO stated in a recent discussion with Wikibon, "Infrastructure is evil." The response of our guest, former CIO Omer Perra: "But it's a necessary evil."

In an era of convergence, practitioners cannot view storage in isolation. Storage needs and strategies must be integrated with the other elements of the data center -- processing power, the network, facilities -- and viewed as a total system. Only a holistic view can allow decisions about infrastructure to be credibly related to the value of information.

CIOs should continually press infrastructure heads to answer the question: What's the best way to deliver value to the users? Speeds and feeds are nice, but the key question is: What does it do for the business? Increase revenue? Cut cost? Reduce risk? By how much? How long will it take to realize the benefits? Who absorbs the risk of moving forward, and can I share these risks with my vendors?
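Several of these questions reduce to simple arithmetic once a proposal's costs and benefits have been estimated. The sketch below is a minimal, hypothetical illustration: the `Proposal` class and its fields are not from the research, all figures are placeholders, and risk (the last question) is not modeled.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    """Hypothetical infrastructure proposal; all figures are illustrative."""
    name: str
    upfront_cost: float          # one-time investment
    monthly_savings: float       # cost reduction per month
    monthly_revenue_gain: float  # incremental revenue per month (0 if none)

    def monthly_benefit(self) -> float:
        return self.monthly_savings + self.monthly_revenue_gain

    def payback_months(self) -> float:
        """Months until the cumulative benefit covers the up-front cost."""
        benefit = self.monthly_benefit()
        if benefit <= 0:
            return float("inf")  # never pays back
        return self.upfront_cost / benefit

    def simple_roi(self, horizon_months: int) -> float:
        """(total benefit - cost) / cost over the given horizon, undiscounted."""
        total = self.monthly_benefit() * horizon_months
        return (total - self.upfront_cost) / self.upfront_cost


p = Proposal("automated tiering", upfront_cost=120_000,
             monthly_savings=4_000, monthly_revenue_gain=1_000)
print(p.payback_months(), p.simple_roi(48))
```

With these placeholder numbers the proposal pays back in 24 months and doubles its investment over a 48-month horizon; a real justification would also discount cash flows and weigh who carries the risk.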

Infrastructure should be viewed as an integrated set of components that tie to application service levels and, ultimately, business value. Only when it is viewed in this way can decisions be made regarding the placement of function into application stacks versus infrastructure (e.g., disk arrays). A key message that came out of our research and Peer Incite call is to endeavor to make infrastructure decisions in the context of the value of information: how it is used, how widely, by whom, and how its value can be protected and enhanced.

While the general direction of the so-called open-systems industry is to enable infrastructure-as-a-service (essentially making infrastructure invisible), the architecture lacks the ability to manage infrastructure at the application level. VMware specifically, and to a certain extent Hyper-V, have roadmaps in place to fill this gap, and the general direction is clear: infrastructure services that enhance application availability, performance, and simplicity will thrive in the next decade.

Action item: CIOs need to push infrastructure heads to think about an integrated stack of services and to tie infrastructure services (e.g., storage services) and application function together. For the time being, case-by-case judgments must be made about where to apply services, either within infrastructure or embedded in application stacks (e.g., Oracle and Microsoft). When choosing application-centric approaches, be aware that costs will go up at scale, so make sure you have a clear business-value justification for the strategy.

Consider Element-based Storage (Storage Blades) to Support Application-centric Strategies

What is Element-based storage?

Element-based storage is a new concept in data storage that packages caching controllers, self-healing packs of disk drives, intelligent power/cooling, and non-volatile protection into a single unit to create a building-block foundation for scaling storage capacity and performance. By encapsulating key technology elements into a functional 'storage blade', storage capability (both performance and capacity) can scale linearly with application needs. This building-block approach removes the complexity of frame-based SAN management and works in concert with application-specific function that resides in host servers (OSes, hypervisors, and the applications themselves).

How are Storage Elements Managed?

Storage elements are managed by interfacing with applications running on host servers (on top of either OSes or hypervisors) and working in conjunction with application function, via either direct application control or Web Services/REST communication. For example, when running a virtual desktop environment with VMware or Citrix, a highly available database environment with Oracle's ASM, or database-level replication and recovery with Microsoft SQL Server 2008, the host OSes, hypervisors, and applications control their own storage through embedded volume management and data movement. The application can communicate directly with the storage element via REST, the open-standard technique called out in the SNIA Cloud Data Management Interface (CDMI) specification. CDMI forms the basis for cloud storage provisioning and cloud data movement/access going forward.
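To make the REST path concrete, here is a minimal sketch of the HTTP request an application might build to create a CDMI container on a storage element. It assumes CDMI's container media type (`application/cdmi-container`) and `X-CDMI-Specification-Version` header; the version string, endpoint URL, container name, and the function `build_cdmi_create_container` are illustrative assumptions. Authentication and the actual network call are deliberately omitted, and the SNIA CDMI specification should be checked for the version a given target supports.

```python
import json

# Assumed CDMI version string; confirm what the storage element supports.
CDMI_VERSION = "1.0.2"


def build_cdmi_create_container(base_url: str, container: str,
                                metadata: dict) -> tuple[str, str, dict, bytes]:
    """Return (method, url, headers, body) for a CDMI container-create request.

    This only builds the request; sending it (e.g., with urllib) and
    authenticating are outside the scope of this sketch.
    """
    # CDMI container URIs end with a trailing slash.
    url = f"{base_url.rstrip('/')}/{container.strip('/')}/"
    headers = {
        "Accept": "application/cdmi-container",
        "Content-Type": "application/cdmi-container",
        "X-CDMI-Specification-Version": CDMI_VERSION,
    }
    body = json.dumps({"metadata": metadata}).encode("utf-8")
    return "PUT", url, headers, body


# Hypothetical endpoint and container for an Oracle ASM disk group.
method, url, headers, body = build_cdmi_create_container(
    "https://storage.example.com/cdmi", "oracle-asm-dg1", {"owner": "dba-team"})
```

Keeping request construction separate from transport lets the same function be reused whether the caller is a hypervisor plug-in, a database tool, or a provisioning script.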

The main benefits of the element-based approach are:

- Significantly better performance – more transactions per unit time, faster database updates, more simultaneous virtual servers or desktops per physical server.

- Significantly improved reliability – self-healing, intelligent elements.

- Simplified infrastructure – use storage blades like DAS.

- Lower costs – significantly reduced opex, especially maintenance and service.

- Reduced business risk – avoiding storage vendor lock-in by using heterogeneous application/hypervisor/OS functions instead of array-specific functions.

Action item: Organizations are looking to simplify infrastructure, and an application-centric strategy is one approach that has merit. Practitioners should consider introducing storage elements as a means to support application-oriented storage strategies and to re-architect infrastructure for the next decade.

The Organizational Implications of Chargeback Systems

Wikibon research shows that the presence of a chargeback system has a major impact on how organizations approach infrastructure. There are at least two models for chargeback:

- Fixed-rate chargeback, which is determined at the beginning of the year and is based upon a point-in-time estimate of the cost to operate dedicated resources used by the business unit.

- Metered chargeback, which reflects the units (e.g., GBs of storage) of resources a business unit or application actually consumes from within a pool of shared resources.

Fixed-Rate Chargeback

From a business-unit perspective, fixed-rate chargeback has the benefit of being a known, predictable cost. While it may be adjusted on an annual basis, at least for a given budget year there are no surprises. IT management may have an incentive to pad the fixed rate to allow for unanticipated increases in requirements, but little incentive to take advantage of cost-saving technologies except during annual negotiations with the business units, since all costs are covered and infrastructure is dedicated to specific uses.

Metered Chargeback

From a business-unit perspective, metered chargeback has the advantage of providing a variable cost model. If usage goes up, costs go up, and as usage goes down, costs go down. Assuming that infrastructure usage is tied in some way to the value produced from the infrastructure, this fits very well with business units that have variable sales and variable workloads. On the IT-management side, in a metered-chargeback scenario resources are often provisioned out of a shared pool, which improves overall resource utilization. IT management will still need to cover the cost of all resources in the pool, whether utilized or not, so the rate will need to include some overhead. But in both theory and practice, the annual charges should, on average, be lower, since the resources are provisioned out of a shared pool.
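The overhead point can be made explicit. In this sketch (function names, padding, prices, and usage figures are all hypothetical), the metered rate recovers the full monthly cost of the pool by dividing it by the capacity expected to be in use, so idle capacity is priced into every GB consumed; the fixed-rate charge, by contrast, is a padded point-in-time estimate for dedicated resources.

```python
def fixed_rate_annual(estimated_gb: float, cost_per_gb_year: float,
                      padding: float = 0.10) -> float:
    """Fixed-rate charge set at the start of the year from a point-in-time
    estimate, padded for unanticipated growth (the 10% padding is assumed)."""
    return estimated_gb * cost_per_gb_year * (1 + padding)


def metered_rate_per_gb_month(pool_cost_per_month: float,
                              pool_capacity_gb: float,
                              expected_utilization: float) -> float:
    """Rate per GB-month that recovers the full pool cost, used or not.

    Dividing by expected *utilized* capacity rather than raw capacity is
    what builds the overhead of idle pool capacity into the rate.
    """
    if not 0 < expected_utilization <= 1:
        raise ValueError("expected_utilization must be in (0, 1]")
    return pool_cost_per_month / (pool_capacity_gb * expected_utilization)


def metered_annual(monthly_usage_gb: list[float],
                   rate_per_gb_month: float) -> float:
    """Metered charge: actual consumption each month times the rate."""
    return sum(gb * rate_per_gb_month for gb in monthly_usage_gb)


rate = metered_rate_per_gb_month(50_000, 100_000, 0.80)
usage = [8_000] * 6 + [12_000] * 6  # a business unit with a seasonal peak
print(rate, metered_annual(usage, rate))
```

With these placeholder numbers, a $50,000-per-month pool of 100,000 GB at 80% expected utilization yields $0.625 per GB-month, and a unit that uses 8,000 GB for six months and 12,000 GB for six months is charged $75,000 for the year.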

Metered chargeback also has the benefit of providing regular feedback to the business unit on actual utilization. The impact of measurement on the behavior of individuals and organizations is well documented and largely undisputed: the simple act of measurement will often lead individuals to modify their behavior. Done properly, chargeback can be used to provide incentives to reduce storage usage and eliminate the retention of unneeded data, and it can enable the IT organization to develop classes of service based upon actual business requirements. As detailed in the Wikibon Professional Alert, "Developing A Storage Services Architecture," some IT organizations have begun to offer classes of storage services that differ along dimensions of performance and availability. Typically, however, the assignment of data to a storage class has been relatively static, based upon one-time, up-front, or periodically reviewed decisions regarding application and data value. IT organizations rarely anticipate the implications of a more fluid environment, in which data is dynamically migrated through tiers of storage.

The Impact of Dynamic Storage Tiering

Recently, some storage vendors have begun offering automated storage-tiering solutions, whereby data is dynamically moved through various tiers of storage based upon application requirements, business rules, and metadata. In a dynamic-tiering environment, chargeback can become significantly more complicated. How, for example, would a chargeback system measure and properly charge for the automatic but temporary promotion of a subset of application data from Tier-1 spinning disk to Tier-0 solid-state storage?
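One possible answer, sketched under stated assumptions, is to meter GB-hours per tier rather than GB per month, so a temporary promotion is charged only for the time the data actually spends on Tier-0. The `Placement` records, tier names, and per-GB-hour rates below are hypothetical; a real tiering engine would have to expose equivalent data-movement history for this to work.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Placement:
    """One interval during which `gb` of an application's data sat on `tier`.
    Records like these are assumed to come from the tiering engine's logs."""
    tier: str
    gb: float
    hours: float


# Hypothetical prices per GB-hour for each tier.
TIER_RATES = {"tier0_ssd": 0.0020, "tier1_fc": 0.0005, "tier2_sata": 0.0001}


def gb_hours_by_tier(placements: list[Placement]) -> dict[str, float]:
    """Sum capacity x time for each tier."""
    usage: dict[str, float] = defaultdict(float)
    for p in placements:
        usage[p.tier] += p.gb * p.hours
    return dict(usage)


def tiered_charge(placements: list[Placement],
                  rates: dict[str, float] = TIER_RATES) -> float:
    """Charge each tier's GB-hours at that tier's rate."""
    return sum(gb_h * rates[tier]
               for tier, gb_h in gb_hours_by_tier(placements).items())


# A 1,000 GB application over a 720-hour month, with 100 GB promoted to
# Tier-0 for 48 hours (during which only 900 GB is billed on Tier-1).
month = [
    Placement("tier1_fc", 1_000, 672),
    Placement("tier1_fc", 900, 48),
    Placement("tier0_ssd", 100, 48),
]
print(gb_hours_by_tier(month), tiered_charge(month))
```

Whether the Tier-1 copy of promoted data is still billed during the promotion (this example bills 900 GB rather than 1,000 GB on Tier-1 for those 48 hours) is a policy choice the chargeback design has to make explicit.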

Operational Realities and the Cloud Effect

Whether the chargeback mechanism is fixed-rate or metered, if a business unit has a business need for additional resources, it is the IT organization's responsibility to provide the resources, even when the requirements were not forecast by the business unit and are not in the original IT budget. Therefore, it is in the IT organization's interest to drive efficiency and maintain a sufficient buffer to support provisioning additional resources beyond budgeted increases. And while the buffer should include all reasonable operational and overhead costs, it cannot be too large, or the IT organization will find itself compared unfavorably to cloud-based infrastructure providers.

Action item: CFOs and CIOs should carefully consider the desired impact of a chargeback system and choose a chargeback mechanism that fits with the desired impact. If the goal is to assign costs to business units, then a fixed-rate chargeback system is simple and achieves the goal. If the goal is to match IT value to business value, then a metered chargeback system is probably more appropriate. And if the goal is to drive greater efficiency and better utilization of IT resources, then a metered system that includes classes of service, either dynamic or static, is more appropriate.

Application- vs. Storage-centric Services: What's the Right Approach?

Summary

At scale, CIOs will find that it is 8% to 23% more expensive to place infrastructure function into application stacks than it is to manage storage function at the array level. This is not to say it doesn't make sense to pursue an application-stack approach, but practitioners should do so with an understanding of the costs and the business value such a strategy will deliver.

This was a major finding discussed at the March 23rd Wikibon Peer Incite Research Meeting. Based on in-depth research with more than 20 practitioners and extensive financial modeling, the Wikibon community debated the merits of a shared, hardware-oriented infrastructure versus placing function in application stacks. Notably, small and mid-sized customers that don't want to deal with complex infrastructure will often find it more efficient and less expensive to place function into application stacks, underscoring yet another 'it depends' dynamic in IT.

What is Meant by Application Function?

The Peer Incite discussion revolved around infrastructure and, specifically, whether infrastructure function should reside in application stacks or in hardware. Function was defined as a functional capability that supports a business application. Recovery is one example: should recovery capabilities be designed into, say, Oracle 11g or Exchange 2010, or does it make more sense to place that function in a storage array?

Research Background

Wikibon interviewed more than 20 practitioners in support of this research. Participants included CIOs, application heads, infrastructure professionals, and technical experts. The following excerpts summarize the statements made by some of the participants:

CIOs

“Infrastructure is evil. It needs to be simpler.”

“I am the CIO. I am not in the hardware or software business; I am in the business to deliver business value (grow revenue, reduce cost). Speeds and feeds are nice, but what does it do for my business?”

“We used to have one of everything, where businesses made decisions independently, but that didn’t work. Users want dialtone, and to provide that we have to unify our business model on a standard set of IT services.”

“If a vendor can really save me money I’ll listen. But I need to understand how I get from where I am today (point A) to where I’m going tomorrow (point B) without jeopardizing the business.”

Application Heads

“What matters most to me? #1 Availability; #2 Availability; #3 Performance; #4 User experience. Cost? Sure; cost is important too.”

Infrastructure Heads

“A well-defined services model cuts costs and improves services to the business. It also shuts vendors up: they can’t disagree with it, they can only comply (if they want to sell to me).”

“We have very informal chargebacks and the businesses pretty much do what they want. Of course we end up paying for it.”

Top Five Findings

At the Peer Incite we discussed the major findings of the research, specifically:

- There was a clear desire from executives to simplify infrastructure. Practitioners are pursuing two broad strategies: 1) Build infrastructure services into software stacks (e.g. Microsoft or Oracle); 2) Standardize with a common reusable set of services.

- Oracle, from a business-resilience standpoint, has a more credible approach, but Microsoft is a force within the application development community, especially in mid-sized and smaller businesses.

- Organizations that use chargebacks are much more receptive to an infrastructure services approach.

- CIOs care about risk and business value; application heads are focused on the end-user experience, speed to deployment, business flexibility, and business value received; infrastructure heads care about service levels and cost.

- Cloud adoption is limited to virtualization. CIOs are very cautious about bringing legacy apps into the cloud. But business lines are all moving to the cloud, and the trend toward outsourcing to the cloud has been accelerated by 12-18 months due to the economic downturn.

Key Data Findings

We presented and discussed the following key graphics:

Application- vs. Array-centric Strategies

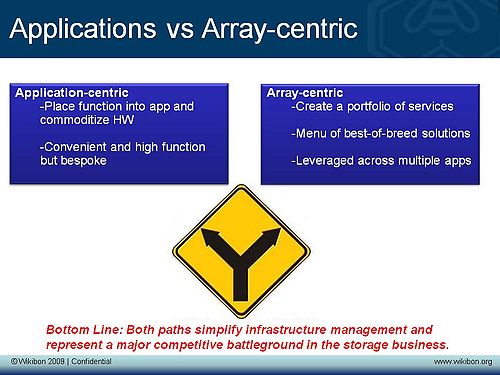

Figure 1 depicts the choices organizations are broadly considering.

- Organizations are either placing function in application stacks or standardizing on a shared services model

- Organizations with chargebacks tend to favor the latter approach

- Application heads have greater influence and decision-making authority in organizations pursuing an application stack approach.

- Both approaches are viable; however, at scale, application-centric strategies tend to be more expensive.

A Model for Infrastructure Services

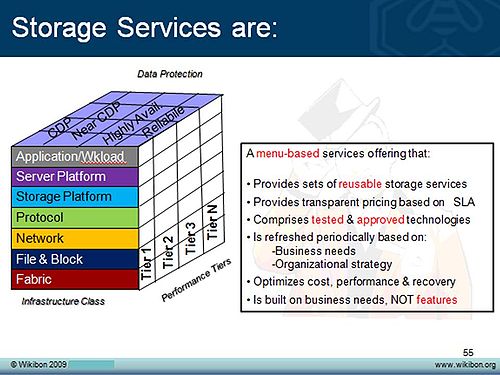

Figure 2 presents a model of infrastructure services developed by John Blackman and presented to Wikibon when he was an architect at Wells Fargo. A similar model has also been deployed in his current environment at a large grocer. The cube presents a three-dimensional model of 1) infrastructure components, 2) availability levels, and 3) performance groups. Each intersection of the three dimensions is a service that is priced and delivered to the business.

- The approach has the benefit of simplifying infrastructure decisions

- Not all applications can be serviced from the cube (but 80% probably is a reasonable estimate)

- Such an approach can save more than 20% at scale relative to an application-centric, bespoke approach.

- Often such a model will create tensions within application groups and business lines.

- Notably, this model will not satisfy 100% of applications in the portfolio; 80% is a good target for practitioners.
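The cube can be read as a catalog keyed by its three dimensions. The sketch below is a hypothetical illustration, not the model used at Wells Fargo: the dimension values, the multiplicative pricing rule, and the base price are all assumptions, chosen only to show that every intersection is one priced, orderable service.

```python
from itertools import product

# Hypothetical dimension values with relative cost factors.
COMPONENTS = {"x86_virtual": 1.0, "unix_dedicated": 1.8}     # infrastructure
AVAILABILITY = {"bronze": 1.0, "silver": 1.3, "gold": 1.7}   # availability level
PERFORMANCE = {"standard": 1.0, "high": 1.5}                 # performance group

BASE_PRICE_PER_TB_MONTH = 100.0  # hypothetical base price


def build_catalog() -> dict[tuple[str, str, str], float]:
    """Every intersection of the three dimensions becomes one priced service.

    Multiplying the factors is an assumed pricing rule, used for brevity.
    """
    catalog = {}
    for (comp, cf), (avail, af), (perf, pf) in product(
            COMPONENTS.items(), AVAILABILITY.items(), PERFORMANCE.items()):
        catalog[(comp, avail, perf)] = round(
            BASE_PRICE_PER_TB_MONTH * cf * af * pf, 2)
    return catalog


catalog = build_catalog()
for service, price in sorted(catalog.items(), key=lambda kv: kv[1]):
    print(service, price)
```

A small, fixed menu like this (12 services here) is what makes pricing transparent to the business; the applications it cannot serve are the roughly 20% that need specialized infrastructure.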

Comparing the Cost of DAS v SAN

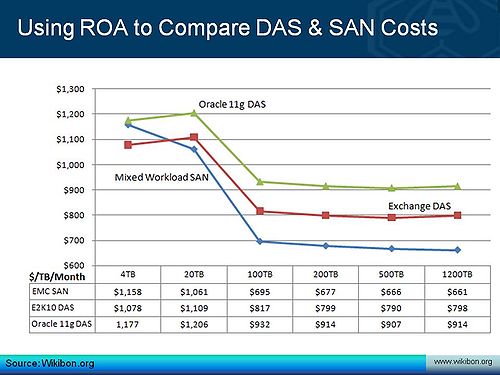

Figure 3 shows the cost per TB per month for three scenarios: 1) an Oracle 11g workload running on DAS; 2) a mixed SAN workload; and 3) a Microsoft Exchange 2010 environment. The figure shows cost on the vertical axis and scale on the horizontal axis. The model shows that in small environments (e.g., fewer than 750 seats), DAS is cheaper than SAN for Microsoft Exchange 2010, but above 1,000 seats SAN is less expensive. Oracle on DAS, due to exceedingly high license and maintenance costs, was not found to have a lower TCO than SAN.

- The data accounts for capex either as costs depreciated over a 48-month period or as lease costs.

- In no instance is Oracle DAS less expensive than SAN due to Oracle’s license fees at $20K - $30K per server.

- SANs of 20TB and under assume iSCSI SAN.

- Larger SANs use EMC CX (100/200 TB) and V-Max (500/1200 TB).

- Includes incremental server and software license costs to accommodate increased compute requirements.

- SAN is a fungible asset that can be shared across the application portfolio and is less expensive at scale.
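The cost-per-TB-per-month metric in Figure 3 can be approximated by depreciating capex straight-line over the 48-month period noted above and adding monthly operating costs. This is a simplified sketch with hypothetical inputs, not the Wikibon model itself, which also covers lease costs and incremental server and software license costs.

```python
def cost_per_tb_month(capex: float, monthly_opex: float, usable_tb: float,
                      depreciation_months: int = 48) -> float:
    """Monthly cost per usable TB, with capex depreciated straight-line.

    Opex (maintenance, power, administration, incremental licenses) is
    passed in as a single monthly figure, which is a simplification.
    """
    if usable_tb <= 0:
        raise ValueError("usable_tb must be positive")
    monthly_capex = capex / depreciation_months
    return (monthly_capex + monthly_opex) / usable_tb


# Placeholder inputs, not figures from the research.
das = cost_per_tb_month(capex=48_000, monthly_opex=500, usable_tb=10)
san = cost_per_tb_month(capex=480_000, monthly_opex=4_000, usable_tb=100)
print(das, san)
```

With these placeholder inputs, the 10TB DAS configuration works out to $150 per TB per month and the 100TB SAN to $140; the point is only to show how spreading fixed cost over a larger, shared pool lowers the unit cost at scale.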

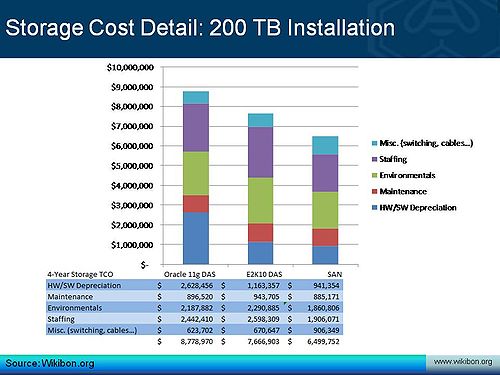

Figure 4 shows a drilldown of Figure 3 for a 200TB example. The model uses a return-on-assets (ROA) methodology to capture metrics for installed assets as well as new gear.

- At 200TB, a One-off model is 15% more expensive than a storage services approach

- The efficiency improvements from a storage services model at 20TB and up range from 8-23% relative to a One-off approach

- The ability to share resources across the application portfolio is the key enabler.
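These findings express the gap in two ways: as a premium (a One-off model is 15% more expensive at 200TB) and as an efficiency improvement for the services model (8-23%). The two are measured against different bases and are not interchangeable, so the small sketch below converts between them. The source does not state which base the 8-23% range uses, so treat the conversion as illustrative.

```python
def premium_to_savings(premium: float) -> float:
    """If option A costs (1 + premium) times option B, moving from A to B
    saves premium / (1 + premium) of A's cost."""
    return premium / (1 + premium)


def savings_to_premium(savings: float) -> float:
    """Inverse: saving `savings` (a fraction of A's cost) by moving to B means
    A carried a premium of savings / (1 - savings) over B."""
    if savings >= 1:
        raise ValueError("savings must be below 100%")
    return savings / (1 - savings)


print(round(premium_to_savings(0.15), 3))
```

For example, a 15% premium for the One-off model corresponds to a saving of about 13% (0.15 / 1.15) when moving to the services model.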

Advice to Practitioners

The Wikibon community agreed that pursuing initiatives that simplify infrastructure was common-sense business, and that not having a simplification strategy (either application- or infrastructure-centric) was imprudent. Generally, at scale, an application-centric approach will be more expensive than a shared-services (e.g., SAN) model. However, an application-centric approach can often deliver outstanding business value, especially in the area of database recovery. Practitioners are advised to ensure adequate business value when pursuing application-centric approaches and to justify the added expenditure.

In addition, in smaller shops the application-centric approach is sensible, as it removes the complexities associated with shared infrastructure such as SAN. Further, organizations interested in pursuing a shared-infrastructure approach at scale should obtain a clear roadmap from their vendors as to how existing infrastructure will be migrated and how organizational risks will be mitigated. As well, users should push vendors to provide reference architectures and proven solutions to further limit risk and speed deployment.

Action item: Organizations must actively initiate simplification strategies as one-off/bespoke infrastructure approaches will increase costs and deliver hard-to-measure business value. Application-centric approaches should be pursued with a clear understanding of ROI and business value while infrastructure-centric strategies should be designed to facilitate technology migration. By accommodating these simple parameters, organizations will limit complexity, improve service levels and limit lock-in.

How do storage vendors become relevant to application owners?

A year ago, with data growth still off the charts, storage administrators were a hot commodity. Today and for the foreseeable future, other IT skills such as network administration will be in higher demand, according to IT compensation and staffing specialists as well as industry analysts.

Meanwhile, storage technologies are improving to the point where the need for administrator intervention or tuning is much less of an issue, while tiering is also being taken over by more sophisticated software applications, not to mention a raft of newly minted cloud-service offerings that have entered the market.

In addition, business-line application owners care about performance, availability, manageability, data protection, security, and cost within the context of meeting or exceeding their requirements, and the question of keeping infrastructure in house versus using an external service provider may be a relevant part of that discussion. But application owners seldom care what brand or type of storage is implemented, as long as optimum service levels are maintained.

For better or worse, application owners have become increasingly involved in driving technology purchasing decisions, and application vendors have a leg up on infrastructure providers. There are several reasons for this, including:

- Application vendors bypass IT and sell to lines of business,

- Application vendors speak the users' language and understand their needs better,

- Infrastructure vendors, especially storage providers, appear largely irrelevant to users,

- Users view storage largely as a utility or commodity.

Unfortunately, application owners often make technology purchasing decisions that negatively impact their enterprise's existing IT infrastructure. The plethora of crudely implemented archiving, collaboration, content management and e-discovery tools are perfect examples.

Bottom line

Because services or functions like data replication, archiving, and backup have application-performance implications, storage suppliers need to explain how their capabilities affect application performance, availability, and overall service levels. But as long as storage companies sell primarily to infrastructure heads, the disconnect between infrastructure and application environments will persist.

Action item: Storage providers must link their solutions to an overall service level for the applications, and sellers need to market to application heads as well. This means providing simple data about application-level metrics, user availability, user performance, and so on, rather than just device-level metrics. Storage vendors need to quantify their contribution to application service levels. This includes creating reference architectures like IBM Redbooks and EMC Proven Solutions. The application-stack approach has a great advantage in this regard, so integrating with application stacks (e.g., Oracle, Microsoft, SAP) is a good idea. If Oracle asks for a service and a storage vendor provides it, then it’s a win for both sides of the application and infrastructure equation.

Get Rid of Stuff with an Infrastructure Services Architecture

A shared infrastructure services approach can simplify application support, reduce the number of vendors, eliminate unnecessary hardware/software/maintenance, and reduce costs by 8%-23%, depending on scale. This assertion is based on research performed by the Wikibon community and discussed at the March 23rd Peer Incite Research Meeting.

The concept of an infrastructure services architecture was first presented to the Wikibon community by John Blackman. The model presented at today's Peer Incite was simplified, but the messages are similar. A key premise of the model is that the business requirement defines the service, not the infrastructure. The intersection of infrastructure class (e.g. server platform), protection level (e.g. RPO/RTO) and performance tier (or grade) will determine what is delivered, the SLA, and the cost to the business.

Frequently, such a model will cause friction within application groups and lines of business that have become used to having the freedom to mix and match, leaving infrastructure groups to manage myriad hardware and software options. However, by implementing a menu-based services approach, organizations can retire bespoke assets and make the most of their skill sets.

Practitioners should expect such a model can apply to 80% of an application portfolio, with 20% of applications requiring specialized infrastructure.
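The menu-based approach and the 80/20 expectation can be sketched as a lookup: each application's requirements are matched against the service menu, the cheapest service that satisfies them is chosen, and applications with no match fall into the bespoke minority. The service names, RPO/RTO values, IOPS ceilings, and prices below are hypothetical, and a real menu would carry more dimensions (e.g., server platform).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Service:
    name: str
    rpo_minutes: int        # maximum data loss the service guarantees
    rto_minutes: int        # maximum time to restore service
    max_iops: int           # ceiling of the performance grade
    price_per_tb_month: float


@dataclass(frozen=True)
class AppRequirement:
    name: str
    rpo_minutes: int
    rto_minutes: int
    iops: int


# Hypothetical service menu.
MENU = [
    Service("bronze-standard", 1440, 2880, 2_000, 80.0),
    Service("silver-standard", 60, 240, 5_000, 150.0),
    Service("gold-high", 0, 30, 20_000, 400.0),
]


def choose_service(req: AppRequirement,
                   menu: list[Service] = MENU) -> Optional[Service]:
    """Cheapest menu item meeting every requirement; None means the
    application needs specialized (bespoke) infrastructure."""
    fits = [s for s in menu
            if s.rpo_minutes <= req.rpo_minutes
            and s.rto_minutes <= req.rto_minutes
            and s.max_iops >= req.iops]
    return min(fits, key=lambda s: s.price_per_tb_month, default=None)


def coverage(reqs: list[AppRequirement], menu: list[Service] = MENU) -> float:
    """Fraction of applications the menu can serve (the text's target is ~80%)."""
    if not reqs:
        return 0.0
    served = sum(1 for r in reqs if choose_service(r, menu) is not None)
    return served / len(reqs)


apps = [
    AppRequirement("crm", 60, 240, 3_000),
    AppRequirement("trading", 0, 5, 50_000),
    AppRequirement("wiki", 1440, 2880, 500),
]
for app in apps:
    svc = choose_service(app)
    print(app.name, svc.name if svc else "bespoke")
```

Picking the cheapest satisfying service keeps applications from over-buying protection or performance, which is where much of the savings from standardization is expected to come from.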

Action item: One-off implementations and purpose-built application infrastructure should be the exception, not the norm. A services-oriented infrastructure approach emphasizes standardization, re-usability, tested configurations, and transparent pricing to the business. Organizations should initiate such an approach for the majority of applications and retire outdated processes that encourage a 'one of each' mindset.