
Introduction

The users Wikibon recently surveyed agree that Windows Server 2012 is an important and valuable release. Microsoft is shipping an updated operating system with a plethora of new features. Some work automatically and need no effort to use. However, many of these new features, particularly those related to storage, require effort to evaluate, implement and maintain, and this careful evaluation may prove to be beyond the capabilities of many SMBs. The options for implementing these features are:

- Implement the features in-house
  - Advantages: early implementation, early benefits
  - Disadvantages: need to acquire or buy in skills, early-adopter risk, cost of maintenance

- Implement through third-party hardware (e.g., storage arrays), hypervisors (e.g., Hyper-V or VMware) or ISV software
  - Advantages: lower risk, lower cost, better implementation of features
  - Disadvantages: delayed implementation, potentially high cost of supporting hardware/software

On balance, most organizations and practically all SMBs will favor indirect implementation of Windows Server 2012 features.

Windows Server 2012 storage features

Although we don’t expect strong uptake of these first-release storage features, Microsoft has made impressive strides in adding storage functionality to the Windows Server operating system. With Windows Server 2012, Microsoft has brought enterprise-level storage features into the operating system that can improve efficiency and may reduce storage costs.

Offloaded Data Transfer (ODX)

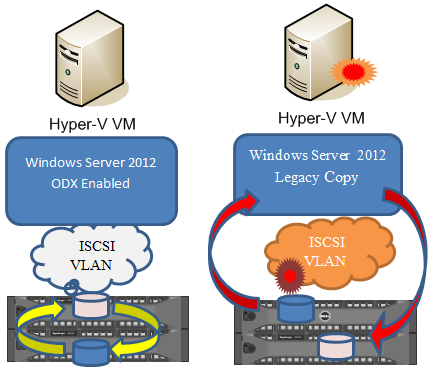

Perhaps the feature generating the most excitement is ODX, or Offloaded Data Transfer. With ODX, Windows Server 2012 can offload processor-intensive data copy and move operations to the storage array, relieving the server of that burden.
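To make the offload concrete, here is a minimal Python sketch of the token-based exchange ODX relies on: the host asks the array for a token that represents the source data, then hands that token back with a write request, and the array moves the data internally. The `StorageArray` and `Host` classes and their methods are hypothetical; real ODX is driven through Windows file system controls and SCSI token commands, not this API. The sketch only counts how many bytes pass through the host in each approach.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class StorageArray:
    """Hypothetical array: block number -> block contents."""
    blocks: dict[int, bytes]
    tokens: dict[str, list[int]] = field(default_factory=dict)

    def offload_read(self, src: list[int]) -> str:
        # Instead of returning data, the array returns an opaque token
        # that stands for the requested source blocks.
        token = secrets.token_hex(16)
        self.tokens[token] = list(src)
        return token

    def offload_write(self, token: str, dst: list[int]) -> None:
        # The array resolves the token and copies block to block
        # internally; no data crosses the host or the network.
        for s, d in zip(self.tokens.pop(token), dst):
            self.blocks[d] = self.blocks[s]


@dataclass
class Host:
    bytes_through_host: int = 0

    def traditional_copy(self, array: StorageArray, src: list[int], dst: list[int]) -> None:
        for s, d in zip(src, dst):
            data = array.blocks[s]          # read into host memory
            array.blocks[d] = data          # write back out again
            self.bytes_through_host += 2 * len(data)

    def odx_copy(self, array: StorageArray, src: list[int], dst: list[int]) -> None:
        token = array.offload_read(src)     # only a small token comes back
        array.offload_write(token, dst)


def fresh_array() -> StorageArray:
    # Eight 4 KiB blocks, each filled with its own block number.
    return StorageArray(blocks={i: bytes([i]) * 4096 for i in range(8)})


legacy_array, odx_array = fresh_array(), fresh_array()
legacy, offloaded = Host(), Host()
legacy.traditional_copy(legacy_array, [0, 1, 2, 3], [4, 5, 6, 7])
offloaded.odx_copy(odx_array, [0, 1, 2, 3], [4, 5, 6, 7])
print(legacy.bytes_through_host, offloaded.bytes_through_host)  # 32768 0
```

Both copies leave the same data in blocks 4 through 7; the difference is that the offloaded copy never moves the payload through the host.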

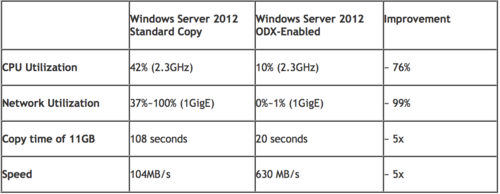

Beyond offloading the server, ODX’s use of the storage hardware can dramatically speed up the copy or move operation itself. The gain comes from the fact that the data no longer has to travel from the array across the network to the server and back again; the array performs the operation internally, eliminating one point of resource contention. In some real-world testing, Dell storage engineers saw throughput jump from 104 MB/s to 630 MB/s, roughly a sixfold improvement.
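The quoted figures are easy to sanity-check. The Python snippet below assumes a hypothetical 100 GB file and treats 1 GB as 1,000 MB for simplicity; only the two throughput numbers come from the Dell testing described above.

```python
# Throughput figures as reported; the file size is an illustrative assumption.
BEFORE_MB_S = 104      # without ODX
AFTER_MB_S = 630       # with ODX
FILE_MB = 100 * 1000   # hypothetical 100 GB file, 1 GB = 1,000 MB

speedup = AFTER_MB_S / BEFORE_MB_S
seconds_before = FILE_MB / BEFORE_MB_S
seconds_after = FILE_MB / AFTER_MB_S

print(f"speedup:     {speedup:.2f}x")                 # speedup:     6.06x
print(f"without ODX: {seconds_before / 60:.1f} min")  # without ODX: 16.0 min
print(f"with ODX:    {seconds_after / 60:.1f} min")   # with ODX:    2.6 min
```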

As workloads continue to grow, services such as ODX will become increasingly important in the enterprise. This is one feature that all storage vendors should strive to support: it’s not terribly difficult to implement and can deliver tremendous customer benefit.

Recommendation: Use this feature with storage arrays that support ODX.

TRIM/UNMAP

Releasing deleted space and making effective use of thin provisioning have always been challenges for Windows Server storage administrators. Defragmentation software scheduling is not for the faint-hearted!

Thin provisioning lets storage administrators maximize capacity by putting to work space that has been assigned to a volume but not yet used. For example, many administrators over-provision storage on Windows computers just in case it’s needed in the future. With thin provisioning, space is assigned to a volume but not actually allocated until it’s needed. So, if an administrator assigns 100 GB to a volume but uses only 40 GB, the other 60 GB can be made available to other volumes. As the volume needs more of its original commitment, space is allocated granularly, but the volume’s total capacity can never exceed the original specification.

Historically, however, space freed by deleting files remained allocated on the array. With the UNMAP capability included in Windows Server 2012, the thin provisioning process can reclaim previously used space that has since become unused. This makes thin provisioning more efficient and lowers costs further through space reclamation.
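The following Python sketch models the behavior described above: two thin volumes, each promised 100 GB, share a pool that physically holds only 150 GB, and space is drawn from the pool only when written. After each volume writes 40 GB and deletes 10 GB, only the volume whose deletes are passed down as UNMAP returns that space to the pool. The class names, whole-GB granularity, and pool size are illustrative assumptions, not how any particular array or Windows itself tracks allocation.

```python
from dataclasses import dataclass


@dataclass
class ThinPool:
    """Shared physical capacity that thin volumes draw from on demand."""
    physical_gb: int
    allocated_gb: int = 0

    def allocate(self, gb: int) -> None:
        if self.allocated_gb + gb > self.physical_gb:
            raise RuntimeError("pool exhausted")
        self.allocated_gb += gb

    def release(self, gb: int) -> None:
        self.allocated_gb -= gb


@dataclass
class ThinVolume:
    pool: ThinPool
    provisioned_gb: int   # size promised to (and seen by) the host
    used_gb: int = 0      # space the file system considers in use
    backed_gb: int = 0    # space actually allocated from the pool

    def write(self, gb: int) -> None:
        # The volume can never grow past its original commitment.
        if self.used_gb + gb > self.provisioned_gb:
            raise RuntimeError("volume full")
        # Reuse space still backed from earlier deletes first, and draw
        # only the shortfall from the shared pool.
        shortfall = max(0, self.used_gb + gb - self.backed_gb)
        self.pool.allocate(shortfall)
        self.backed_gb += shortfall
        self.used_gb += gb

    def delete(self, gb: int, unmap: bool) -> None:
        self.used_gb -= gb
        if unmap:
            # UNMAP tells the array which blocks are now free, so it can
            # hand them back to the pool instead of keeping them allocated.
            self.pool.release(self.backed_gb - self.used_gb)
            self.backed_gb = self.used_gb


pool = ThinPool(physical_gb=150)             # less than the 200 GB promised
without_unmap = ThinVolume(pool, provisioned_gb=100)
with_unmap = ThinVolume(pool, provisioned_gb=100)

for vol, unmap in ((without_unmap, False), (with_unmap, True)):
    vol.write(40)                # 100 GB provisioned, 40 GB allocated
    vol.delete(10, unmap=unmap)  # host deletes 10 GB of files

print(without_unmap.backed_gb, with_unmap.backed_gb, pool.allocated_gb)  # 40 30 70
```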

Recommendation: Implement thin provisioning through hypervisor software and storage arrays that support TRIM/UNMAP.

Deduplication

Deduplication has long been part of the enterprise storage arsenal. With Windows Server 2012, Microsoft has brought this feature into the operating system, where it can carry out its intended purpose: reducing the amount of physical disk space consumed by an organization’s data. To do this, deduplication relies on a complex set of tables that record where each piece of deduplicated data is located. The resulting capacity savings translate directly into cost savings.

Unlike the deduplication found in most enterprise-class storage arrays, however, Windows Server 2012’s deduplication is not inline and real-time. Instead, it runs as a scheduled post-process task. The difference between inline and post-process deduplication is significant and affects how much free space must be available.

With inline deduplication, data is deduplicated as it is written to the storage device. With post-process deduplication, data is first written to storage in full, duplicates included; at some scheduled point, a process runs and deduplicates the data that has been written. Although the end result is the same deduplicated data, a post-process system needs much more capacity, because the data initially consumes all of the disk space it would occupy without deduplication.
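A minimal Python sketch of the capacity difference, assuming ten virtual machines that each write the same nine operating-system chunks plus one unique data chunk. Chunks are counted rather than sized, and the post-process job is modeled as a single pass after all writes; both are simplifications, not a description of Windows Server 2012’s scheduler.

```python
import hashlib


def fingerprint(chunk: bytes) -> bytes:
    return hashlib.sha256(chunk).digest()


def inline_usage(chunks: list[bytes]) -> tuple[int, int]:
    """Inline: each chunk is checked against the index before it is
    written, so duplicates never reach disk. Returns (peak, final)."""
    index: set[bytes] = set()
    peak = 0
    for chunk in chunks:
        index.add(fingerprint(chunk))
        peak = max(peak, len(index))
    return peak, len(index)


def post_process_usage(chunks: list[bytes]) -> tuple[int, int]:
    """Post-process: everything is written first, then a scheduled job
    collapses duplicates. Returns (peak, final)."""
    on_disk = list(chunks)                          # duplicates included
    peak = len(on_disk)
    final = len({fingerprint(c) for c in on_disk})  # after the job runs
    return peak, final


base_image = [f"os-chunk-{i}".encode() for i in range(9)]
writes: list[bytes] = []
for vm in range(10):
    writes += base_image + [f"vm-{vm}-data".encode()]

print("inline       (peak, final):", inline_usage(writes))        # (19, 19)
print("post-process (peak, final):", post_process_usage(writes))  # (100, 19)
```

Both approaches end at 19 stored chunks, but the post-process system must have room for all 100 before its job runs.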

In this respect, the post-process design of Windows Server 2012’s deduplication pales in comparison to the more robust, more capable inline deduplication found in traditional storage arrays. Microsoft’s approach does keep deduplication out of the write path, which can benefit overall latency, but today’s storage processors generally have significant spare capacity, and arrays simply build deduplication into the data flow. On the positive side, Microsoft’s deduplication is a file-level service that breaks files into smaller chunks and deduplicates at the chunk level, which can improve overall deduplication rates.
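The chunk-level approach, and the tables that track where each chunk lives, can be sketched in Python as a store that keeps one copy of each unique chunk (keyed by a SHA-256 hash) plus a per-file list of chunk references. `ChunkStore` is hypothetical, and fixed 4-byte chunks are used purely for readability; Windows Server 2012 uses its own chunking scheme and on-disk chunk store format.

```python
import hashlib

CHUNK_SIZE = 4  # bytes; unrealistically small so the example is easy to follow


class ChunkStore:
    """Hypothetical chunk-level deduplication store.

    `chunks` maps a content hash to the single stored copy of that chunk;
    `files` maps each file name to the ordered hashes needed to rebuild it.
    Together they play the role of the location tables described above.
    """

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}
        self.files: dict[str, list[str]] = {}

    def add_file(self, name: str, data: bytes) -> None:
        refs = []
        for i in range(0, len(data), CHUNK_SIZE):
            piece = data[i:i + CHUNK_SIZE]
            digest = hashlib.sha256(piece).hexdigest()
            self.chunks.setdefault(digest, piece)  # keep only the first copy
            refs.append(digest)
        self.files[name] = refs

    def read_file(self, name: str) -> bytes:
        # Rebuild the file by following its chunk references in order.
        return b"".join(self.chunks[d] for d in self.files[name])

    def logical_bytes(self) -> int:
        return sum(len(self.read_file(n)) for n in self.files)

    def physical_bytes(self) -> int:
        return sum(len(c) for c in self.chunks.values())


store = ChunkStore()
store.add_file("report-v1.txt", b"AAAABBBBCCCCDDDD")
store.add_file("report-v2.txt", b"AAAABBBBCCCCEEEE")
print(store.logical_bytes(), store.physical_bytes())  # 32 20
```

The two files differ only in their last chunk, so the store holds five unique chunks rather than eight.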

Storage Spaces

Another impressive addition to Windows Server 2012 is Storage Spaces, Microsoft’s answer to storage virtualization and to the perceived desire among SMBs to move to lower-cost commodity storage. Storage Spaces lets administrators aggregate storage from a variety of sources and manage it centrally as a single pool to be divvied up. Think of Storage Spaces the way you think about vCenter, except for storage. vCenter manages hardware across a number of vSphere hosts without caring where those hosts come from; it simply brings each one into a centralized resource cluster and manages all of the resources as a single pool.

At the same time, Storage Spaces can imbue even the most basic commodity storage with some enterprise-class features, such as thin provisioning and, when combined with new NTFS features, deduplication.

In essence, Storage Spaces adds a layer of abstraction to storage in Windows so that all storage sources can be managed as a single resource pool. For example, administrators can combine local in-server storage with external JBOD-based storage and even USB-connected storage (albeit with a corresponding drop in performance) into a single pool, which they then divide as necessary to meet organizational goals.

Note that iSCSI LUNs cannot be included in pools managed by Storage Spaces. Further, Storage Spaces does not allow the use of storage that already sits behind RAID; Microsoft requires that RAID functionality be disabled and that the drives be presented to Storage Spaces "raw."
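The pooling rules above can be expressed as a short Python sketch: drives of different types are accepted into one pool, while iSCSI LUNs and drives presented through a RAID controller are rejected. `PhysicalDisk`, `StoragePool`, and the set of accepted bus types are simplified assumptions drawn from this section, not Microsoft’s actual eligibility checks.

```python
from dataclasses import dataclass, field

# Assumed set of poolable bus types, per the text: internal drives,
# SAS-attached JBODs, and USB. iSCSI is deliberately absent.
POOLABLE_BUSES = {"SATA", "SAS", "USB"}


@dataclass
class PhysicalDisk:
    name: str
    bus: str
    size_gb: int
    behind_raid: bool = False  # True if presented through a RAID controller


@dataclass
class StoragePool:
    disks: list[PhysicalDisk] = field(default_factory=list)

    def add(self, disk: PhysicalDisk) -> None:
        if disk.bus not in POOLABLE_BUSES:
            raise ValueError(f"{disk.name}: {disk.bus} disks cannot be pooled")
        if disk.behind_raid:
            raise ValueError(f"{disk.name}: present the drive raw, not behind RAID")
        self.disks.append(disk)

    @property
    def capacity_gb(self) -> int:
        # One pool, regardless of where each drive physically lives.
        return sum(d.size_gb for d in self.disks)


pool = StoragePool()
candidates = [
    PhysicalDisk("internal0", "SATA", 500),
    PhysicalDisk("jbod0", "SAS", 2000),
    PhysicalDisk("usb0", "USB", 1000),
    PhysicalDisk("lun0", "iSCSI", 1000),
    PhysicalDisk("array0", "SAS", 4000, behind_raid=True),
]
for disk in candidates:
    try:
        pool.add(disk)
    except ValueError as err:
        print("rejected:", err)

print(pool.capacity_gb)  # 3500
```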

Recommendation: Wait and see. This feature will require additional software from Microsoft or ISVs to be managed effectively. For larger organizations, the benefit of offloading RAID will be significant. Overall, this feature will be of considerable benefit to very small SMB implementations, but it will need major management enhancements to become easy to use.

iSCSI target software

Windows Server 2012 is the first Windows Server operating system to include built-in iSCSI target software, although add-ons provided this functionality for previous versions. Microsoft’s iSCSI target software offers some enhancements over previous efforts, including support for 4K-sector drives. More importantly, it doesn’t have to be tethered to physical hardware: it can be configured to use storage from a volume defined in Storage Spaces. That volume is then shared with other systems over the iSCSI protocol, and the remote systems simply configure their iSCSI initiators to point to the Windows Server 2012 system as their target. The result is a way for companies to share Windows-based storage at the block level.
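To illustrate the target/initiator relationship, here is a hypothetical Python model of a software iSCSI target that exposes a Storage Spaces volume only to initiators whose iSCSI Qualified Names (IQNs) have been explicitly allowed. The class, the volume name, the example IQNs, and the simplified IQN pattern (loosely following the RFC 3720 `iqn.` naming format) are assumptions for illustration; Windows Server 2012 configures targets through its own management tools.

```python
import re
from dataclasses import dataclass, field

# Loose check for iqn.<yyyy-mm>.<reversed domain>[:<unique name>].
# Real IQN validation is stricter than this simplified pattern.
IQN_PATTERN = re.compile(r"^iqn\.\d{4}-\d{2}\.[a-z0-9.-]+(:.+)?$")


def check_iqn(name: str) -> str:
    if not IQN_PATTERN.match(name):
        raise ValueError(f"not a valid IQN: {name}")
    return name


@dataclass
class IscsiTarget:
    iqn: str
    backing_volume: str                      # e.g. a volume carved from a pool
    allowed: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        check_iqn(self.iqn)

    def allow(self, initiator_iqn: str) -> None:
        self.allowed.add(check_iqn(initiator_iqn))

    def login(self, initiator_iqn: str) -> str:
        # An initiator configured to point at this target gets block-level
        # access to the backing volume only if it has been allowed.
        if initiator_iqn not in self.allowed:
            raise PermissionError(f"{initiator_iqn} is not allowed on {self.iqn}")
        return f"{self.iqn} -> block device on {self.backing_volume}"


target = IscsiTarget("iqn.2012-09.com.example:storage01", "SpacesVolume1")
target.allow("iqn.2012-09.com.example:web01")
print(target.login("iqn.2012-09.com.example:web01"))
```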

SMB 3

As workloads grow in size, the communications protocols used on the network must keep pace. To that end, Microsoft has made significant improvements to the Server Message Block (SMB) protocol. SMB 3 includes major changes that substantially improve both the performance and the reliability of storage-related network communications. This TechNet article provides the details necessary to understand the potential impact of SMB 3. Bear in mind that SMB 3 is primarily a file-level protocol, appropriate for NAS-type scenarios.

The result: administrators using SMB 3 can simply continue to use the structures they use today. SQL Server databases, Exchange and Hyper-V virtual machines can be stored on regular, everyday, SMB 3-enhanced file shares. Of course, these are Microsoft’s claims, and while there is potential upside from a simplicity and skills standpoint, there is a tradeoff.

While SMB 3 adds significant improvements over previous versions of the protocol, ensuring full compliance with all SMB 3 capabilities is a significant undertaking for storage vendors. Further, even though SMB 3 is a good transport protocol, customers and vendors already have enterprise-grade transport tools at their disposal, and SMB 3 doesn’t bring major differentiation to the table. The block-level iSCSI and Fibre Channel protocols and the file-level NFS and earlier SMB versions are generally more than sufficient to meet the storage needs of most organizations.

Recommendation: Wait for support to be included as an option in Microsoft, ISV and hypervisor software. Wait for feedback on usage; there is little benefit in being an early adopter.

Hyper-V 3

With Windows Server 2012, Microsoft has shipped a new version of Hyper-V, which seems to be garnering significant interest. Although this paper focuses on the new storage features in Windows Server 2012, Hyper-V 3, the latest release of Microsoft’s enterprise-grade hypervisor, is frequently mentioned alongside these features and can leverage many of the underlying operating system’s storage capabilities. It is mentioned here for completeness.

Recommendation: Hyper-V has not caught up with VMware in the depth and quality of its functionality, but it now provides a low-cost alternative for some basic functions. Larger installations are moving to multi-hypervisor environments.

Required skills for Windows Server 2012

Although Microsoft has provided administrators with relatively “clean” tools to manage the new set of storage features, those who want to use the broad swath of new storage features in Windows Server 2012 will still need a fairly substantial set of skills to ensure that the solution is viable.

Comprehensive support for the Microsoft solution potentially requires the following skills:

- Storage virtualization

- Working with different kinds of drives (e.g., internal SATA vs. external SAS)

- Disabling RAID controllers

- Performance limitations of USB

- Configuring iSCSI targets and initiators

- SMB 3

- Deduplication

In essence, the person configuring and deploying the Microsoft storage solution must be reasonably versed in all of the technologies that form the solution upon which the business must rely in order to be successful.

Three scenarios for deploying storage with Windows Server 2012

With the above as background, there are three potential scenarios that CIOs can follow when deploying storage in an SMB, each with its own set of challenges and benefits.

Scenario 1: The “go it alone” CIO with technical staff

Some organizations default to the “go it alone” route in an effort to save money on the initial investment in hardware and software. In these organizations, the CIO has a staff member (who may be the CIO himself) with the knowledge needed to deploy and support a full Microsoft-based storage solution. In this scenario, the CIO purchases commodity hardware and storage, which may include servers with significant storage capacity and/or external JBOD arrays with SAS connectivity.

There is certainly some financial upside in this scenario, but it comes with some significant risks and challenges as well.

Benefits

The primary benefit in this scenario is cost: we believe the CIO has the potential to save significant dollars on the initial upfront investment. The entire solution is commodity-based, leaving the CIO a broad array of storage options to choose from, ranging from very low cost to high cost.

Risks and challenges

The risks and challenges in this scenario, however, are vast, and they encompass both the staffing of the solution and the hardware chosen for it. Most importantly, the business is relying on what could be a single point of failure: the CIO or the engineer who implemented the solution. In most SMBs, it’s difficult to hire enough people to have fully overlapping skill sets, and few will have multiple staffers with deep storage knowledge.

The CIO needs to consider the impact on the business should the person who architected the solution leave the company. Storage is a foundational element of the IT architecture, so taking on significant risk in the ability to support it is generally not considered good IT practice. Solution sustainability is not guaranteed in this scenario.

Moreover, the choice of hardware could be a potential risk factor as well. As cost is likely the primary driving factor in this scenario, it’s more likely that the selected hardware is of lower cost and, potentially, lower quality. Although some of this risk can be mitigated through software, the fact remains that lower quality components represent a more substantial risk for the organization.

Finally, by “mish-mashing” storage technology, there isn’t a simple way to predict the levels of performance that will be garnered from the solution, making it very difficult to do proactive workload planning against the storage solution. This could lead to a CIO finding after the fact that a solution is not capable of meeting workload needs.

Scenario 2: Going the Microsoft route with a partner/consultant

Having considered scenario 1, a CIO may determine that going completely alone is not sustainable because of staffing concerns, and may instead bring in a partner or consultant and select appropriate hardware to help ensure sound long-term operation.

Benefits

There are some benefits to this option, and it carries less operational risk than the first scenario. The CIO has someone to call if the solution fails or fails to live up to expectations. In common parlance, he “has a throat to choke”: someone who can be held accountable for ensuring that the organization can continue to do business. Further, if the solution is sound, it may still cost less than more traditional storage solutions.

Risks and challenges

There remain, however, significant risks and challenges in this scenario. Bear in mind that much of the functionality that has been added to Windows Server 2012 is new, version 1 technology that has not been widely tried and tested, except in lab and beta environments.

Additionally, while using a partner for implementation and ongoing support may make operational sense, that holds true only if the external partner remains responsive to needs. Further, if the solution does not live up to expectations, the CIO may find that his money-saving solution has become a black hole or a never-ending money pit.

Scenario 3: Working with a traditional storage partner

Most reasonably sized organizations have traditionally chosen to work with a strategic storage channel partner that has access to the broad array of storage solutions available on the market. These partners generally have certified technical staff and can help customers match solutions to specific business problems. Moreover, to maintain certification, partners must often continually learn about the products they market and sell.

Benefits

The benefits of using a traditional storage partner are obvious. These organizations generally have battle-tested support teams that can keep customers operational. Moreover, in the event of a catastrophic failure, these partners have direct-line red phones into manufacturers and can quickly obtain additional support resources, something that is difficult for individual customers to procure.

Additionally, both channel partners and storage vendors have a plethora of information from the field. Potential customers can review case studies and customer testimonials and referrals to add credence to a vendor’s claims.

In other words, the CIO can take some of the worry out of the critical storage decision and focus more on his business and less on the technology that runs it. Frankly, this fact in and of itself should be considered a cost savings.

Risks and challenges

Of course, even the tried-and-true approach carries both real and perceived challenges. For the cost-conscious CIO who was looking to save money by considering a non-traditional approach, the initial storage investment may be larger than desired. And, since many traditional storage vendors have unbundled their components and sell them separately, the customer may feel “nickeled and dimed” throughout the negotiation process.

Once the solution is in place, the customer is also subject to potential vendor lock-in issues as well as being subject to product end of life announcements that may not always be in the customer’s best interests.

Action Item: In many discussions, customers have expressed strong interest in what Microsoft is doing from both a storage and a hypervisor standpoint. Storage vendors would do well to add support for Windows Server 2012’s ODX and SMB 3 capabilities as rapidly as is reasonable.

In discussions with CIOs, it’s clear that for many, buying new storage is about containing costs as much as possible, while for others it’s about buying storage that delivers the greatest business flexibility. Integration with Microsoft’s new features gives storage vendors the potential to meet both goals.

It’s also important to remember that customers have varying degrees of risk tolerance. Those that must save money at any cost are more likely to experiment with the new Microsoft features on their own. More risk-averse organizations will almost certainly continue to leverage traditional storage VARs.

Footnotes: Scott Lowe is co-author of the recently published Training Guide: Configuring Windows 8.

If you’d like to learn more about how Windows Server 2012’s deduplication feature operates, this white paper from the Storage Networking Industry Association entitled Primary Data Deduplication in Windows Server 2012 provides in-depth technical information.