In the networking space, the discussion of convergence typically focuses on how the SAN (storage, typically Fibre Channel (FC)) and the LAN (Ethernet) are coming together, with FCoE being one of the paths. The other network to consider is InfiniBand (IB), which today is used in high-performance computing (HPC) environments, predominantly for server-to-server connections that require low latency. The Ethernet camp is looking to drive down the latency of 10 Gigabit Ethernet solutions to chip away at InfiniBand. Mellanox is the market leader in IB solutions and, in a recent announcement, expanded its offerings beyond IB into Ethernet and even FC.

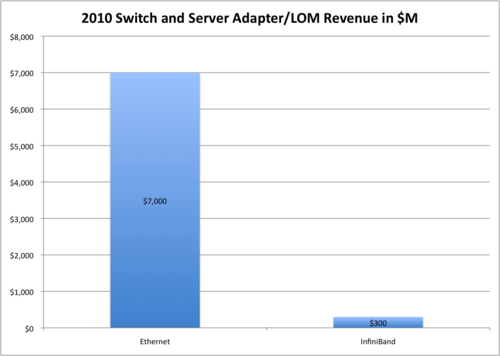

While IB and Ethernet solutions have done a good job of replacing proprietary interfaces in the Top500 supercomputers, the protocols typically overlap little in the enterprise space. IB is a good fit for environments such as financial trading and oil/gas research, where microseconds can be converted into millions of dollars. It also has a niche in high-bandwidth, low-latency processor-to-processor communication for enterprise database and metadata applications where locking rates are very high (e.g., Oracle Exadata, IBM SONAS, and EMC Isilon). Ethernet is the ubiquitous interconnect for most enterprise network traffic. FC and Ethernet can share the same physical layer, and with the DCB enhancements to Ethernet, FC can be transported over Ethernet using FCoE. Although options such as IPoIB and RDMA over Converged Ethernet (RoCE) bridge the two, IB and Ethernet still use different cables and serve different use cases. While IB is estimated to have a solid growth path in front of it, as seen in Figure 1, even a fraction of 1% of the Ethernet market would give Mellanox ($150M revenue in 2010) a large opportunity for growth.

Graphic: Wikibon 2011

Mellanox took some big steps into the enterprise market last year through its partnership with Oracle (the use of IB in Exadata/Exalogic and Oracle's 10% investment share). While the financial incentive for a public company to grow is undeniable, Mellanox will clearly have to balance the push deeper into the enterprise space that its investment from Oracle (Sun) encourages against serving its traditional high-performance computing (HPC) base. A potentially troubling sign of imbalance in the new Mellanox SwitchX product is that while the latency of the Ethernet product is an “industry-leading” 230ns, the new 56Gb/s InfiniBand latency is listed at 160ns, significantly higher than the Mellanox 40Gb/s IB latency, listed as low as 100ns (hence the change in PR positioning from “industry-leading latency” to “low latency”). For the IB space, latency is king, and Mellanox must not lose focus. The Ethernet market is crowded (see my round-up of Ethernet switches from Interop), with every server vendor either owning its own networking products or having multiple partners and OEMs. Mellanox must make sure that its relationship with Oracle and its expansion into Ethernet do not disrupt its current IB business, especially in the HPC arena.

Action Item: While solutions from Mellanox and other suppliers will continue to drive down the latency of Ethernet, InfiniBand will remain the leader in ultra-low latency and very high bandwidth for many years. Many enterprise architectures, including IBM SONAS, EMC Isilon, and Oracle Exalogic, use InfiniBand, and the growth of shared-nothing architectures where latency is key will continue to favor InfiniBand for the near future. While users should be cognizant of Ethernet’s growth into segments traditionally dominated by other interconnects, IB (and FC) will have strong niches for many years to come.
