
We have developed a model to evaluate the effectiveness of existing data reduction technologies. Our goal is to rank them by ROI, defined as (marginal benefit)/(marginal cost). The model uses the concept of CORE, which stands for Capacity Optimization Ratio Effectiveness. It measures the effectiveness of a storage optimization technology as a function of the time and cost needed to achieve a desired capacity reduction. In this model, a user can trade efficiency of optimization for elapsed time and cost. In other words, it’s a useful way to measure where more expensive reduction techniques will drive business value.

There are seven variables we consider in CORE:

- Storage Capacity (S) – By capacity, we mean the volume of data being optimized, as measured by the size of the dataset used to define the optimization index. In general, the larger the dataset used to define the index, the more CPU horsepower is required to optimize the data. For example, when Data Domain ingests a backup stream, the ‘capacity’ could be measured in TBs; Falconstor and ProtecTier increase the size of the dataset by using more processing power.

- Percent Capacity Reduction (R) - The percent of targeted capacity that is eliminated, which is a function of the type of data being optimized. For example, backup data can be more highly optimized than multimedia files. In general, the more capacity being optimized, the greater the percent capacity reduction.

- Cost of Solution (C_s) - Cost of the data reduction solution, including processing power, memory, bandwidth, software, and redundancy. (Note: for the first pass we've left out operational costs.)

- Cost of Latency (C_l) - A scaling value based on the assumed cost of a second of added latency.

- Value of Capacity Reduced (V) - Measured as the cost/TB multiplied by the amount of data reduced, i.e. the dollar value of storage that is eliminated.

- Workload IOs/second (W) - Workload characteristics (in IO/second)

- Optimization Overhead (O) - The overhead added by data optimization (in seconds/IO)

Here's the formula:

CORE = (S x R x V) ÷ (C_s + (C_l x W x O))
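The formula can be sketched in a few lines of Python. This is a minimal illustration, not Wikibon's implementation. The function name, parameter names, and input values are all hypothetical and are not taken from the tables in this note. It also assumes that V is expressed as a dollar value per TB, so that S x R x V comes out in dollars.

```python
def core_score(s_tb, r, v_per_tb, c_solution, c_latency, w_iops, o_sec_per_io):
    """Return CORE = (S x R x V) / (C_s + (C_l x W x O)).

    Assumption: v_per_tb is the dollar value of one TB of storage,
    so the numerator is the dollar value of the capacity eliminated.
    """
    benefit = s_tb * r * v_per_tb                            # S x R x V
    cost = c_solution + c_latency * w_iops * o_sec_per_io    # C_s + (C_l x W x O)
    if cost <= 0:
        raise ValueError("total cost must be positive")
    return benefit / cost


# Hypothetical inputs for illustration only.
example = core_score(
    s_tb=100,            # S: capacity being optimized, in TB
    r=0.5,               # R: fraction of capacity eliminated
    v_per_tb=2000,       # V: assumed dollar value per TB
    c_solution=40000,    # C_s: cost of the solution, in dollars
    c_latency=1000,      # C_l: latency cost scaling value
    w_iops=10000,        # W: workload, in IO/second
    o_sec_per_io=0.001,  # O: optimization overhead, in seconds/IO
)
print(f"CORE = {example:.2f}")  # prints "CORE = 2.00"
```

With these made-up inputs the benefit is twice the cost. Raising the overhead O or the workload W increases the latency term in the denominator and lowers the score.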

Wikibon has tested the CORE methodology and the first technologies analysed were:

- A-SIS de-duplication technology from NetApp

- Albireo de-duplication technology from Permabit

- Newly-announced compression technology from NetApp

- Real-time Compression technology from IBM (previously StorWize)

All of the technologies optimize the capacity of primary storage, all include inline re-hydration on read, and all except NetApp's A-SIS include inline write processing.

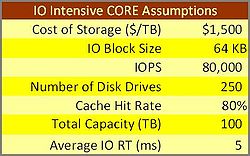

The workload assumptions are given in table 1; they describe an IO intensive environment using 400GB high performance drives.


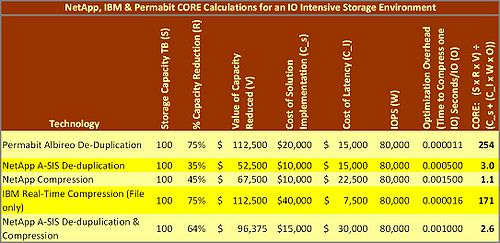

The CORE input variables and CORE results for this IO intensive environment are shown in table 2.

A CORE score of 1 means that the benefits of de-duplication equal the costs. The Wikibon analysis shows that the NetApp compression technology gives a poorer return than the A-SIS de-duplication technology. Combining the two technologies dilutes the benefit of A-SIS.
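Because a CORE score of 1 is the break-even point, the formula can be rearranged to estimate how much optimization overhead a workload can absorb before costs exceed benefits: setting CORE = 1 and solving for O gives O = (S x R x V - C_s) ÷ (C_l x W). The sketch below is an illustration under the same assumption that V is a dollar value per TB. The function name and input values are hypothetical and do not come from the Wikibon tables.

```python
def break_even_overhead(s_tb, r, v_per_tb, c_solution, c_latency, w_iops):
    """Return the overhead O (seconds/IO) at which CORE equals 1.

    Derived by setting (S x R x V) = C_s + (C_l x W x O) and solving for O.
    Returns None when the solution cost alone already exceeds the benefit,
    since no non-negative overhead can then reach break-even.
    """
    latency_rate = c_latency * w_iops                 # C_l x W
    if latency_rate <= 0:
        raise ValueError("C_l x W must be positive")
    net_benefit = s_tb * r * v_per_tb - c_solution    # S x R x V - C_s
    if net_benefit < 0:
        return None
    return net_benefit / latency_rate


# Hypothetical inputs for illustration only.
limit = break_even_overhead(
    s_tb=100, r=0.5, v_per_tb=2000,
    c_solution=40000, c_latency=1000, w_iops=10000,
)
print(f"break-even overhead: {limit:.4f} seconds/IO")  # prints 0.0060
```

Any technology whose overhead per IO sits below this limit scores above 1 for the given inputs, and a higher workload W shrinks the limit.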

The Permabit solution for de-duplication and the IBM Real-time Compression have far higher CORE scores and a much better return on investment than the A-SIS and NetApp compression systems for the workload and cost assumptions made in the comparison.

A number of storage arrays now integrate de-duplication and compression technologies, and many more are coming. The analysis shows that there is a cost to implementing all types of capacity optimization, including a potential increase in IO latency and the storage specialist effort needed to implement and monitor the additional technologies. The results of the Wikibon CORE analysis show that the additional costs of more advanced implementations from Permabit and IBM give a much better return on investment than less efficient implementations from NetApp.

Action Item: Focusing on one best-of-breed capacity optimization technology from either Permabit or IBM will give a better return in IO intensive environments than combining two less efficient technologies from NetApp.
