Storage Peer Incite: Notes from Wikibon’s March 18, 2008 Research Meeting

Moderator: David Vellante & Analyst: Nick Allen

Last week, NetApp announced a new name and a new strategy aimed at increasing its market and mind share among the largest storage users. The strategy centers on consolidating storage for the vast majority of applications (but not those that absolutely need tuned, high-performance storage) and on supporting server and storage virtualization. This newsletter examines that strategy as NetApp explained it at its analyst meeting. In general, the Wikibon community is positive about the plan and is waiting to see whether execution matches the promise.

G. Berton Latamore

NetApp is Dead: Long live NetApp

Early last week, NetApp unveiled a new look, a new name, and a new emphasis on gaining share in a total available storage market it estimates at $84B. NetApp believes its products address half of that market and, taking a page from Jack Welch’s playbook, has a stated goal of becoming #1 or #2 in that space. With a projected $4B in FY ’09 revenue, the company appears well on its way. Furthermore, in the largest segment of the market, networked storage hardware, NetApp CEO Dan Warmenhoven shared IDC data showing that, on a manufactured basis, NetApp is poised to overtake Hitachi for the number 2 position, although it would still trail EMC’s leading 34% share by approximately 20 points.

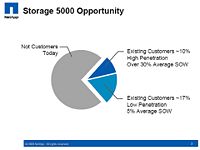

NetApp’s operational plan to achieve and measure this goal is to increase brand awareness and put more feet on the street to penetrate what it calls the Storage 5000, a proprietary list NetApp has compiled of the world’s 5,000 largest storage consumers. Amazingly, despite its 14-year history, strong reputation, and excellent products, NetApp does no business with 73% of the Storage 5000 and has deep penetration in only 10% of the accounts on the list. NetApp’s immediate opportunity is to penetrate further the remaining 17% of this base, which does only modest amounts of business with the company.

What is NetApp’s fundamental go-to-market strategy? The premise of NetApp’s value proposition is dead simple and extremely powerful: storage is growing at 50% per annum and budgets are flat to down, so help customers store more for less. NetApp claims that reductions in storage capacity of up to 75% are possible. The company’s strategy assumes that efficiency wins, and it is placing its bets squarely on the following investments:

- Storage for server virtualization. NetApp claims to have added storage support for what Wikibon believes is more than 5,000 VMware server instances this year alone and is aggressively pursuing a VMware land grab strategy;

- Breadth of offering, including storage virtualization, thin provisioning (FlexVol), data de-duplication, file consolidation, disk-based recovery, remote replication, cloning, ATA support, and multiple connectivity choices, all in a single, unified platform;

- Adding 1,000 feet on the street and enabling the channel by transferring intellectual property, including services capabilities, to channel partners to broaden support, strengthen awareness, and better serve customers.
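The premise behind NetApp's value proposition (stored data growing 50% a year against flat budgets) can be made concrete with a little arithmetic. The sketch below is illustrative only: it takes the 50% growth figure and a perfectly flat budget at face value, and it treats NetApp's "up to 75%" capacity reduction as a best case rather than a typical result. It computes the annual decline in cost per gigabyte that a flat budget requires, and how many years of 50% growth a one-time 75% reduction would absorb.

```python
import math

GROWTH = 0.50     # annual growth in stored data, as stated in the text
REDUCTION = 0.75  # best-case capacity reduction claimed by NetApp (assumption: one-time gain)

# With a flat budget, capacity * cost_per_gb stays constant, so the cost
# per GB must fall to 1 / (1 + growth) of the previous year's level.
required_cost_decline = 1 - 1 / (1 + GROWTH)

# A 75% reduction stores the same data on 25% of the raw capacity,
# i.e. a 4:1 effective ratio.
effective_ratio = 1 / (1 - REDUCTION)

# Years of growth the ratio absorbs: solve (1 + growth) ** n == effective_ratio.
years_absorbed = math.log(effective_ratio) / math.log(1 + GROWTH)

print(f"Required annual cost-per-GB decline: {required_cost_decline:.1%}")
print(f"Effective capacity ratio: {effective_ratio:.0f}:1")
print(f"Years of 50% growth absorbed: {years_absorbed:.1f}")
```

Under these assumptions, a one-time 4:1 gain absorbs roughly three and a half years of 50% growth; holding the budget flat beyond that still requires cost per GB to keep falling by about a third each year.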

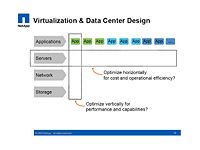

What is NetApp’s vision for what storage will look like in the future? Fundamentally, NetApp is committed to reducing the amount of storage users require. As a stated strategy, this is unique in the storage business and supports a compelling vision. Today’s applications are largely supported by vertically stovepiped infrastructures optimized at the behest of application heads. While this will continue for the most demanding applications, increasingly customers are looking to put in place more efficient (horizontal) platforms that can flexibly support a wider variety of applications. What is authentic about this vision is that instead of laying out a scenario where ALL applications standardize on NetApp infrastructure, this view acknowledges that there will be 'zones' of infrastructure established to do the job most effectively. In essence, it underscores the fact that there's an opportunity to clean up big chunks of inefficiency in the data center while certain applications will continue to require best-of-breed optimization for performance, recovery, security, and business resilience and will be tuned vertically within the infrastructure stack.

For storage architects and admins, the implication of this approach is a more services-oriented view in which storage provides reusable services across a variety of applications and is optimized for efficiency by reducing suppliers and standardizing on service offerings. While this limits the choices available to application heads, it dramatically simplifies storage procurement, installation, provisioning, migration, and retirement.

Will NetApp’s strategy work? In pursuing this strategy, NetApp has created a strong portfolio of excellent capabilities that Wikibon believes is unmatched in a single product architecture. Combined with the company’s emphasis on simplicity, this is a powerful mix that, if marketed more aggressively, will help NetApp continue to grow faster than the industry average. On balance, the Wikibon community is impressed with NetApp’s approach but believes that, to be effective, its branding campaign, which adds two points to OPEX, must be pursued consistently over a multi-year period. If NetApp’s investments do not allow it to grow back into its business model by the second half of FY ’09 (i.e., with OPEX settling at 45% of revenue), the plan faces risk.

In addition, the NetApp analyst event highlighted several “don’t haves” that NetApp must address in the near-to-intermediate term. First and most obvious is fulfilling its goal of increasing sales headcount by roughly 1,000 (for a total of 3,000). Scalability remains a concern for NetApp customers, who are eagerly awaiting ONTAP 8 (or a later release) to support clusters of more than two nodes; few details were provided about when this capability is expected and what form it will take. This will also have implications for the company’s remote replication strategy, which, while competitive with offerings from modular array companies, continues to be perceived as substantially less robust than offerings from EMC, IBM, and Hitachi, although admittedly Wikibon needs to do more research in this area. Finally, NetApp made little or no mention of storage for so-called Cloud Computing and does not appear to be going after consumer markets at this time.

Despite this white space, Wikibon believes NetApp’s value proposition is compelling and attractive for users and we expect the company to continue to grow at a rate faster than the marketplace.

Action item: Users that are large NetApp customers have enjoyed the benefits of simplicity and cost effectiveness for quite some time. Non-NetApp users should consider NetApp solutions but be aware that while the company has a comprehensive suite of storage offerings, the most demanding Tier 1 capabilities must be found elsewhere. Users that have modest experience with NetApp are in the best position to leverage a soon-to-be-released bevy of free SE services that NetApp will deploy to move these customers from light to heavy NetApp users.

When I use a word it means just what I choose it to mean, neither more nor less

Take the word virtualization as applied to storage. The Wikibon definition says that block storage virtualization “… breaks the physical connection between the LUN (server side) and the physical volume (array side); the virtualization engine keeps track of (maps) the connection between the LUN and the virtual volume (as the server sees it), and the connection between the virtual volume and the physical volume (as the array sees it).” This applies to storage virtualization as implemented by IBM’s SVC, Hitachi’s USP products, and, more recently, EMC’s Invista. All of them allow, for example, data to be migrated without disruption within an array or from one array to another, and all allow a full implementation of tiered storage. This is best described as non-disruptive heterogeneous virtualization, and it is an excellent strategic fit for large data centers with multiple arrays, where avoiding disruption of applications is a high priority. One Wikibon member uses it to wean people off traditional tier-1 storage, with the promise that if the performance of a tier-2 array is not adequate, the data can be seamlessly and non-disruptively migrated back to tier-1 storage.
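The two-level mapping in the Wikibon definition can be sketched as a pair of lookup tables. The Python below is a toy model, not how SVC, USP, or Invista are actually built: the class name `VirtualizationEngine`, the array and volume names, and the whole-volume granularity are all hypothetical. It illustrates why non-disruptive migration is possible: the server's LUN points at a virtual volume, so moving that volume to another array changes only the array-side mapping while the server keeps addressing the same LUN.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicalVolume:
    array: str      # hypothetical array name, e.g. "tier1-array"
    volume_id: str  # hypothetical volume identifier on that array


class VirtualizationEngine:
    """Toy model of the LUN -> virtual volume -> physical volume mapping."""

    def __init__(self) -> None:
        self.lun_to_virtual: dict[str, str] = {}                   # server-side map
        self.virtual_to_physical: dict[str, PhysicalVolume] = {}   # array-side map

    def present(self, lun: str, virtual: str, physical: PhysicalVolume) -> None:
        # Expose a virtual volume to a server as a LUN, backed by a physical volume.
        self.lun_to_virtual[lun] = virtual
        self.virtual_to_physical[virtual] = physical

    def resolve(self, lun: str) -> PhysicalVolume:
        # Every server I/O is translated through both maps.
        return self.virtual_to_physical[self.lun_to_virtual[lun]]

    def migrate(self, virtual: str, target: PhysicalVolume) -> None:
        # A real engine would copy the data first, then switch the pointer.
        # Only the array-side map changes; the server's LUN is untouched.
        self.virtual_to_physical[virtual] = target


engine = VirtualizationEngine()
engine.present("LUN-7", "vvol-42", PhysicalVolume("tier2-array", "vol-001"))
# Performance on tier 2 was not adequate: move the data back to tier 1.
engine.migrate("vvol-42", PhysicalVolume("tier1-array", "vol-913"))
print(engine.resolve("LUN-7"))
```

The usage lines mirror the Wikibon member's promise described above: the volume moves from a tier-2 array back to tier 1, and the server-side name never changes.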

NetApp's FAS (and 3PAR's InServ) storage systems claim to have virtualization as well. And they are right – they both have excellent virtual architectures that allow the virtual mapping of volumes within a single array. This allows for excellent utilization and performance tuning within that array. This is best described as homogeneous virtualization. This is an excellent strategic fit for installations with fewer arrays where non-disruptive migration is not the most important issue, or as a solution for a specific storage pool supporting (say) virtualized servers.

And then there is NetApp's V-Series, which is both a NAS gateway and a SAN virtualization platform. It is heterogeneous virtualization in the sense that the storage can be provided by qualified arrays from other vendors (such as the EMC CX array). Other than RAID, all functionality for the attached storage is provided by the V-Series appliance. It provides great connectivity and the ability to extend the life of existing storage arrays, but not non-disruptive migration of block-based storage.

Action item: It cannot be assumed that vendors will implement or integrate technologies such as virtualization and thin provisioning in the same way. Humpty Dumpty in Lewis Carroll’s “Through the Looking Glass” says the question is “which is to be master.” The business requirements must be master; choose the technology that best fits those requirements and priorities. If you are talking to NetApp as a general-purpose storage supplier for the first time, invest in developing a dictionary to translate NetApp storage-speak. It will be worth the effort.

Reflections on the New NetApp

Introduction

On Tuesday, March 11, 2008, the day of its investment and industry analyst event in New York City, NetApp announced a new look, a new name, a new tag line, and renewed enthusiasm for aggressively increasing awareness of its information management and data protection capabilities among enterprises worldwide. This article summarizes the event and offers our commentary, including the prospects for the "new" NetApp.

What's behind the new NetApp name and branding?

- While NetApp has grown from relative obscurity to a top-rated player, it has not successfully penetrated its Total Available Market, which it calls the Storage 5000 Opportunity (see Figure 1).

- Despite having evolved from a single-product appliance company into a top player, growing 15%-20% year over year to its present size of $3.3 billion in revenue with no slowdown in sight, it is still often perceived as just an appliance vendor.

- To address this, it has first dropped “Appliance” from its name and is now officially NetApp, Inc. (its stock symbol, NTAP, has not changed). It also has a new logo, a new web site, and a new tag line: "Go further, faster".

- Second, it has embarked on a brand awareness campaign that includes major advertising and marketing initiatives and spending.

- And, most important, it intends to put a thousand new quota-carrying feet on the street.

- NetApp also plans to increase indirect distribution with an expanded channel partners program.

- NetApp says these efforts will add roughly two points of operating expense, reflected in increased SG&A, but anticipates that the cost will be offset in less than a year by the resulting increase in revenues.

How is NetApp's value proposition different from its competitors' in areas such as VMware, deduplication, performance management, information management, and data protection?

- VMware and other x86 server virtualization environments:

- NetApp claims it has superior solutions for x86 server virtualization projects, including products and expertise, and, to make its point, two customers presented compelling success stories.

- As traditional backups don't work very well in virtual server environments, NetApp's disk-to-disk backup and snapshot copy capabilities give it a competitive advantage.

- Maintaining multiple identical system images can be disk intensive, but NetApp's cloning features can reduce this to as little as one copy.

- NetApp has strong partnerships with the server virtualization vendors, and its field force has developed considerable expertise, particularly with VMware.

- On the storage efficiency and cost front:

- NetApp claims that it ships more SATA disk drive capacity than its competitors – helping customers save cost and energy. Its RAID-DP is a key enabling technology here.

- Moreover, with its two data reduction features, 1) a unique data deduplication technology that operates in the background and reduces all data stored on NetApp products, and 2) volume-based thin provisioning, NetApp claims users can save as much as 75% of real disk space and 40-75% in disk costs.

- In the future, NetApp claims it will add data compression and power saving features.

- On the performance front:

- NetApp provides volume-based QoS, application-based cache partitioning, and "flexible memory" (dubbed FlexScale; at the time of writing we found nothing about it on NetApp's website or elsewhere on the Web).

- NAND flash memory is also coming, but not as simple plug-in replacements for HDDs.
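The data reduction features listed above (thin provisioning, deduplication, and cloning of identical system images) share one idea: volumes hold pointers to blocks rather than owning the blocks outright. The sketch below is a generic, in-memory illustration of that idea, not NetApp's implementation; the class and method names, the 4 KiB block size, and the use of SHA-256 fingerprints are all assumptions for illustration. It deduplicates inline for simplicity, whereas the text says NetApp's deduplication runs in the background, and it omits the byte-for-byte match verification and reference counting (so overwritten blocks are never freed) that a real system needs.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size, for illustration only


class ThinDedupPool:
    """Hypothetical shared pool of thin-provisioned, deduplicated volumes."""

    def __init__(self) -> None:
        self.blocks: dict[str, bytes] = {}            # fingerprint -> stored block
        self.volumes: dict[str, dict[int, str]] = {}  # volume -> {block index: fingerprint}
        self.provisioned: dict[str, int] = {}         # volume -> logical size in blocks

    def create_volume(self, name: str, size_blocks: int) -> None:
        # Thin provisioning: record the logical size, allocate no blocks yet.
        self.volumes[name] = {}
        self.provisioned[name] = size_blocks

    def write(self, name: str, index: int, data: bytes) -> None:
        if not 0 <= index < self.provisioned[name]:
            raise IndexError("write beyond provisioned size")
        block = data.ljust(BLOCK_SIZE, b"\0")[:BLOCK_SIZE]
        fingerprint = hashlib.sha256(block).hexdigest()
        # Deduplication: a block with identical content is stored only once.
        self.blocks.setdefault(fingerprint, block)
        self.volumes[name][index] = fingerprint

    def clone(self, source: str, name: str) -> None:
        # Cloning copies the pointer map only; no data blocks are duplicated.
        self.volumes[name] = dict(self.volumes[source])
        self.provisioned[name] = self.provisioned[source]

    def logical_blocks(self) -> int:
        return sum(self.provisioned.values())

    def physical_blocks(self) -> int:
        return len(self.blocks)


pool = ThinDedupPool()
pool.create_volume("vm-image-01", size_blocks=10_000)
for i in range(100):
    pool.write("vm-image-01", i, f"os block {i}".encode())
for n in range(2, 11):
    pool.clone("vm-image-01", f"vm-image-{n:02d}")
pool.write("vm-image-05", 100, b"unique config")

print(pool.logical_blocks(), pool.physical_blocks())
```

In this toy run, ten system images provisioned at 10,000 blocks each report 100,000 logical blocks but consume only 101 physical blocks. The ratio is an artifact of the made-up data and says nothing about NetApp's 75% claim; it only shows the mechanism.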

What is NetApp's vision for the future and what are the organizational implications for storage administrators?

- Dave Hitz, NetApp's articulate co-founder, discussed a vision in which storage becomes a horizontal infrastructure services layer providing virtualized storage pools that are process- and service-centric and optimized across applications for cost effectiveness. He also envisioned empowering the "customer" (e.g., database, SAP, and system administrators) to tap these services without direct involvement by storage administrators, who would instead set policies and build a virtualized infrastructure from a real one.

- Hitz also acknowledged that there will be 'zones' of infrastructure established to do a specific job most effectively. In essence, this underscores the fact that there's an opportunity to clean up big chunks of inefficiency in the data center, but certain applications will continue to require best-of-breed optimization for performance, recovery, security, and business resilience and will be tuned vertically within the infrastructure stack. For more on this, see this Wikibon article.

Commentary

- However, based on the analyst day presentations, the new NetApp seemed entirely focused on supporting server virtualization. To be sure, NetApp’s story for storage for server virtualization is a strong one, but the company appears to have deliberately de-emphasized many of its other strengths, such as file server consolidation and virtual tape libraries.

- Will its new branding, marketing, advertising, and increased sales force pay off?

- The Wikibon community believes feet on the street are the most important element of the company's new programs. NetApp must execute well here, and if the new sales program does not generate immediate results, it must adjust quickly. The same is true for the new advertising and marketing programs. We are somewhat skeptical about the advertising campaign but do note that on March 19th, 2008, a Google search for "VMware Storage" pulled up NetApp as the first sponsored hit. However, NetApp showed up last among the unsponsored hits on the first page, below most of its competitors.

- Other considerations:

- Dan Warmenhoven, the company's President and CEO presented NetApp's strengths as:

- Disk-based backup,

- Server virtualization,

- Ethernet storage (meaning NAS, iSCSI and eventually FCoE),

- Cost efficiency.

- We agree that NetApp has a strong story in these areas, but it is clear that NetApp is loath to take on the big storage players on the Fibre Channel front. NetApp products lack sufficient scalability and FICON connectivity to do this. NetApp must finish integrating the technology from its Spinnaker Networks acquisition to improve scalability and availability.


Action item: Users should consider NetApp for their RFP shortlist for storage for VMware (or other) server virtualization projects, but be aware that while the company has a comprehensive suite of storage offerings, solutions for the most demanding Tier 1 requirements must be found elsewhere. Users should also leverage a soon-to-be-released bevy of free NetApp SE services. Vendors should take note of NetApp’s well-orchestrated campaign and prepare to counter with services, improved competitive assessments, and product features.

NetApp's silo buster vision of the future

Dave Hitz, NetApp's articulate co-founder-turned-marketing-guy, put up this slide at the analyst meeting in NYC last week. It depicts a vertical slice of a traditional approach to architecting infrastructure, in which resources (servers, networks, storage, etc.) are stovepiped and optimized for applications (largely by application heads). This is a common scene in enterprises, where companies mix, match, and manage a sea of heterogeneous storage assets designed to optimize business applications.

There's actually nothing wrong with that picture until you consider that in the Fortune 1000, IT spend as a percent of revenues has dropped from 3.9% in 2000 to 3.0% today. It's simply too expensive to maintain such a 'one-off' approach for all applications. While this approach will continue for specialized applications with the most demanding performance requirements (e.g., mainframe and transaction-oriented apps), customers are increasingly looking to put in place more efficient (horizontal) platforms that can flexibly support a wider variety of applications. NetApp envisions virtualized pools that are process- and service-centric and optimized across applications for cost effectiveness.

What is impressive about NetApp's vision is that instead of laying out a scenario where ALL applications standardize on NetApp infrastructure, this view acknowledges that there will be 'zones' of infrastructure established to do the job most effectively. In essence, it underscores the fact that there's an opportunity to clean up big chunks of inefficiency in the data center but certain applications will continue to require best-of-breed optimization for performance, recovery, security, and business resilience and will be tuned vertically within the infrastructure stack.

For storage architects and admins, the implication of this approach is a more services-oriented view in which storage provides reusable services across a variety of applications and is optimized for efficiency by reducing suppliers and standardizing on service offerings. While this limits the choices available to application heads, it dramatically simplifies storage procurement, installation, provisioning, migration, and retirement. This means certain tasks (e.g., provisioning) can be either automated or transitioned to server or application people within the organization, while the storage admin plays more of a service provider role.

The problem with this scenario is that when IT standardizes on a limited set of menu-based services and limits choice, those with power in the organization will kick, scream, and fight to protect their tier 1 golden geese and concierge-class service levels. The opportunity is to target major pockets of inefficiency and virtualize them with VMware or other approaches. A commitment to initiate storage and server virtualization in tandem will, by its very nature, address the organizational tensions by 'forcing' standardized approaches to service delivery.

Action item: Organizations that have the stomach to take a services-oriented approach should commit to pursuing storage and server virtualization in tandem. NetApp's horizontal infrastructure vision can lead to substantial cost savings and efficiencies. Every CIO and CFO should at least assess the tradeoffs, which for many applications will often prove to be artificial and will favor a horizontal (standardized) approach.

NetApp riding VMware coattails - hard

Every presentation at the NetApp user conference mentioned VMware multiple times. NetApp is riding the server virtualization coattails, and riding them hard – with good reason.

Most server virtualization projects target the myriad small servers supporting tier-2 and tier-3 applications with direct-attached storage (DAS) or tier-2 storage networks. As previously discussed on Wikibon, the storage and backup systems currently used to support these racks of servers are unlikely to be able to support a virtualized server environment without significant re-architecting. Care will have to be taken to avoid major increases in backup software costs.

The key storage requirements of a typical VMware implementation are:

- Flexible architecture – many types of block- and file-based storage implementations will need to be migrated with many protocols. Having a single basic virtualized architecture that will handle all this with common management tools is a significant advantage in a typical server virtualization project.

- Key Functionality – a number of technologies such as cloning are useful in supporting new approaches to backup and recovery.

- Skill and knowledge to design, plan, and support an appropriate storage infrastructure

NetApp is well positioned on the first two items, even with some of the limitations in its virtualization approach. NetApp has ambitious plans to transfer expertise from its in-house services organization to the channel. However, for medium or large-scale projects it would be prudent to ensure that some of NetApp’s new feet on the street are directed to your installation.

Action item: If your IT shop is not a general-purpose NetApp storage user, NetApp should be included on the RFP shortlist for storage for VMware (or other) server virtualization projects. Ensure that NetApp has direct skin in the game in the design and support of your solutions. Whatever solution is adopted, ensure that implementation and operational expertise is developed and retained in house; this new architecture will be a foundation for many years to come.

The nature of competition in storage

In his closing remarks at the NetApp analyst briefing, CEO Dan Warmenhoven suggested that NetApp essentially believes the storage industry is segmenting along more focused lines of competition, and the pure plays are going to win.

One could take this argument to the extreme and posit that the marginal economics of hardware (60% gross margin), software (90+% gross margin), and services (30%) are so different that competition along those lines will emerge and further segment the business, leading to a highly dis-integrated (1) storage business dominated by ‘pure plays’ in a business model sense. In such a theory, Symantec et al. would have the advantage in storage software, players like NetApp and EMC in storage hardware, and IBM in storage services.

It’s doubtful this is what Dan meant, because he’s a CEO and not prone to comments that weaken his argument for world domination by his own company. So if by ‘pure plays’ Dan means companies focused solely on storage, does that suggest, for example, that, all things being equal, 3PAR as a pure play has a higher chance of success than, say, EqualLogic, because the latter company is now part of a server (or distribution) business model?

One could observe that the storage business has reached a kind of business model equilibrium after independent business models such as EMC’s devastated the highly vertically integrated models of IBM, Digital, HP, Fujitsu, and Hitachi. And we’ve evolved to a business where, within a market segment like storage, certain rules of competition are clear: don’t make your own disk drives (Hitachi notwithstanding), but rather blend the requisite and synergistic businesses (hardware, software, and services) necessary to compete and serve enterprise customers to achieve competitive advantage. This means server vendors like IBM, HP, Sun, and Dell, while not as storage-centric as EMC and NetApp, aren’t at a business model disadvantage, because what they lack in storage focus they can offset with complementary server and services leverage.

Focused business models in and of themselves aren’t likely to change this dynamic. Perhaps the consumerization of IT will. This discussion sounds academic, but we don’t think John Akers, Bill Gates, or Andy Grove would necessarily agree.

Action item: Since the advent of microprocessor-based computing, the dis-integrated structure of the IT business has changed subtly. Competition still largely occurs along focused and segmented lines. While the Internet has loosened Microsoft's and Intel's monopolies, the real story is Google's domination, which is centered on consumerization and is poised to impact the traditional enterprise space. Suppliers and customers alike must consider the consumerization of IT, what it means to their businesses and respond accordingly.

Footnotes: (1) Author David Moschella popularized the notion of a dis-integrated IT industry in the 1980s, a concept that is now widely recognized as the fundamental explanation of the transference of IBM's monopoly power to Intel and Microsoft.

Storage domination: Not for the faint of heart

Good news for fans of NetApp. The company is aggressively investing to expand its market share and is planning to become the number two player in the industry, so it can set its sights on number one. Too bad it's not as easy as spending a few million bucks, getting a new logo, and popping some ads in the Wall Street Journal, Computerworld, and NPR, and NetApp knows this. The company started with a platinum list called the Storage 5000. This is one of those lists you have to build yourself, because you really can't buy it at any reasonable price. One can't help but wonder how many such lists exist in the storage business and what those assets are worth in total.

EMC no doubt has the most accurate Storage 5000 (or more) in the business. IBM has always been the king of market segmentation and has more data than anyone, but it's unclear how storage-centric its list is and how useful it is in a pure-play storage context. Ditto for HP and Sun. Hitachi, with its heavy reliance on distribution partners HP and Sun, probably has somewhat less visibility on the buying patterns of named end customers.

So what does this magic list mean for NetApp specifically and the industry in general? It means that NetApp has a powerful tool with which to target initiatives and measure success. If the company can demonstrate to itself that there's a cause-and-effect relationship between investment and market penetration, then this list will become a real thorn in the side of competitors that don't have a similar capability.

In the end, however, execution is everything, and NetApp must be careful not to leave its most loyal customers open to competitors while it goes after the unwashed masses of non-NetApp users. The real opportunity is for NetApp to get more sales motion going with those lighter-weight NetApp customers that are probably buying NFS simplicity. The opportunity for NetApp competitors is to test the loyalty of NetApp customers, knock on doors right after NetApp visits new prospects, and try to disrupt NetApp's channel partner loyalty.

Action item: NetApp's development of its Storage 5000 list is a shot across the bow of the big storage competitors. It is a resource that only the largest storage companies are likely to possess and one that can be leveraged and evolved indefinitely. NetApp competitors need to revisit their own similar assets and evaluate them in terms of accuracy, predictive capability, depth, and ease of use.

NetApp helps BT get rid of tons of stuff...literally

After each Wikibon Peer Incite Research Meeting we write about GRS (getting rid of stuff). One of the most useful presentations at NetApp's 2008 Analyst Event came from Michael Crader, Head of Windows Consolidation at BT, who talked about how virtualizing Windows servers and using NetApp infrastructure helped him get rid of 'tons' of stuff. Literally: the project allowed BT to remove seventy-five (75) tons of server and storage gear. This equipment was hauled away on huge trucks and disposed of in an eco-friendly manner.

Before the project, utilization of storage and servers was often in the teens, and BT was at capacity for power and cooling. Backup took 96 hours, deploying new servers and storage took weeks, and customers were not happy. The project used VMware to cut operational costs by increasing server and storage utilization, reducing power and cooling expenses, and improving information access overall.

BT's objectives for the project were to consolidate 3100+ existing Wintel servers and achieve a consolidation ratio of 15:1 while moving to an on-demand model. BT saved $2.5M per year in energy costs, cut required hardware by 50%, increased storage utilization to 70%, and reduced server maintenance costs by 90%; the project, which started in April 2006, paid back by Christmas.

Here's the before and after:

- 3100 servers down to 134

- 700 racks at 8 sites down to 40 at 5 sites

- 2.1 megawatts of power consumed down to 0.24 megawatts

- 9300 network ports down to 840

- Backup from 96 hours to a full daily in 30 minutes

- 20% storage utilization to 70%

- 6 weeks to deploy new servers down to 1 working day
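BT's before-and-after figures are easier to compare as ratios. The short script below uses only the numbers from the list above (the metric labels are ours) and computes the percentage reduction and the before-to-after ratio for each. It also shows that the achieved server consolidation, 3100 servers down to 134, works out to roughly 23:1, well beyond BT's 15:1 objective.

```python
# Before/after figures as presented by BT; the dictionary keys are our own labels.
before_after = {
    "servers": (3100, 134),
    "racks": (700, 40),
    "power_mw": (2.1, 0.24),
    "network_ports": (9300, 840),
    "backup_hours": (96, 0.5),  # 96-hour backup vs. a full daily backup in 30 minutes
}

# Fractional reduction for each metric: 1 - after / before.
reductions = {metric: 1 - after / before for metric, (before, after) in before_after.items()}

for metric, (before, after) in before_after.items():
    print(f"{metric:>14}: {before} -> {after} "
          f"({reductions[metric]:.0%} reduction, {before / after:.1f}:1)")

# Achieved server consolidation ratio versus BT's 15:1 objective.
consolidation_ratio = 3100 / 134
print(f"Server consolidation: {consolidation_ratio:.1f}:1 vs. 15:1 target")
```

Storage utilization (20% to 70%) and deployment time (6 weeks to 1 working day) are left out because their units do not reduce to a simple before/after ratio of the same quantity.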

With 9PB of NetApp storage and 27PB overall, BT is quite large, and not all customers will see such results. And while this case is as much (if not more) a story about VMware, and several suppliers probably have similar stories, the fact remains that a representative from BT took the time to fly to New York and tell a bunch of analysts how great NetApp is. That is not common in the analyst event business. Usually you get talking heads or quotation marks in slideware. The cold hard truth is that NetApp provided the storage infrastructure and considerable VMware expertise to make this project a reality, and this very credible guy from BT stood up and said so. He answered all of our questions about the project without any meddling or handling whatsoever from NetApp, which was unique.

Action item: Customers with poor utilization, slow deployment of resources, and out-of-control energy costs should synchronize storage and server virtualization projects, radically re-architect their data centers, and lower energy bills by getting rid of gear. Users should be aware that processes will have to change dramatically, including and especially backup, and that tools (e.g., performance tuning) are still immature. As such, users will need help. NetApp is demonstrating prowess in server virtualization projects in general and VMware in particular, and should be considered a best-of-breed storage supplier for VMware.
