#memeconnect #fio


Executive Summary

John Little, the author of Little’s Law, recently came to Silicon Valley to talk to social media system designers about the implications of his law for IT system design. Little’s Law is used extensively in operations research, where it teaches manufacturing engineers to focus on eliminating work waiting in queues in order to reduce cycle time as much as possible. This approach is known as lean manufacturing. A full explanation of Little’s Law is given in the footnotes.

This research looks at the implications of applying Little’s Law to computer design. It concludes that the major queues in current systems are in the I/O subsystems. Applying flash technology can drastically reduce these queues, so that new high-performance systems built from scale-out volume components will deliver applications that are much richer in data and much easier to use. Social media sites and other Web services are in the vanguard of developing these new high-performance “software mainframes”.

CIOs and CTOs should be watching the development of these technologies and should start to prepare for their introduction. Wikibon projects that this will mean major changes in high-performance computing, storage systems, database systems and operating systems over the next decade. Investments over the next two-to-three years are likely to determine the winners and losers.

Computing Queues

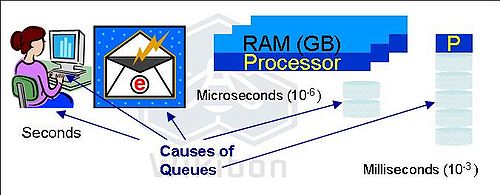

Figure 1 shows the major queues in today’s systems.

The queues are caused by major disparities in speed. Within the data center, the fastest components are the processor and random access memory (RAM), which operate in microseconds. Hard disk drives, operating in milliseconds, are a thousand times slower. These drives sit inside servers, in separate storage arrays with their own processors, and in client devices. Because the drives are mechanical, robust SCSI storage protocols and file systems have been designed to cope with the huge speed differences. Solid-state drives (SSDs) use the same protocols, so even though they offer higher transfer speeds and much higher IOPS, their I/O latencies still exceed 1 millisecond. This speed difference limits the amount of data, and the number of data accesses, that can be handled within a reasonable end-user response time of (say) 1 second. If the data has “state” and is being updated, the database system required to manage it is also subject to major throughput constraints caused by the same disparities in speed.
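
To make the 1-second constraint concrete, the short Python sketch below counts how many dependent I/Os (each one waiting for the previous one to finish) fit inside that budget at different per-access latencies. The latency values are assumptions chosen only to match the orders of magnitude used in this article (RAM in microseconds, disk in milliseconds, SSDs behind the SCSI stack at about 1 ms, flash a thousand times faster than disk); they are not measurements of any particular device.

```python
BUDGET_US = 1_000_000  # 1-second end-user response time, in microseconds

# Assumed per-access latencies in microseconds (illustrative orders of magnitude,
# not measurements). Integers avoid floating-point rounding in the division.
LATENCY_US = {
    "RAM": 1,
    "flash, accessed directly": 10,
    "SSD via SCSI stack": 1_000,
    "hard disk": 10_000,
}

def serial_accesses(budget_us: int, latency_us: int) -> int:
    """Number of dependent I/Os that complete within the budget, one after another."""
    return budget_us // latency_us

for device, latency in LATENCY_US.items():
    print(f"{device:>24}: {serial_accesses(BUDGET_US, latency):>9,} serial accesses")
```

With these assumed numbers, a request that must chain disk accesses runs out of its 1-second budget after about 100 of them, which is the practical ceiling on how much data an interactive application can touch.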

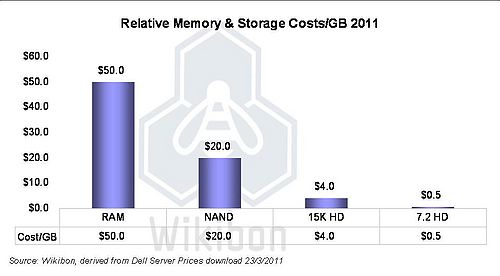

Little’s Law doesn’t take cost into account. Figure 2 shows the relative costs of the different technologies at current prices. RAM is the most expensive and the least able to scale, because it is addressed at the byte level; Windows 7, for example, supports a maximum of 192GB. NAND storage, however, can scale to terabytes because it is addressed in much larger units. This layer can be accessed directly via RDMA and shared between processors. And, most important of all, NAND is persistent – if the power goes, the data is retained.

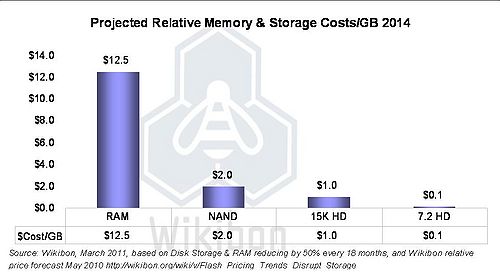

Figure 3 projects these prices just three years forward. The costs of disk storage and RAM follow Moore’s Law. The cost of NAND is projected to fall even faster, driven by the explosion of its use in consumer devices. Three years out, flash (NAND) is projected to cost only three times as much as high-performance disk while being a thousand times faster.

Source: Wikibon, March 2011, based on Disk Storage & RAM reducing by 50% every 18 months, and Wikibon relative price forecast for Flash, May 2010.
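
The source note’s assumption that disk and RAM prices halve every 18 months compounds as shown in the sketch below. Over the three-year horizon of Figure 3 it amounts to two halvings, a 4x reduction. The starting price of 1.0 is an arbitrary relative unit, and the Wikibon flash forecast itself is not reproduced here.

```python
HALVING_MONTHS = 18  # from the source note: disk storage & RAM fall 50% every 18 months

def projected_price(price_now: float, months_ahead: float,
                    halving_months: float = HALVING_MONTHS) -> float:
    """Price after months_ahead months if it halves every halving_months months."""
    return price_now * 0.5 ** (months_ahead / halving_months)

# Arbitrary starting price of 1.0 (relative units), projected across Figure 3's horizon.
for months in (0, 12, 18, 24, 36):
    print(f"{months:>2} months: {projected_price(1.0, months):.3f}")
```

A technology whose price falls faster than this, as the article projects for NAND, closes the gap with disk at a rate set by the ratio of the two halving periods.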

Lean Computing

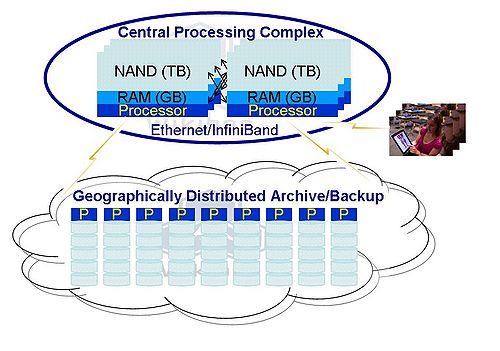

Little’s Law and the changes in relative prices allow the industry to architect high-performance systems in an entirely different way, as shown in Figure 4. Mechanical storage devices run hot, take up too much space, and are a thousand times too slow. For high-performance systems, their role as persistent storage close to the processor can be taken over by NAND (flash) storage, as shown in the central processing complex in Figure 4. The NAND storage is directly RDMA-addressable and linked to two processors, which manage the data in that node. Other nodes access that storage through a high-speed interconnect backbone (Ethernet or InfiniBand). This central processing complex will be built from high-volume components packed densely in racks. Databases, file systems and operating systems will be adapted to use NAND storage, which will lead to significant simplification.

This simplification and reduction in I/O queues creates significant savings throughout the system. The multiprogramming level (the number of requests in progress at once) falls by an order of magnitude or more, which allows better utilization of system resources. A high percentage of RAM is currently taken up with I/O buffers; in the new design, the number of buffers and the time they are held are a small fraction of what current systems require. A user’s data can be paged out to NAND storage after a short period of inactivity, without the time penalty of recovering it from disk. The I/O subsystems can be significantly simplified and can themselves become leaner. Locks in file systems and database systems are held for far shorter periods, increasing the throughput and/or the amount of data that can be retrieved within a transaction or query. When the numbers are worked through, the result is leaner, less expensive, and more scalable systems. That is why computer engineers at social media companies are so interested in Little’s Law.
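
Little’s Law shows the multiprogramming effect directly: at a fixed throughput λ, the average number of requests in flight is L = λW, so cutting the time each request spends waiting on I/O cuts concurrency, and the buffers and locks held on its behalf, in proportion. The sketch below also shows the simplest lock bound: if every update must hold the same exclusive lock, throughput can never exceed one divided by the hold time. The workload, per-transaction times, and lock hold times are all hypothetical values chosen for illustration.

```python
def requests_in_flight(throughput_per_s: float, time_in_system_s: float) -> float:
    """Little's Law, L = λW: average number of requests in the system."""
    return throughput_per_s * time_in_system_s

def lock_throughput_ceiling(hold_time_s: float) -> float:
    """Most updates per second possible if each holds the same exclusive lock for hold_time_s."""
    return 1.0 / hold_time_s

# Hypothetical workload: 5,000 transactions per second in both designs.
THROUGHPUT = 5_000
for label, w in (("disk-bound, 50 ms each", 0.050), ("flash-based, 2 ms each", 0.002)):
    print(f"{label}: ~{requests_in_flight(THROUGHPUT, w):.0f} transactions in flight")

# Hypothetical hot-lock hold times: 10 ms with a disk I/O under the lock, 0.5 ms with flash.
for label, hold in (("disk", 0.010), ("flash", 0.0005)):
    print(f"{label}: at most ~{lock_throughput_ceiling(hold):.0f} updates/s on one hot lock")
```

With these assumed figures, the same throughput needs about 250 concurrent transactions on disk but only about 10 on flash, a 25x drop in multiprogramming level.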

High-density hard disk drives will still be by far the cheapest storage for infrequently accessed data and will be the foundation of the geographically distributed archive/backup “cloud” shown in the lower half of Figure 4. Processing power will be available within this complex to allow data extraction with big data tools such as MapReduce and Hadoop. Geographic distribution using erasure coding techniques, such as those introduced by Cleversafe and others, will minimize cost and the amount of data that must be moved across the network.
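
Erasure coding stores data as n fragments, any k of which are enough to rebuild it, so for such codes the storage overhead is n/k rather than the 3x of triple replication. The sketch below is the simplest possible instance: k data fragments plus a single XOR parity fragment, which survives the loss of any one fragment (for example, one site). It is not Cleversafe’s dispersal algorithm; production systems use codes that tolerate several lost fragments. The function names and the sample data are hypothetical.

```python
from functools import reduce

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length fragments."""
    return bytes(x ^ y for x, y in zip(a, b))

def encode(data: bytes, k: int) -> list:
    """Split data into k equal fragments (zero-padded) and append one XOR parity fragment."""
    size = -(-len(data) // k)                  # ceiling division
    padded = data.ljust(size * k, b"\0")
    fragments = [padded[i * size:(i + 1) * size] for i in range(k)]
    return fragments + [reduce(xor_bytes, fragments)]

def decode(fragments: list, original_len: int) -> bytes:
    """Rebuild the data when at most one fragment (data or parity) is None."""
    missing = [i for i, f in enumerate(fragments) if f is None]
    if len(missing) > 1:
        raise ValueError("single parity can recover only one lost fragment")
    if missing:
        # The XOR of all n fragments is zero, so a lost one equals the XOR of the rest.
        fragments = list(fragments)
        fragments[missing[0]] = reduce(xor_bytes, [f for f in fragments if f is not None])
    return b"".join(fragments[:-1])[:original_len]

data = b"geographically dispersed archive"
frags = encode(data, k=4)                      # 4 data fragments + 1 parity = 5 sites
frags[2] = None                                # lose one site
assert decode(frags, len(data)) == data
print(f"storage overhead: {len(frags) / 4:.2f}x vs 3.00x for triple replication")
```

The trade-off is that rebuilding a lost fragment requires reading the surviving fragments, which is acceptable for the low-access archive tier described here.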

Users are the most expensive resource of all. Figure 4 shows them using mobile devices with NAND storage. The central computing complexes will allow the development of far more data-rich applications, which will interact with these flash-filled mobile devices and significantly improve end-users’ productivity and quality of experience.

Conclusions

Using Little’s Law, Wikibon projects that new system architectures will target storage I/O queues and that persistent NAND storage will be a critical component of these new systems. Wikibon projects that these new computer architectures will evolve slowly, with the newest large-scale applications such as social media computing in the vanguard. Most of the technology pieces are already in place: PCI cards with NAND and the VSL (Virtual Storage Layer) memory architecture and software are available from Fusion-io, as are iPad mobile clients from Apple. Fusion-io and Apple were winners of the Wikibon CTO awards in 2010. Clustrix has an architecture similar to Figure 4, using battery-backed RAM storage instead of flash, for its database appliance aimed at new big data transaction systems. EMC’s Greenplum on VCE, IBM’s Netezza, and HP’s Vertica systems all distribute their databases over scale-out architectures and are suited to big data analytics. Companies such as Cloudera are distributing big data tools such as Hadoop. Clustrix, EMC, and Cloudera were all CTO award finalists in 2010. Many other innovations will take place over the next five years. The overall conclusion is that the fundamental architecture of large transaction, analytic, and computing systems will change dramatically over that period.

Action Item: CIOs and CTOs should watch the development of these technologies and should start to prepare for their introduction. Wikibon projects that this will mean major changes in high-performance transaction and analytic compute systems, storage systems, database systems, and operating systems over the next decade. Investments over the next two-to-three years are likely to determine the winners and losers.

Footnotes:

Introduction to Little’s Law

John Little, the author of Little’s Law, came to Silicon Valley to talk to social media designers about the implications of Little’s Law for the design of computing solutions. Figure 5 shows the fundamental model.

Source: Little's Law, John D.C. Little and Stephen C. Graves, Massachusetts Institute of Technology, downloaded 3/22/2011, Figure 5.1 modified by Wikibon 2011

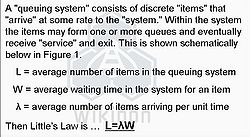

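Figure 6 shows the definitions of the terms. Little’s Law (or theorem, for the pedants) is

L = λW

where L is the average number of items in the system, λ is the average arrival rate (equal to the throughput in a stable system), and W is the average time an item spends in the system.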

Source: Little's Law, John D.C. Little and Stephen C. Graves, Massachusetts Institute of Technology, downloaded 3/22/2011, modified by Wikibon 2011

For those who have struggled with queuing theory problems, the result is surprising, because it is independent of the detailed probability distributions involved. No assumptions are therefore required about how customers arrive or are serviced. In addition, the result applies to any system, including systems nested within other systems, so individual subsystems within a computer, or the system as a whole, can be analyzed. The model only requires that the system be stable and non-preemptive.
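
The distribution-free claim can be checked numerically. The sketch below simulates a single-server first-come-first-served queue with exponential interarrival times and uniform (deliberately non-exponential) service times, both arbitrary choices, then measures L as the time-average number of customers present, independently of λ and W. Because the simulated system starts and ends empty, L and λW agree to floating-point precision for any interarrival and service distributions you substitute, which is one way to see why no distributional assumptions are needed.

```python
import random

def simulate_fifo_queue(n_customers, interarrival, service, seed=0):
    """Single-server FIFO queue. Returns (arrival, departure) times for each customer."""
    rng = random.Random(seed)
    clock = 0.0
    server_free_at = 0.0
    jobs = []
    for _ in range(n_customers):
        clock += interarrival(rng)                 # next arrival time
        start = max(clock, server_free_at)         # wait if the server is busy
        server_free_at = start + service(rng)      # this customer's departure time
        jobs.append((clock, server_free_at))
    return jobs

def time_average_in_system(jobs):
    """Time-average of N(t), the number of customers present, via an event sweep."""
    events = sorted([(a, +1) for a, _ in jobs] + [(d, -1) for _, d in jobs])
    area, present, last_t = 0.0, 0, 0.0
    for t, delta in events:
        area += present * (t - last_t)             # N(t) is constant between events
        present += delta
        last_t = t
    return area / last_t                           # observed from time 0 to the last departure

# Assumed distributions: exponential interarrivals (rate 1.0) and uniform service
# times (mean 0.8), so the server is busy about 80% of the time and the queue is stable.
jobs = simulate_fifo_queue(
    100_000,
    interarrival=lambda rng: rng.expovariate(1.0),
    service=lambda rng: rng.uniform(0.2, 1.4),
)
horizon = max(d for _, d in jobs)
lam = len(jobs) / horizon                          # observed arrival rate λ
W = sum(d - a for a, d in jobs) / len(jobs)        # mean time in system
L = time_average_in_system(jobs)                   # measured without using λ or W
print(f"L = {L:.4f}   λW = {lam * W:.4f}")
```

The variable is named `lam` because `lambda` is a reserved word in Python.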

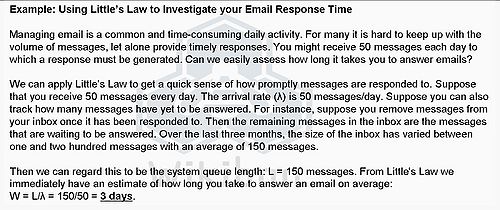

Figure 7 gives an example of using the formula to analyze your email system. It shows very neatly that, at a given rate of answering email, the only way to improve the response time is to reduce the queue length, and it tells you exactly what that queue length should be to achieve the desired response time.

Source: Little's Law, John D.C. Little and Stephen C. Graves, Massachusetts Institute of Technology, Usefulness of Little's Law in Practice – E-mail, downloaded 3/22/2011, modified by Wikibon 2011
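
The email reasoning can be reproduced by rearranging the formula as W = L/λ: at a steady rate of handling messages, the average wait is set entirely by the backlog, and the backlog that delivers a target wait is L = λW. The message rate, backlog, and target below are hypothetical numbers, not the figures used in Figure 7, and the sketch assumes messages are answered at the same average rate they arrive.

```python
def average_wait_days(backlog: float, handled_per_day: float) -> float:
    """W = L / λ: average days a message waits, given the backlog and the handling rate."""
    return backlog / handled_per_day

def backlog_for_target(target_wait_days: float, handled_per_day: float) -> float:
    """L = λW: the backlog that yields the target average wait."""
    return handled_per_day * target_wait_days

RATE = 40  # hypothetical: messages received, and answered, per day
print(average_wait_days(backlog=120, handled_per_day=RATE))            # current average wait, in days
print(backlog_for_target(target_wait_days=0.5, handled_per_day=RATE))  # backlog to aim for
```

In this hypothetical case, a 120-message inbox means a three-day average wait; answering within half a day on average requires working the backlog down to about 20 messages.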

Little’s Law was used extensively in operations research, where it is restated as Throughput = WIP (Work in Progress) / Cycle Time. It taught manufacturing engineers to focus on eliminating work waiting in queues in order to reduce cycle time as much as possible. This approach came to be known as lean manufacturing.