
Executive Summary

The 2011 International Supercomputing Conference (ISC’11), held June 19-23 in Hamburg, Germany, is a showcase for announcing new technologies and supercomputer success stories. The two biggest announcements were the award of up to 6 petaflop/s (1 petaflop/s = 10^15 floating-point operations per second), to be installed in 2011/2012 for the U.S. National Nuclear Security Administration weapons labs' Tri-Lab Linux Capacity Cluster 2 (TLCC2), and about 3 petaflop/s for the European SuperMUC Petascale System at the Leibniz Supercomputing Centre.

Mellanox was expected to announce its new-generation FDR InfiniBand and to announce wins at both TLCC2 and SuperMUC. Mellanox duly announced FDR and an initial win for the FDR part of the SuperMUC InfiniBand deployment (see "SuperMUC" section below for details). The real surprise is that Mellanox bid its new-generation FDR at TLCC2 with Appro but lost a benchmark to the current-generation QDR bid from QLogic.

It appears that, since Oracle’s investment in Mellanox, Mellanox has lost focus on its core HPC InfiniBand business.

US National Nuclear Linux Capacity Cluster 2

Appro won a competitive award for the Tri-Lab Linux Capacity Cluster 2 (TLCC2) against Atipa, Dell, Penguin, and SGI. Appro bid its GreenBlade™ servers together with Intel’s Sandy Bridge-EP processors. These HPC capacity clusters will provide simulation and computing capacity for the stockpile stewardship programs at all three National Nuclear Security Administration (NNSA) weapons labs: Lawrence Livermore, Los Alamos, and Sandia. Exploration of the potential for fusion as a power source is absorbing huge amounts of compute power. The TLCC2 award is a two-year contract to deliver about 3-to-6 petaflops of aggregate capacity to the three DOE labs.

Appro is taking some risk with the deployment schedule, because Intel’s new Xeon Sandy Bridge-EP processor is not due for volume shipment until Q3 2011. Appro will be hoping for a sufficient supply of early chips and no early snags.

The real surprise is that Mellanox bid its new FDR InfiniBand technology for the interconnect part of the contract but did not win. An Appro bake-off between the current generation of QLogic QDR InfiniBand and the new Mellanox FDR solution resulted in the QLogic QDR system outperforming the new Mellanox FDR equipment.

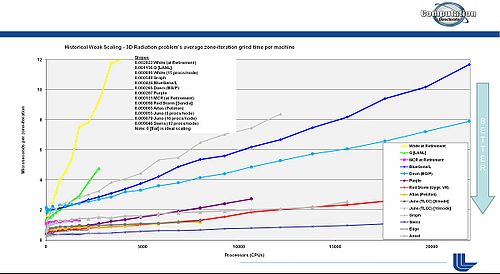

Lawrence Livermore has performed extensive tests of the scalability and performance of different systems, and the results are shown in Figure 1.

Source: Presentation on Scalable Linux Cluster, by Matt Leininger, Deputy for Advanced Technology Projects, Computational Directorate Lawrence Livermore National Laboratory, November 17, 2010

The best scalability (lowest initial overhead and lowest overhead slope) was found on the 23,000-core Sierra cluster at Lawrence Livermore, which uses QLogic QDR InfiniBand interconnect equipment. The Sierra overhead line is the lowest on the chart. It starts at 0.2 microseconds per zone iteration, has a slope of 0.000046 (with 12 processors per node), and never rises above 1 microsecond. According to Matt Leininger, Sierra is the most scalable system for the workhorse applications at Lawrence Livermore National Laboratory.
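The scalability comparison above treats each system's overhead as a straight line: an initial overhead (the intercept) plus a growth rate (the slope). Below is a minimal Python sketch of that linear model, using the Sierra intercept and slope quoted above. The chart is not reproduced here, so the quantity on its horizontal axis (called `x` below) is an assumption. The function name `overhead_us` is hypothetical and used only for illustration.

```python
# Linear overhead model: overhead(x) = intercept + slope * x.
# Intercept and slope are the Sierra figures quoted in the text.
# ASSUMPTION: x is whatever quantity is on the chart's horizontal axis;
# the chart is not reproduced, so its exact units are not stated here.

SIERRA_INTERCEPT_US = 0.2   # microseconds per zone iteration at x = 0
SIERRA_SLOPE = 0.000046     # overhead growth per unit of x


def overhead_us(x: float,
                intercept: float = SIERRA_INTERCEPT_US,
                slope: float = SIERRA_SLOPE) -> float:
    """Return the modeled overhead (microseconds per zone iteration) at x."""
    return intercept + slope * x


if __name__ == "__main__":
    for x in (0, 1_000, 10_000):
        print(f"x={x:>6}: {overhead_us(x):.3f} us per zone iteration")
```

If one system has both a lower intercept and a lower slope than another, its line stays below the other's at every non-negative x. This is why the text treats the two numbers together as the measure of scalability.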

SuperMUC

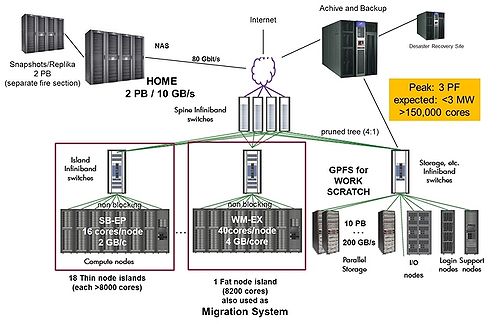

IBM has won the contract for the European SuperMUC Petascale System at the Leibniz Supercomputing Centre. SuperMUC will also use a new, revolutionary form of high-temperature cooling developed by IBM, in which active components such as processors and memory are cooled directly with water at temperatures of up to 45°C (113°F). Figure 2 gives the details of SuperMUC. The thin-node islands will use the latest Intel Sandy Bridge processors.

Source: http://www.lrz.de/services/compute/supermuc/systemdescription, downloaded June 20, 2011.

The SuperMUC interconnect is designed with both QDR (40Gb/s) InfiniBand and the new-generation FDR InfiniBand (56Gb/s). Mellanox is the first vendor to announce FDR InfiniBand, doing so at ISC’11, and has been selected for the early SuperMUC FDR interconnect implementation. SuperMUC is scheduled to enter production in August 2012 and is eventually planned to grow to 110,000 cores.
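The headline link rates are signaling rates, not usable data rates. QDR InfiniBand uses 8b/10b line encoding, while FDR moved to the more efficient 64b/66b encoding. As a result, the gap in usable bandwidth is wider than "40 versus 56 Gb/s" suggests. The sketch below computes the effective data rate. It assumes standard 4x links and per-lane signaling rates of 10 Gb/s (QDR) and 14.0625 Gb/s (FDR). The `LinkGeneration` class is a hypothetical helper, not part of any vendor API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkGeneration:
    """Hypothetical helper describing one InfiniBand link generation."""
    name: str
    lane_signal_gbps: float  # raw signaling rate per lane
    lanes: int               # 4x links assumed, the common configuration
    payload_bits: int        # data bits per encoded block
    block_bits: int          # total bits per encoded block on the wire

    @property
    def signal_gbps(self) -> float:
        # Aggregate signaling rate across all lanes.
        return self.lane_signal_gbps * self.lanes

    @property
    def data_gbps(self) -> float:
        # Usable data rate after line-encoding overhead
        # (before any protocol or software overhead).
        return self.signal_gbps * self.payload_bits / self.block_bits


QDR_4X = LinkGeneration("QDR 4x", 10.0, 4, 8, 10)      # 8b/10b encoding
FDR_4X = LinkGeneration("FDR 4x", 14.0625, 4, 64, 66)  # 64b/66b encoding

if __name__ == "__main__":
    for link in (QDR_4X, FDR_4X):
        print(f"{link.name}: {link.signal_gbps:.2f} Gb/s signaling, "
              f"{link.data_gbps:.2f} Gb/s data")
```

Run as a script, it reports 32 Gb/s of data for QDR 4x and about 54.5 Gb/s for FDR 4x. That is roughly 1.7 times the usable bandwidth, before protocol or software overhead.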

Has Mellanox Lost Focus on InfiniBand?

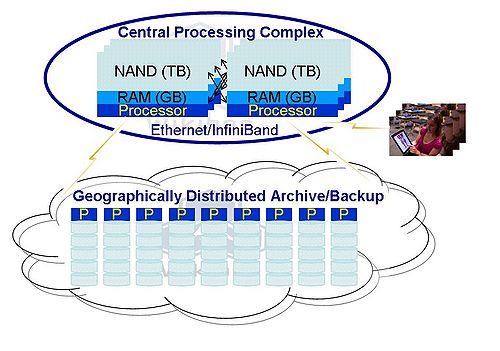

Previous Wikibon research has pointed out the importance of latency for InfiniBand networks. Wikibon believes that future high-performance technical and commercial systems will emphasize persistent non-mechanical storage very close to the processors, in very dense packaging, with very low-latency interconnects. For high-performance systems, InfiniBand is likely to be the interconnect of choice; for lower-performance, lower-cost systems, Ethernet will be the interconnect of choice. Figure 3 is a diagram of the key components of such systems.

Source: Little’s Law and Lean Computing, Wikibon 2011
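The cited research is framed around Little's Law, L = λW, which links concurrency (L), throughput (λ), and latency (W). The law gives one way to see why latency matters. If an application can keep only a fixed number of requests in flight, its achievable throughput falls in proportion as latency rises. The sketch below illustrates this relationship. The in-flight count and latency values are hypothetical, chosen only to show the effect, and the function names are illustrative.

```python
# Little's Law: L = lam * W
#   L   = average number of requests in flight (concurrency)
#   lam = average throughput (requests per second)
#   W   = average time each request spends in the system (latency, seconds)


def throughput_per_s(in_flight: float, latency_s: float) -> float:
    """Throughput sustainable with a fixed number of requests in flight."""
    return in_flight / latency_s


def in_flight_needed(throughput: float, latency_s: float) -> float:
    """Concurrency required to sustain a target throughput at a given latency."""
    return throughput * latency_s


if __name__ == "__main__":
    in_flight = 8  # hypothetical: messages an application keeps outstanding
    for latency_us in (1.0, 2.0):  # hypothetical end-to-end latencies
        rate = throughput_per_s(in_flight, latency_us * 1e-6)
        print(f"W = {latency_us} us -> {rate / 1e6:.1f} million messages/s")
```

At the same concurrency, doubling the latency halves the throughput. Recovering that throughput requires doubling the number of requests in flight, which is what `in_flight_needed` computes.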

Mellanox appears to have compromised on the underlying latency of its FDR 56Gb/s technology (160ns) in order to improve Ethernet latency, and the result is significantly worse latency than the 100ns of the current QDR generation. If Mellanox is targeting only bandwidth-driven HPC installations, its window will be short, because QLogic and others will soon have FDR technology of their own.
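Per-switch latency matters because it accumulates on every hop. The sketch below assumes that the 160 ns (FDR) and 100 ns (QDR) figures are per-switch port-to-port latencies and that the fabric is a fat tree. In a fat tree, a worst-case path climbs to the top switch level and back down, crossing 2 * levels - 1 switches. The sketch counts switching delay only; cables, host adapters, and software add more. The function names are hypothetical.

```python
# Per-switch latencies quoted in the text.
# ASSUMPTION: these are port-to-port switch latencies.
SWITCH_LATENCY_NS = {"QDR": 100, "FDR": 160}


def worst_case_switches(levels: int) -> int:
    """Switches crossed on a worst-case fat-tree path: up to the top level and back down."""
    if levels < 1:
        raise ValueError("a fat tree needs at least one switch level")
    return 2 * levels - 1


def switch_latency_ns(per_switch_ns: float, levels: int) -> float:
    """Switching latency on a worst-case path (excludes cables, adapters, software)."""
    return per_switch_ns * worst_case_switches(levels)


if __name__ == "__main__":
    for levels in (1, 2, 3):
        qdr = switch_latency_ns(SWITCH_LATENCY_NS["QDR"], levels)
        fdr = switch_latency_ns(SWITCH_LATENCY_NS["FDR"], levels)
        print(f"{levels}-level fat tree: QDR {qdr:.0f} ns, FDR {fdr:.0f} ns, "
              f"difference {fdr - qdr:.0f} ns")
```

For a three-level fat tree, the sketch gives 500 ns of worst-case switching latency with QDR and 800 ns with FDR. The 60 ns per-switch difference therefore grows to 300 ns across the fabric.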

In the InfiniBand space, latency is king, and Mellanox seems to have lost focus. As we have said before, Mellanox must make sure that its relationship with Oracle (Oracle has taken a 10% stake in the company) does not dictate its HPC InfiniBand strategy. Mellanox has announced a converged network strategy that includes InfiniBand, and it has placed emphasis on very good Ethernet latency. This strategy might well suit Oracle and smaller general-purpose systems, but it is a risky strategy for Mellanox's installed HPC base.

Action Item: Mellanox has had a great history of providing excellent InfiniBand solutions. However, with Mellanox's push into Ethernet, InfiniBand latency appears to have taken a back seat. Users planning to use InfiniBand for very high-performance systems would be wise to take a wait-and-see attitude to the Mellanox FDR announcements, unless they have an immediate need for high-bandwidth InfiniBand. In the meantime, they should watch how Mellanox addresses the latency issue and whether other vendors deliver significantly lower-latency FDR InfiniBand implementations.
