“When you add uncertainty to projects, they tend to cost more and take longer to complete.”

It’s been a commonly accepted industry maxim that virtual desktops might improve management, enhance user productivity, facilitate BYOD, enable superior DR, and so on – but forget about saving money.

VDI’s failure to reduce costs stems from the inability of traditional storage to adequately mitigate the uncertainty inherent in a virtual desktop deployment. A conventional “converged infrastructure” solution can make matters even worse. A hyper-converged infrastructure, on the other hand, leverages the uncertainty to achieve a strong ROI and short pay-back period.

The Importance of Certainty for VDI Success

Virtualizing servers is fairly predictable for most organizations. IT can use various tools to gauge the number of likely candidates up front, and purchase the required infrastructure to enable an orderly transition to a virtualized environment. Ideally, users are not impacted other than by improved performance and reduced or eliminated downtime from server maintenance or failures.

Virtualizing desktops is far more challenging. Applications or usage patterns can easily change over time, requiring unexpected resources. And a VDI initiative might have hundreds or thousands of users, each with their own expectations, experiences and perceptions. Many years ago I saw an SBC (desktop virtualization with TS/RDSH) project fail because a user blamed a broken keyboard on the technology.

Virtual desktops generate write-heavy workloads made up of random 4K I/O operations. This makes achieving consistent performance difficult: IOPS requirements can swing wildly depending upon usage patterns, time of day and the type of applications being accessed. Boot storms, anti-virus scans and patch update cycles can all put sudden loads on the infrastructure and impact general performance.
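To see why these swings matter for sizing, the following rough sketch compares steady-state demand with a boot storm. The per-desktop IOPS values and the fractions of desktops involved are hypothetical placeholders, not measurements from any deployment, and `aggregate_iops` is an illustrative helper rather than part of any product.

```python
def aggregate_iops(desktops, iops_per_desktop, active_fraction=1.0):
    """Total IOPS demanded by the share of desktops active in a given phase."""
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    return desktops * active_fraction * iops_per_desktop


if __name__ == "__main__":
    desktops = 1000            # hypothetical deployment size
    steady_iops = 10           # hypothetical IOPS per desktop in normal use
    boot_iops = 100            # hypothetical IOPS per desktop while booting

    # Assume 75% of desktops are in normal use, or 25% boot at the same time.
    steady = aggregate_iops(desktops, steady_iops, active_fraction=0.75)
    storm = aggregate_iops(desktops, boot_iops, active_fraction=0.25)

    print(f"Steady state: {steady:,.0f} IOPS")
    print(f"Boot storm:   {storm:,.0f} IOPS ({storm / steady:.1f}x steady state)")
```

Even with only a quarter of the desktops booting at once, the peak in this made-up scenario is several times the steady-state load, which is the kind of gap that storage sized for the average cannot absorb.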

Users tend to be averse to change in even the best of cases. If their performance varies under VDI, even if only during occasional boot or write storms, they often become quite vocal and can slow or kill a virtual desktop initiative. Ironically, it is better to have consistently sub-par virtual desktop performance than performance that fluctuates randomly between sub-par and good.

Other variables can also affect acceptance of virtual desktops. Many laptop users, for example, may be happy to exchange their devices for a virtual desktop that follows them around, particularly between offices or between their homes and offices. But other laptop users may require or simply demand a truly mobile device.

As a result of the uncertainty regarding both the ultimate VDI user population and performance requirements, IT generally deploys a virtual desktop environment over a number of years, making adjustments as appropriate. IT commonly migrates users to virtual desktops during their normal PC or laptop refresh cycles by either locking down their devices to act like thin-client terminals, or replacing them with thin-client or zero-client devices.

The Challenge with Traditional Storage

Implementing virtual desktops over time for an ultimately unknown population does not mesh well with traditional storage infrastructures. Organizations must either purchase storage arrays up-front with far more capacity than required, or risk forklift upgrades down the road. The initial capacity must be adequate to handle any future growth in the user population, new applications or other unanticipated resource requirements, as well as spikes in intermittent resource utilization.

Most organizations, not unexpectedly, do a poor job of estimating the storage requirements up-front. A recent Gartner study of 19 organizations that deployed either VMware View or Citrix XenDesktop showed that 17 of the 19 spent more on storage than expected, and that storage, on average, accounted for 40 to 60 percent of the entire VDI budget. A Gartner analyst commented that the only reason two of the organizations managed to stay within their storage budget was that they purchased so much of it up-front.

High initial storage cost combined with the difficulty in forecasting the parameters of a VDI deployment in terms of users and resource requirements years down the road tends to create project inertia. Adding to the consternation is the obvious disadvantage of consuming the excess storage capacity at a future date when it will be markedly inferior in comparison to new technologies.

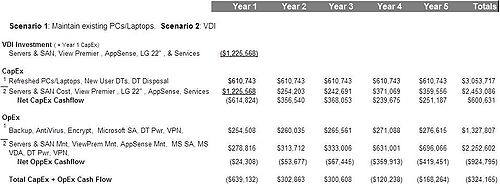

In the example below, based upon the ROI analysis that a Nutanix partner recently presented to a client, purchasing the SAN up-front leads to a projected negative 5-year cash flow (based on “hard” CapEx & OpEx) of <$324,165>.

Example 1: SAN + Servers

Assumptions

- The organization was considering deploying 2,560 virtual desktops (75% concurrent) over 5 years at a rate of 606 virtual desktops per year for the first three years, and 521 per year for the last two years (to match the refresh rate of 5 years for PCs and 3 years for laptops).

- This local government organization spends $750 per PC (including taxes, shipping, administration, set-up time, etc.) and $1,815 per laptop (including taxes, etc. as well as docking station and monitor). PC and laptop disposal costs are $100 each.

- Existing PCs and laptops are locked down as thin-clients, and kept for 3 years after their normal refresh cycle, then replaced with 22” LG integrated zero-client monitors. The LGs are purchased for new users. Microsoft SA costs $50/user/yr. and VDA $100/user/yr.

- Servers cost $11,512 and the required SAN costs $722,000.
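The rollout arithmetic in the first assumption can be sketched as follows. This assumes the 606 and 521 figures are per-year rates and that the 75% concurrency applies uniformly each year; the names used are illustrative. Taken at face value, the yearly rates sum to 2,860 desktops rather than the 2,560 quoted, so the sketch simply uses the yearly figures as stated.

```python
import math
from itertools import accumulate

# Yearly rollout as stated in the assumptions (interpreted as per-year rates).
YEARLY_ROLLOUT = [606, 606, 606, 521, 521]
CONCURRENCY = 0.75  # share of deployed desktops assumed to be in use at once


def rollout_table(yearly, concurrency):
    """Return (year, cumulative desktops, concurrent desktops) for each year."""
    rows = []
    for year, total in enumerate(accumulate(yearly), start=1):
        # Round up so that a fractional desktop still counts as one session.
        rows.append((year, total, math.ceil(total * concurrency)))
    return rows


if __name__ == "__main__":
    for year, total, concurrent in rollout_table(YEARLY_ROLLOUT, CONCURRENCY):
        print(f"Year {year}: {total:>5} deployed, about {concurrent:>5} concurrent")
```

The concurrent count, not the deployed count, is what the back-end infrastructure has to serve at any one time.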

Converged Infrastructure Compounds the Challenge

As IT staffs virtualize their data centers, they tend to encounter a phenomenon known as the Jevons Paradox, which states that as technology increases the efficiency of resource usage, the demand for that resource increases. IT staffs not only wind up managing far more virtual machines than the physical servers they used to administer, they must now also contend with new management tools, virtualization hosts, vSwitches, vAdapters, and so on. Troubleshooting complexity increases because multiple vendors play an integral part in the infrastructure.

A virtual data center also upends the traditional stovepipe IT organizational model: the server, network and storage teams can no longer work effectively in silos. VDI requires further collaboration with desktop and application teams. A 2011 InfoWorld article stated that IT collaboration was the #1 obstacle to VDI adoption.

Virtualization customers have demanded solutions that reduce management complexity, enhance collaboration, and help eliminate the inevitable finger-pointing among different manufacturers. The storage manufacturers have responded. All the leading players have a version of what they refer to as a “converged infrastructure” that combines compute, storage and network resources either as products or as reference architectures.

Vblocks, manufactured by VCE (the partnership between EMC, Cisco, VMware and Intel), are the best-known and most popular converged infrastructure product, but EMC hedges its bets with VSPEX, which is a reference architecture. NetApp has FlexPod, IBM has PureFlex and PureFlex Express, HP has BladeSystem Matrix, Hitachi has Unified Compute Platform Express, and Dell has both Active Infrastructure and VRTX.

But calling these solutions “converged” is a misnomer. A better description would be “adjacent infrastructure” since the underlying compute and storage tiers must still be managed separately, and they still require an intermediate network to move data continuously between them. While these pre-packaged compute and storage solutions can substantially reduce the time required to implement the back-end VDI infrastructure, they can also exacerbate the difficulties organizations face in moving ahead with VDI. Now IT must purchase not just the storage, but in some cases many of the server resources up front as well.

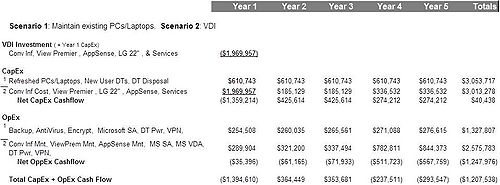

The following ROI analysis is for the same organization as shown above, but now reflects a much larger initial investment for a conventional “converged” infrastructure solution, and a negative 5-year cash flow of <$1,207,538>.

Example 2: Conventional “Converged Infrastructure”

Changing the ROI of VDI with Hyper-Convergence

Google pioneered the hyper-converged infrastructure in the cloud provider space, and it is now utilized by every leading cloud provider. Hyper-convergence enables consolidation, linear scalability, fractional consumption and an abstraction of the intelligence from traditional hardware arrays into software-defined storage. The compute and storage tiers combine into a single consolidated infrastructure.

Nutanix brought this model to the enterprise by leveraging the hypervisor to virtualize the storage controllers themselves. Since every server is a virtual storage controller, the crippling boot and write storms common with traditional storage arrays are eliminated. The local disk combined with integrated flash and auto-tiering of workloads provides for a consistent user experience and, consequently, much greater user acceptance of the virtual desktop environment.

The Nutanix Virtual Computing Platform is managed by the virtualization administrators through the virtualization platform console, eliminating the requirement for storage and compute administrator collaboration. Unlike with traditional arrays, storage administrators do not continually field requests from the desktop team for more LUNs or IOPS.

Most importantly, Nutanix enables the VDI environment to expand one server at a time. The much lower initial investment enables a quick payback period. And the overall spend is also reduced since Moore’s Law ensures declining costs relative to VM density.
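The effect of buying one server at a time can be illustrated with a small comparison of cumulative capital spend. This is only a sketch: the desktops-per-node density and the node price are hypothetical placeholders, not Nutanix figures, and the model ignores the year-over-year price declines mentioned above. The $722,000 SAN price and the yearly rollout are taken from the Example 1 assumptions.

```python
import math


def incremental_capex(desktops_added_per_year, desktops_per_node, node_cost):
    """Cumulative spend when nodes are bought only as desktops are added."""
    cumulative = []
    total_desktops = 0
    for added in desktops_added_per_year:
        total_desktops += added
        # Buy just enough nodes to host every desktop deployed so far.
        nodes_needed = math.ceil(total_desktops / desktops_per_node)
        cumulative.append(nodes_needed * node_cost)
    return cumulative


def upfront_capex(years, upfront_cost):
    """Cumulative spend when all capacity is purchased in year 1."""
    return [upfront_cost] * years


if __name__ == "__main__":
    rollout = [606, 606, 606, 521, 521]  # yearly rollout from Example 1
    san_cost = 722_000                   # SAN only; servers are not included
    density = 120                        # hypothetical desktops per node
    node_cost = 25_000                   # hypothetical price per node

    grow = incremental_capex(rollout, density, node_cost)
    fixed = upfront_capex(len(rollout), san_cost)
    for year, (g, f) in enumerate(zip(grow, fixed), start=1):
        print(f"Year {year}: pay-as-you-grow ${g:,} vs up-front ${f:,}")
```

The comparison is not like-for-like, since the up-front figure excludes servers; the point is the shape of the spend curve over time, not the totals.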

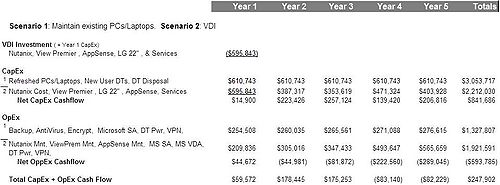

Utilizing Nutanix rather than the conventional storage and converged infrastructure solutions in the examples provides a projected 5-year positive cash flow of $247,902, which equates to an ROI of 42% and a 10.9-month payback.

Example 3: Nutanix Hyper-Convergence

Adding projected 5-year IT staff savings of $2,372,348 from virtual desktops brings the ROI up to 298% and reduces the payback period to 9.6 months.
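For readers who want to run this kind of analysis with their own numbers, ROI and payback can be sketched with the simplest common definitions. The partner's model is not reproduced in this article, so these formulas are an assumption and may not match how the ROI and payback figures above were derived; the inputs in the usage lines are made up.

```python
def simple_roi(total_benefit, total_cost):
    """Net gain divided by cost; 0.5 means a 50% return."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def payback_months(monthly_net_cash_flows):
    """First month in which cumulative cash flow is no longer negative.

    Returns None if the outlay is not recovered within the given horizon.
    """
    cumulative = 0.0
    for month, flow in enumerate(monthly_net_cash_flows, start=1):
        cumulative += flow
        if cumulative >= 0:
            return month
    return None


if __name__ == "__main__":
    # Made-up inputs: a 100,000 outlay in month 1, then 12,000 saved per month.
    outlay = 100_000
    flows = [-outlay] + [12_000] * 59
    benefit = sum(f for f in flows if f > 0)
    print(f"ROI over 5 years: {simple_roi(benefit, outlay):.0%}")
    print(f"Payback reached in month {payback_months(flows)}")
```

This version reports payback in whole months; a model that interpolates within the month would give fractional results like the ones quoted above.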

The Year of VDI?

Despite years of hoopla and hype, VDI has yet to go mainstream. IT organizations are justifiably wary of the unknown, and the rigidity of traditional storage arrays leaves them particularly vulnerable when facing the vagaries of migrating to virtual desktops.

The pay-as-you-grow model and consistent user experience enabled by hyper-convergence now allow organizations to move into VDI in a manner in which they’re comfortable: starting slowly and expanding over time as the user acceptance and cost savings are proven. This capability is rapidly being discovered by organizations across the globe, and is contributing to increased adoption of virtual desktops.

Disclosure: The author works for Nutanix. Thanks to Anjan Srinivas (@anjans) for review and suggestions.
