
Storage Peer Incite: Notes from Wikibon’s June 23, 2009 Research Meeting

NetApp is one of the entrepreneurial storage vendors that sprang up in the late 1990s. It recently concluded its annual Analyst Days, and this newsletter comes from a Peer Incite discussing that event. Overall NetApp presented a strong picture of innovative technology, particularly focused on space efficiency. However, the company also surprised us with what it did not talk about.

First, its senior management basically allowed analysts to infer that the Cloud does not require any new and different storage technology. The message was that NetApp sells to service providers and is well-positioned in the cloud for 'traditional' data centers. This seems surprising, since Cloud Storage is at present in its infancy and focused on consumers, and seems to promise a near-term high-growth market providing storage to SMBs.

Second, it did not emphasize green computing at a company and product level, even though it actually has a strong story to tell in terms of storage consolidation, increased utilization, and consequent energy, cooling, and data center space savings. This story is demonstrated by its most prominent user story -- its own (see article below). Given that U.S. federal policies toward carbon emissions and energy conservation are changing rapidly under the Obama Administration, this will become a powerful selling point that NetApp's marketing people appear to be ignoring so far.

However, leaving these aside, NetApp presents a strong story with respect to space efficiency, claiming more than 100% utilization in some customer accounts. While we suspect this is not the norm, the portfolio of space-efficient features is innovative and users should investigate the applicability of these in their specific environments. G. Berton Latamore


Select observations and reflections from NetApp analyst days 2009

Strong Story for Storage for Server Virtualization

NetApp continues its strong position in server virtualization solutions, citing VMware, Citrix, Microsoft and Cisco as partners. NetApp:

- Has 12,051 joint NetApp & VMware ESX customers

- Guarantees a 50% reduction in storage capacity for virtual environments (read the fine print)

- Is number 2 in iSCSI storage for virtual servers in the US (Dell is number 1)

- Was awarded Citrix Ready solution of the year

- Has been named the Microsoft 2009 Partner of the Year, in the Advanced Infrastructure, Storage Solutions category. NetApp was chosen out of an international field of top Microsoft partners for delivering market-leading customer solutions for Microsoft Hyper-V environments.

Moreover, NetApp has tools that:

- Manage VMs and storage together

- Empower virtual server administrators to:

  - Provision storage

  - Perform backups & recovery

  - De-duplicate data

  - Clone virtual machines

- Integrate with VMware

  - SnapManager for Virtual Infrastructure, Rapid Cloning Utility, Site Recovery Manager

- Integrate with Citrix & Hyper-V

  - Essentials

Indeed, NetApp claims to be the first to have this degree of integration with Citrix and Hyper-V, going so far as to have these vendors use NetApp’s APIs. What’s more, NetApp is ready with its tools for VMware’s recently announced vSphere.

Clearly, NetApp remains a leader in this market segment.

SANscreen – A Hidden Gem

SANscreen came from the acquisition of Onaro. Having started out as a great SAN auditing tool and evolved under Onaro into an even better storage change management system, this tool now provides:

- Real-time visibility into the “storage services” delivered by a storage infrastructure.

- Management of storage as a true end-to-end service with path-based service policies, violations, and change-planning capabilities.

- Improved resource utilization and application performance created by aligning storage resources with application service levels.

- Global visibility into storage resource allocation.

- Visibility of VM configurations.

Interestingly, while SANscreen supports other vendors' storage, it does not yet support NetApp filers. Even so, it must now compete for the channels' attention along with all the other NetApp products.

One-on-One with Dave Hitz, Founder and EVP

Speaking with Dave Hitz is always a stimulating pleasure, and our conversation with him at Analyst Days was no exception:

- Data Reduction: How much more can we get? Given that data de-duplication is reaching the mainstream and in-line data compression is becoming viable for primary storage, e.g., Storwize, what else can technology do? Can we get much more? Dave believes that there is still room for application-aware data reduction, especially for virtual machine images. And of course, versus fixed-length chunks, higher data reduction ratios are possible with a variable-length chunk approach like that of Data Domain.

- Reducing the chance of silent data corruption: Jeff Whitehead, CTO and co-founder at Zetta, recently blogged about the near certainty of silent data corruption as disk storage installations grow into petabytes. Dave pointed out that this was why NetApp introduced double parity RAID (RAID-DP) and also that data deduplication, by its nature, reduces that probability.

- How to Castrate a Bull: Dave recently released his new book by that title and gave attendees a copy. It is delightful, insightful and highly recommended. It chronicles his journey with NetApp and is peppered with wonderful tidbits such as rules for managing people and problem solving:

  - “Who owns the problem?

  - Do I trust them?

  - How do I find an owner I trust”

  - For the answers, read the book.

NetApp’s Vision

Conspicuously missing this year was any kind of presentation on the future of storage. NetApp did spend a lot of time explaining that it already was a big Cloud player in that its products were widely deployed by Cloud providers, and that it did not intend to address the Cloud any further. When the CEO, Dan Warmenhoven, was asked whether NetApp had a skunk works project somewhere for the Cloud, his response was “I wouldn’t know because I’m the CEO.”

Action item: If NetApp is going to grow revenues beyond taking market share in its traditional markets, it should consider borrowing a page from Onaro/SANscreen and expanding its deep experience with server virtualization into more products that support heterogeneous storage products. Indeed, server virtualization is so hot today that NetApp must seize this opportunity and grow away from just storage and into information infrastructure. NetApp should also develop a better Cloud story.

NetApp is missing a golden green opportunity

NetApp’s customer-facing green strategy, or lack thereof, was one of my few disappointments at the recent NetApp analyst day. At the end of the sessions, the impression was that such a strategy was not on the company's radar, which I have some difficulty accepting.

Green IT was not totally ignored. Dave Robbins, CTO, IT Infrastructure, gave an excellent overview of NetApp Green IT activities from an IT practitioner’s perspective. His efforts and those of his team are to be applauded.

Dave’s team has accomplished impressive things:

- By implementing VMware they reduced 4,600 x86-based clients to 230, saving $1.3M in power and cooling and reducing the number of racks needed by 182.

- In their Oracle environment they consolidated midrange servers onto logically partitioned enterprise-class servers, which added 300% capacity to production servers and increased the development landscape by 143%. Replication time was also cut from 1-2 days to 1 hour (a reported 2400% improvement). The consolidation avoided a 74% increase in floor space and an 81% increase in power, the latter sparing the power grid an additional draw of 236 kW.

Both of these achievements are a great illustration of what desire and a bit of planning can do.

So what was achieved as a direct result of NetApp technology? First, it made possible a consolidation strategy that reduced storage systems from 50 to 10. This freed up 19.5 racks in the data center and reduced power consumption by 41,184 kWh per month, or 32%. Air conditioning was reduced by 94 tons, which translates to eliminating approximately 1M lbs of CO2 emissions annually, and as a bonus they increased storage utilization by 60%.

In today’s popular parlance, NetApp is eating its own dog food, very successfully. But how is this great work being exploited? Where is NetApp’s customer-facing green strategy? If there is such a strategy, it is not obvious. The product presentations have no mention of how NetApp is driving environmental and energy efficiency, even when discussing data reduction technologies.

Although I am a green advocate, I am also the first to admit that green per se does not necessarily win business. But energy costs, space efficiencies, waste management, etc., are increasingly becoming relevant even for the pragmatist whose sole worry is operational costs. Even without the pending legislation Washington has in store for us, a strong, well-articulated green strategy is a valuable sales asset, and its importance will continue to increase with the proliferation of worldwide environmental legislation. EMC, Dell, HP and IBM have all received this message.

Action item: The NetApp IT folks have established solid green credentials in the industry. They were, and probably still are, involved with the Data Center Energy Forecast Report sponsored by Accenture, Silicon Valley Leadership Group, DOE and many others. In short, they have a thought leadership position in Green IT.

Why NetApp is not exploiting the great work being done by Robbins and crew, particularly when showcasing NetApp technology, is difficult to understand. The NetApp marketing team has been given a golden opportunity, and it would be a shame to let that gift go to waste.

Will NetApp get acquired?

At last week’s 2009 analyst meeting, Dan Warmenhoven, NetApp’s CEO, addressed industry pundits at the company’s Sunnyvale headquarters. As he did last year, Warmenhoven indicated the firm is doubling down on its bet that pure play storage companies will continue to dominate the field. This was an interesting topic to us here at Wikibon as we’ve recently written about EMC’s prospects in this regard.

NetApp is a $3.4B company with 8,000 employees and a good balance sheet. But it’s a ‘tweener’ in the IT sector. Not huge like HP and IBM, but much larger than smaller pure plays like 3PAR and Compellent. In his keynote, Warmenhoven raised the question that he said he’s frequently asked: “Who is going to buy NetApp?” His answer is essentially no one, because no company wants to own or can afford to own NetApp.

Warmenhoven put up the following chart in defense of his thesis:

The graphic shows the market values of leading IT players and certain potential acquisition candidates. Here’s what Warmenhoven said about some of the companies on this list of likely NetApp pursuers.

- EMC would face anti-trust issues.

- Dell – NetApp is too rich for Dell to absorb.

- HP is happy with its existing offerings.

- IBM is already selling NetApp and making good money, why buy the company?

- Cisco really needs VMware, not NetApp.

As such, says NetApp’s CEO, the company will remain independent and be a buyer, not a seller.

On balance, Wikibon agrees, perhaps for somewhat different reasons. With a market cap of between $6B and $7B, NetApp in our view is too expensive or not a good fit for prospective buyers. Here’s our take on why companies aren’t likely to shell out the $8B that would be required to acquire NetApp:

- EMC – Too much overlap. Spending $8B to fill a storage virtualization hole makes no sense.

- Dell – Oil and water

- HP – Unlike Warmenhoven, we don’t see what HP has to be so happy about with its current offerings. It resells Hitachi at the high end, and the EVA and MSA platforms are unremarkable. HP’s virtualization strategy is fragmented, its software is a marketecture band-aid made to look like a complete solution, and its sales force consistently loses to EMC and NetApp outside the HP base. Having said that…it appears HP is happy with this position and is more likely to invest in software and services.

- IBM invests big money in software and services, as that’s where the company’s margin and differentiation model thrive. XIV and Diligent are about as much as Palmisano would allow IBM storage to spend.

- Cisco – There’s no overlap with NetApp, and Cisco is building out its stack. But Cisco’s objectives are to create a data center stack that can efficiently communicate with resources across the network and become the dominant player in the data center of the future. Why does Cisco need to pay $8B to own proprietary storage technology in its stack? It doesn’t.

So we're left agreeing with Warmenhoven: NetApp will remain independent for a while. NetApp has had a huge run with WAFL (Write Anywhere File Layout). It has enabled a single architecture, industry-leading storage virtualization, the industry's most flexible architecture for a widely installed platform, ease of support for multiple protocols, a single set of software, and a #1 spot in the market for space-efficient storage.

The question we have is how much further can NetApp go? Are we witnessing a trend similar to the minicomputer days, where the likes of Prime Computer, Wang Labs and Data General, while highfliers in their day, were big but not attractive enough growth prospects to be acquired (notwithstanding DG’s smart move to re-invent the company as a storage player and subsequently sell to EMC)?

How NetApp behaves in the near term depends on whether it wins the battle to acquire Data Domain. If it succeeds, it will be intensely distracted for 18 months with a weakened balance sheet. If it loses to EMC, we expect NetApp to go shopping for a solution to help it compete in the backup market. Maybe acquiring backup software for the portfolio would be a good start.

Action item: Users should expect NetApp to remain independent for the foreseeable future. NetApp will likely continue to acquire technologies to incrementally improve efficiency, but the company is not expected to radically change its fundamental shape in the near-to-mid-term.

NetApp and storage efficiency

The core of NetApp’s product strategy is the ONTAP proprietary operating system, built around the WAFL (write anywhere file layout) file system. The operating system started with support for NFS and has expanded into a unified storage system supporting CIFS, iSCSI and FC. This unified storage architecture means that the same storage efficiency and management functionality is available for all data and is a primary thrust of NetApp's value proposition to clients.

The key storage factors that NetApp users should consider with regard to storage efficiency are outlined in the table of contents of this Alert as follows:

Virtualization savings

The WAFL file system is broken into small 4K blocks that only consume “real” storage capacity when data is written. This allows much more efficient space utilization than conventional arrays where data must be written contiguously, causing large gaps in physical storage. As well, with WAFL, data can be moved within an array without disruption. This and the other features listed below significantly improve utilization and ease of management.

- Virtualization enables storage admins to make logical copies of data without consuming physical capacity (zero capacity snaps). Traditional arrays make snapshot copies by writing data to the old location and copying original data to a new snapshot location (copy on write). In this arrangement the original copy is the current "master" and is then copied to create versions representing a point in time. NetApp's approach (like others using virtualization) writes new data to a new logical snap location (once) and then makes two logical versions, one representing the original data and the other representing the point in time. This approach is much more space efficient when making many copies (i.e., more than two).
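The difference between the two snapshot styles described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not NetApp's implementation: the class names, the per-snapshot preserve areas in the copy-on-write model, and the `physical_writes` counter are all hypothetical and exist only to count how many block writes one overwrite costs when several snapshots are active.

```python
class CopyOnWriteVolume:
    """Toy copy-on-write volume: originals are copied out before an overwrite."""

    def __init__(self, blocks):
        self.live = list(blocks)
        self.snapshots = []       # one dict per snapshot: block index -> preserved data
        self.physical_writes = 0  # block writes performed after creation

    def snapshot(self):
        self.snapshots.append({})

    def write(self, idx, data):
        # Assumption: each snapshot keeps its own preserve area, so the
        # original block is copied once per snapshot that still needs it.
        for snap in self.snapshots:
            if idx not in snap:
                snap[idx] = self.live[idx]
                self.physical_writes += 1
        self.live[idx] = data  # then overwrite in place
        self.physical_writes += 1

    def read_snapshot(self, n, idx):
        return self.snapshots[n].get(idx, self.live[idx])


class RedirectOnWriteVolume:
    """Toy redirect-on-write volume: new data goes to a new location, once."""

    def __init__(self, blocks):
        self.store = list(blocks)             # append-only physical blocks
        self.live = list(range(len(blocks)))  # logical block -> physical index
        self.snapshots = []                   # each snapshot is a copy of the pointer map
        self.physical_writes = 0

    def snapshot(self):
        self.snapshots.append(list(self.live))  # pointers only, no data copied

    def write(self, idx, data):
        self.store.append(data)               # single write to a fresh location
        self.live[idx] = len(self.store) - 1
        self.physical_writes += 1

    def read_snapshot(self, n, idx):
        return self.store[self.snapshots[n][idx]]


def run(volume):
    """Take three snapshots, overwrite one block, report block writes."""
    for _ in range(3):
        volume.snapshot()
    volume.write(0, "new0")
    return volume.physical_writes


cow = CopyOnWriteVolume(["a0", "a1", "a2", "a3"])
row = RedirectOnWriteVolume(["a0", "a1", "a2", "a3"])
cow_writes = run(cow)
row_writes = run(row)
print("copy-on-write block writes:", cow_writes)
print("redirect-on-write block writes:", row_writes)
```

In this model every snapshot still returns the original block, but the redirect-on-write volume performs one block write per overwrite regardless of how many snapshots exist, while the copy-on-write volume's cost grows with the number of snapshots.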

Wikibon has previously investigated the benefits of virtualization and believes that the median gain is an efficiency improvement of 60%, or overall capacity savings of 40%. However, the overall reduction in the cost of storage is less because the number of I/Os that have to be processed remains the same, and the storage management requirement is unchanged. Using a ratio that the disks cost about ½ of the total array spend, the cost savings are about 30% from virtualization and the additional features enabled.

Deduplication savings

NetApp offers a data de-duplication feature which is designed to reduce primary storage capacity (as opposed to most de-dupe implementations such as those from Data Domain which are aimed at backup). The de-duplication feature of WAFL allows the identification of duplicates of a 4K block at write time (creating a weak 32-bit digital signature of the 4K block, which is then compared bit-by-bit to ensure that there is no hash collision). The work is done in the background if controller resources are sufficient. The overall saving varies by customer, and because of controller prioritization, it is not achieved every time. A figure of 30% could reflect the saving in disk capacity. As the data is rehydrated when it is read, there is no improvement in IO or bandwidth. The overall impact on cost is about 15%.
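The fingerprint-then-verify idea can be illustrated with a minimal sketch. It assumes `zlib.crc32` as a stand-in for the weak 32-bit signature (the article does not say which function is used), and it de-duplicates inline for simplicity rather than in the background. `DedupStore` and `write_stream` are hypothetical names.

```python
import zlib

BLOCK_SIZE = 4096  # 4K blocks, as described in the text


class DedupStore:
    """Toy block store: weak 32-bit fingerprint plus full content comparison."""

    def __init__(self):
        self.blocks = []          # physical blocks actually stored
        self.by_fingerprint = {}  # fingerprint -> indexes of physical blocks

    def write(self, block):
        """Store one block; return the index of the physical block backing it."""
        fp = zlib.crc32(block)  # assumed stand-in for the weak signature
        for idx in self.by_fingerprint.get(fp, []):
            # A matching fingerprint is only a hint. Comparing the full
            # contents ensures a hash collision never merges different data.
            if self.blocks[idx] == block:
                return idx
        self.blocks.append(block)
        new_idx = len(self.blocks) - 1
        self.by_fingerprint.setdefault(fp, []).append(new_idx)
        return new_idx


def write_stream(store, data):
    """Split data into 4K blocks and return the logical-to-physical map."""
    return [
        store.write(data[off:off + BLOCK_SIZE])
        for off in range(0, len(data), BLOCK_SIZE)
    ]


store = DedupStore()
data = b"A" * BLOCK_SIZE + b"B" * BLOCK_SIZE + b"A" * BLOCK_SIZE
block_map = write_stream(store, data)
capacity_saving = 1 - len(store.blocks) / len(block_map)
print(block_map, f"{capacity_saving:.0%} of blocks de-duplicated")
```

Reads follow `block_map` back to the stored blocks, so the full data is reassembled on every read, which is why the text notes no improvement in I/O or bandwidth.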

Future savings

The architecture of the hardware, ONTAP and WAFL system can allow a future implementation of compression for NetApp. A reasonable implementation of compression could deliver about a 2:1 reduction in capacity. As there is a reduction in the amount of data, there would also be some reduction in the overheads on the controller for data movement and cache efficiency. This is estimated at 20%. The overall reduction would be 35%.
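The 35% figure can be reproduced from the numbers in this section, assuming the roughly 50/50 split between disk cost and controller cost that Wikibon uses elsewhere in this analysis. The function name and parameters below are hypothetical, and the weighting is an assumed reading of how the estimate was derived.

```python
def overall_cost_saving(disk_saving, controller_saving=0.0, disk_share=0.5):
    """Weight per-component savings by each component's share of array cost.

    disk_share=0.5 reflects the assumption that disks are about half of
    total array spend, with the controller making up the rest.
    """
    controller_share = 1.0 - disk_share
    return disk_share * disk_saving + controller_share * controller_saving


# Compression: 2:1 capacity reduction (50% of disk) plus 20% controller saving.
compression = overall_cost_saving(disk_saving=0.50, controller_saving=0.20)

# De-duplication: 30% of disk capacity, no controller saving.
dedup = overall_cost_saving(disk_saving=0.30)

print(f"compression: {compression:.0%}, de-duplication: {dedup:.0%}")
```

Under the same assumption, the de-duplication case works out to the 15% cost impact quoted for that feature.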

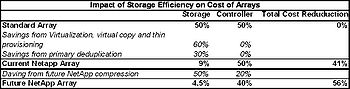

Overall Savings

Table 1 shows Wikibon's estimate of the overall cost savings users should expect, relative to traditional arrays and the storage efficiencies produced by NetApp. Currently the median savings in storage costs is projected at about 41%. Compression, if and when it arrives, will make a significant improvement in storage efficiency, increasing the savings to 56% of today's storage costs.

The table uses a standard array with no space efficiency features as the baseline and estimates that about 50% of the cost is allocated to control function and 50% to storage devices. By applying storage virtualization, virtual copies and thin provisioning, Wikibon research indicates users will achieve an additional 60% savings in storage costs (from improved utilization). Data de-duplication applied to primary storage will provide an additional 30%.

We believe this represents a reasonable estimate of the efficiency of a 'typical' NetApp array, whereby the storage costs of NetApp arrays would be reduced by 41% as compared to traditional arrays (50% x 60% x 30% = 9%; 100%-50%-9%=41%).
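One reading that arrives close to the 41% figure is to compound the per-feature cost savings quoted earlier in this article (about 30% for virtualization and about 15% for de-duplication). This is an assumption about how the savings combine, not a statement of how Table 1 was built, and `combined_saving` is a hypothetical helper.

```python
def combined_saving(savings):
    """Compound independent savings: each one applies to what is left."""
    remaining = 1.0
    for s in savings:
        remaining *= (1.0 - s)
    return 1.0 - remaining


# About 30% from virtualization, then about 15% from de-duplication.
current = combined_saving([0.30, 0.15])
print(f"combined saving: {current:.1%}")
```

Compounding rather than adding matters here: simply summing 30% and 15% would overstate the result, because de-duplication only acts on the cost that remains after virtualization.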

Action item: NetApp has one of the best track records of delivering space efficient storage. The savings are significant as compared to traditional arrays and consumers of these products should assess the potential of a NetApp approach. However, NetApp is running out of headroom to improve storage efficiency and existing NetApp customers should assume that compression will be the last significant storage efficiency boost delivered.