Introduction

Two very different approaches were recently announced to address the problem of virtualization of big data in general, and Hadoop in particular:

- Compute Engine was announced by Google at the June 2012 Google I/O developer conference in San Francisco.

- Project Serengeti was announced by VMware at the June 2012 Hadoop Summit in San Jose.

Both are intended to ease the provisioning of large numbers of nodes in a Big Data environment and to avoid the problems of setting up large numbers of physical systems, for example in a Hadoop environment. The challenge for virtualization is to minimize its inherent CPU and IO overheads in performance-critical Big Data environments.

Google Compute Engine

Google's Compute Engine provides 700,000 virtual cores that users can spin up and tear down very rapidly for Big Data applications in general and MapReduce and Hadoop in particular, all without setting up any data center infrastructure. It works with the Google Cloud Storage service to provide data services such as encryption of data at rest. It is a different service from Google App Engine, but complementary to it.

Compute Engine uses the KVM hypervisor on Linux. In discussions with Wikibon, Google pointed out the improvements that it had contributed to the open source KVM code to increase performance and security in a multi-core, multi-thread Intel server environment. This allows virtual cores (one thread, one core) to be used as the building block for spinning up very efficient virtual nodes.

To help with data ingestion, Google is offering access to the full resources of its private networks. This enables large-scale movement of ingested data across the network at very high speed and allows replication to a specific data center. The location(s) can be defined, allowing compliance with specific country or regional requirements to retain data within geographic boundaries (within the US and Europe). If the user can network the data cost effectively and with sufficient bandwidth to a Google Edge (usually a short distance), Google network services will take over data services from there.

The Google Hadoop service can use the MapR framework in a similar way to the MapR service for Amazon. This provides an improved availability and management framework. At the June 2012 Google I/O conference, John Schroeder, CEO and founder of MapR, presented a demonstration running Terasort on a 5,024-core Hadoop cluster with 1,256 disks on the Google Compute Engine service. The run completed in 1 minute 20 seconds, at a total cost of $16. He compared this with a 1,460 physical server environment with over 11,000 cores, which would take months to set up and would cost more than $5 million.
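As a rough sanity check on the figures quoted above, the sketch below works out the core-hours consumed by the demonstration and the implied price per core-hour. It assumes the run time is 1 minute 20 seconds (80 seconds) and that the $16 covers all 5,024 cores for exactly that interval. The source does not break the cost down, so the result is only an illustration, not a published Google price. The function names are hypothetical.

```python
# Figures quoted in the text for the MapR Terasort demonstration.
CORES = 5_024
RUN_SECONDS = 80        # assumption: the run time is 1 minute 20 seconds
TOTAL_COST_USD = 16.0   # assumption: covers every core for the run only


def core_hours(cores: int, seconds: float) -> float:
    """Total compute consumed, expressed in core-hours."""
    return cores * seconds / 3600.0


def cost_per_core_hour(total_cost: float, cores: int, seconds: float) -> float:
    """Implied price per core-hour under the assumptions above."""
    return total_cost / core_hours(cores, seconds)


if __name__ == "__main__":
    used = core_hours(CORES, RUN_SECONDS)
    rate = cost_per_core_hour(TOTAL_COST_USD, CORES, RUN_SECONDS)
    print(f"core-hours used: {used:.1f}")
    print(f"implied cost per core-hour: ${rate:.3f}")
```

Under these assumptions the run consumes about 112 core-hours, which implies roughly $0.14 per core-hour. Storage, network, and any minimum billing increments are ignored.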

As a demonstration this is impressive. Of course, Terasort is a highly CPU-intensive workload, which uses cores very efficiently and can be effectively parallelized. Users should demand other benchmark results that include more IO-intensive use of Google Cloud Storage to confirm that the service performs well across a spectrum of big data applications.

Wikibon also discussed whether Google would provide other data services to allow joining of corporate data with other Google-derived and Google-provided datasets. Google indicated that it understood the potential value of this service and that other service providers such as Microsoft Azure were offering these services. Wikibon expects that data services of this type will be introduced by Google.

There is no doubt that Google is leveraging its infrastructure expertise, is seriously addressing the big data market, and wants to compete strongly in the enterprise space. The Google network, data replication, and encryption services reflect this drive to differentiate and compete strongly with service providers such as Amazon and Joyent.

VMware Project Serengeti

VMware’s Serengeti project (see Note1 in the Footnotes) is designed to allow vSphere to become the provisioning and management platform for Hadoop applications. This helps to solve the complications of provisioning multiple Hadoop implementations (from Cloudera, Greenplum, Hortonworks, IBM, and MapR) across multiple physical nodes. The Serengeti project will provide a toolkit for HDFS (Hadoop Distributed File System) and Hadoop MapReduce components under the Apache 2.0 license on the GitHub hosting platform. This will allow the node environment to be abstracted from the physical layer; a sensible direction for future development would be to separate the compute layer from the data layer.

VMware has also updated the Spring for Apache Hadoop project to enable Hadoop as an analytics tool in Java applications. This allows Hadoop components such as MapReduce, Hive, and Pig to be configured and executed from Java applications created using the Spring framework.

The potential benefits of running Hadoop under vSphere are significant; setting up hardware is a time-consuming and error-prone operation. VMware allows rapid cloning of identical nodes and provides services for rapidly restarting or replacing failed nodes. vSphere is the premier management platform for a virtualized production environment and will allow Serengeti to be deployed as a virtual appliance. Users should expect significant savings from more efficient use of compute resources, faster provisioning, sharing servers across multiple workloads, and the use of a familiar management platform.

However, the potential overheads of virtualization are also significant. vSphere Enterprise Edition is required on every node and would probably double the cost of each node. Although VMware enterprise agreements allow some of the first year to be free, this benefit will evaporate at the next annual renegotiation. In addition, virtualization has overheads that are significant in high-performance environments with large amounts of data and where elapsed time is crucial. CPU overheads from virtualization are in the range of 10%-15%, with potentially more severe consequences from the randomization of data when IO is shared across multiple virtual machines. Wikibon has referred to this as the IO virtualization tax. There is one Serengeti benchmark study referenced in the Footnotes (Note2), but it is less than conclusive. VMware will need to provide guidance and improvements to manage and minimize these overheads.
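To make the combined effect of these two factors concrete, the sketch below estimates the cost of a virtualized node per unit of useful CPU work, relative to a bare-metal node. It is a deliberately simple model built only on the figures in the paragraph above: a node cost multiplier of 2.0 for the vSphere license and a CPU overhead of 10%-15%. It ignores the IO virtualization tax and any savings from sharing servers across workloads. The function name and the model itself are illustrative assumptions, not a Wikibon or VMware formula.

```python
def relative_cost_per_unit_work(node_cost_multiplier: float,
                                cpu_overhead: float) -> float:
    """Cost per unit of useful CPU work, relative to a bare-metal node.

    node_cost_multiplier: virtualized node cost / bare-metal node cost
                          (2.0 if licensing doubles the node cost).
    cpu_overhead:         fraction of CPU lost to virtualization (0.10-0.15).
    """
    if not 0.0 <= cpu_overhead < 1.0:
        raise ValueError("cpu_overhead must be in the range [0, 1)")
    useful_fraction = 1.0 - cpu_overhead
    return node_cost_multiplier / useful_fraction


if __name__ == "__main__":
    # Assumed inputs taken from the paragraph above.
    for overhead in (0.10, 0.15):
        ratio = relative_cost_per_unit_work(2.0, overhead)
        print(f"CPU overhead {overhead:.0%}: {ratio:.2f}x bare-metal cost")
```

With these inputs the model gives roughly 2.2x to 2.4x the bare-metal cost per unit of CPU work, before any offsetting gains from higher utilization are considered.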

The Spring for Apache Hadoop project will provide an interesting long-term integration option as the current mainly exploratory Hadoop environments mature into full production environments.

Conclusions

With Compute Engine, Google has announced a serious attempt to provide virtual online resources at an aggressive price. In particular, Google is offering the ability to move data at large scale (including replication) across the Google network at very high speed, assuming the user can bring the data to a Google Edge. The Google Hadoop service uses the MapR framework, which provides a strong management component. Google will need to provide proof points that IO-intensive workloads will also run efficiently and cost-effectively on the Google big data services.

Serengeti is a first step for VMware, which has indicated that it will continue to invest to make Hadoop more virtualization-aware and to improve the implementation. Users with serious big data processing needs will be looking for serious reductions in vSphere costs and for ways that VMware can reduce the virtualization overheads for IO- and compute-intensive workloads (e.g., by implementing a virtual=real (V=R) option as found in IBM’s LPAR implementation). The current Serengeti code can be useful for big data test and development environments where virtual resources can be shared. It can also be useful in small-scale production workloads where job elapsed times are not critical, and where virtual compute resources are available at marginal cost outside of prime production workload schedules. With the current vSphere Enterprise Edition costs and the big data capabilities that Serengeti currently provides, it is difficult to see a business case for high-performance production environments dedicated to big data.

Action Item: CTOs should make themselves familiar with both the Google and VMware virtual offerings, which both aim to improve the ease of setup and execution of Hadoop. The VMware offering will be of interest where the data to be analyzed is local and where the infrastructure can be shared across multiple workloads. The Google approach will be attractive where the data can be easily brought to a Google Edge, and where the flexibility to attach very large numbers of high-performance virtual nodes is important to reduce job completion times. The Google approach will become even more attractive if it offers access to Google data services (e.g., search extracts), which could offer the ability to add further value when analyzed in conjunction with corporate data.

CTOs and CIOs (and VMware) should be aware that the pay-by-the-hour model offered by Google (and other service providers) will be very attractive to most lines of business, which will bypass IT if the IT services are not competitive in performance, flexibility, and price.

Footnotes: Note1 Serengeti is an excellent name, evoking an image of herds of Hadoop elephants roaming the savannah.

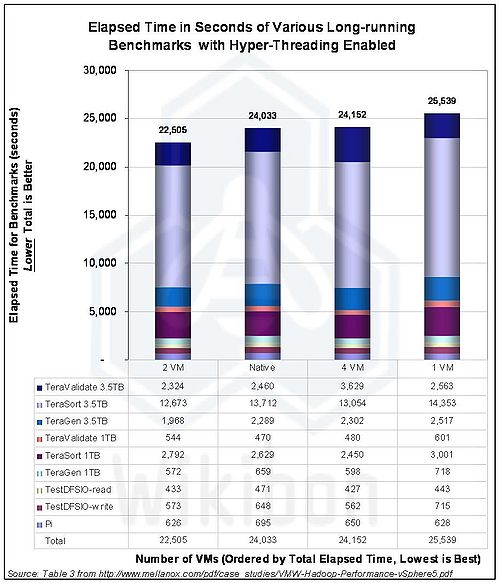

Note2 The benchmark results in Figure 2 below are mainly Terasort, which Google and MapR used for the Compute Engine benchmark discussed above. Terasort is mainly processor bound. VMware claims that this shows that the VMware overhead is low, and that virtualization could sometimes be a performance enhancement. Another interpretation is that providing more VMs (nodes) shows that Hadoop does a good job of sharing a CPU-intensive workload across the additional nodes, and this could be useful. However, it does not show what would happen in a typical production environment when mixed with different variable VM workloads on the same machines, or what happens when IO resources are shared and randomized. One major factor is left out of the analysis: the cost of vSphere Enterprise Edition running on each node. This would double the cost of the configuration. As the traditional Hadoop environment is good at scheduling work across many nodes, VMware would lose many of the traditional benefits of increased processor utilization.

Source: http://www.mellanox.com/pdf/case_studies/VMW-Hadoop-Performance-vSphere5.pdf