Demartek, an industry analyst firm, attended the FCoE Test Drive Event, June 4 – 6, 2008, observing and auditing the results. This report provides Demartek’s observations, impressions and conclusions.

Introduction

Fibre Channel over Ethernet (FCoE) is a relatively new proposed standard currently being developed by the INCITS T11 committee. FCoE depends on Converged Enhanced Ethernet, a new form of Ethernet whose enhancements make it a viable transport for storage traffic and storage fabrics without the overhead of TCP/IP. These enhancements include Priority-based Flow Control (PFC), Enhanced Transmission Selection (ETS) and Congestion Notification (CN).
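To make Priority-based Flow Control more concrete, the sketch below builds the MAC Control payload of a PFC pause frame in the form later standardized in IEEE 802.1Qbb: opcode 0x0101, a class-enable vector, and eight per-priority pause timers measured in quanta of 512 bit times. Placing FCoE traffic on priority 3 is a common convention but is an assumption here, and the function names are hypothetical. The point of the sketch is that one priority (storage) can be paused while the other seven (LAN) keep flowing, which is what lets lossless storage traffic share a link with ordinary Ethernet.

```python
import struct

PFC_OPCODE = 0x0101   # MAC Control opcode for PFC (IEEE 802.1Qbb)
QUANTUM_BITS = 512    # one pause quantum = 512 bit times


def build_pfc_payload(pause_quanta):
    """Build the 20-byte PFC MAC Control payload.

    pause_quanta maps priority (0-7) -> pause time in quanta (0-65535).
    Priorities not listed are left unpaused (timer 0, enable bit clear).
    """
    enable_vector = 0
    timers = [0] * 8
    for priority, quanta in pause_quanta.items():
        if not 0 <= priority <= 7:
            raise ValueError("priority must be 0-7")
        if not 0 <= quanta <= 0xFFFF:
            raise ValueError("quanta must fit in 16 bits")
        enable_vector |= 1 << priority  # mark this priority's timer as valid
        timers[priority] = quanta
    # Big-endian: opcode, class-enable vector, then eight 16-bit timers
    return struct.pack(">HH8H", PFC_OPCODE, enable_vector, *timers)


def pause_duration_seconds(quanta, link_bps):
    """Convert a pause time in quanta to seconds at a given link rate."""
    return quanta * QUANTUM_BITS / link_bps


# Hypothetical example: pause only priority 3 (assumed FCoE class) for the maximum time
payload = build_pfc_payload({3: 0xFFFF})
print(payload.hex())
print(pause_duration_seconds(0xFFFF, 10e9))
```

At 10 Gbps one quantum is 51.2 ns, so the maximum timer value pauses a single priority for roughly 3.4 ms while the remaining priorities are unaffected.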

FCoE is designed to use the same operational model as native Fibre Channel technology. Services such as discovery, world-wide name (WWN) addressing, zoning and LUN masking all operate the same way in FCoE as they do in native Fibre Channel.

FCoE running on 10-Gbps Enhanced Ethernet extends the reach of Fibre Channel (FC) storage networks, allowing them to connect virtually every datacenter server to a centralized pool of storage. With FCoE, FC traffic is mapped directly onto Enhanced Ethernet, so storage and network traffic can be converged onto one set of cables, switches and adapters, reducing cable clutter, power consumption and heat generation. Storage management through an FCoE interface has the same look and feel as storage management through traditional FC interfaces.
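The mapping of FC traffic onto Enhanced Ethernet can be sketched as simple encapsulation: an unmodified FC frame is wrapped in an Ethernet frame carrying its own Ethertype. The layout below follows the FCoE frame format later ratified in FC-BB-5 (Ethertype 0x8906; a 14-byte FCoE header holding a 4-bit version and the start-of-frame code; the FC frame including its CRC; then an end-of-frame code and three reserved bytes). Because the specification was still a draft when this report was written, treat the field layout and the SOF/EOF code values as assumptions. The MAC addresses and function name are hypothetical, the 802.1Q VLAN tag (whose priority field carries the PFC class) is omitted for brevity, and the Ethernet FCS is left to the adapter hardware.

```python
import struct

FCOE_ETHERTYPE = 0x8906  # Ethertype assigned to FCoE
SOF_I3 = 0x2E            # assumed code for start-of-frame "SOFi3"
EOF_T = 0x42             # assumed code for end-of-frame "EOFt"


def encapsulate_fc_frame(dst_mac: bytes, src_mac: bytes, fc_frame: bytes,
                         sof: int = SOF_I3, eof: int = EOF_T) -> bytes:
    """Wrap an FC frame (header + payload + CRC) in an untagged FCoE Ethernet frame.

    Returns the frame without the Ethernet FCS, which the adapter appends.
    """
    if len(dst_mac) != 6 or len(src_mac) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    eth_header = dst_mac + src_mac + struct.pack(">H", FCOE_ETHERTYPE)
    # FCoE header: version 0 in the top 4 bits, reserved zero bits, SOF in the last byte
    fcoe_header = bytes(13) + bytes([sof])
    # Trailer: EOF code followed by 3 reserved bytes
    trailer = bytes([eof]) + bytes(3)
    return eth_header + fcoe_header + fc_frame + trailer


# Hypothetical usage: a dummy FC frame (24-byte header, 7-byte payload, 4-byte CRC placeholder)
fc_frame = bytes(24) + b"payload" + bytes(4)
frame = encapsulate_fc_frame(bytes.fromhex("020000000001"),
                             bytes.fromhex("020000000002"), fc_frame)
print(len(frame))  # 67
```

A full-size FC frame (24-byte header, up to 2,112 bytes of payload and a 4-byte CRC) plus the 18 bytes of FCoE header and trailer is larger than the standard 1,500-byte Ethernet payload, which is why FCoE links are configured for "baby jumbo" frames of roughly 2.5 KB.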

The FCoE Test Drive event, held June 4-6, 2008, in San Diego, brought together representatives from several hardware and software companies to test their server, software and storage solutions using FCoE technology. The event took place one week after Demartek’s own FCoE testing, which is described in the “Demartek FCoE First Look Report – June 2008.”

The companies present and participating in the FCoE Test Drive event were:

- CA

- Cisco

- DataDirect Networks

- EMC

- FalconStor

- Finisar

- HP

- Infortrend

- LSI

- Microsoft

- NetApp

- Promise Technology

- QLogic

- Symantec

Test Description and Results

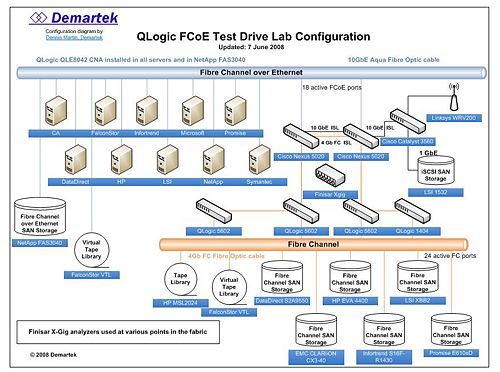

At the Test Drive event, an FCoE fabric was created with two Cisco Nexus 5020 10-Gbps switches at its center. These switches support Enhanced Ethernet, FCoE and native FC traffic. Several servers, equipped with QLogic QLE8042 Converged Network Adapters (CNAs), connected to the FCoE fabric using the FCoE protocol. These CNAs also provided the servers’ LAN connections; LAN and FCoE traffic flowed over the same port on each CNA. Two storage systems, the NetApp FAS3040 and the FalconStor VTL, were also connected to the fabric using native FCoE. Several other Fibre Channel storage systems were connected to the fabric through QLogic 4-Gbps FC switches. The FCoE and FC fabrics were joined by connecting two QLogic 5602 FC switches to the FC ports on the Cisco Nexus 5020 switches.
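One way to see why every server could reach every storage system, FCoE or native FC, is to model the fabric as a graph and run a breadth-first search from a server. The report does not give the exact cabling, so the node names and links below are hypothetical: only two of the ten servers are modeled, and the link between the two QLogic 5602 switches is an assumption. Physical reachability is only a prerequisite; zoning and LUN masking still decide which LUNs each host may actually use.

```python
from collections import deque

# Hypothetical links; the report does not specify the exact cabling.
LINKS = [
    ("server-1", "nexus5020-a"), ("server-2", "nexus5020-b"),
    ("netapp-fas3040", "nexus5020-a"), ("falconstor-vtl", "nexus5020-b"),
    ("nexus5020-a", "qlogic5602-a"), ("nexus5020-b", "qlogic5602-b"),
    ("qlogic5602-a", "qlogic5602-b"),  # assumed link joining the FC side
    ("fc-array-1", "qlogic5602-a"), ("fc-array-2", "qlogic5602-b"),
]


def build_graph(links):
    """Undirected adjacency sets from a list of (a, b) links."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def reachable(graph, start):
    """Breadth-first search: the set of nodes reachable from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


graph = build_graph(LINKS)
storage = {"netapp-fas3040", "falconstor-vtl", "fc-array-1", "fc-array-2"}
print(sorted(storage & reachable(graph, "server-1")))
```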

The ten servers listed above were brought by the vendors indicated and were used for testing connections to various devices in the fabric.

The resulting fabric allowed the servers to view all the storage, including FCoE and FC, as one fabric. All the zoning in the switches was performed in the same manner as with a standard FC fabric. The LUN masking for the storage units was performed in the same way as with standard FC storage. Once the zoning and LUN masking were configured, the hosts viewed the LUNs on the storage, both FCoE and FC, as simply LUNs that were available to them.
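Because zoning and LUN masking work the same way over FCoE as over native FC, the access decision can be sketched independently of the transport: a host sees a LUN only if some zone contains both the host’s port and the storage port, and the array’s masking table lists the host for that LUN. The WWPNs, zone names and data structures below are hypothetical, and real switches and arrays implement these checks in their own ways; the sketch models only the logic.

```python
# Hypothetical WWPNs (not taken from the test event)
HOST_1 = "10:00:00:00:00:00:00:01"
HOST_2 = "10:00:00:00:00:00:00:02"
ARRAY_A = "50:00:00:00:00:00:00:0a"
ARRAY_B = "50:00:00:00:00:00:00:0b"

# Switch zoning: zone name -> member WWPNs
ZONES = {
    "zone_host1_arrayA": {HOST_1, ARRAY_A},
    "zone_host2_arrayB": {HOST_2, ARRAY_B},
}

# Array LUN masking: (target WWPN, LUN number) -> initiators allowed to access it
LUN_MASKS = {
    (ARRAY_A, 0): {HOST_1},
    (ARRAY_A, 1): {HOST_2},  # masked to HOST_2, but HOST_2 is not zoned to ARRAY_A
    (ARRAY_B, 0): {HOST_2},
}


def zoned_together(initiator, target, zones):
    """True if at least one zone contains both ports."""
    return any(initiator in members and target in members for members in zones.values())


def visible_luns(initiator, zones, masks):
    """LUNs an initiator can see: it must share a zone with the target AND pass the mask."""
    return sorted(
        (target, lun)
        for (target, lun), allowed in masks.items()
        if initiator in allowed and zoned_together(initiator, target, zones)
    )


print(visible_luns(HOST_1, ZONES, LUN_MASKS))  # [('50:00:00:00:00:00:00:0a', 0)]
print(visible_luns(HOST_2, ZONES, LUN_MASKS))  # [('50:00:00:00:00:00:00:0b', 0)]
```

HOST_2 never sees LUN 1 on ARRAY_A: the array’s mask would allow it, but the switch zoning does not place the two ports together, so both checks must pass.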

Because FCoE fabrics run on Enhanced Ethernet, iSCSI storage is also supported and was tested during this event. The iSCSI storage was connected to a 1-Gbps Ethernet switch, which was connected to the FCoE fabric with a 10-Gbps ISL.

Some minor protocol issues were discovered using Finisar analyzers, and some device management applications had minor issues relating to driver levels.

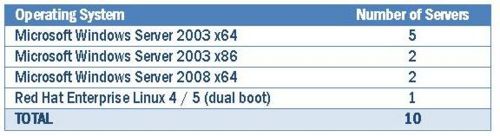

Ten application servers were used during the testing. The mixture of operating systems running on these servers is described in the table below.

Six of the servers used IOMeter, an open-source I/O load generator, to run basic I/O tests. In addition, each backup software vendor ran its own backup and recovery software, including backup and recovery tasks using data in native, compressed and encrypted formats. For the most part, the testers said their applications worked as expected from the host servers, although some device-specific applications revealed issues that need additional work. Many participants were pleasantly surprised at how well things worked, given how new FCoE technology is.

The CNAs used during these tests operate at 4 Gbps (future CNAs may operate at higher speeds). Participants who measured performance found the FCoE fabric comparable to a 4-Gbps FC fabric, and those who tested both reported nearly identical results.
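For context on what "comparable to a 4-Gbps FC fabric" means in throughput, the arithmetic below converts serial line rates into data rates. The line rates and block codings used (4.25 GBd with 8b/10b coding for 4-Gbps FC, 10.3125 GBd with 64b/66b coding for 10-Gbps Ethernet) come from the respective physical-layer standards rather than from the test results, and the function name is hypothetical.

```python
def data_rate_mb_s(line_rate_gbaud, payload_bits, coded_bits):
    """Data rate in MB/s for a serial link, given its line rate and block coding."""
    data_bits_per_s = line_rate_gbaud * 1e9 * payload_bits / coded_bits
    return data_bits_per_s / 8 / 1e6


# 4-Gbps Fibre Channel: 4.25 GBd line rate with 8b/10b coding
fc4 = data_rate_mb_s(4.25, 8, 10)
# 10-Gbps Ethernet (10GBASE-R): 10.3125 GBd line rate with 64b/66b coding
eth10 = data_rate_mb_s(10.3125, 64, 66)
print(round(fc4), round(eth10))  # 425 1250
```

Frame headers and other protocol overhead reduce these figures further; 4-Gbps FC is commonly quoted as about 400 MB/s per direction. If the 4-Gbps CNA is the limiting element in both configurations, that ceiling is the same whether FC frames travel natively or inside FCoE.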

Some of the storage systems present during the tests made storage (LUNs) available to other servers in the fabric, while others were used only to test their own FCoE drivers. The servers were a mixture of Dell, HP, IBM and Supermicro systems. The charts below show the combinations of servers and storage that were tested, including the storage tested with each application server.

Conclusion

In our opinion, FCoE is ready to be tested by customers who want to run their LAN and storage traffic over a single 10-Gbps converged fabric. Although the specification is not yet final and some minor protocol issues remain, we believe these will be resolved relatively quickly.

We have run applications in an FCoE environment and found that they ran correctly and were unaware of the underlying hardware interfaces. While we understand that enterprise infrastructures change slowly, over a period of years, we believe that within a relatively short time FCoE will take its place as a viable, stable, enterprise-ready protocol, especially as enterprises consider and deploy 10-Gbps Enhanced Ethernet networks.


Footnotes: Reprinted with permission © 2008 Demartek

See the full article at Demartek