With David Vellante

With the inaugural O'Reilly Media Strata conference, the topic of Enterprise Big Data is coming into sharper focus. When O'Reilly initiates coverage of a topic through an event like O'Reilly Strata, you can be sure the content will be well thought out, rich, relevant and visionary in nature. A key theme that emerged from the event was that Big Data is not just about cool technologies and Web 2.0 companies experimenting with gigantic data sets. Rather, it's about defining new value streams based on leveraging information. The confluence of enterprise IT, cloud computing and Big Data is combining with mobility and emerging social trends to re-shape the technology industry this decade.

Big-data Background

Big Data is emerging from the realms of science projects at Web companies to help companies like telecommunication giants understand exactly which customers are unhappy with service and what processes caused the dissatisfaction, and predict which customers are going to change carriers. To obtain this information, billions of loosely-structured bytes of data in different locations need to be processed until the needle in the haystack is found. The analysis enables executive management to fix faulty processes or people and possibly reach out to retain the at-risk customers. The real business impact is that big data technologies can do this in weeks or months, four or more times faster than traditional data warehousing approaches.

The IT techniques and tools to execute big data processing are new, very important and exciting.

Enterprise Big Data

Big data is data that is too large to process using traditional methods. It originated with Web search companies that had the problem of querying very large distributed aggregations of loosely-structured data. Google developed MapReduce to support distributed computing on large data sets on computer clusters.

Inspired by Google's MapReduce and Google File System (GFS) papers, Hadoop is an Apache project, written in Java, that is being built and used by a global community of contributors. Yahoo! has been the largest contributor to the project and uses Hadoop extensively across its businesses on 38,000 nodes.

Doug Cutting, who created Hadoop, has meanwhile joined Cloudera, a commercial Hadoop company that develops, packages, supports and distributes Hadoop (similar to the Red Hat model for Linux), making it accessible to Enterprise IT.
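The MapReduce model mentioned above can be illustrated with a minimal, single-process sketch. This is not Hadoop's API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `run_job`) and the call records are hypothetical, chosen to echo the telecommunications example. A real cluster would run the map and reduce steps in parallel on many nodes.

```python
from collections import defaultdict

# Hypothetical call records: (customer_id, call_outcome).
records = [
    ("alice", "dropped"),
    ("bob", "ok"),
    ("alice", "dropped"),
    ("carol", "dropped"),
    ("bob", "ok"),
]


def map_phase(record):
    """Emit (customer, 1) for every dropped call; emit nothing otherwise."""
    customer, outcome = record
    if outcome == "dropped":
        yield customer, 1


def shuffle(pairs):
    """Group values by key, as a framework would between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values for one key into a single result."""
    return key, sum(values)


def run_job(records):
    """Run map, shuffle and reduce in sequence on one machine."""
    mapped = (pair for record in records for pair in map_phase(record))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


dropped_calls = run_job(records)
print(dropped_calls)
```

Because each map call looks at one record and each reduce call looks at one key, both phases can in principle be spread across as many machines as the data requires.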

Big-data Definition [1]

Big data has the following characteristics:

- Very large, distributed aggregations of loosely structured data – often incomplete and inaccessible:

  - Petabytes/exabytes of data,

  - Millions/billions of people,

  - Billions/trillions of records,

  - Loosely-structured and often distributed data,

  - Flat schemas with few complex interrelationships,

  - Often involving time-stamped events,

  - Often made up of incomplete data,

  - Often including connections between data elements that must be probabilistically inferred,

- Applications that involve Big-data can be:

  - Transactional (e.g., Facebook, PhotoBox), or

  - Analytic (e.g., ClickFox, Merced Applications).

Components of Big-data Processing

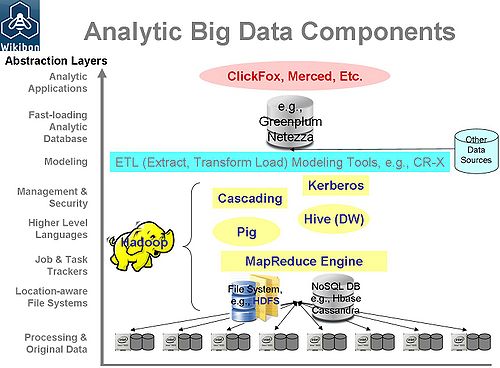

Big-data projects have a number of different layers of abstraction, from abstraction of the data through to running analytics against the abstracted data. Figure 1 shows the common components of analytical Big-data and their relationship to each other. The higher-level components help make big data projects easier and more productive. Hadoop is often at the center of Big-data projects, but it is not a prerequisite.

The components of analytical Big-data shown in Figure 1 include:

- Packaging and support of Hadoop, including MapReduce (essentially the compute layer of big data), by organizations such as Cloudera.

- File systems such as the Hadoop Distributed File System (HDFS), which manages the retrieval and storing of data and metadata required for computation. Other file systems or databases such as HBase (a NoSQL tabular store) or Cassandra (a NoSQL eventually-consistent key-value store) can also be used.

- Instead of writing in Java, higher-level languages such as Pig (part of Hadoop) can be used, simplifying the writing of computations.

- Hive is a Data Warehouse layer built on top of Hadoop, developed by Facebook programmers.

- Cascading is a thin Java library that sits on top of Hadoop that allows suites of MapReduce jobs to be run and managed as a unit. It is widely used to develop special tools.

- Semi-automated modeling tools such as CR-X allow models to develop interactively at great speed, and can help set up the database that will run the analytics.

- Specialized scale-out analytic databases such as Greenplum or Netezza, with very fast loading, load and reload the data for the analytic models.

- ISV big data analytical packages such as ClickFox and Merced run against the database to help address the business issues (e.g., the customer satisfaction issues mentioned in the introduction).

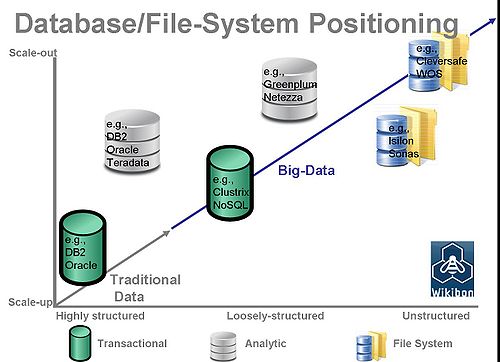

Transactional Big-data projects cannot use Hadoop, as it is not real-time. For transactional systems that do not need a database with ACID [2] guarantees, NoSQL databases can be used, though there are constraints such as weak consistency guarantees (e.g., eventual consistency) or restricting transactions to a single data item. For big-data transactional SQL databases that need the ACID guarantees, the choices are limited. Traditional scale-up databases are usually too costly for very large-scale deployment, and don't scale out very well. Most social media databases have had to hand-craft solutions. Recently a new breed of scale-out SQL databases, such as Clustrix, has emerged with architectures that move the processing next to the data (in the same way as Hadoop). These allow greater scale-out capability.

This area is extremely fast growing, with many new entrants into the market expected over the next few years.
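The eventual-consistency constraint mentioned above can be made concrete with a small sketch. It is a toy model, not the behavior of any particular NoSQL product: the `Replica` class, the `sync` function, the version numbers and the key name are all assumptions made for illustration. A write lands on one replica first, and a read from another replica may return stale data until the replicas exchange updates.

```python
class Replica:
    """Hypothetical replica holding key -> (version, value)."""

    def __init__(self):
        self.store = {}

    def write(self, key, value, version):
        """Accept a write only if it is newer than what is already stored."""
        current = self.store.get(key)
        if current is None or version > current[0]:
            self.store[key] = (version, value)

    def read(self, key):
        """Return the locally known value, which may be stale or missing."""
        entry = self.store.get(key)
        return entry[1] if entry else None


def sync(a, b):
    """Exchange entries in both directions so each replica keeps the newest version."""
    for key, (version, value) in list(a.store.items()):
        b.write(key, value, version)
    for key, (version, value) in list(b.store.items()):
        a.write(key, value, version)


r1, r2 = Replica(), Replica()
r1.write("status:alice", "at-risk", version=1)

stale = r2.read("status:alice")   # r2 has not yet seen the write
sync(r1, r2)
fresh = r2.read("status:alice")   # after syncing, r2 has caught up

print(stale, fresh)
```

An application built on such a store has to tolerate the window in which `stale` differs from `fresh`, which is the trade-off accepted in exchange for scale-out.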

Big-data Analytics Complements Data Warehouse

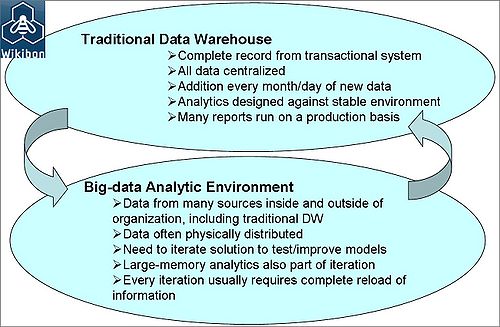

Figure 2 shows the big differences between the traditional Data Warehouse (DW) and big data analytics.

- With traditional DW the sources are mainly internal, well known and structured, data fields have known (and often complex) interrelationships, and the data model is stable. Most of the data is historical, with 10% or so reflecting new transactions that are being added to the DW. Many reports are done on a production basis. The main elapsed time is devoted to the production runs to produce the datamarts and reports.

- With Big-data analytics, the problems are very different. First, the data can be anywhere and is of unknown quality and/or utility. The initial iterative stages will be to find out about the data and create and improve a data model. In the introductory example, the user may be investigating the causes of customer churn. One hypothesis could be that poor call-center experience is a major contributor. Every interaction a customer has had with the organization is traced to find common factors between the customers that stay and the customers that leave. The working principle behind Hadoop and all big data is to move the query to the data to be processed, not the data to the query processor. Every step of the process means the database is changing and needs to be reloaded. The analytic models are larger and require very large amounts of memory to operate.

- The database requirements are very different. There is a big emphasis on databases such as Greenplum which feature very fast data loading. The data is laid out differently across the nodes of a scale-out topology to reduce the data movement and locking, and queries are moved to the nodes, giving the database engine much higher scalability on commodity hardware.
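The principle of moving the query to the data, described in the list above, can be sketched as follows. This is a single-process illustration under stated assumptions: the `nodes` dictionary stands in for partitions stored on separate machines, and `local_query` and `average_calls_of_churned` are hypothetical names. The point is that each node returns only a small summary, so raw records never cross the network.

```python
# Hypothetical cluster: each node stores its own partition of customer records.
nodes = {
    "node-1": [
        {"customer": "a", "churned": True, "calls": 5},
        {"customer": "b", "churned": False, "calls": 1},
    ],
    "node-2": [
        {"customer": "c", "churned": True, "calls": 7},
        {"customer": "d", "churned": False, "calls": 2},
    ],
    "node-3": [
        {"customer": "e", "churned": True, "calls": 3},
    ],
}


def local_query(partition):
    """Runs where the partition lives; returns (total calls, customer count) for churned customers."""
    calls = [row["calls"] for row in partition if row["churned"]]
    return sum(calls), len(calls)


def average_calls_of_churned(nodes):
    """Coordinator: combine the small per-node summaries into one answer."""
    # On a real cluster these local queries would run in parallel on each node.
    partials = [local_query(partition) for partition in nodes.values()]
    total = sum(t for t, _ in partials)
    count = sum(c for _, c in partials)
    return total / count if count else 0.0


result = average_calls_of_churned(nodes)
print(result)
```

Only one pair of numbers per node reaches the coordinator, regardless of how many records each node holds.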

Figure 3 positions databases that support transactional and analytical workloads, and shows that support for loosely structured data and scale-out architectures is essential for Big-data initiatives.

Traditional data warehouses and Big-data analytics are complementary and feed each other. Traditional data warehouses are a source of data for Big-data projects; if new data is found during a Big-data project that is valuable on an ongoing basis, it should be brought into the traditional data warehouse and cleaned up, so that it can take advantage of the production capabilities of traditional databases.

Business Case for Big-data Analytics

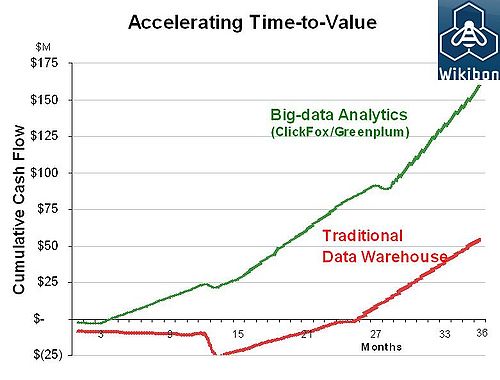

Big-data analytic projects are new, but the results of early projects have been impressive. The early projects have been completely business driven, and have focused on new ways of increasing revenue and reducing costs. Accelerating time-to-value has been a business imperative.

A telecommunications provider needed to understand what was causing poor customer experience. Traditional data warehouse projects take about a year from start to when action has been taken on the results and changes have been made. Typically the projects were successful with good ROIs, but took two years to break-even. By using Big-data analytics and tools, they were able to accelerate the time-to-value cycle to three months and achieve a break-even in four months. Figure 4 shows the projected difference on the time-to-value (3 months vs. 12 months), the break-even (4 months vs. 24 months) and the total value created ($150M vs. $50M) between the two approaches.
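The comparison quoted above reduces to three simple ratios. The short calculation below uses only the figures stated in the text; the dictionary and key names are illustrative.

```python
# Figures quoted in the text for Figure 4: months, and total value in $M.
traditional = {"time_to_value": 12, "break_even": 24, "total_value": 50}
big_data = {"time_to_value": 3, "break_even": 4, "total_value": 150}

ratios = {
    # How many times faster the Big-data approach reaches each milestone.
    "time_to_value_speedup": traditional["time_to_value"] / big_data["time_to_value"],
    "break_even_speedup": traditional["break_even"] / big_data["break_even"],
    # How many times more total value the Big-data approach is projected to create.
    "value_multiple": big_data["total_value"] / traditional["total_value"],
}

for name, value in ratios.items():
    print(f"{name}: {value:.1f}x")
```

On these projected numbers, time-to-value is four times faster, break-even six times faster, and total value three times higher.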

Action Item: Big-data analytics must be business led, and not all projects will be successful at finding the needle in the haystack. Critical for IT will be a change of mind-set; time-to-value is more important than guaranteed success. IT will need to find suppliers that will be comfortable with intense short projects with no guarantee of follow-on business.

Footnotes: [1] Request: Wikibon members, please sign in (or sign up if you want to join in) and improve this definition!

[2] ACID stands for atomicity, consistency, isolation, durability.