

Data Domain De-duplication Performance Introduction

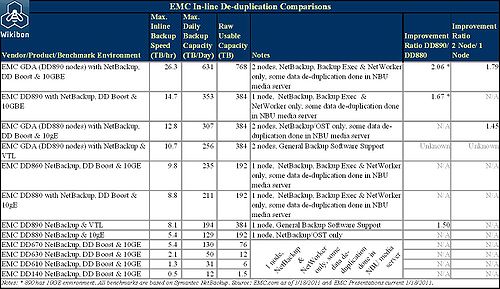

On January 18th 2011, EMC refreshed the complete Data Domain product family. The greatest improvement came at the top-end, where the performance of the EMC Global De-duplication Array (GDA) was doubled to a maximum inline de-duplication rate of 26 terabytes/hour. In addition, performance across the board was filled out with systems reaching down to 0.5 terabytes/hour, as shown in Table 1. Table 1 has been compiled by Wikibon from data on EMC.com, and from EMC product announcement presentations.

High-End De-duplication Performance Improvements

The Data Domain GDA takes two nodes and creates a single image that shares the same directory. The processing load is shared across the two nodes. The advantage of this setup is that backup job streams do not have to be dedicated to a specific system. If a problem occurs in one of two separate backup streams and that stream has to be rerun on its dedicated system, the backup window is extended by 100%. By sharing the additional workload across a two-node GDA, the backup window is extended by only 50%. In general, global systems smooth out the peaks and valleys of multiple single nodes and are more efficient.

The negative impact of global de-duplication is the same as that of any multi-processor architecture: interference between the two nodes creates a performance overhead, and the greater the sharing and updating of data, the greater the overhead. In the first generation of GDA with DD880 nodes, the EMC GDA achieved a 2-node/1-node performance ratio of 1.45, which is an honest figure but not a good one. With the new-generation GDA using DD890 nodes, the ratio is a much more respectable 1.79 (data from the last column of Table 1 above). This adds an additional 23% of performance and opens the possibility of future extensions to the number of nodes in a Data Domain GDA.
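The backup-window arithmetic and the scaling ratios quoted above can be checked with a short calculation. The Python sketch below is a deliberately simplified model, not EMC's sizing method: it assumes every backup stream takes one unit of time, that a shared GDA balances work perfectly across its nodes, and that inter-node overhead is ignored for the window calculation. The function names are illustrative.

```python
def window_extension(streams_per_node, reruns, shared, nodes=2):
    """Fractional extension of the backup window when `reruns` streams are rerun.

    Simplified model: each stream takes one time unit. A shared (global)
    system spreads all work evenly over its nodes; with dedicated nodes,
    the node that owns the failed stream performs every rerun itself.
    """
    baseline = streams_per_node  # time units per node when nothing fails
    if shared:
        total_work = streams_per_node * nodes + reruns
        window = total_work / nodes
    else:
        window = streams_per_node + reruns
    return window / baseline - 1.0


def scaling_gain(old_ratio, new_ratio):
    """Relative improvement in the 2-node/1-node throughput ratio."""
    return new_ratio / old_ratio - 1.0


print(f"dedicated nodes: +{window_extension(1, 1, shared=False):.0%}")
print(f"shared GDA:      +{window_extension(1, 1, shared=True):.0%}")
print(f"DD880 -> DD890 GDA scaling gain: {scaling_gain(1.45, 1.79):.0%}")
```

With one stream per node and one rerun, the model gives the 100% and 50% extensions described in the text, and the change in ratio from 1.45 to 1.79 corresponds to the 23% gain.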

Technology and Functionality Components of Performance

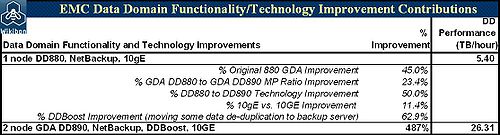

Table 2 was derived from an in-depth analysis of Table 1 by Wikibon. It breaks out the contribution of different technologies and functionality to the improvement in performance between the DD880 with no DDBoost or 10GE connection all the way through to the performance of the GDA with DD890 nodes and all the functionality and technology improvements. The net result is a nearly fivefold (487%) improvement in performance in the last 12 months, a truly impressive record of performance improvement. Table 2 shows the components and their contribution. The contributions are multiplicative; for example the Original DD880 GDA improvement is 50%, so if that component is present the base performance is multiplied by 1.5. If the DD880 to DD890 GDA improvement is present, the performance figure is multiplied by an additional 1.23. This table can be used to produce approximate estimates of mixes of technologies and functionalities.

The processor technology updates led to a 50% improvement in the DD890 nodes compared with the previous-generation DD880 nodes. The improvement from using 10 Gigabit Ethernet (10GbE) over the previous 1 Gigabit Ethernet is 11%.
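Because the contributions are multiplicative, an estimate for any mix of components is the product of their multipliers. The Python sketch below uses only the percentages quoted in this article (Table 2 itself is not reproduced here); the component keys and the function name are illustrative assumptions, and the results are rough estimates in the same spirit as the table.

```python
from math import prod

# Multipliers as quoted in the article text; the key names are made up
# for this example.
MULTIPLIERS = {
    "gda_dd880": 1.50,           # original DD880 GDA (50% in the example above)
    "gda_dd880_to_dd890": 1.23,  # DD880 -> DD890 GDA improvement
    "processor_dd890": 1.50,     # DD890 vs. DD880 processor technology
    "ten_gbe": 1.11,             # 10 Gigabit Ethernet connection
    "ddboost": 1.63,             # DDBoost with NetBackup/OST (about 63%)
}


def estimate_multiplier(components):
    """Multiply the contributions of the components that are present."""
    unknown = set(components) - set(MULTIPLIERS)
    if unknown:
        raise ValueError(f"unknown components: {sorted(unknown)}")
    return prod(MULTIPLIERS[name] for name in components)


# A single DD890 node with 10GbE but without DDBoost, relative to the
# baseline DD880 with neither.
mix = ["processor_dd890", "ten_gbe"]
print(f"estimated multiplier: {estimate_multiplier(mix):.3f}")
```

An empty component list returns 1.0, that is, the baseline DD880 performance.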

Impact of DDBoost

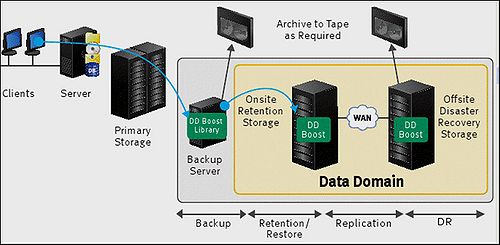

Not all the components are available in all environments. Data centers that use NetBackup in an OST Ethernet environment have the greatest performance potential, as DDBoost can improve Data Domain throughput by about 63%. It should be remembered that DDBoost moves work from the Data Domain environment to the backup server, which carries an overhead in both cost and potential elapsed time. Figure 1 below shows the topology and workflow for DDBoost in conjunction with NetWorker.

Source: Extracted from an EMC GoogleDoc downloaded 18/1/2011 from https://docs.google.com/viewer?url=http://www.datadomain.com/pdf/h7504-dd-boost-networker-so.pdf&pli=1

The GDA with DDBoost is also supported with benchmarks in a NetBackup environment. EMC has announced support for the EMC NetWorker products from EMC’s Legato acquisition, but no benchmark results are available at the moment. Wikibon will update the chart when they become available.

Performance Comparison with Other De-duplication Technologies

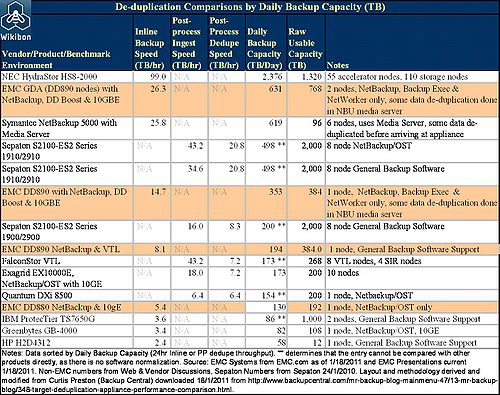

Table 3 below takes the highest performing EMC products and compares them against other available inline and post-process de-duplication systems. The EMC results in this set of benchmarks reflect the maximum performance of NetBackup.

Source: EMC data from Table 1. Non-EMC data from Wikibon Data De-duplication Performance Tables. Format and metrics derived from an original table created by Curtis Preston (BackupCentral), downloaded 1/18/2011 from http://www.backupcentral.com/mr-backup-blog-mainmenu-47/13-mr-backup-blog/348-tar.

The non-EMC data in Table 3 reflects the vendor claims and is not normalized against any assumptions of workload, backup package, or environment. The data is sorted by daily backup capacity, which is derived by multiplying the de-duplication rate (TB/hr) by 24: the inline backup speed for inline solutions, or the post-process de-duplication speed for post-process solutions. Although the ingest rate of a post-process system is significantly faster and can decrease the backup window, the de-duplication speed determines the amount that can be processed in a given period of time, before the next backup cycle is due. This metric does not reflect the total backup performance across different workload types. For example, a TSM incrementals-forever environment, where the amount of data presented to the Data Domain server is much lower (and the de-duplication impact much lower), could lead to very different estimates of overall backup performance. However, the chart does serve as a first-level approximation of the performance range of different systems.
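The daily backup capacity metric can be expressed in a few lines. The Python sketch below follows the rule described above (24 times the inline speed, or 24 times the post-process de-duplication speed). The two EMC rates are the maximum and minimum figures quoted in the introduction; the post-process entry is a made-up illustration, not a vendor figure, and the class and function names are assumptions for this example.

```python
from dataclasses import dataclass
from typing import Optional

HOURS_PER_DAY = 24


@dataclass
class System:
    name: str
    inline: bool
    ingest_tb_hr: float                  # backup (ingest) speed in TB/hr
    dedup_tb_hr: Optional[float] = None  # post-process de-duplication speed


def daily_capacity_tb(system):
    """Daily backup capacity in TB: 24 x the rate that limits de-duplication."""
    rate = system.ingest_tb_hr if system.inline else system.dedup_tb_hr
    if rate is None:
        raise ValueError(f"{system.name}: post-process systems need dedup_tb_hr")
    return HOURS_PER_DAY * rate


systems = [
    System("EMC GDA with DD890 nodes", inline=True, ingest_tb_hr=26.0),
    System("EMC low-end system", inline=True, ingest_tb_hr=0.5),
    # Hypothetical post-process system: fast ingest, slower de-duplication.
    System("Example post-process system", inline=False,
           ingest_tb_hr=10.0, dedup_tb_hr=3.0),
]

# Sort by daily capacity, largest first, as in the comparison table.
for s in sorted(systems, key=daily_capacity_tb, reverse=True):
    print(f"{s.name}: {daily_capacity_tb(s):.0f} TB/day")
```

For the hypothetical post-process system, the faster ingest rate shortens the backup window, but the daily capacity is set by the slower de-duplication rate.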

Performance Discussion

The interesting conclusion from this chart is that the highest-performance systems are now inline systems, a complete turnabout from a few years ago, when inline systems were simpler to run but slower. The fastest system is the NEC HydraStor, which, although it occupies 11 frames, has a truly impressive benchmark result four times greater than that of its closest competitor. Large organizations with aggressive RPO requirements should consider this product, which is new to the U.S. marketplace. The next two products are very close: the EMC GDA using DD890 nodes and the Symantec NetBackup 5000. Fourth in the list is the EMC single-node DD890. The first post-process system is 10 times slower than the NEC inline system.

Also missing in action is the inline IBM ProtecTIER system that used to be a front-runner in large installations. IBM will need an extremely aggressive technology refresh for ProtecTIER to reclaim its previous place.

Performance Conclusions

EMC has executed extremely well in both market penetration and the introduction of major improvements in de-duplication performance. It has a very strong portfolio of products which perform well and cover the complete market (with the possible exception of a very high-end machine).

De-duplication for backup has now been completely accepted as a standard practice by the industry. There is now a strong drive for de-duplication of primary storage, which NetApp was the first to introduce with its ASIS product. EMC and many other vendors have announced primary de-duplication systems in the storage arrays.

The only potential cloud in the Data Domain sky is the emergence of more modern backup software models that take space-efficient consistent snaps and copy the changes to the disk-based backup software. The backup software is designed to allow very flexible and fast recovery of (say) an email account in Exchange or particular files for a user. De-duplication is performed on a continuous basis against much smaller amounts of data than in traditional backup systems. Replication is built in, and very good RPO and RTO service levels can be achieved with much lower implementation effort than with traditional systems. EMC/Data Domain will need to guard against the temptation of milking the cash cow of traditional backup systems and failing to recognize, and be ready with, backup and de-duplication products that exploit these new approaches.

EMC Archiver makes its Debut

Introduction to DD Archiver

When EMC bought Data Domain in 2009, one of the most attractive features of the company was the lack of friction in implementing a Data Domain solution. The backup software was still the same, and the disks were still seen as a tape library. The only difference was an extra step inserted inline into the process, and the output of that step was de-duplicated to disk. This meant that the recovery data was available online and recovery was much faster than from any tape library. Installations could hold (say) 90 days or more of data from backups and recover the data within minutes instead of hours.

What happens after this time is a little less sophisticated. The data is re-hydrated and moved back out to tape libraries, either in-house or off-site. The most common archiving implementation is to keep these backup tapes as the company archive, with a compliance tick placed in the box.

As part of this announcement, EMC has introduced the Data Domain Archiver. Instead of re-hydrating the data to tape, the data is migrated down to an archiver that keeps the data de-duplicated and includes the de-duplication metadata. The DD Archiver has the minimum of controller power (similar to a DD860) and the maximum of storage space (raw capacity 768 terabytes). Because the data is still de-duplicated, the amount of disk storage is minimized. When it is full, the archiver is designed to be locked down with retention locks and encryption. The box could then be shut down, and EMC is hinting at spin-down techniques.

Archiving and Big Data

There are a number of potential use cases for this technology. The simplest is to use this as a lower-cost migration tier and hold the backup data for longer, say one year. For data centers that are likely to need access to this data for operational or compliance reasons, keeping the data longer on a DD archiver will make sense.

The more interesting question is: Can a single archive copy be used for multiple purposes? For example, can the backup archive also be used as an email archive? It would be great to be able to shout "Eureka" and start implementing that email archive or technical drawing archive by pointing at the backup archive. Cross-functional archiving for free?

Wikibon has recently written extensively about archiving, and concluded that the current model of a combination of data center archiving and point solutions for a specific department is broken. Wikibon concluded that the business will need to define the archive requirements around the positive ability to exploit "big data" and to drive improved business productivity and effectiveness, rather than the traditional fear of being sued or failing to be in compliance. By definition, this will be a cross-functional exercise that will need to look at ways of capturing metadata early in the data record's history with minimal impact on end users, and define metadata models that will allow ease of use and easy extensibility. Software will be selected on an industry basis. This software could possibly use hardware like the DD Archiver, but the ISVs will choose the technology that best fits the needs of the application, or even construct their own appliances. Wikibon believes that an IT-led initiative to use a particular technology as a foundation for organization-wide archiving would not be a wise use of resources.

DD Archiver Business Case

The business case for the DD Archiver should focus on the hidden costs of migrating the data to tape, keeping track of the data, checking that the data can still be read, migrating very long-term data to new media, deleting the data, and very occasionally having to restore it. The DD Archiver could decrease costs internally or could be part of an external archiving service. Normally, the older the data, the less value it has and the less likely it is to be touched. The business case should focus on the benefits to the IT department. IT executives should be clear that using backup records as a long-term archive is at best a stop-gap measure that is much more expensive and less effective than a business-driven archive solution, and at worst a liability.

Overall Conclusions

The EMC Data Domain announcement is very strong, with a doubling of performance at the high end, the introduction of a broad range of offerings covering the entire market, the announcement of support for the IBM i-series, and an archiving product that could help to keep backup records longer. Data Domain is now the de facto standard for de-duplication in enterprise data centers.

Action Item: Data Domain is now the standard against which backup data de-duplication solutions will be judged. Senior IT executives will need to keep abreast of Data Domain products and directions and should include them in most RFPs for backup de-duplication. At the same time, IT executives should be pushing EMC to provide de-duplication products and services for more modern backup topologies, as well as the array functionality to run them efficiently.

Footnotes: Updated 1/24/2011 to include Sepaton S2100-ES2 Series 1910/2910, announced 1/24/2011