

Storage Peer Incite: Notes from Wikibon’s June 11, 2009 Research Meeting

Storage demand is threatening to bankrupt IT shops that are already severely squeezed by budgets slashed in the wake of last year's economic collapse. The problem is exacerbated by confusion over what data must be kept to meet legal and business needs, which has created a "save everything" mentality and an extreme reluctance in many shops to eliminate any data whatsoever. Further, in large development environments, elite technical staff don't want to delete data and vocally resist any attempt to make them do so. Given this emotional inability to delete anything, IT's best weapons for gaining some control over costs are data reduction technologies, such as data de-duplication for disk-based backup and data compression for primary storage.

Shopzilla, the huge Internet shopping site, is an extreme case of data explosion and a case study in how much data reduction technologies can do to control storage demand and, therefore, costs. While technologies such as data de-duplication can be applied to reduce capacity requirements for primary storage, Shopzilla chose data compression because of its effectiveness and its performance advantages relative to de-dupe.

G. Berton Latamore

Shopzilla: Compressing insane storage growth

Contributing Practitioner: Burzin Engineer

On June 11, 2009, the Wikibon community gathered for a Peer Incite Research Meeting with Burzin Engineer, Shopzilla’s Vice President of Infrastructure Services. Shopzilla is a comparison shopping engine born during the dot-com boom. The company has survived and thrived, serving approximately 19 million unique visitors per month. Shopzilla’s secret sauce is its ability to refresh more than 40 million products six times per day, while its nearest competitors can only refresh their product offerings once every 3-4 days.

With no shopping malls or real estate footprint, Shopzilla is an IT-driven business with an intense focus on global 24x7 operations. Its two main data centers each have thousands of servers and several hundred TB of storage. Shopzilla HQ supports these locations with more than 400 test, development, and QA machines.

At points during the mid-2000s, Shopzilla’s growth required adding 100 full-time IT equivalents (FTEs) year-on-year. This brought huge challenges for Shopzilla’s infrastructure, especially because its developers were reluctant to get rid of data. Facing 200% data growth, rising costs and ever-growing power consumption, Engineer decided to implement a new architecture using data compression on primary storage.

Shopzilla’s solution placed redundant IBM Real-time Compression STN-6000 appliances in front of its NAS filer heads. All test and dev I/Os are sent through the appliances, which use highly efficient, lossless data compression algorithms to optimize primary file-based storage. Shopzilla has seen a 50% reduction in storage capacity wherever the compression solution is applied. David Burman, a practitioner, storage architect and consultant at a large financial institution, supported Engineer’s claims and indicated that his organization has seen upwards of 60% improvement in primary capacity, and in some cases 90%.
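The appliance's compression algorithms are proprietary and are not described here. As a minimal sketch, Python's standard `zlib` module (DEFLATE) stands in for them to show what "lossless" means in practice and how a percentage reduction maps to a compression ratio (a 50% reduction is 2:1, a 90% reduction is 10:1). The sample data is hypothetical.

```python
import zlib


def capacity_reduction(original: bytes, compressed: bytes) -> float:
    """Fraction of capacity saved: 0.5 means the data now occupies half the space."""
    return 1 - len(compressed) / len(original)


def ratio_from_reduction(reduction: float) -> float:
    """Convert a fractional reduction into an N:1 compression ratio."""
    return 1 / (1 - reduction)


# Hypothetical test/dev sample: repetitive, log-style text. zlib is only a
# stand-in for the appliance's own algorithm.
sample = b"".join(b"2009-06-11 INFO product %06d refreshed\n" % i for i in range(5000))
packed = zlib.compress(sample, 6)

# Lossless: decompression must return exactly the original bytes.
assert zlib.decompress(packed) == sample

saved = capacity_reduction(sample, packed)
print(f"{len(sample)} -> {len(packed)} bytes: {saved:.0%} smaller, "
      f"about {ratio_from_reduction(saved):.1f}:1")
```

Real reductions depend entirely on the data; repetitive text like this compresses far better than typical mixed file shares.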

Compress or de-dupe?

Engineer and other Wikibon practitioners and analysts on the Peer Incite call arrived at the following additional conclusions pertaining to compression as applied to primary storage:

- Compression is the logical choice for primary storage optimization due to its effectiveness, efficiency and performance. Specifically, according to both practitioners on the call, the IBM Real-time Compression product actually improves system performance, because it reduces the amount of data moved and pushes more user data through the system.

- Primary data compression is complementary and additive to data de-duplication solutions from suppliers such as Data Domain, FalconStor, Diligent and others. For example, Shopzilla compresses data and then sends the compressed data to a Data Domain system as part of its backup process. Shopzilla’s tests show that this approach yields higher overall ‘blended’ reduction ratios than a standalone data de-duplication solution with no compression on primary storage.

- Unlike array-based solutions, compression appliances can be deployed across heterogeneous storage.

- Implementing a data compression engine preserves all array functionality (e.g., snapshots, clones, remote replication), and performance is enhanced because less data is moved.

- Primary compression solutions today are file-based. While IBM Real-time Compression has promised SAN-based solutions in the future, the complexity of this effort, combined with a large NAS market opportunity, has kept IBM Real-time Compression focused on NAS and slowed the expected delivery of SAN solutions.

- Re-hydration of data (i.e., decompressing it or reconstituting de-duplicated data) is often problematic in optimized environments; however, IBM Real-time Compression’s algorithms reduce compression overhead to microseconds (versus orders of magnitude more for in-line data de-duplication), minimizing re-hydration penalties.
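One way to read the ‘blended’ reduction ratio is as the ratio of logical data to the bytes the backup target finally stores. The minimal sketch below uses hypothetical volumes. Whether compressing before de-dupe beats de-dupe alone depends on the de-dupe ratio actually achieved on compressed data, which is what Shopzilla's tests measured; the code does not predict that ratio.

```python
def stage_ratio(before_tb: float, after_tb: float) -> float:
    """Reduction ratio achieved by one stage (2.0 means 2:1)."""
    return before_tb / after_tb


def blended_ratio(logical_tb: float, compressed_tb: float, stored_tb: float) -> float:
    """Overall ratio when compression runs first and de-duplication second.

    logical/stored telescopes into (logical/compressed) * (compressed/stored),
    so the blended ratio is the product of the two stage ratios. The de-dupe
    ratio must be measured on the already-compressed stream.
    """
    return stage_ratio(logical_tb, compressed_tb) * stage_ratio(compressed_tb, stored_tb)


# Hypothetical backup volumes: 1,000 TB of logical backups, compressed 2:1,
# then de-duplicated 10:1 by the backup target.
print(blended_ratio(1_000, 500, 50))  # 20.0
```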

Implementation Considerations

The ROI of primary data compression is substantial, provided enough data is being compressed. In Shopzilla's case, Engineer estimated breakeven at somewhere around 30-50 TB, i.e., at least 10% of the test and dev group's approximately 300 TB. Users are looking at a six-figure investment to install redundant appliances and should therefore target data compression at pools of storage large enough to pay back that investment (i.e., 50 TB or more).
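The breakeven logic can be sketched as a single division: the appliance investment divided by the storage spend avoided per terabyte compressed. This is a simplified model under stated assumptions. The investment and per-TB cost below are hypothetical placeholders, not Shopzilla's figures, and the model ignores OPEX timing and discounting.

```python
def breakeven_capacity_tb(investment_usd: float, cost_per_tb_usd: float,
                          reduction: float) -> float:
    """Compressed capacity (TB) at which avoided storage spend equals the investment.

    Each TB compressed at `reduction` avoids buying `reduction` TB of new storage.
    """
    if not 0 < reduction < 1:
        raise ValueError("reduction must be between 0 and 1")
    return investment_usd / (cost_per_tb_usd * reduction)


# Hypothetical inputs: $150,000 for a redundant appliance pair and $7,500 of
# fully loaded storage cost avoided per TB, at a 50% reduction.
print(breakeven_capacity_tb(150_000, 7_500, 0.5))  # 40.0
```

Pools well above the computed breakeven are the natural first targets; pools below it may never pay back the appliances.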

Engineer's key advice to peers is this: once you have selected target candidates for primary data compression, plan the implementation carefully. Specifically, users should take the time to understand the physical configuration of the network, re-visit connections, and work out how best to bridge to the IBM Real-time Compression device. This process will likely uncover substantial required changes to physical network connections that have been neglected for years. Users should expect some disruption during the implementation and plan accordingly. Despite the disruption, Engineer indicated that the IBM Real-time Compression appliances have become a critical and strategic component of Shopzilla's development infrastructure and have added substantial business value.

Conclusions

On balance, the Wikibon community is encouraged by the IBM Real-time Compression solution and its impact on storage efficiency (50%+ capacity improvement), backup windows (reduction of 25%), and overall business value. In general, the community's consensus was that the time for storage optimization is now and that technologies including data compression for primary storage should become standard components of broader storage services offerings.

Over time, the Wikibon community believes data reduction technologies such as data de-duplication and primary storage compression will be increasingly embedded into vendor infrastructure portfolios. In the near term, however, compression technology as applied to primary storage and delivered as an appliance holds real promise.

Action item: Insane storage growth and the economic crisis have hastened the drive to efficiency and storage optimization. Organizations still have not addressed the root problem, which is that they never get rid of enough data. Nonetheless, data reduction technologies have become increasingly widespread and eventually will be mainstream. Where appropriate (e.g., file-based NFS and CIFS environments with a small percentage of images, movies, and audio files), users should demand that storage be optimized using data reduction techniques. In-line compression appears to be the most logical choice for primary data storage.
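Whether a file share is a good candidate can be estimated before buying anything by compressing a sample of its files. A minimal sketch, assuming `zlib` as a rough proxy for the appliance's compressor and random bytes as a stand-in for images, movies and audio, which are typically already compressed and gain little or nothing. The sample contents are hypothetical.

```python
import os
import zlib
from typing import Sequence


def estimated_reduction(samples: Sequence[bytes]) -> float:
    """Estimate capacity reduction for a set of file samples, using zlib as a proxy."""
    raw = sum(len(s) for s in samples)
    packed = sum(len(zlib.compress(s)) for s in samples)
    return 1 - packed / raw


# Hypothetical samples: CSV-like text versus random bytes standing in for
# media files that are already compressed.
text_like = [b"customer_id,sku,price\n" + b"1042,AB-77,19.99\n" * 2000]
media_like = [os.urandom(40_000)]

print(f"text-heavy share:  {estimated_reduction(text_like):.0%}")
print(f"media-heavy share: {estimated_reduction(media_like):.0%}")
```

The media-like sample comes out at roughly zero, or slightly negative because of format overhead, which is why shares dominated by media files are poor targets.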

The business case for in-line compression

In the Peer Incite on June 11, 2009, the community discussed in-line compression deployments at two organizations, both using technology from IBM Real-time Compression. This financial analysis is based on the overall benefits of compression discussed in the Peer Incite, together with data center storage metrics that Wikibon has developed. The result is a case study that applies the technology benefits observed in the field to a typical storage environment.

Our analysis shows the following:

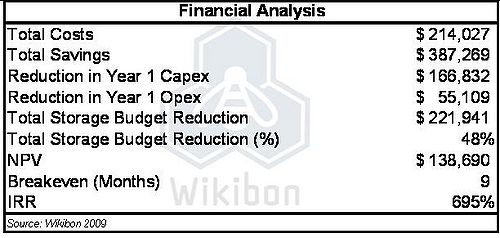

- For an initial CAPEX investment of $214,027, applying compression to primary storage returns $387,269 over a four-year period.

- This initial CAPEX requirement is lower than the CAPEX that would have been needed to fund the storage infrastructure without compression.

- The savings represent CAPEX reductions from avoided incremental storage purchases and OPEX savings from lower maintenance, power and cooling costs.

- The overall business case for compressing primary data is compelling with an NPV for the project of $138,690, an IRR of 695% and a breakeven of nine months.

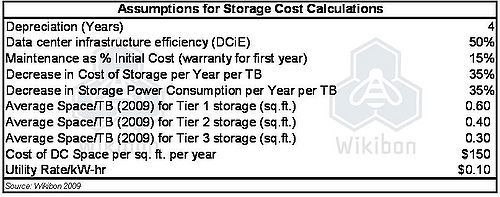

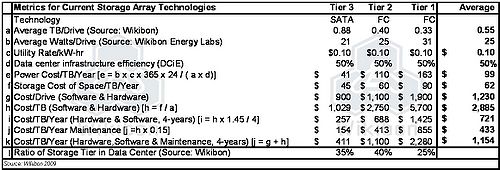

The core assumptions are provided in the footnote for tables 4 and 5. They are based on metrics gathered by the Wikibon Energy Lab, a service that analyzes data center energy consumption for clients. All metrics are normalized to dollar cost per terabyte per year ($/TB/Yr).
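The Energy Lab metrics themselves are in the table footnotes and are not reproduced here. As a sketch of what normalizing to $/TB/Yr can look like, the example below spreads purchase cost evenly over a service life (straight-line, an assumption) and adds annual maintenance, power and cooling, and floor-space rates. All rates are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CostRates:
    """Hypothetical per-terabyte rates; not the Wikibon Energy Lab figures."""
    capex_per_tb: float             # purchase price, $/TB
    service_life_years: float       # years over which CAPEX is spread
    maintenance_per_tb_yr: float    # $/TB/Yr
    power_cooling_per_tb_yr: float  # $/TB/Yr
    floor_space_per_tb_yr: float    # $/TB/Yr

    def cost_per_tb_yr(self) -> float:
        """Normalize every component to dollars per terabyte per year."""
        annualized_capex = self.capex_per_tb / self.service_life_years
        return (annualized_capex + self.maintenance_per_tb_yr
                + self.power_cooling_per_tb_yr + self.floor_space_per_tb_yr)


rates = CostRates(4_000, 4, 400, 250, 100)
print(rates.cost_per_tb_yr())        # 1750.0
print(rates.cost_per_tb_yr() * 132)  # 231000.0 per year for 132 TB
```

Once every cost is expressed per TB per year, the budget for any capacity plan is a single multiplication, which is what makes the as-is and compression scenarios directly comparable.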

The case uses Shopzilla's environment as a benchmark and assumes that 300 terabytes of storage are installed and that the plan calls for adding another 132 TB. Our assumption is that this additional storage is needed to accommodate the lag between project kickoff and the time when compression is effectively implemented. Budgets, floor space, power and cooling in the data center are all under pressure.

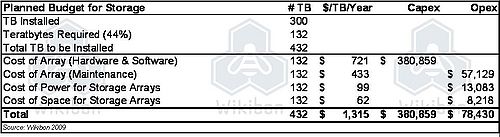

Table 1 shows the "as-is" case, i.e., the current storage plan before compression is introduced, with a CAPEX requirement of $380,959 and additional OPEX of $78,430. The OPEX covers maintenance, power and space for the additional 132 terabytes of storage shown in the #TB column of the table.

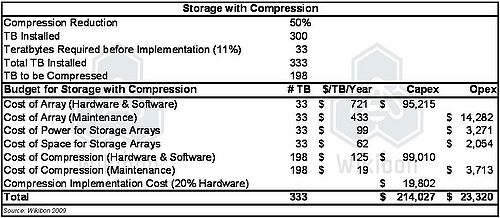

Table 2 shows the revised budget if compression is used. The key assumptions are:

- The implementation will take three months to complete.

- The reduction in data stored will be 50%.

- The data reduction will be achieved over a one-year period.

- Because of the three-month implementation period, an additional 33 terabytes is assumed to be required.

- The total amount of data compressed over the one-year period will be 198 TB of the 333 TB installed.

The assumptions used are conservative in order to challenge the business case for compression.
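These assumptions can be checked with simple capacity arithmetic. The sketch below assumes (our reading, not stated in the text) that the 33 TB is three months' share of the 132 TB annual growth (132 × 3/12 = 33) and that compressing 198 TB at 50% frees 99 TB of physical capacity. Under those assumptions the effective capacity, 333 + 99 = 432 TB, equals the 300 + 132 TB of the as-is plan.

```python
def capacity_plan(installed_tb: float, annual_growth_tb: float, lag_months: int,
                  compressed_tb: float, reduction: float) -> dict:
    """Compare effective capacity with compression against the as-is growth plan."""
    # Storage still bought during the implementation lag (assumed pro rata).
    bridge_tb = annual_growth_tb * lag_months / 12
    installed_after_bridge = installed_tb + bridge_tb
    # Physical capacity released once `compressed_tb` is stored at `reduction`.
    freed_tb = compressed_tb * reduction
    return {
        "bridge_tb": bridge_tb,
        "installed_tb": installed_after_bridge,
        "freed_tb": freed_tb,
        "effective_tb": installed_after_bridge + freed_tb,
        "as_is_target_tb": installed_tb + annual_growth_tb,
    }


plan = capacity_plan(installed_tb=300, annual_growth_tb=132, lag_months=3,
                     compressed_tb=198, reduction=0.5)
print(plan)
```

Lowering `reduction` shows how quickly the plan falls short of the as-is target if the 50% figure is not achieved.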

Table 3 shows the financial analysis of the IT benefits of implementing in-line compression. For an initial CAPEX cost of $214,027, the project returns $387,269 over a four-year period. These savings are the CAPEX savings (the delta between the Table 1 and Table 2 CAPEX) plus the OPEX savings (the delta between the Table 1 and Table 2 OPEX over four years). This represents a 48% reduction in the storage budget. The NPV for the project is $138,690, the IRR is 695%, and the breakeven is nine months.
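The cash flows behind these NPV, IRR and breakeven figures are in the tables, which are not reproduced in this text, and the discount rate is not stated. The generic sketch below shows how such metrics are typically computed from per-period net savings (for this analysis, the Table 1 minus Table 2 deltas). The sample cash flows are hypothetical, and bisection is just one simple way to solve for IRR.

```python
from typing import Optional, Sequence


def npv(rate: float, cash_flows: Sequence[float]) -> float:
    """Net present value; cash_flows[0] is immediate, cash_flows[t] is at the end of period t."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: Sequence[float], lo: float = -0.99, hi: float = 100.0,
        tol: float = 1e-9) -> float:
    """Per-period internal rate of return, found by bisection on npv(rate) = 0.

    Assumes a conventional profile (outlay first, then savings), so NPV
    crosses zero once between lo and hi.
    """
    f_lo = npv(lo, cash_flows)
    if f_lo * npv(hi, cash_flows) > 0:
        raise ValueError("IRR is not bracketed by [lo, hi]")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        f_mid = npv(mid, cash_flows)
        if f_lo * f_mid <= 0:
            hi = mid               # root lies in [lo, mid]
        else:
            lo, f_lo = mid, f_mid  # root lies in [mid, hi]
    return (lo + hi) / 2


def payback_period(cash_flows: Sequence[float]) -> Optional[float]:
    """Periods until cumulative cash flow reaches zero, interpolating within a period."""
    cumulative = cash_flows[0]
    if cumulative >= 0:
        return 0.0
    for t in range(1, len(cash_flows)):
        previous = cumulative
        cumulative += cash_flows[t]
        if cumulative >= 0:
            # Assume the period's savings accrue evenly.
            return (t - 1) + (-previous / cash_flows[t])
    return None


# Hypothetical monthly net savings after an up-front outlay (not the Wikibon data).
flows = [-90_000] + [10_000] * 47
print(round(npv(0.01, flows)), f"{irr(flows):.1%} per month", payback_period(flows))
```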

The factors not taken into account in the financial analysis are:

- The improvement in array performance expected from the reduced bandwidth requirement (the number of IOs would be the same). This would be reflected in the financial plan by adjusting the ratio of Tier 1, 2 and 3 storage purchased in the future.

- The additional risk and complexity of introducing another layer into the storage system. This would be reflected in additional staff and additional DR precautions.

- The expected 25% improvement in the backup window.

- The improvement in the currently installed in-line backup data de-duplication system (which would offset future upgrades).

- The risk that the improvement of 50% would not be achieved in this particular installation.

Action item: The overall experience of early adopters of in-line compression has been positive, and the business case for in-line compression is very strong. IT executives under budget, space and power pressure should look hard at this new technology. It has the potential to reduce the cost of all file-based primary storage while improving performance, and in many instances it should be placed higher in the priority queue than data de-duplication for backup.

Matching the Physical and Logical Topologies - How Important Is This?

On June 11, 2009, the Wikibon community gathered for a Peer Incite Research Meeting with Burzin Engineer, Shopzilla’s Vice President of Infrastructure Services. Shopzilla, an online shopping comparison service, faced huge data growth and ever-increasing power consumption in its IT operation. To help meet these challenges, it implemented an in-line NAS storage compression solution from IBM Real-time Compression.

Not surprisingly, in introducing a new solution into an existing environment, Shopzilla faced some interesting and non-trivial technology integration issues. It spent significant time planning the installation of this in-line storage compression solution, making sure the system was deployed in the correct place in the storage topology and ensuring minimal downtime. The plan was to deploy the solution first in the test and development environment and later in production.

Because this is an in-line solution, Shopzilla had to temporarily disconnect its NAS devices in order to insert the redundant storage compression appliances into the network. Although careful planning identified the appliances' logical position in the network, Shopzilla found when it actually made the physical connections that it had missed a few items. Over time, as in most networks, small adjustments had been made to physical network connections for various minor projects, upgrades and so on, so there were some unforeseen “disconnects.”

To solve this challenge, Shopzilla had to carefully examine all of its physical network connections, including every cable, port and connector in the path between the servers, switches and NAS devices. By becoming re-acquainted with its physical network connections and matching the logical network topology with the physical connections as they actually existed, Shopzilla was able not only to complete the installation of the IBM Real-time Compression in-line NAS storage compression appliances, but also to develop a current and accurate network topology layout that could be used for other projects.

Action item: IT shops need to understand not only the logical configuration of their networks but also the physical configuration, and ensure that the two match. This applies to both LANs and SANs. Periodically checking that the logical and physical topology information match and are correct is an important task, and no change, however small, should go undocumented.
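The periodic check described above amounts to comparing two sets of connections: the documented (logical) map and what a physical audit actually finds. A minimal sketch; the port naming scheme is hypothetical, and the "discovered" list would come from a walk of the racks or, where available, from switch neighbor tables such as LLDP.

```python
from typing import Dict, Iterable, NamedTuple, Set


class Link(NamedTuple):
    """One physical cable run between two device ports (names are hypothetical)."""
    a: str
    b: str

    def normalized(self) -> "Link":
        # Cables are undirected, so store endpoints in a consistent order.
        first, second = sorted((self.a, self.b))
        return Link(first, second)


def reconcile(documented: Iterable[Link], discovered: Iterable[Link]) -> Dict[str, Set[Link]]:
    """Compare the documented (logical) map with what a physical audit found."""
    doc = {link.normalized() for link in documented}
    found = {link.normalized() for link in discovered}
    return {
        "undocumented": found - doc,  # cabled but not on the map
        "missing": doc - found,       # on the map but not actually cabled
    }


documented = [Link("nas-1:eth0", "sw-1:p1"), Link("srv-7:eth1", "sw-1:p2")]
discovered = [Link("sw-1:p1", "nas-1:eth0"), Link("srv-7:eth1", "sw-2:p9")]
for kind, links in reconcile(documented, discovered).items():
    print(kind, sorted(links))
```

Here the server was moved to another switch without the map being updated, which is exactly the kind of small, undocumented change that surfaced during Shopzilla's installation.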

Primary disk data compression is the next big thing in data reduction

In the mid-1980s, mainframe disk storage prices had finally declined to levels where price elasticity had begun to take effect. However, IBM saw growth rates for installed disk capacity slow during this period. The reason was that users could not effectively manage their storage – they were spending all their time and money on manual storage management. Fortunately, a team of IBM engineers and marketers convinced IBM to develop software that eventually became System Managed Storage ([DF]SMS). Though not perfect, SMS included policies, tiered storage and hierarchical storage management. As SMS took hold, users’ growth rates accelerated. Yes, IBM had to absorb a short-term reduction in disk demand as users drove disk space utilization up from 20%-30% to 60%-80%, but the increased growth rates quickly compensated.

Though no one vendor or product ever successfully captured the non-mainframe storage management market, study after study shows that a well-managed storage environment grows faster. Today’s economics mandate optimizing storage at every opportunity. Thus, we have seen the rapid adoption of thin provisioning and data de-duplication. Now data compression for primary disk storage is emerging as the next big thing in storage optimization. We expect strong adoption for this as well. And, don’t forget we’ve had tape data compression for over 15 years.

Over time, the Wikibon community believes data reduction technologies such as data de-duplication and primary storage compression will be increasingly embedded into vendor infrastructure portfolios. In the near term, however, compression technology as applied to primary storage and delivered as an appliance holds real promise. Indeed, Shopzilla's experience shows that IBM Real-time Compression can help it get more business value from its storage.

Action item: Vendors must optimize their storage offering now and get primary data compression technology into their portfolios now! Users should plan on it.

The Bell Tolls (Again) for Disk Data Compression

In 1994, StorageTek delivered the industry's first virtual disk array, originally named Iceberg, giving the disk industry its first implementation of outboard data compression, compaction, log-structured files, and RAID 6+ architecture. Iceberg was designed for primary storage and mission-critical, enterprise-class data applications. Logically, the Iceberg storage pool appeared to be a much larger storage subsystem, containing more disk capacity than the physical subsystem actually offered. Disk data compression was the key to this concept, and compression ratios of 4 to 1 or better were common for primary storage applications. Customers could therefore buy less hardware capacity than they had data to store, quite an economic benefit.

Compression has been a widely popular feature of tape drives since the mid-1980s, with tape compression ratios typically ranging up to 3 to 1. Disk compression, however, never really caught on despite its successful introduction in the 1990s; the perceived low acquisition cost of disk made compression seem less important. Nonetheless, over the past 10 years the need for disk compression has increased as primary storage pools have experienced unchecked growth. For non-mainframe disk storage, typical utilization rates average 40 percent or less, meaning there is excess storage capacity on most disk drives and considerable wasted expense.

Compressing the data on these drives could, in theory, lower drive utilization well below 40 percent, and could potentially drive up the IOPS requirement as additional workloads are then allocated to the drives.

High-performance applications are often allocated on “short-stroked” or limited-allocation disks, further lowering allocation levels in order to maintain performance. With flash drives starting to take over many high-performance applications, the remaining disks will typically have moderate IO activity and room to absorb, without degradation, the higher allocations and increased workloads that disk compression enables. This will reduce the number of drives needed, shrink footprint and energy requirements, and lower operating costs, allowing for data growth without adding more underutilized disk drives.
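The trade-off between capacity and IO can be sketched as a drive-count calculation in which the tighter of two constraints, capacity or IOPS, wins. Compression shrinks the bytes stored but not the IO count. Drive size, utilization ceiling, workload and per-drive IOPS below are all hypothetical.

```python
import math


def drives_needed(data_tb: float, drive_tb: float, max_utilization: float,
                  workload_iops: float, drive_iops: float,
                  compression_ratio: float = 1.0) -> int:
    """Drives required to meet both capacity and IOPS; the tighter constraint wins."""
    stored_tb = data_tb / compression_ratio            # compression shrinks bytes stored...
    for_capacity = math.ceil(stored_tb / (drive_tb * max_utilization))
    for_iops = math.ceil(workload_iops / drive_iops)   # ...but not the IO count
    return max(for_capacity, for_iops)


# Hypothetical moderate-IO pool: 100 TB of data, 2 TB drives filled to at most 75%,
# 4,500 IOPS of workload, 150 IOPS per drive.
for ratio in (1.0, 2.0, 4.0):
    print(f"{ratio:.0f}:1 -> {drives_needed(100, 2, 0.75, 4_500, 150, ratio)} drives")
```

With these inputs the counts are 67, 34 and 30 drives: beyond about 2:1 the pool becomes IOPS-bound, which is why moderate IO activity matters for realizing the savings.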

Action item: The storage industry continues to be “Thriving Tomorrow on Yesterday's Problems.” Thin provisioning is a good example: it first appeared in 1965 on mainframes and again in the early 2000s for non-mainframe systems. Expect compression of primary disk storage to make a more successful appearance than it did in the 1990s. Today’s economics mandate optimizing storage at every opportunity.

Primary storage data compression: A services approach

While there’s not a direct correlation between installing an IBM Real-time Compression appliance and unplugging equipment, primary data compression is poised to make an important contribution to the storage efficiency mandate.

As we heard from Shopzilla and other practitioners, including John Blackman of Safeway, a service-oriented approach to storage enables organizations to simplify infrastructure and improve storage efficiency.

Their advice is to consider including primary data compression as a component within a services offering (e.g., file services). A services approach stresses that clients shouldn’t care what’s running; rather, they should care that infrastructure is running well and meeting SLAs.

Further, a services approach, combined with a heterogeneous compression engine, enables IT to charge back at an agreed-upon rate and retain 'profits' to defray IT costs.
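One possible chargeback model, under assumptions of our own: business units are billed an agreed rate per logical (uncompressed) TB, while IT's costs scale with the physical TB actually stored after compression. The difference is the 'profit' IT can retain. All rates below are hypothetical.

```python
def chargeback_margin(logical_tb: float, rate_per_logical_tb_yr: float,
                      cost_per_physical_tb_yr: float, reduction: float) -> float:
    """Annual margin IT retains when billing logical TB but paying for physical TB."""
    physical_tb = logical_tb * (1 - reduction)  # capacity actually consumed after compression
    revenue = logical_tb * rate_per_logical_tb_yr
    cost = physical_tb * cost_per_physical_tb_yr
    return revenue - cost


# Hypothetical: 200 TB of file services billed at $1,500/TB/yr, physical storage
# costing IT $2,000/TB/yr, and a 50% reduction from compression.
print(chargeback_margin(200, 1_500, 2_000, 0.5))  # 100000.0
```

At this hypothetical rate IT would run a deficit without compression (`reduction=0`), so the agreed rate depends directly on the reduction the compression engine delivers.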

Action item: Users should immediately investigate the applicability of compression to primary storage. Implementing a compression engine such as that offered by IBM Real-time Compression can enable IT to bundle storage optimization as a component of a broader services offering. The drawback of this approach is fewer technology choices for the many individual IT groups. However, this constraint also limits diversity, which improves cost efficiency and productivity.

Expanding the definition of a service level agreement (SLA)

The classic components of a service level agreement (SLA) are availability and response time. In the case of storage services, the availability of the service for (say) a transaction system is measured as a percentage (e.g., 4 nines or 99.99%) and response time would be measured as average IO time in milliseconds (e.g., 10ms).
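An availability target translates directly into an annual downtime budget, which is a convenient way to compare SLA tiers. A minimal sketch, assuming a 365-day year; at four nines the budget is about 52.6 minutes per year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; leap years ignored


def allowed_downtime_minutes(availability: float) -> float:
    """Annual downtime budget implied by an availability target such as 0.9999."""
    return (1 - availability) * MINUTES_PER_YEAR


for target in (0.999, 0.9999, 0.99999):
    print(f"{target:.3%} availability -> {allowed_downtime_minutes(target):.1f} min/yr")
```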

However, at the in-line compression Peer Incite, a number of benefits to users emerged that traditional SLA metrics do not capture. Examples from the call include:

- Freshness of data – Shopzilla can update the shopping comparison data six times a day, whereas its competitors can update once every two days

- Backup window length – IBM Real-time Compression reduces the amount of data actually stored by 50%, which reduces the amount of data that has to be backed up (data in compressed form does not have to be re-hydrated until the application actually requires it). For Shopzilla, the reduction in backup window pressure was 1.5 hours. This helps keep the application available to users in different time zones around the world, and for the developers it means an additional 1.5 hours in which effective work can be done.

- Business risk – the shorter backup window reduces the RPO of disaster recovery services. However, inserting compression also increases the risk of data loss by introducing an additional technology. For Shopzilla, the long-term benefits of in-line data compression outweighed any short-term risk from introducing a new technology.

Action Item: In evaluating any significant change to storage services, IT executives should ask, “Does the proposed change to a storage service positively affect the ability of end users (employees, partners and/or customers) to perform their jobs?” In the case of Shopzilla, in-line data compression improved the value of IT to the business.