Data deduplication running on backup servers can save a great deal of backup time and disk space, but does it have a similar impact on data recovery operations? The question is controversial, but we believe the answer is no. In fact, deduplication can slow recovery down a little.

The reason is that, compared with straight disk-to-server recovery, all the deduped data must be reconstructed, and that process adds overhead. This is true regardless of whether the data was deduped at the source (host) or at the target (appliance or storage device), although recovery is likely to be a little faster if the data was deduped at the target. If the data was deduped at the host, the host has to issue many extra read operations to reconstruct the data in full, particularly to refresh its index cache. A target that deduped the data also has to perform extra reads but, overall, recovery will be faster than when the data was deduped at the source.
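To make the reconstruction overhead concrete, here is a minimal sketch of a toy deduplication store. It assumes fixed-size chunks and an in-memory dictionary as the chunk index; the names `dedupe`, `restore`, and `CHUNK_SIZE` are hypothetical and do not describe any vendor's implementation. The point is only that a restore needs one index lookup per chunk in the recipe, even when most chunks are duplicates that were stored once.

```python
import hashlib

CHUNK_SIZE = 4  # bytes; unrealistically small so the example is easy to follow


def dedupe(data: bytes, store: dict) -> list:
    """Split data into fixed-size chunks, keep each unique chunk once,
    and return the ordered list of chunk digests (the 'recipe')."""
    recipe = []
    for i in range(0, len(data), CHUNK_SIZE):
        chunk = data[i:i + CHUNK_SIZE]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # store only the first copy
        recipe.append(digest)
    return recipe


def restore(recipe: list, store: dict) -> tuple:
    """Rebuild the original bytes from the recipe.
    Returns the data and the number of chunk lookups performed."""
    lookups = 0
    parts = []
    for digest in recipe:
        parts.append(store[digest])  # one index lookup per chunk
        lookups += 1
    return b"".join(parts), lookups


store = {}
original = b"ABCDABCDABCDWXYZ"
recipe = dedupe(original, store)
restored, lookups = restore(recipe, store)
print(len(store), "unique chunks stored;", lookups, "lookups to restore")
```

In this toy case only two unique chunks are stored, yet the restore performs four lookups. On a real system those lookups become index and disk reads, which is the overhead described above.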

If only a little data is being recovered, the difference will be negligible, but if a large amount of data needs recovery, the total recovery time will be longer. When restoring over a LAN from a backup server with data deduplication, however, the LAN will be the limiting factor, not data reconstruction. Recovery over a WAN is another matter. While data deduplication significantly reduces the load on the WAN for backups (after the first backup or deduplication scan), a huge amount of data must still be sent over the WAN during a recovery. This problem gets some relief when two data deduplication servers operate in concert, one at each end.
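The limiting-factor argument can be sketched as a back-of-the-envelope estimate. The model below is a simplification: it assumes the network transfer and the data reconstruction run as a pipeline, so the slower stage sets the pace. The function name `restore_hours` and all throughput figures are hypothetical, chosen only for illustration.

```python
def restore_hours(data_tb: float, link_mb_per_s: float,
                  rehydrate_mb_per_s: float) -> float:
    """Estimate restore time in hours, assuming the slower of the two
    stages (network link or data reconstruction) is the bottleneck."""
    data_mb = data_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    bottleneck = min(link_mb_per_s, rehydrate_mb_per_s)
    return data_mb / bottleneck / 3600


# Hypothetical throughput figures, for illustration only.
REHYDRATE_MB_PER_S = 300.0
lan_hours = restore_hours(1.0, 100.0, REHYDRATE_MB_PER_S)  # LAN link
wan_hours = restore_hours(1.0, 10.0, REHYDRATE_MB_PER_S)   # WAN link

print(f"LAN restore: {lan_hours:.1f} h, WAN restore: {wan_hours:.1f} h")
```

With these assumed numbers, a 1 TB restore takes about 2.8 hours over the LAN and about 27.8 hours over the WAN. In both cases the link, not reconstruction, is the bottleneck, and the WAN case shows why restoring the full data set remotely is so much more painful than the deduplicated backups that preceded it.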

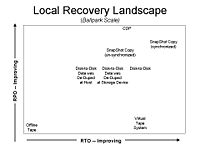

Figure 1 puts things in perspective. Far and away, snapshot copy techniques provide the best recovery times and recovery points for local recoveries, provided the snapshots were properly synchronized with the applications and databases. If they were not, it can take quite a while to figure out which snapshot to use. The same is true for log points. In either case, recovery time suffers. Snapshot technologies are also typically the most expensive to implement, given their vendor-specific nature and the often complex scripting required. Note that Continuous Data Protection (CDP) has been covered previously on Wikibon.

After snapshots, good old disk-to-disk (D2D) backups will provide the best recoveries, albeit with significantly worse recovery points and recovery times than those of snapshots. VTLs will typically provide better performance than traditional D2D, although performance will vary by vendor implementation. Appliances usually provide the best performance and lowest TCO, since the complexity of performance management, storage provisioning, and optimization is automated.

Recoveries from disk where the data has previously been deduped will have the same recovery points as disk-to-disk but somewhat longer recovery times, and performance often declines over time as more data is retained online. The actual impact will vary by algorithm. Solutions that rely on forward-referencing technology (SEPATON, Exagrid) will provide faster restore performance than those using reverse referencing (Data Domain, EMC, NetApp, Diligent, FalconStor).

Offline tape, of course, offers the worst recovery times but is the least expensive option. However, with the advent of deduplication solutions, their cost per TB can in many cases meet or even beat that of physical tape.

Action Item: Users considering data deduplication solutions must test recovery processes and verify they can meet their RTOs. Users should also consider synchronized snapshot copies for their tier 1 data – usually the data with the most stringent recovery objectives.
