Storage Peer Incite: Notes from Wikibon’s July 10, 2012 Research Meeting


Ever since the days of Alan Turing, the term "data" has meant, pretty much exclusively, structured data: data that fits neatly into rows and columns. With the advent of Big Data, and the proliferation of unstructured data and content, that's all changed.

As we now know, unstructured data and content contain a wealth of valuable information. Any salesperson, for instance, knows that people make buying decisions based on emotion, not simply on numerical calculations. Sociologists build careers on the study of unofficial influence networks that seldom correspond to office org charts and that are often decisive in both business and personal decisions.

The exciting promise of Big Data technologies is that they bring all of this unstructured data and content into the equation to enable much better decision-making. This is revolutionary. But none of this is automatic. Within five years of the introduction of the IBM 360-65, the revolutionary technology of the 1960s, 80% of department heads in business and government had been replaced. Within a decade of the introduction of the IBM PC, all of the minicomputer companies except HP had disappeared. The people and organizations that figure out how to harness Big Data to achieve practical business value will gain a tremendous competitive advantage. Those that do not will be at a tremendous disadvantage.

In the latest Peer Incite, the Wikibon community heard from two pioneers in harnessing unstructured data and content to achieve real business value: Brigham Hyde of Relay Technology Management and Sid Probstein of Attivio. Their particular focus is pharmaceutical research, a very expensive and high-risk business. However, the lessons they presented, which are discussed in the articles in this newsletter, apply horizontally to many organizations seeking to achieve business value from Big Data. We at Wikibon believe this to be a particularly significant Peer Incite and are pleased to provide this information to our community.

Bert Latamore, Editor

Deriving Business Value from Big Data Requires Unifying Data Types Through Common Ontologies

Introduction

To derive business value from Big Data Analytics, practitioners must incorporate unstructured data & content into the equation. This was the premise put forth by Brigham Hyde of Relay Technology Management to the Wikibon community at the July 10th, 2012 Peer Incite.

Why is unstructured data & content required to derive business value from Big Data?

There is much debate over the definition of Big Data. Some approach it from a tools and technology perspective, while others prefer the “three V’s” definition. However you define it, the end goal of any Big Data project is to deliver business value.

But where exactly does the value in Big Data lie? In a recent Wikibon Peer Incite call, Relay Technology Management’s Dr. Brigham Hyde zeroed in on the answer. Deriving value from Big Data, said Hyde, requires unifying unstructured content & data with structured data in a way that allows end-users to gain deeper insights than are possible with analysis of structured data alone.

It is widely accepted that, when it comes to analytics, more data beats smarter algorithms. By most estimates, more than 80% of the world’s data is unstructured or semi-structured. Therefore, to discover truly game-changing insights via analytics, unstructured and multi-structured content & data must be included in the underlying corpus.

What new tools and/or approaches are needed to harness unstructured data & content?

Traditional relational databases and related business intelligence tools are simply not up to the task of processing and analyzing large volumes of unstructured data & content in a time-efficient or cost-effective way. Nor are manual efforts, such as collecting data from various repositories into multiple siloed spreadsheets, adequate for harnessing the value of Big Data.

Therefore, new types of technologies and tools are needed. Specifically, Big Data requires emerging technologies, such as MPP analytic databases, Hadoop and advanced analytic platforms, to meet the volume, velocity, and analytic complexity requirements associated with unstructured data.

There’s more to the equation, however. In some industries such as life sciences and financial services, it is not enough to simply make unstructured content & data analysis available to business analysts and data scientists alongside traditional, structured data analysis. Rather, unstructured content & data must be unified with structured data sources by a common ontological layer that allows users to understand and visualize important correlations between multiple data types.

What are Ontologies and what role do they play in Big Data analytics?

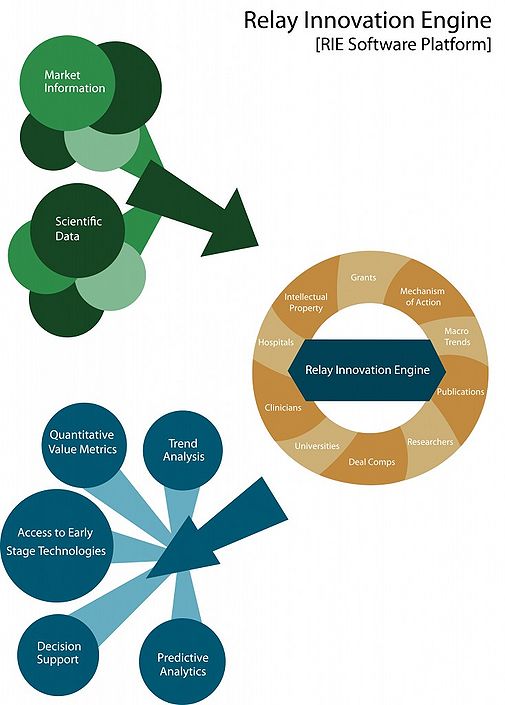

At Relay Technology Management, Hyde and his team have developed a SaaS-based application suite that allows doctors, scientists, and executives in the life sciences industry to better evaluate the likely success or failure of emerging drugs and related treatments. The suite is built on Attivio’s unified information access platform, which links unstructured content (such as journal articles, press releases, patents, and SEC documents) with structured, transactional data, such as the pricing history associated with a given new drug.

The company has also built nine ontological libraries specific to various sub-verticals in the life sciences market that essentially allow the platform to draw correlations based on sometimes subtle connections between these varying data assets. Hyde explains, “Ontologies are massively important … If I’m talking about lung cancer, it sounds like one thing. It’s not just one thing. There’s small cell lung cancer, there’s non-small cell, there’s different stages. You would also call certain types of lung cancers solid tumors because it’s a tumor type. So understanding ontologically that those things are connected and being able to relate them across relational databases and document sets into one common entity is really the crucial piece.”

In Relay’s case, for example, these ontological libraries allow end-users not just to understand whether a given drug or treatment is likely to be successful from a clinical perspective, but also to take into account the state of patents or FDA approval/disapproval when scoring assets.
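Hyde's lung cancer example can be made concrete with a small sketch. It shows how an ontology can roll records that are tagged in different ways up to one common entity. The is-a hierarchy, the synonym table, the record IDs, and the functions `canonical`, `ancestors`, and `index_by_concept` are all hypothetical and invented for illustration. This is not Relay's ontology or any vendor's API.

```python
from collections import defaultdict

# Hypothetical toy ontology: each concept maps to its broader (is-a) concepts.
# Illustrative only; not Relay's actual ontology libraries.
IS_A = {
    "small cell lung cancer": ["lung cancer"],
    "non-small cell lung cancer": ["lung cancer"],
    "lung cancer": ["solid tumor"],
    "solid tumor": [],
}

# Hypothetical synonym table: surface forms found in documents -> canonical concept.
SYNONYMS = {
    "sclc": "small cell lung cancer",
    "nsclc": "non-small cell lung cancer",
}


def canonical(term: str) -> str:
    """Normalize case and expand known abbreviations to a canonical concept."""
    t = term.strip().lower()
    return SYNONYMS.get(t, t)


def ancestors(concept: str) -> set[str]:
    """Return the concept itself plus every broader concept reachable via is-a links."""
    seen: set[str] = set()
    stack = [concept]
    while stack:
        c = stack.pop()
        if c not in seen:
            seen.add(c)
            stack.extend(IS_A.get(c, []))
    return seen


def index_by_concept(records: list[tuple[str, str]]) -> dict[str, list[str]]:
    """File each record ID under every concept its tag rolls up to."""
    index: dict[str, list[str]] = defaultdict(list)
    for record_id, term in records:
        for concept in ancestors(canonical(term)):
            index[concept].append(record_id)
    return dict(index)


# A journal article, a structured trial row, and a patent, each labeled differently.
records = [
    ("article-17", "NSCLC"),
    ("trial-row-42", "small cell lung cancer"),
    ("patent-9", "solid tumor"),
]
index = index_by_concept(records)
print(sorted(index["lung cancer"]))  # ['article-17', 'trial-row-42']
print(sorted(index["solid tumor"]))  # ['article-17', 'patent-9', 'trial-row-42']
```

None of the three records share a label. Even so, a "lung cancer" query finds both the NSCLC article and the small cell trial row, and a "solid tumor" query also picks up the patent. Linking these across sources is the "one common entity" role Hyde describes.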

What are the cultural challenges associated with Big Data Analytics?

Among other capabilities, Relay Technology Management's platform synthesizes the analytics of structured and unstructured data around a given pharmaceutical asset to assign a score to that asset, which Relay calls its Relative Value Index (RVI). The RVI allows end-users to more easily compare the likely success or failure of various assets against one another.

However, scientists and others in the life sciences industry have been performing manually intensive analysis to aid decision making for years. And, for better or worse, they are reluctant to change methods and embrace a new, more data-driven approach.

To increase user adoption, Relay understood it needed to get scientists and others to “trust” its RVI. It does so by providing users drill-down capabilities. In other words, Relay allows users to “trust but verify.”

“Scientists in particular are cynical about data … and trends and algorithmically driven things,” said Hyde. “We give them quant, but they are just one click away from the document that is linked to that quantitative measure.”

The result is that users can learn how and why Relay assigned a particular RVI to a particular asset. Over time, they become confident enough in the system to begin trusting its results once a particular threshold is met.
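The "trust but verify" pattern can be sketched as a score that never loses track of the documents behind it. This is a minimal sketch under stated assumptions. The RVI formula is not described here, so the weighted average of per-signal means below is a hypothetical stand-in. The class names `Evidence` and `AssetScore`, the signal labels, and the document IDs are likewise illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    """One source document and its normalized contribution to a score signal."""
    doc_id: str   # hypothetical document identifier (article, trial, patent)
    signal: str   # hypothetical signal name, e.g. "clinical" or "patent"
    value: float  # normalized contribution in [0, 1]


@dataclass
class AssetScore:
    asset: str
    evidence: list[Evidence] = field(default_factory=list)

    def score(self, weights: dict[str, float]) -> float:
        """Weighted average of per-signal means.

        Hypothetical formula for illustration only; it is not Relay's RVI.
        """
        by_signal: dict[str, list[float]] = {}
        for e in self.evidence:
            by_signal.setdefault(e.signal, []).append(e.value)
        if not by_signal:
            return 0.0
        total_weight = sum(weights[s] for s in by_signal)
        weighted = sum(weights[s] * sum(v) / len(v) for s, v in by_signal.items())
        return weighted / total_weight

    def drill_down(self, signal: str) -> list[str]:
        """The 'one click away' step: list the documents behind one signal."""
        return [e.doc_id for e in self.evidence if e.signal == signal]


card = AssetScore("drug-alpha", [
    Evidence("article-1", "clinical", 0.75),
    Evidence("trial-2", "clinical", 0.5),
    Evidence("patent-3", "patent", 0.25),
])
weights = {"clinical": 3.0, "patent": 1.0}
print(card.score(weights))          # 0.53125
print(card.drill_down("clinical"))  # ['article-1', 'trial-2']
```

The important design choice is keeping the evidence attached to every component. A user who doubts the number can open the exact documents that produced it, which is how confidence builds up before anyone relies on a threshold.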

Another important tactic Relay uses to increase user adoption is to partner with data visualization providers Tableau Software and TIBCO (Spotfire). Such data visualization tools allow users to view data in the manner that makes the most sense to them and to perform hypothesis-driven analysis.

Whatever techniques are used, overcoming end-user reluctance to embrace new, data-driven decision-making technologies and processes is a challenge that cannot be overlooked when embarking on Big Data Analytics projects.

The Bottom Line

Deriving business value from Big Data Analytics requires unifying diverse data sources, including unstructured data & content, and deriving intelligence across these previously siloed sources. While analyzing any one data source provides marginal value, unified Big Data Analytics returns far more powerful insights.

CIOs must also continue to improve communication and collaboration between IT and business units to ensure successful Big Data projects. Combining internal and external data sets in a way that enables insightful analysis requires input from two groups. The first is data architects and engineers, who know the data structures. The second is business analysts and other line-of-business workers, who have the domain knowledge to know which questions to ask of the data.

Action item: Enterprise CIOs and others responsible for business analytics practices can no longer afford to ignore unstructured data & content, or relegate it to second-class data citizenship. Such unstructured data & content must be integrated into business analytics processes at a foundational level, leveraging an ontological or semantic layer that correlates logical connections across multiple data assets regardless of type. CIOs must also be sure that Big Data platforms built today are flexible enough to handle new and emerging data types in the future and provide useful tools that allow end-users to ask and get answers to their important business questions. Only by fully integrating unstructured data & content into business analytics processes and giving users meaningful ways to derive insights will enterprises reap the true value of Big Data Analytics.

CIOs need to create a shared data vision for the organization to improve the bottom line

Introduction

On July 10, 2012, the Wikibon community participated in a Peer Incite entitled Combining Unstructured and Structured Data to Deliver Big Data Business Value. The community learned from the experiences of Brigham Hyde, Ph.D., managing director at Relay Technology Management, how leveraging both structured and unstructured data, including the full complement of patent documents from the U.S. Patent and Trademark Office, can reduce decision-making risk for pharmaceutical companies and their investors. We were also joined by Sid Probstein, CTO of Attivio, a software company specializing in enterprise search solutions and unified information access.

Although the Peer Incite focused on health information, disease, and the mass of data that surrounds pharmaceutical companies' decisions to move forward with particular kinds of research, the discussion has clear implications for mainstream CIOs. Many of them are looking for ways to use big data to enhance decision making, and the bottom line, in their own organizations.

Doubtless, many organizations are still living in an insular, spreadsheet-centric world. In fact, I’ve seen them. These are the organizations that struggle to make heads or tails of their data, to develop information sources that can assist in decision-making, and to make a decision stick. Their plight is defined by struggle, even if they don’t know it. The reasons: incomplete data analysis and, as a result, a lack of true faith in the decisions derived from it. Decisions are constantly rehashed as new details are learned or as people uncomfortable with the incomplete information continue to raise concerns.

Although these organizations face difficulty in the short term, their CIOs have the opportunity to map a data vision that leads the organization to true insight derived from complete data. Decisions powered by that data can then be carried out with conviction, based on the trust that the full data set engenders. This is how decisions should be carried out.

Two questions are important to consider in any decision:

- How certain are we that the decision we’ve made is the right one based on the data at hand?

- At what point are we certain we’re making the best possible decision?

Getting to full and complete trust in data can take some time, but the benefits are vast. The vision that carries this forward should consist of three or four “phases,” for lack of a better term. Whether a fourth phase applies depends on the nature of the organization.

CIOs are often perceived by business unit heads as trying to stay ahead of data problems. However, they’re also seen as out of touch with the people who use the data to make decisions. This disconnect means that the CIO needs to engage broadly across the organization for a Big Data initiative to truly succeed.

Underlying premise

CIOs should start with the idea that the organization wants to begin using unified information access (UIA) on the company’s next project. This environment provides tools that enable broad levels of functionality in search, business intelligence, analytics, workflow, and dashboards.

This means the CIO needs to get the expertise on board, get the infrastructure in place, and merge existing data silos into a cohesive whole. It also means that everybody should get some level of statistics training in order to understand the basic concepts underlying the data. This doesn’t mean going out and hiring a bunch of sophisticated Bayesian modelers. If you provide good tools out of the box, those with enough training will be able to understand what they’re doing.

Get the data structure right

Once you accept the reality of the data situation, you can begin to take real action. First, get the internal data house in order with a focus on improving the overall quality of the data (see Data Quality is too Important to Ignore). Don’t expect internal data cleanup efforts to be easy. They take a lot of work (see Leadership challenges of a data cleansing effort).

During this effort, bring together as many sources of internal data as possible and establish internal data governance structures and processes intended to keep data clean for the future.

Create a data access layer to make the data useful to internal end customers

Keep users engaged in the process by working with the organization to identify tools and services that can be used to access data in a seamless way.

Real business value begins to emerge as the organization creates intelligence across what used to be disparate data silos. At this point, the organization should start seeing the value of the process. The walls come down and information sources become more cohesive and more complete, which leads to greater confidence in the decisions made with the data.

Integrate independent sources of data

But no matter how much internal data an organization has, there is a wider ecosystem from which to pull data. There are formal data sources and more informal ones, such as news articles. The internal data may paint a picture, but the external data will add the frame and fill in the corners of the canvas.

Every industry has industry-specific data sources that can be leveraged to help improve decisions across the entire industry. After all, if getting your own data house in order results in better support for decisions, what if you could harness the power of decisions made across the entire industry including that of industry analysts and even individual news articles that could have an impact on your direction?

Combine internal and external data sources and determine best ways to monetize data

With a fully integrated set of data elements, fully rationalized reporting and dashboards, and business intelligence and decision-making based on a complete data picture, decisions can be made with confidence. They can also be made with an understanding of all of the pros and cons that accompany them.

Depending on the organization, this could be the time when the initiative can become another line of business. After all, if the data has value to you, some form of the data may have value to others.

Action item: In today’s world, speed is everything, and the faster that an organization can gather information for good decision making, the faster that organization can launch initiatives to support the best decision and get to market. This is what impacts the bottom line in a significant way.

CIOs need to embrace the "information" part of their titles and harness the power of the organization around the shared goal of improved decision-making using quality data. By partnering with the organization as a whole, the CIO can create a vision that improves internal data, integrates external data sources and eventually monetizes the investment.

Technology Integration and Big Data: Extracting Value

Most people think of Big Data as being about volume, but there are other critical dimensions, such as velocity and variety. The Relay TM case shows that while volume is certainly important, the main driver of business value comes from looking across many disparate sources, both internal and external. A unified view is essential to making this happen.

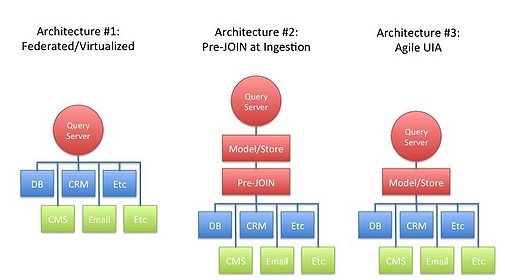

Architecturally, there are a few different routes to achieve unified information access (UIA) across silos. The diagram above presents the three most prevalent options.

- Option 1 is a federated or virtualized approach. A client calls a query server and provides details on the information it needs. The query server connects to each of many sources, both structured and unstructured. It passes the query to each source, then aggregates the results and returns them to the client. This model seems sensible because it doesn't require any normalization, but building it is complex. It is also a "brute force" approach that won't perform well on cross-silo analysis when any result set is large.

- Option 2 is a pre-JOINed approach. Data is ingested and normalized into a single model through an ETL process, and a query server resolves queries against it. This model has more consistent performance. However, it trades off flexibility at query time: in order for a new relationship to be used, all of the data must be re-ingested and re-normalized. The ingestion logic is also challenging, because data must be modeled prior to ingestion and the keys between data items must be pre-defined.

- Option 3 is the true agile UIA approach. Data is ingested and modeled just as it was in the source repository, typically in tables with keys identifying relationships. Flat repositories such as file systems also become tables. This model has consistent performance and offers complete flexibility at query time, because any relationship can be used, even one that is not formal in the data. The ingestion logic is far simpler than in option #2, because it does not require a normalized model and thus avoids the ETL step.
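The three options are easiest to compare on toy data. The sketch below uses two hypothetical in-memory silos, a structured pricing table and a small document store, with invented names and values. Plain Python stands in for a real query server. It illustrates the access patterns only and does not model Attivio's or any other product's implementation.

```python
from collections import defaultdict

# Two hypothetical silos, kept in the shape of their source systems.
PRICING = [
    {"drug_id": "D1", "price": 120.0},
    {"drug_id": "D2", "price": 80.0},
]
DOCUMENTS = [
    {"doc_id": "pr-1", "drug_id": "D1", "text": "Phase III trial meets endpoint"},
    {"doc_id": "pat-7", "drug_id": "D2", "text": "Patent granted for formulation"},
    {"doc_id": "pr-2", "drug_id": "D1", "text": "FDA accepts filing"},
]


def federated_lookup(drug_id):
    """Option 1: fan the request out to every source at query time, then merge."""
    prices = [r for r in PRICING if r["drug_id"] == drug_id]  # query to source A
    docs = [d for d in DOCUMENTS if d["drug_id"] == drug_id]  # query to source B
    return {"prices": prices, "docs": docs}


def build_pre_joined_model():
    """Option 2: ETL into one normalized model. The drug_id relationship is fixed
    at ingest, so using a different relationship means rebuilding the model."""
    model = {r["drug_id"]: {"price": r["price"], "doc_ids": []} for r in PRICING}
    for d in DOCUMENTS:
        model[d["drug_id"]]["doc_ids"].append(d["doc_id"])
    return model


def query_time_join(left, right, left_key, right_key):
    """Option 3: tables are ingested unchanged, and the join key (formal or
    derived) is chosen only when the question is asked. A simple hash join."""
    buckets = defaultdict(list)
    for row in right:
        buckets[right_key(row)].append(row)
    return [(lrow, rrow) for lrow in left for rrow in buckets.get(left_key(lrow), [])]


def by_drug(row):
    return row["drug_id"]


pairs = query_time_join(PRICING, DOCUMENTS, by_drug, by_drug)
print([(p["drug_id"], d["doc_id"]) for p, d in pairs if p["price"] > 100])
# [('D1', 'pr-1'), ('D1', 'pr-2')]

# A relationship that is not formal in the data: match documents to an agency
# list using a key derived from the text at query time (hypothetical heuristic).
AGENCIES = [{"agency": "FDA"}]
derived = query_time_join(
    AGENCIES, DOCUMENTS, lambda a: a["agency"], lambda d: d["text"].split()[0]
)
print([d["doc_id"] for _, d in derived])  # ['pr-2']
```

Supporting the agency match under option 2 would mean changing `build_pre_joined_model` and re-ingesting everything. Option 3 needed only a new key function. Option 1 rescans each source on every request, which is why it struggles when result sets are large.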

Selecting one of these architectures depends heavily on the use case. For solutions that simply need to aggregate information from multiple sources, architecture #1 can be made to work, especially if most of the data is structured. Solutions that require relational algebra might try approach #2 if there are relatively few sources, with limited growth of sources over time. It seems to work particularly well for e-commerce sites where the catalog is central to the experience. Architecture #3 is most suited for integrating multiple silos, at scale, across multiple domains, or for solutions that may support numerous types of analysis.

Action item: Use a UIA architecture for an upcoming strategic project. This will get your organization and colleagues thinking about how they can build solutions that connect the dots, instead of just creating more silos that require costly and time-consuming integration efforts.

Big Data Vendors Need To Embrace Data Unification and Scaling Challenges

In the scramble to become relevant in the brave new world of Big Data, a full spectrum of technology vendors has entered the market. It ranges from analytics and search vendors to cloud-enabled service providers and high-performance storage manufacturers.

All of the industry’s largest enterprise-focused vendors have made numerous acquisitions over the last two years to fill out their Big Data solutions portfolios. However, organizations may face the task of unifying and analyzing massive amounts of data culled from a variety of structured, semi-structured, and unstructured data sources. In that situation, a big-vendor solution is not necessarily better.

During a recent Wikibon Peer Incite broadcast featuring Brigham Hyde, Managing Director of life science analytics firm Relay Technology Management and Sid Probstein, CTO for unified information access (UIA) software provider Attivio, panelists and participants discussed performance requirements as well as the need to be able to handle large volumes of data from a variety of sources using a single tool and dashboard.

Hyde discussed the difficulties bio-informatics specialists face when trying to gather all relevant data into one place. “Relational databases don’t scale and have no logical connection to documents and ontological libraries," he said. "Our research demands much more data variety and volume than any traditional, structured database-oriented approach can deliver. We also need to help our customers more easily visualize data and offer them a choice of query options including SQL and contextual search.”

Probstein shared how Attivio’s Active Intelligence Engine (AIE) allows customers like Relay to quickly build relationships between large structured and unstructured data sources across many different repositories. It does this using advanced text search and SQL queries, while enabling concept search and relevancy boosting.
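The sketch below gives a rough idea of mixing SQL predicates with text matching in a single query. It uses Python's built-in `sqlite3` module with an in-memory database. The schema, drug names, documents, and the helper `drugs_mentioning` are hypothetical. SQLite's `LIKE` is only a crude, case-insensitive substring match. It stands in for real full-text search, concept search, or relevance ranking and does not represent how Attivio's AIE works.

```python
import sqlite3

# Hypothetical schema: one structured table and one table of document text.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE drugs (drug_id TEXT PRIMARY KEY, name TEXT, phase INTEGER);
CREATE TABLE documents (doc_id TEXT PRIMARY KEY, drug_id TEXT, body TEXT);
""")
conn.executemany("INSERT INTO drugs VALUES (?, ?, ?)", [
    ("D1", "drug-alpha", 3),
    ("D2", "drug-beta", 2),
])
conn.executemany("INSERT INTO documents VALUES (?, ?, ?)", [
    ("pat-7", "D1", "Patent granted for extended-release formulation"),
    ("pr-1", "D1", "Phase III trial meets primary endpoint"),
    ("pat-9", "D2", "Patent application filed for new indication"),
])


def drugs_mentioning(term: str, min_phase: int) -> list[tuple[str, int]]:
    """Count matching documents per drug, filtered by a structured column."""
    rows = conn.execute(
        """
        SELECT d.name, COUNT(*) AS hits
        FROM drugs AS d
        JOIN documents AS doc ON doc.drug_id = d.drug_id
        WHERE d.phase >= ?                      -- structured predicate
          AND doc.body LIKE '%' || ? || '%'     -- crude text predicate
        GROUP BY d.name
        ORDER BY hits DESC, d.name
        """,
        (min_phase, term),
    )
    return rows.fetchall()


print(drugs_mentioning("patent", 3))  # [('drug-alpha', 1)]
print(drugs_mentioning("patent", 2))  # [('drug-alpha', 1), ('drug-beta', 1)]
```

The point is that one question ("late-stage drugs with patent activity") needs both kinds of data at once. A production system would replace `LIKE` with a proper text index and ontology-aware matching.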

Relay also announced that it has teamed up with Attivio and TIBCO to deliver its Relay Innovation Engine, a SaaS-based solution that puts data discovery in the hands of life sciences companies. TIBCO’s Spotfire offers real-time BI and data visualization capabilities. Hyde remarked, “The combination of Attivio’s AIE, TIBCO’s Spotfire, and Relay’s proprietary ontologies and data architecture gives our customers the ability to access, explore, and visualize massive amounts of data in an easier, more meaningful way.”

Many so-called Big Data tools may work on a small data set but break down when they need to scale. Hyde pointed out that the more relevant data sources you can mine and combine, the richer the results. Such sources include patent information, clinical trial and claims information, feeds from collaboration or content management systems, social media sites, and databases. “Human-generated content is the key to enriching information.”

Too many vendors have no concept of why data can get big. A stack-based, one-of-everything approach creates more complexity and delivers less value. SaaS models for analytics solutions are also becoming much more viable as cloud-enabled solutions continue to gain acceptance in the marketplace. Meanwhile, the market for buying and selling data is exploding in just about every industry, presenting new business opportunities.

Action item: Vendors in or entering the Big Data space need to deliver solutions that handle a variety of structured and unstructured data types and sources. Big Data solutions also need to scale to accommodate ever-increasing volumes of data in a world where the thought process is, “more data is better.” Vendors need to offer SaaS- or cloud-enabled solutions where they host and secure data, and they should also look for ways to monetize their internal data analytics capabilities.

Organizing for Big Data Business Value

In the July 10, 2012 Peer Incite, Brigham Hyde, Ph.D., managing director of Relay Technology Management, Inc.; Sid Probstein, CTO of Attivio; and the Wikibon community discussed best practices for deriving business value from Big Data analytics. The community agreed that the keys to deriving maximum insights from big data analysis are methods and tools to combine structured and unstructured data sources. Relay Technology has leveraged technology from Attivio to analyze high-quality, unstructured data from the patent office, from research papers in medical journals, and from SEC documents, to create insightful linkages to the structured data, including drug usage and pricing.

If the goal of the Big Data initiative is deriving business value, however, it is just as important to build a data analytics team made up of both analytics specialists and content or business experts. Smaller teams of higher-quality experts that include domain-specific business experts will create more business value than large teams of data analysis professionals who lack an understanding of the language of the domain. Domain experts provide the language and data scientists provide the structure. Domain-specific linguistic knowledge is vital when building ontologies. These ontologies should therefore be driven by business or domain experts, rather than by data scientists and analytics specialists.

Relay was founded by individuals with deep expertise not only in pharmacology but also in investment decision making. By combining their domain expertise with the expertise of the data scientists, Relay is helping investors and drug companies make better drug development decisions for both efficacy and potential for return on investment.

Action item: Organizations that wish to create maximum business value from big data analytics projects should start with small teams, even of two people: a data scientist and a business expert. Together, they can develop and curate relevant sources of unstructured data to combine with structured data sources. They can also create ontologies for big data analysis that make sense to business decision makers and derive maximum business value for the organization.

A Checklist for Strategic Big Data Management: from Data Marts to Data Markets

Premise

The effective management and use of Big Data is a competitive and survival imperative for all mid-size and large organizations over the next decade.

Big Data Stage 1: Internal Data Ontology

- IT sells and funds a Big Data vision to the board and senior line of business managers, and updates and resells constantly;

- Big Data is not Hadoop, or indeed any software package, set of software packages or piece of hardware;

- Big Data includes structured data, unstructured data, images and video: particular attention should be paid to creating video metadata;

- Big Data becomes more valuable when combined with more Big Data;

- Organizations should start with the Big Data they already create and collect in stage 1;

- Big Data stage 1 is creating a Big Data organization and data ontology;

- The key Big Data resource is data analysts;

- Good Big Data analysts are a scarce and valuable commodity and should work together;

- Four major skills are required for a Big Data analyst team:

  - Advanced statistical & numerical skills;

  - Deep knowledge of the industry & part of the enterprise they work for;

  - Large scale data analysis skills;

  - Deep knowledge of industry and government data sources, their reliability, extractability and cost;

- Data analysts should be few, well equipped, and paid well for success;

- The key output metric for data analyst teams is actioned insights;

- Every Big Data insight should result in:

  - Change(s) in business processes;

  - Business processes monitored by real-time capture and use of the data found in the original data analysis;

- The big data ontology will extend into the production systems as the systems are modified to help create, index and manage big data metadata in real time.

Big Data Stage 2: Adding External Data

- Stage 2 of Big Data is extending the ontology to external data sources, and linking updates to existing and new applications to enhance the ontology;

- Moving and ingesting large amounts of Big Data should be avoided like the plague:

  - The cost of moving Big Data is too high and it takes too long;

  - Process remote Big Data remotely and combine extracts remotely or locally;

- Learn how to evaluate and use external sources, government sources, and external data providers;

- As much as possible of external Big Data processing should be outsourced.
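The advice to process remote data remotely and combine only extracts can be sketched with decomposable aggregates. Each site reduces its own rows to a small summary, and only the summaries travel. This minimal sketch assumes the question can be answered from counts and sums. The site names and values are hypothetical, and `summarize_at_source` and `combine` stand in for whatever remote processing service is actually used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Extract:
    """Small summary shipped from a remote site instead of its raw rows."""
    site: str
    count: int
    total: float


def summarize_at_source(site: str, values: list[float]) -> Extract:
    """Runs where the data lives; only two numbers leave the site."""
    return Extract(site, len(values), float(sum(values)))


def combine(extracts: list[Extract]) -> float:
    """Global mean from partial counts and sums (not an average of averages)."""
    n = sum(e.count for e in extracts)
    return sum(e.total for e in extracts) / n if n else 0.0


extracts = [
    summarize_at_source("site-a", [10.0, 20.0, 30.0]),
    summarize_at_source("site-b", [40.0]),
]
print(combine(extracts))  # 25.0
```

Averaging the two site means would give (20 + 40) / 2 = 30.0. That is wrong whenever sites hold different numbers of rows, which is why each extract carries its count as well as its total.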

Big Data Stage 3: Participating in Data Markets

- Stage 3 of Big Data is creating and selling big data constructs on an ongoing basis. The constructs become ever more complex and valuable, each version building on the last;

- The major revenue and profit from big data will be made by data providers who sell access to their data (via APIs) and extracts of it;

- Very large (and potentially profitable) data providers will be companies such as Google, Microsoft, Yahoo, telecommunication companies and Government institutions;

- The value of a specific Big Data model or construct will decline over time as new constructs and connections are created;

- All organizations should be both data providers and data consumers, buying and selling access to data as the paradigm changes from data marts to data markets;

- Creation of Big Data will need to be built into applications, products and process design: particular attention should be paid to building in mechanisms to return information about the deployment and value of products and services;

- New system designs using flash and other persistent storage technologies will drive a top-down process flow where production application and big data applications run in parallel against the same data input streams, and where the analytics feed back into production applications in real-time.

The winners in big data will be organizations that combine data from many sources, have the skills and DNA to use the results to improve their own endeavors and learn how to trade in the big data market.

Action item: Creating and implementing a Big Data strategy is a vital project that should be strongly coordinated by IT. Big Data works best when connected to other big data, both inside and outside of the organization.