
Storage Peer Incite: Notes from Wikibon’s November 2, 2010 Research Meeting


Metadata, data about data, is not a new concept in the IT industry. Low-level metadata, such as the date and time a data entity was created and the identity of its creator, has long been captured routinely by a wide variety of applications. To provide one non-technical example, when you open an edited document in your favorite word processor and see the name of its owner and of the various people who have edited it, you are looking at metadata. To cite another, the relationships among data entities in a relational database are defined in metadata.

Traditionally, metadata has been fairly simple and low-level. In the age of cloud services, however, it is taking on a much more important role and becoming more complex. If you buy books on Amazon.com, for instance, you are familiar with the suggestions it provides based on your past purchases. Those suggestions come from analyzing metadata that links the books you buy to other customers who bought them, and to the other books those customers purchased. That is getting reasonably complex. But that, we believe, is only the beginning. In the future, metadata will span the cloud to link data entities on different computers, in different databases, and on different services, enabling services that will become an important part of our lives in the 21st century.

Today that is impossible, because the basic tools and standards do not yet exist. In their paper "Angels in our Midst: Associative Metadata in Cloud Storage," Dr. Tom Coughlin and Mike Alvarado present a model architecture with two parts: automated tools they call "Guardian Angels," which watch over data relationships and create metadata, and a mechanism for managing and securing that metadata, which they call the "Invisible College." They presented this paper at Wikibon's Nov. 2 Peer Incite Meeting. The recording is available in the Wikibon Peer Incite Archive.

All this may sound pretty esoteric, the province of academics and techies at large cloud service providers. In fact, however, it is important to every organization doing business over the Internet, which today is practically every organization in existence. Complex metadata is the basis for targeted Internet advertising and for other automated customer, employee, constituent, and donor management services. Every organization therefore has a stake in developing effective standards and management tools for metadata, which means you need to push your vendors to develop and use open metadata standards.

G. Berton Latamore, Editor


Metadata in the Cloud: Creating New Business Models

As more valuable personal and corporate information is stored in both public and private clouds, organizations will increasingly rely on an expanded view of metadata both to create new value streams and to mitigate information risk. By their very nature, cloud architectures allow greater degrees of sharing and collaboration, which presents new opportunities and risks for information professionals.

Incremental value from cloud metadata will come from leveraging "associative context": information generated by software that observes users and their evolving relationships with content elements both inside and outside an organization. This software will create metadata that allows individuals and organizations to extract even more value from information.

These ideas were put forth by Dr. Tom Coughlin and Mike Alvarado, who presented them to the Wikibon community from their new paper, "Angels in our Midst: Associative Metadata in Cloud Storage."

What is Metadata and Why is it Important?

Metadata is data about data. It includes information such as when something was done, where it was done, the file type and format of the data, and the original source. The notion of metadata can be expanded to include how content is being used, who is using it, and whether relevant and valuable associations can be observed when multiple pieces of content are used together.

According to the authors, users should think about the different types and levels of metadata, ranging from low-level metadata that provides, for example, the physical location of blocks, all the way up to higher-level metadata that goes beyond description to include judgmental information. In other words: what does this content mean, and is it relevant to a particular objective or initiative? For example, will I like how it tastes? Will it be cosmetically appealing to me?

By leveraging metadata more intelligently, organizations can begin to extract new business value from information and potentially introduce new business models for value creation. Underpinning this opportunity are the relationships between content elements, which the authors refer to as associative metadata.

What's Needed to Exploit Associative Metadata?

According to Alvarado and Coughlin, if we're going to create associative metadata we need an agent that can be an objective observer. Since individual humans often create many of those relationships, software that is intelligent enough to create new metadata around those interactions and relationships is needed to watch what users are doing. The authors refer to this agent as a "Guardian Angel."
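To make the "objective observer" idea concrete, here is a minimal Python sketch, assuming the simplest possible form of associative metadata: counts of how often two content items are used in the same working session. The class `GuardianAngel`, its methods, the file names, and the co-use threshold are hypothetical illustrations, not the design from the Coughlin and Alvarado paper.

```python
from collections import Counter
from itertools import combinations


class GuardianAngel:
    """Hypothetical observer: records which content items a user touches
    together and derives associative metadata from co-use counts."""

    def __init__(self) -> None:
        self.pair_counts: Counter = Counter()

    def observe_session(self, items: set[str]) -> None:
        # Every unordered pair of items used in the same session counts as one co-use.
        for a, b in combinations(sorted(items), 2):
            self.pair_counts[(a, b)] += 1

    def associations(self, item: str, min_count: int = 2) -> dict[str, int]:
        # Associative metadata for `item`: other items co-used at least min_count times.
        related = {}
        for (a, b), n in self.pair_counts.items():
            if n < min_count:
                continue
            if a == item:
                related[b] = n
            elif b == item:
                related[a] = n
        return related


angel = GuardianAngel()
angel.observe_session({"q3-forecast.xlsx", "board-deck.pptx"})
angel.observe_session({"q3-forecast.xlsx", "board-deck.pptx", "memo.docx"})
print(angel.associations("board-deck.pptx"))  # {'q3-forecast.xlsx': 2}
```

The board deck and the forecast were opened together twice, so the observer records them as associated; a real agent would also need privacy controls and a way for users to override what it infers.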

Further, to extend metadata to an even richer and more complex level, the paper puts forth the notion of "The Invisible College," a tool for managing the relationships between Guardian Angels and a framework for creating a more intricate system of data systems.

What Different Types of Metadata Exist?

On the call, Todd, an IT practitioner, put forth a simple metadata model with three layers:

- Basic metadata: low-level data, e.g., block-level information about where data is stored and how often it is accessed.

- File-level metadata: more complex data from file systems.

- Content-level metadata: metadata that might be found in content management systems, such as file type, as well as more meaningful data, such as "Is this email from the CEO?" or "Is the mammogram positive?"

Each metadata type is actionable. For example, basic metadata can be used to automate tiering; file-system data can be used to speed performance, and high-level metadata can be used to take business actions. The key challenge is how to capture, process, analyze, and manage all this metadata in an expedient manner.
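The three-layer model can be sketched as one data type per layer plus one rule per layer. This is a hedged Python illustration: the class names, fields, the `cold_threshold` of five accesses, the small-file cutoff, and the specific actions are assumptions chosen to mirror the examples from the call (tiering, performance, business action), not any product's or the paper's API.

```python
from dataclasses import dataclass, field


@dataclass
class BasicMeta:        # block level: where data lives and how often it is read
    tier: str
    accesses_last_30d: int


@dataclass
class FileMeta:         # file-system level
    path: str
    size_bytes: int


@dataclass
class ContentMeta:      # content level: what the data means
    tags: set[str] = field(default_factory=set)


def actions_for(basic: BasicMeta, fmeta: FileMeta, content: ContentMeta,
                cold_threshold: int = 5) -> list[str]:
    """Map each metadata layer to an illustrative automated action."""
    actions = []
    # Basic metadata -> automated tiering of rarely read data.
    if basic.tier == "fast" and basic.accesses_last_30d < cold_threshold:
        actions.append("demote to capacity tier")
    # File-level metadata -> performance tweak for small, frequently read files.
    if basic.accesses_last_30d >= cold_threshold and fmeta.size_bytes < 1_000_000:
        actions.append("cache in memory")
    # Content-level metadata -> business action.
    if "from_ceo" in content.tags:
        actions.append("apply executive-communications retention policy")
    return actions


print(actions_for(BasicMeta("fast", 2), FileMeta("/mail/ceo-memo.eml", 4096),
                  ContentMeta({"from_ceo"})))
# ['demote to capacity tier', 'apply executive-communications retention policy']
```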

How Will these Metadata be Managed?

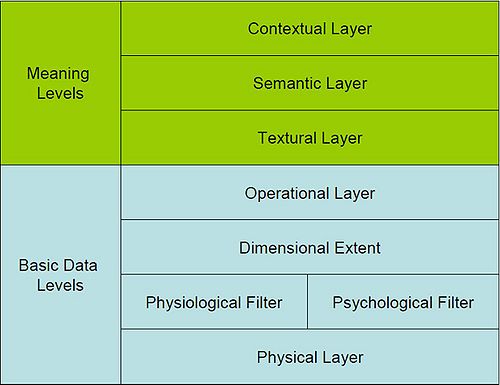

In their paper, the authors put forth a highly granular metadata taxonomy that is divided into two top-level groups:

- Meaning Metadata,

- Basic Metadata.

The paper defines an OSI-like model that runs from a physical layer up through an operational layer to semantic and contextual layers. Defining these layers, and how they interact and are managed, will be vital to harnessing the power of this metadata. A key limiting factor is raw system power and the ability to keep pace not only with user interactions but also with machine-to-machine (M2M) communications.

The community discussed applying Hadoop itself, a Hadoop-like framework, or semantic web technologies to evolving metadata architectures. Rather than shoving the data into a big data repository, the idea is to keep the metadata distributed and let parallel processing operate on it in place. By allowing the metadata to remain distributed, massive volumes of data can be managed and analyzed in real or near-real time, providing a step-function improvement in metadata exploitation.
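A minimal Python sketch of the "leave the metadata distributed" idea, assuming each partition lives on a different node: a map step summarizes metadata where it sits, and only the small partial results are merged. Threads on one machine stand in for cluster nodes, the record fields (`owner`, `accesses`) are hypothetical, and this is the MapReduce pattern in miniature rather than Hadoop's actual API.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def map_partition(records: list[dict]) -> Counter:
    # Runs next to the metadata: total accesses per owner within one partition.
    counts: Counter = Counter()
    for record in records:
        counts[record["owner"]] += record["accesses"]
    return counts


def merge(a: Counter, b: Counter) -> Counter:
    # Only these compact partial summaries move between "nodes".
    merged = Counter(a)
    merged.update(b)
    return merged


def aggregate(partitions: list[list[dict]]) -> Counter:
    # Map every partition in parallel, then reduce the partial results.
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(map_partition, partitions))
    return reduce(merge, partials, Counter())


partitions = [
    [{"owner": "alice", "accesses": 3}, {"owner": "bob", "accesses": 1}],
    [{"owner": "alice", "accesses": 2}],
]
print(aggregate(partitions))  # Counter({'alice': 5, 'bob': 1})
```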

Does a framework designed specifically for cloud metadata management exist today? Not per se, to the community's knowledge, but there are numerous open source initiatives, such as Hadoop, that could be applied to this problem and create new opportunities for metadata management in cloud environments.

What about Privacy and Security in the Cloud?

Cloud information storage works by providing access to stored assets over TCP/IP networks, whether public or private. Cloud computing increases the need to protect content in new ways, specifically because the physical perimeter of the organization and its data is fluid. The notion of building a moat to protect the queen in her castle is outmoded in the cloud, because sometimes the queen wants to leave the castle. Compounding the complexity of privacy and data protection is the expectation that associations and interactions will increase dramatically among users, between users and machines, and among machines themselves.

According to Alvarado and Coughlin, not surprisingly, one answer to this problem is metadata. New types of metadata, the authors say, will evolve to ensure the integrity, security, and privacy of content that is shared and created by individuals, groups, and machines. For example, metadata could evolve to monitor the physical location of files and ensure that their physical storage complies with local laws, such as laws requiring that certain data not be stored outside a particular country.
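As a hedged illustration of location-aware compliance metadata, the Python sketch below assumes each stored object carries two metadata fields, a data classification and the ISO 3166-1 country code where it physically resides, and checks them against a residency policy table. The field names, classifications, and the `RESIDENCY_RULES` table are hypothetical; actual rules would be set per jurisdiction by counsel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoredObject:
    object_id: str
    classification: str   # hypothetical data class, e.g. "personal-data-de"
    storage_country: str  # ISO 3166-1 alpha-2 code of the physical location


# Hypothetical policy: classification -> countries where it may be stored.
RESIDENCY_RULES: dict[str, set[str]] = {
    "personal-data-de": {"DE"},
    "health-record-ca": {"CA"},
}


def residency_violations(objects: list[StoredObject]) -> list[str]:
    """Return IDs of objects stored outside the countries their class allows."""
    violations = []
    for obj in objects:
        allowed = RESIDENCY_RULES.get(obj.classification)
        # Classes with no rule are treated as unrestricted in this sketch.
        if allowed is not None and obj.storage_country not in allowed:
            violations.append(obj.object_id)
    return violations


inventory = [
    StoredObject("a1", "personal-data-de", "DE"),
    StoredObject("b2", "personal-data-de", "US"),
    StoredObject("c3", "marketing", "US"),
]
print(residency_violations(inventory))  # ['b2']
```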

Location services are currently one of the hottest areas in business, but they lack a mechanism that provides the levels of privacy users want. Internet services are often introduced faster than the infrastructure can provide a mature framework for issues such as corporate policy and effective policy enforcement. The mechanisms described in the paper could make infrastructure better able to keep new services in sync with the necessary protections, which today are often added after the fact. If developers had an easily accessible toolkit before they deployed, we would be ahead of the game.

The authors state the following: "Whatever data system solutions arise, they will have identifiable characteristics such as automated or semi-automated information classification and inventory algorithms with significance and retention bits, automated information access path management, information tracking and simulated testing access (repeated during the effective life time of information for quality assurance), and automated information metadata reporting."

A key concern in the Wikibon community is the notion of balancing information value with information risk. Specifically, business value constricts as organizations increasingly automate the policing of data and information, and striking a risk reduction/value creation balance is an ongoing challenge for CIOs. The bottom line is that the degree of emphasis on risk versus value will depend on a number of factors, including industry, regulatory requirements, legal issues, past corporate history, culture, company status (i.e. private versus publicly traded), and other issues.

When will Architectures and Products Emerge?

Clearly, the authors' ideas are futuristic. The value of the exercise, however, is that Alvarado and Coughlin are defining an end point and helping users and vendors visualize what cloud computing makes possible in creating new business models. The emphasis on metadata underscores the importance of defining, understanding, and managing metadata to create new business opportunities and manage information risk. The role of metadata in this regard is undeniable, and while solutions on the market are limited today, they are beginning to come to fruition in pockets.

Key developments are occurring within standards communities to address this opportunity, and the authors believe these efforts are beginning to coalesce around the Angel and Invisible College notions. Specifically, they cite the evolution of Intel's work on Fodor. Mashups that combine Google mapping capabilities with GPS triangulation also make extensive use of metadata to create new value. Frameworks like Hadoop could be instrumental in providing fast analytics for big data, and semantic web technologies are emerging to address these issues. From a storage perspective, few suppliers are actively talking about this opportunity in their marketing, but several have advanced development projects under way to better understand how to exploit metadata for classification, policy automation, collaboration, and the like.

Action item: The economic crisis has accelerated initial cloud computing deployments, and many have focused on reducing costs. At the same time, numerous organizations are enabling new business models on cloud platforms. These initiatives are creating truckloads of data and metadata, and users and vendors must identify opportunities to both harness and unleash metadata, mitigating information risk while creating new value pathways. The degree to which this is possible will depend on an organization's risk tolerance, its culture, regulatory compliance edicts, and a number of other factors. Whatever the path, metadata exploitation will be fundamental to managing data in the 21st century.

Should CIOs Harness or Unleash Metadata?


Information metadata is the best wellspring for driving incredible utility and efficiency for individuals and corporations, if it is managed explicitly to deliver greater value, relevance, and context. Cloud-based services will catalyze the examination of new metadata models. CIOs need to craft, and then drive, agendas with key stakeholders that adopt extracting more value from corporate metadata as a corporate mission, along with enterprise-wide roadmaps that protect corporate and personal privacy. A vexing issue for CIOs is determining which resources to deploy to create frameworks that capture and leverage both existing and new metadata.

Priority needs to be given to harnessing, and then unleashing, the power of metadata in service of new business models and opportunities. The first step is liberating line managers by explicitly setting value creation and new business platform creation as end states. The social media revolution has put an end to business as usual. What the Guardian Angels, the Invisible College, and the virtual perimeter concepts portray is a future based on self-authorizing, self-defined work groups, business organizations, and trans-corporate entities spanning the globe. CIOs have to articulate aspirational mantras that place their prestige, resources, and courage squarely in support of metadata-centric business and informational models that can dynamically adapt to new and emerging conditions in global markets.

Action item: Metadata spanning corporate, customer and market domains needs to be defined along with metrics and roadmaps that adapt business management tools to be metadata informed and driven.

Putting Guardian Angels to Work

The use of Guardian Angels to create associative metadata is explored in a recent white paper by Mike Alvarado and Tom Coughlin. Guardian Angels are objective observers that watch how an individual or organization uses content in order to create associative metadata. In addition, these Guardian Angels can work together online through an “Invisible College” to anonymously share permitted information to the mutual benefit of the sharing parties. By this means, even broader associative metadata can be generated collaboratively. In this professional alert we discuss how IT can implement these concepts in the enterprise environment.

First, how do we make Guardian Angels? We already employ many ways to create and use metadata in the course of our personal and working lives. Ordinary metadata covers basic information about a thing or event. Guardian Angels must rise above this mundane basic metadata to create and manage textual, semantic, and contextual metadata. They must help us find associations and capture our reactions and feelings about content, or at least build a good model of what we tend to do with one sort of content or another. To make this possible, we need to broaden current metadata standards to include associative metadata that may be created continuously while data is used. These standards must allow for non-static types of metadata generated by the actions of an individual or work group. Furthermore, they must allow broader metadata associations to be made with other individuals or work groups through the Internet, via the Invisible College. These standards must also include methods of encrypting and protecting this higher-level metadata from unauthorized access.

How do we create an Invisible College where these representative Guardian Angels can collaborate to share metadata and create new associative metadata while protecting the privacy of their individual or group clients? One idea is to use Hadoop, an open source Java framework that supports data-intensive distributed applications running on thousands of nodes and petabytes of data. We need to explore how to build an anonymous, collaborative metadata social environment in which metadata associations between individuals or work groups can be created.
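The text does not specify how the Invisible College would keep shared metadata anonymous. One common technique that could fit, shown here as an assumption rather than the authors' design, is to replace identifiers with keyed hashes (HMAC-SHA256) before sharing and to drop rarely seen associations that could single someone out. The function names, the shared key, and the `min_count` threshold are hypothetical.

```python
import hashlib
import hmac
from collections import Counter

Pair = tuple[str, str]


def pseudonymize(identifier: str, key: bytes) -> str:
    # Keyed hash: members holding `key` get the same token for the same item,
    # but the token cannot be recomputed by anyone without the key.
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def shareable(pair_counts: dict[Pair, int], key: bytes, min_count: int = 3) -> dict[Pair, int]:
    """Prepare one member's associative metadata for the college."""
    shared: dict[Pair, int] = {}
    for (a, b), n in pair_counts.items():
        if n < min_count:
            continue  # rare associations are more likely to identify someone
        # Sort the tokens so (a, b) and (b, a) from different members line up.
        token_pair = tuple(sorted((pseudonymize(a, key), pseudonymize(b, key))))
        shared[token_pair] = shared.get(token_pair, 0) + n
    return shared


def merge(contributions: list[dict[Pair, int]]) -> dict[Pair, int]:
    # The college only ever sees pseudonymous pairs and their counts.
    total: Counter = Counter()
    for contribution in contributions:
        total.update(contribution)
    return dict(total)


key = b"shared-college-key"
member_1 = shareable({("x", "y"): 5, ("x", "z"): 1}, key)
member_2 = shareable({("y", "x"): 4}, key)
print(list(merge([member_1, member_2]).values()))  # [9]
```

Because both members hash with the same key and order each pair consistently, their counts for the same two items combine without either revealing the underlying item names. How the key is distributed, and who may hold it, is left open here.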

If we can create these Guardian Angels and the Invisible College where they can work together to create higher-level associative metadata, we will provide a whole new tool for social networking and for the creation of searchable and usable content. In addition, we will create new tools for efficient asset use and new bridges between individuals, corporations, and cultures.

Action item: Organizations must begin to build associative metadata agents to help enterprises better manage cloud initiatives. Associative metadata models can enable both value creation and risk reduction, which, when balanced, can support new business models that are more flexible, global, facile, and faster to market.


Metadata Helping Organizations Say Yes to Cloud Services

Well-functioning organizations have developed structures to balance requirements for revenue creation, cost containment, and risk management to deliver predictable profit and growth. Within most organizations, the IT department is charged with many of the cost-reduction initiatives; the legal, audit, security, and compliance departments are charged with risk reduction; and the line of business is charged with driving increased value and revenue.

The development and evolution of private- and public-cloud services promise decreased costs for the IT department through super-consolidation and the efficiency of shared infrastructure services. Business units see cloud-based services enabling increased revenue and value, not only by offering a more scalable and flexible infrastructure but also by leveraging the combined data of the organization and the knowledge gleaned from other users of the cloud.

Those charged with risk management will be appropriately concerned about data privacy, data security, data loss, and legal and regulatory compliance for both public and private clouds. That said, companies that fail to embrace private and public cloud approaches run the risk of revenue stagnation and high costs, which promises certain, if slow, death.

Cloud metadata is the key to satisfying the concerns of risk managers, while enabling IT and revenue-producing business units to fully embrace and exploit these new service-delivery models. Such a model of cloud metadata is detailed in a document created by Tom Coughlin and Mike Alvarado entitled "Angels in our Midst: Associative Metadata in Cloud Storage."

For risk managers concerned about placing data in a shared infrastructure, the "Basic Data Levels" of metadata, described in the first four layers of the model, can be used to control access, determine which files and data should be encrypted, and control where data is allowed to move and be shared both within and outside the walls of the corporation. For risk managers concerned about compliance with security and privacy laws, the "Meaning Levels" of metadata, described in the top three layers of the model, can enable the analysis of customers and their relationships while ensuring that customer-unique identifying information is protected.

Organizations need to consider all three dimensions: revenue and value creation, cost control, and risk management. Organizations will have differing views of the appropriate balance among revenue, cost, and risk, depending upon their industry, company history, financial position, and extent of regulatory oversight, but all three constituencies must have a seat at the table. It’s always easy to kill something by saying it’s too risky. The inherent risk, however, lies in not finding ways to say ‘yes’ and being left behind.

Action item: With the increased availability of private- and public-cloud infrastructure and applications, organizations should bring together the key stakeholders for revenue growth, cost containment, and risk management. The priority of the stakeholders should be to establish and leverage a new hierarchy of metadata to enable organizations to manage risk while exploiting the cost benefits and value creation of cloud-based infrastructure.

Metadata for Big Data and the Cloud

You are driving with your family on a long journey at 7 p.m. Your dashboard computer displays a selection of restaurants and hotels that match your budget, culinary preferences, and location, including a special offer for a family room. There is an attractive offer from a hotel if you drive another 20 miles. Behind this display is a large amount of data put into context, and the only way to provide such information cost-effectively is to use metadata inferences in real or near-real time.

Metadata, the data that describes data, becomes an imperative in the world of “Big Data” and the cloud. As more of the data is distributed in the cloud and across the enterprise, the model of holding central databases becomes less relevant, especially for unstructured and semi-structured data. Moving vast amounts of data from one place to another within or outside the enterprise is not economically viable. It is faster and more efficient to select the data locally by shipping the code to the data, the Hadoop model. Good metadata is a key enabler of this approach.

There is already some metadata in place; files have a date created/modified and file size, JPEGs have data about the camera settings and location, and there are many other examples. But metadata standards are fragmented and incomplete, and cracking open files to investigate properties requires too much compute and elapsed time.
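The point that basic file-system metadata can narrow a search without cracking files open can be illustrated with a short Python sketch: `os.walk` and `os.stat` read only directory entries and inode attributes (size, modification time), never file contents. The function name and its size and age thresholds are illustrative assumptions.

```python
import os
import time


def select_by_metadata(root: str, min_size: int, modified_within_days: float) -> list[str]:
    """Pick candidate files from file-system metadata alone; no file is opened."""
    cutoff = time.time() - modified_within_days * 86400
    selected = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            st = os.stat(path)  # size and timestamps come from the inode, not the data
            if st.st_size >= min_size and st.st_mtime >= cutoff:
                selected.append(path)
    return sorted(selected)
```

In a distributed store, the same kind of filter would run next to each data partition, so only the list of matching paths, not the data itself, would need to travel.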

A paper by Tom Coughlin and Mike Alvarado entitled "Angels in our Midst: Associative Metadata in Cloud Storage" is an interesting attempt to put a framework model (Figure 1) in place for metadata. The authors have taken an OSI-like layered model and split it into two major components:

- Basic Data Levels – four layers that focus on traditional metadata

- Meaning Data Levels – three layers that focus on meaning and context

Source: "Angels in our Midst: Associative Metadata in Cloud Storage," Tom Coughlin and Mike Alvarado, downloaded 11/3/2010 from http://tinyurl.com/28t2fq3

IT organizations and vendors should recognize that completely new business models are evolving, enabled by an effective metadata model that has industry acceptance. Within IT, metadata can be used to assist in deleting data, as well as to make more effective use of the value of data.

Current methods of inferring metadata retrospectively are inadequate.

Metadata should be captured as close as possible to the time that the data is created or accessed, and that capture must be automated, with an immediate override. To meet national and international concerns about privacy, metadata must include strong access and security controls, with an emphasis on the user override (what Coughlin and Alvarado describe as a “Guardian Angel”).
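A minimal Python sketch of capture-time metadata with an immediate user override, assuming a naive keyword classifier. The keyword list, the two labels, and the `CapturedMetadata` fields are hypothetical; the sketch keeps the automatic label alongside any override so the override itself can be audited.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

# Hypothetical rule: any of these words marks a document confidential.
SENSITIVE_TERMS = ("salary", "ssn", "diagnosis")


@dataclass
class CapturedMetadata:
    object_id: str
    created_by: str
    created_at: datetime
    auto_classification: str   # what the automation decided
    classification: str        # what is actually enforced
    overridden_by: Optional[str] = None


def auto_classify(text: str) -> str:
    lowered = text.lower()
    return "confidential" if any(t in lowered for t in SENSITIVE_TERMS) else "internal"


def capture(object_id: str, user: str, text: str,
            override: Optional[str] = None) -> CapturedMetadata:
    """Record metadata at creation time; the user may override the label at once."""
    label = auto_classify(text)
    meta = CapturedMetadata(object_id, user, datetime.now(timezone.utc), label, label)
    if override is not None and override != label:
        meta.classification = override
        meta.overridden_by = user
    return meta
```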

Action item: There should be strong cross-industry support from ISVs, hardware vendors and users for the creation of metadata models and standards. Apple, Google, Microsoft and other software developers of semi-structured data have particular responsibilities to create open metadata standards.

Will New Metadata Models Get Rid of Centralized Repositories?

Today, many if not most organizations use an information management approach that shoves everything that's potentially risky (e.g., e-mails) into a centralized archive. By centralizing content, organizations can, in theory, more easily search, discover, and purge key corporate information assets. In certain industries, many of these assets are frequently not purged and are kept 'forever,' because organizations fear litigation down the road if they delete sensitive data. Moreover, data that is thought to be deleted often isn't, because it 'lives' in other locations, e.g., on user laptops, on mobile devices, or in collaborative and social media tools.

New cloud metadata models can be used to identify information about information and to eliminate huge amounts of 'stuff' by enabling the defensible deletion of unneeded or unwanted data. By investigating the metadata, organizations can more quickly and efficiently determine what data needs to be purged, and this process can itself be automated.
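One way to sketch metadata-driven defensible deletion in Python, under stated assumptions: each record carries a type, a creation date, and a legal-hold flag, and a retention schedule maps types to retention periods. The schedule values, field names, and audit messages are hypothetical. Every decision, including "keep," is written to an audit trail, since a documented, policy-based trail is generally what lets an organization defend a deletion later.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical retention schedule, in days, keyed by record type.
RETENTION_DAYS = {"email": 3 * 365, "invoice": 7 * 365}


@dataclass(frozen=True)
class Record:
    record_id: str
    record_type: str
    created: date
    legal_hold: bool = False


def deletion_plan(records: list[Record], today: date) -> tuple[list[str], list[tuple[str, str]]]:
    """Decide from metadata alone what to delete, logging a reason for every record."""
    to_delete: list[str] = []
    audit: list[tuple[str, str]] = []
    for r in records:
        days = RETENTION_DAYS.get(r.record_type)
        if r.legal_hold:  # a hold always wins over the schedule
            audit.append((r.record_id, "kept: legal hold"))
        elif days is None:
            audit.append((r.record_id, "kept: no retention rule"))
        elif today - r.created > timedelta(days=days):
            to_delete.append(r.record_id)
            audit.append((r.record_id, f"delete: older than {days} days"))
        else:
            audit.append((r.record_id, "kept: within retention period"))
    return to_delete, audit
```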

At the 11/2/2010 Peer Incite Research Meeting, Tom Coughlin and Mike Alvarado introduced the concept of a 'Guardian Angel,' which can strengthen the defensible deletion of information assets. The advantage of the Guardian Angel, which is software that observes user and machine interactions, is that it can automatically create metadata 'on the fly' at the point of creation or use, so that information can be auto-classified and subsequently acted upon.

However, organizations today lack robust metadata management capabilities that would enable the proactive management and defensible deletion of certain corporate information assets. The opportunity exists for organizations to expand the scope of their metadata management and to clearly define, organize, manage, and exploit metadata in order to explicitly initiate strategies for getting rid of unwanted or unneeded data on a proactive, automated basis.

Action item: The promise of automated and defensible deletion of risky or unwanted corporate assets has been the holy grail of information management. New metadata models are emerging that expand the notion of metadata, specifically accommodating cloud computing and collaboration whereby metadata is automatically created and classified upon data creation and use. Organizations must begin to refine metadata management models with an explicit goal of automating the deletion of unwanted/unneeded information assets defensibly. This approach will dramatically decrease the risk of cloud adoption and support new business models that are emerging around both public and private cloud computing.