Bringing a new pharmaceutical drug to market is one of the riskiest and most expensive endeavors a company can undertake. Only 10%-12% of new drugs make it from the early phases of the drug discovery process to the consumer market. While the cost per successful drug varies widely by company, the industry average is around $4 billion.

The process is time-intensive as well. On average, taking a successful drug from discovery to market takes 12 years. But close to 90% of drugs never make it that far.

There is no shortage of reasons a promising drug might fall short of expectations at some point during the decade-plus discovery and clinical trial process. They range from the clinical – e.g., the new drug causes adverse patient reactions when it comes into contact with another agent, or clinical trials simply show the drug is not as effective as hoped – to the financial – e.g., a rival, more effective drug makes it to market first, or the regulatory environment makes it impractical to market the drug to the target population.

Pharmaceutical makers are constantly on the lookout for ways to improve the odds of getting new drugs through the discovery pipeline to market as quickly and cost-effectively as possible, all the while mitigating risk to the company and delivering maximum benefit to patients. One such method is the application of Big Data – including data governance, data mining, and predictive analytics – to help pharmaceutical makers and researchers make smarter decisions about which drugs to pursue.

Early adopters of Big Data technologies and methodologies in the pharmaceutical industry are mining vast troves of clinical, market, and legal data to identify which drugs under consideration for development have the best chance of navigating the grueling path from discovery to pharmacy shelves. Among the techniques used are:

- Combing historical clinical data from previous trials of similar agents and/or combinations of agents to identify adverse effects on trial patient populations.

- Predicting the likely profitability of a given drug based on historical analysis of similar agents, taking into account the current and likely future regulatory environment and market conditions, and the size of the addressable market.

- Understanding trends in applicable federal and regional regulations in a given area, including patent law related to commercial pharmaceuticals vis-à-vis generic alternatives, and estimating the cost impact of compliance across the full drug lifecycle.

- Analyzing clinical data in near real-time to keep abreast of the latest clinical research related to current drugs under development in order to adjust the clinical trial process to take advantage of new insights gleaned from other researchers and clinicians.
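The first technique in the list above, screening historical trial records for adverse effects by agent or combination of agents, might be sketched roughly as follows. This is only an illustration: the `TrialRecord` structure, the agent names, the patient counts, and the 10% threshold are all made-up placeholders, not data from any real trial or the method of any particular vendor.

```python
from collections import defaultdict
from typing import NamedTuple


class TrialRecord(NamedTuple):
    """One historical trial arm (hypothetical structure for illustration)."""
    agents: frozenset      # agents given together in this arm
    patients: int          # number of patients enrolled
    adverse_events: int    # number of patients with an adverse event


def adverse_event_rates(records):
    """Pool records by agent combination and return the adverse event rate for each."""
    totals = defaultdict(lambda: [0, 0])  # agents -> [patients, adverse events]
    for record in records:
        totals[record.agents][0] += record.patients
        totals[record.agents][1] += record.adverse_events
    return {
        agents: events / patients
        for agents, (patients, events) in totals.items()
        if patients > 0
    }


def flag_combinations(records, threshold):
    """Return the agent combinations whose pooled rate meets or exceeds the threshold."""
    rates = adverse_event_rates(records)
    return {agents: rate for agents, rate in rates.items() if rate >= threshold}


# Placeholder records: invented numbers, used only to exercise the functions.
records = [
    TrialRecord(frozenset({"agent_A"}), 200, 6),
    TrialRecord(frozenset({"agent_A", "agent_B"}), 150, 30),
    TrialRecord(frozenset({"agent_A", "agent_B"}), 50, 10),
    TrialRecord(frozenset({"agent_B"}), 100, 4),
]

flagged = flag_combinations(records, threshold=0.10)
for agents, rate in flagged.items():
    print(sorted(agents), f"{rate:.0%}")
```

In practice the hard part is upstream of this step: extracting comparable records from multi-format clinical documents. A raw rate comparison like this one would also need proper statistical treatment before informing any decision.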

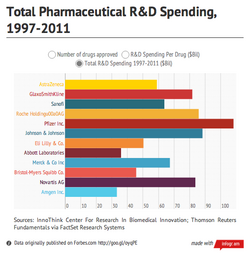

A number of pharmaceutical makers are already embracing Big Data, among them Bristol Myers Squibb. The New York-based pharmaceutical maker, which has spent nearly $46 billion on research and development since 1997 while bringing 11 successful drugs to market, indexes hundreds of thousands of clinical documents per year in pursuit of insights that will improve the drug discovery process. BMS recently began using software from HP Autonomy to analyze this outside research and market data, which comes in many formats, and surface the relevant findings to its clinical researchers and scientists.
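As a quick check on these figures, the R&D spend per successful drug can be worked out directly. The sketch below uses only the two numbers quoted above (roughly $46 billion and 11 drugs); the function name is an illustrative choice, and the result is a simple average that ignores timing, inflation, and drugs still in the pipeline.

```python
def cost_per_successful_drug(total_rd_spend_usd: float, successful_drugs: int) -> float:
    """Average R&D spend per drug that reached market (simple division, no discounting)."""
    if successful_drugs <= 0:
        raise ValueError("successful_drugs must be positive")
    return total_rd_spend_usd / successful_drugs


# Figures as quoted in the text: nearly $46 billion spent, 11 successful drugs.
bms_cost = cost_per_successful_drug(46e9, 11)
print(f"${bms_cost / 1e9:.2f} billion per successful drug")
```

This comes to about $4.18 billion per drug, in line with the industry average of around $4 billion.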

As is evident from the workloads referenced above, Big Data as applied in the pharmaceutical industry requires the integration and analysis of various types of data and information found inside but, primarily, outside the four walls of any one pharmaceutical maker. This requires investment in Big Data tools, technologies, and services such as:

- Infrastructure and related software, such as Hadoop and data and application integration software, with the ability to process, merge, and store multi-structured data from a variety of sources.

- Information governance tools to apply common metadata definitions to multi-structured, multi-sourced data and to ensure applicable data governance rules are applied throughout the discovery process.

- Natural language processing and text analytics software to enable researchers to identify sometimes subtle but critical relationships among varying data sets.

- Data visualization software that allows researchers and Data Scientists to intuitively explore large, multi-structured data sets and “tell a story” to non-statisticians such as executives, investors and regulators.

Pharmaceutical makers are just one of the constituents in the drug discovery process. Others, such as investors, should also consider Big Data and analytics-led approaches to assist in making wiser decisions about which drugs are likeliest to result in long-term and sustainable benefits.

Action Item: The first priority of those in the pharmaceuticals industry must be the welfare of patients. Specifically, this means developing the most effective drugs and treatments for the world’s most intractable and destructive diseases and illnesses. To do so cost-effectively – which ultimately impacts the price of drugs once they reach market – researchers and clinicians should utilize new and emerging Big Data-led approaches to add intelligence and efficiency to the drug development process without sacrificing safety or efficacy.
