
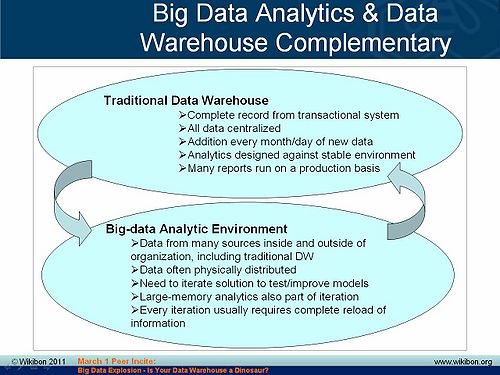

Traditional data warehouse infrastructure and emerging big data applications, while distinctly different in terms of business drivers, architectures, operational procedures, skill sets, and use cases, in many ways complement each other and over time will both become sources of enormous leverage for many enterprises.

Today’s data warehousing space is characterized by legacy system complexity, performance woes, and a model driven largely by operational support and reporting. This mindset is shifting, however, toward an increased focus on improving efficiency, cutting costs, and generating revenue. From an architectural perspective, this market is being disrupted by the introduction of appliance-based data warehouse systems generally and Oracle Exadata specifically. The aggressive moves by Oracle, which is using its Sun acquisition and a vertical integration strategy to compete, have caused a chain reaction in the industry. They have led to strong user awareness of appliance solutions, competitive acquisition responses from EMC (Greenplum), IBM (Netezza), and HP (Vertica), and emergent partnerships such as HP and Microsoft.

At the same time, the so-called “Big Data explosion” is being driven by Internet giants such as Google, Yahoo, Facebook, Twitter, Bit.ly, LinkedIn, etc., and startups such as Cloudera, ClickFox, Membase, Karmasphere, and many others. The big data movement is spilling into mainstream enterprises (e.g., BofA, GE, ComScore, and the U.S. Army) and is raising critical organizational questions for CIOs and CTOs about how best to exploit this emerging trend.

These were some of the key themes discussed at the March 1, 2011 Wikibon Peer Incite Research Meeting on big data. The agenda of the research meeting was to present key findings and conclusions from a recent study of 40 Wikibon community members that included dozens of IT practitioners, data scientists, academics, business leaders and technology “alpha geeks.” The findings were presented by Wikibon CTO David Floyer and assessed by members of the Wikibon community.


Key Findings on Data Warehouse Trends

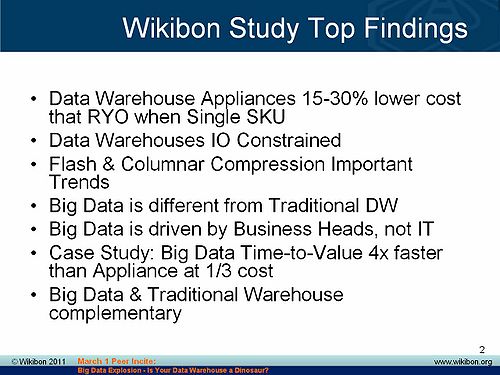

A main catalyst for the study was the disruptive force of appliances and specifically Oracle Exadata. Based on economic models and discussions with data warehouse practitioners, Wikibon estimates that appliance-based solutions are between 15% and 30% less expensive on a total-cost-of-ownership (TCO) basis than “roll-your-own” solutions. Generally the cost savings trend toward the high end of this range, but the high cost of Exadata licenses tempered the savings for many users.

In addition, the study revealed that:

- Virtually all practitioners indicated that they were I/O constrained. Users are looking to flash storage and columnar compression to ease performance bottlenecks and lower costs by allowing more data to be stored longer.

- While big data and traditional data warehousing are quite different, practitioners indicate that data warehouses are already feeding big data apps, and over time they believe that big data apps will in turn feed traditional data warehouses.
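To make the 15% to 30% TCO estimate concrete, here is a minimal Python sketch that applies that range to a roll-your-own baseline. The function name `appliance_tco_range` and the $10M baseline are illustrative assumptions, not figures from the study; only the 15% and 30% bounds come from the text above.

```python
def appliance_tco_range(ryo_tco, low_saving=0.15, high_saving=0.30):
    """Return (upper, lower) appliance TCO for a given roll-your-own TCO.

    low_saving and high_saving are the 15% and 30% bounds cited in the text.
    """
    upper = ryo_tco * (1 - low_saving)   # smallest saving -> highest appliance TCO
    lower = ryo_tco * (1 - high_saving)  # largest saving -> lowest appliance TCO
    return upper, lower


# Hypothetical roll-your-own TCO of $10M (an assumption for illustration only)
upper, lower = appliance_tco_range(10_000_000)
print(f"Appliance TCO between ${lower:,.0f} and ${upper:,.0f}")
```

Where a given deployment lands inside that range depends on factors the study calls out, such as license costs.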

Practitioners almost universally cited performance, capacity and complexity challenges. For example, here are some direct quotes from data warehouse professionals:

“We are constantly chasing the chips. If Intel puts out a new microprocessor, we can’t get it in here fast enough.” -Financial Services

“Our data warehouse is like a snake swallowing a basketball.” -Financial Services

“We’re running hard to stay still. We have a patchwork infrastructure with one of everything. We still have not gotten traditional DW/BI working and we’re not even thinking about big data.” –Mortgage Co

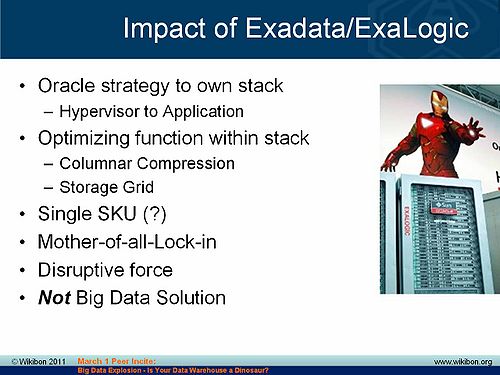

Impact of Oracle Exadata/Exalogic

Exadata is a disruptive industry force. Oracle's acquisition of Sun has hastened the vertical integration movement, and Exadata has been brilliantly positioned by Oracle to gain share on Oracle's major systems and storage competitors. Oracle is optimizing function and is attempting to own the stack from systems to applications. Additionally, Oracle is streamlining functions within the stack, leveraging its middleware extensively and at the same time pushing certain functions to its storage grid. Oracle’s extensive use of InfiniBand as a systems-to-systems and systems-to-storage interconnect is a strong networking play designed for low latency and high-performance switching.

- The single SKU approach of an appliance is alluring because it minimizes complexity, speeds deployment, and simplifies patches. However, some practitioners questioned the merits of the Exadata single SKU approach because of concerns over accommodating diverse operating systems beyond Linux. In addition, scaling one resource in a single SKU model (for example, storage independently of compute) means buying a full appliance. While storage can be added through an Ethernet connection off of an appliance, doing so breaks the single SKU model.

- Exadata is a masterful lock-in strategy by Oracle. Its appeal is very high because it’s essentially the iPhone of data warehouse appliances. At the same time, Oracle’s high degree of vertical integration fully exposes customers to lock-in.

- As a result of these factors, roll-your-own (RYO) data warehouse solutions will still command a good portion of the marketplace. These solutions integrate best-of-breed servers (dominated by IBM, HP, and Dell) and storage (dominated by EMC and followed by IBM and HP).

- Exadata and products like it are not well-suited for big data. High degrees of hierarchy and locking make them ideal for traditional DW use cases, while big data apps, in contrast, often thrive with a shared-nothing approach with lots of inherent parallelism (e.g., Greenplum).

“If you look at the Exadata in its current iteration, architecturally it’s very promising because they’re using solid state not as solid state storage but as flashed memory…” -Insurance

The Big Data Tsunami

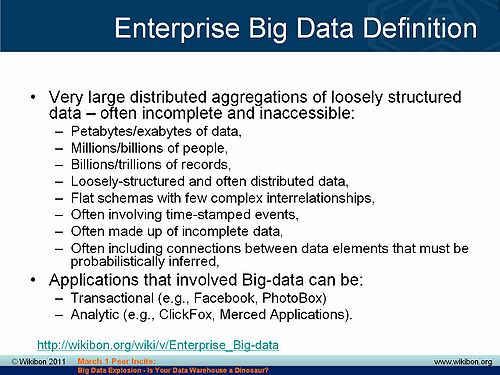

The term “big data” refers to data that is too large, too dispersed, and too unstructured to be handled using conventional methods. The big data movement was hatched out of efforts by search companies (e.g., Google and Yahoo) to handle massive volumes of unstructured information on the Web. This vast amount of information was too unwieldy to reside in traditional relational database management systems, and search companies developed techniques to process, manage, and analyze this largely unstructured and dispersed data. Unlike traditional data warehousing systems that rely on a centralized set of compute, storage, database, and analytic software resources, so-called big data systems move smaller amounts of function (megabytes) to massive volumes of dispersed data (petabytes) and process information in parallel. While trends in big data are alluring, traditional data warehousing remains a $10B+ market worldwide, consuming the vast majority of spending (90%) compared to emerging big data markets.

- Big data applications can be either transactional (e.g., uploading Facebook pictures) or analytical (e.g., ClickFox, LinkedIn, Bit.ly, etc.).

- Big data apps have traditionally been built by techies, but as the trend goes mainstream, business heads are driving ways to monetize information and build data products.

- Big data apps are often also very industry specific – for example focused on geological exploration in energy, genome research, medical research applications to predict disease, predicting terrorist threats, etc.
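The idea of moving a small function to large, dispersed data and processing it in parallel can be illustrated with a toy Python sketch. Everything here is an assumption for illustration: the in-memory `PARTITIONS` list stands in for data held on separate nodes, threads stand in for those nodes, and `count_events` and `run_everywhere` are hypothetical names rather than part of any product mentioned in this note.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical "nodes": each list is a partition of data that stays where it is.
PARTITIONS = [
    ["click", "view", "click"],
    ["view", "view", "purchase"],
    ["click", "purchase"],
]


def count_events(partition):
    """The small function shipped to each node; it only sees local data."""
    return Counter(partition)


def run_everywhere(func, partitions):
    """Apply func to every partition in parallel, then merge the partial results."""
    with ThreadPoolExecutor(max_workers=len(partitions)) as pool:
        partials = list(pool.map(func, partitions))
    total = Counter()
    for partial in partials:
        total.update(partial)  # only the small partial results are combined
    return total


totals = run_everywhere(count_events, PARTITIONS)
print(dict(totals))
```

The design point is that only the function and the compact per-partition results travel; the bulk data never moves to a central system.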

Case Study in Big Data Using MPP Architectures

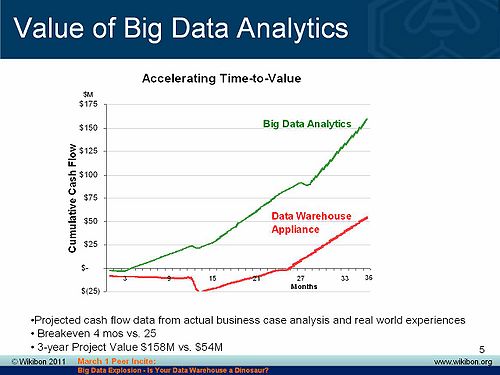

Wikibon extensively modeled the economic impact of appliances as compared to RYO systems. It also studied the impact of shared-nothing, massively parallel processing (MPP) appliances in big data apps as compared to traditional data warehouse appliances. The impact is dramatic, as shown in the chart. The data depicts the influence of data warehouse architectures in big data applications. The vertical axis shows cumulative cash flow and the horizontal axis displays time. In this case, a mobile phone operator used an MPP architecture from Greenplum to dramatically accelerate time-to-value and break-even timetables.

- Big data is more real-time in nature than traditional DW applications.

- Traditional DW architectures (e.g. Exadata, Teradata) are not well-suited for big data apps.

- Shared-nothing, massively parallel processing, scale-out architectures are well-suited for big data apps (but not so much for traditional DW use cases).

“With Greenplum we could analyze and implement change within a quarter compared to taking a year with traditional DW. This was worth $10s of millions in revenue” -Mobile Phone Operator
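As a rough illustration of how a break-even point is read off a cumulative cash flow curve like the one described in this case study, the Python sketch below finds the first period in which cumulative cash flow turns non-negative. The quarterly figures and the names `break_even_period`, `slower_rollout`, and `faster_rollout` are invented for illustration; they are not data from the Wikibon model or the mobile phone operator.

```python
from itertools import accumulate


def break_even_period(cash_flows):
    """Return the 1-based period where cumulative cash flow first reaches >= 0.

    Returns None if the project never breaks even within the given periods.
    """
    for period, cumulative in enumerate(accumulate(cash_flows), start=1):
        if cumulative >= 0:
            return period
    return None


# Hypothetical quarterly net cash flows (assumptions, not study data)
slower_rollout = [-100, -20, 10, 30, 40, 50, 60]
faster_rollout = [-100, 40, 50, 60, 60, 60, 60]

print(break_even_period(slower_rollout))
print(break_even_period(faster_rollout))
```

An architecture that shortens the time from analysis to implemented change pulls positive cash flows earlier, which moves the break-even period forward.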

Is Your Data Warehouse a Dinosaur?

Big data and traditional DW apps are distinctly different, and key issues remain for CIOs regarding how to organize teams to exploit the big data opportunity. Initially, successes are emerging from skunkworks efforts, but eventually big data initiatives will require strong governance and oversight, especially as they are monetized.

The following additional key findings are relevant:

- Traditional DW apps predominantly see incremental growth (with intermittent step functions). In other words, at the end of each month, traditional DW apps might ingest 10% more data – unless there’s been some regulatory or other event requiring a step-function increase in database size.

- Big data apps typically require a complete reload of the data and operate on the entire data set (no sampling).

- Traditional DW data tends to come from internal sources, while big data apps take feeds from internal and external sources and very large Internet databases.

The bottom line is that traditional data warehouses are not dead; they are being complemented by new and emerging big data apps. These newer applications will take feeds from corporate data warehouses and feed analytics back to the main enterprise warehouse over time.

Action Item: While traditional data warehouses remain the lion's share of investment for IT organizations, emerging big data applications will increasingly deliver accelerating streams of value. Business leaders, CIOs, and IT organizations must collaborate to tap this new source of value and organize to exploit information as a new competitive differentiator. Initially, these initiatives should be separate from traditional DW/BI efforts so as not to dilute innovation. Over time, a data value group will emerge that will play a critical role in delivering future revenue to enterprises. CIOs should plan accordingly and construct a five-year plan to evolve this role and the skill sets needed to thrive in this new world.
