with David Vellante


Executive Summary

Legacy data warehouse (DW) and business intelligence (BI) systems have failed to deliver on their promises. Visions of 360-degree views, predictive analytics, major productivity enhancements, and the like have not lived up to expectations. Today’s DW and BI systems are patchworks of myriad technologies, built through trial and error and hampered by performance bottlenecks and long delays. While organizations have become reliant on such systems, CIOs need to re-think their analytics strategies and invest in new data architectures composed of Open Source and proprietary software, commodity components, and diverse data sets that can be customized to extract value from data and deliver competitive advantage. Fundamental to success is the ability to bring together structured and unstructured data and provide a “software-led” analytics system that operates in near real time.

IT’s Shifting Value Proposition

Historically, technology suppliers have been the mainspring of value creation in the IT business. What technology vendors did with operating systems, microprocessors, networks, databases, and so forth created trillions of dollars in value for powerful IT suppliers and set the direction of the entire business. It also led to tremendous waste and under-utilization of expensive assets.

Leading practitioners in the Wikibon community report this dynamic is changing. While virtualization catalyzed the “do more with less” mantra and dropped cost savings to the bottom line, it hasn’t done much to drive value through deep integration with business lines. Forward-thinking organizations recognize that data is becoming the new source of competitive advantage, and they are re-thinking how value is created and investing in new analytics infrastructure.

We believe that Big Data practitioners will create more value than Big Data technology suppliers going forward. In other words, what users do with Big Data will become more important to value creation than vendor activities. Therefore, organizations need to re-assess and re-tool their strategies, data architectures, processes, and skill sets to create differentiated competitive advantage.

Enterprise CIOs face a difficult, but we believe obvious, decision. They can continue to deliver incremental value through cumbersome and fundamentally flawed data infrastructure and inch their companies forward, or they can re-cast their data processes, infrastructure, and monetization strategies and make data a competitive differentiator.

In our view, the proliferation of machine-generated, social media, geo-location and countless other types of multi-structured data and the need to analyze and act on that data in real time is forcing CIOs to radically alter the way they approach information technology. The "old way" of processing data failed to live up to expectations even before the Big Data onslaught began. Now, traditional data management tools, technologies and infrastructures aren't just failing to live up to expectations, they're buckling under the added weight and threatening to bring down laggard enterprises with them. As one Wikibon practitioner said, “My data warehouse infrastructure is like a snake swallowing a basketball, it just can’t keep up to the demands of my business.”

CIOs face an inflection point and must decide how to respond to the new reality that is Big Data. They have three options:

- Do nothing: This is a strategy, just not a very good one in these times;

- Hedge on emerging Big Data technologies like Hadoop: Make marginal investments in connecting existing DW/BI systems to new platforms and take half-measures in the hope of keeping up with more forward-thinking peers;

- Go all-in on Big Data: Manage the decline of "traditional" methods of data management and invest in new, agile, scalable, and service-oriented data management and infrastructure approaches that enable their enterprises to outflank the competition.

The decision is an important one, both professionally for CIOs and financially for their organizations. And now is the time to make the decision.

Time is Not on Your Side

In the last century, businesses had the luxury of taking days, weeks, or even months to respond to changing market conditions, unexpected moves from competitors, and complaints from angry customers. With the emergence of the Internet as an e-commerce platform, the pace of decision-making picked up somewhat during the second half of the 1990s. But even then, businesses were still not expected to make complex, strategic, or even tactical-level decisions in real time.

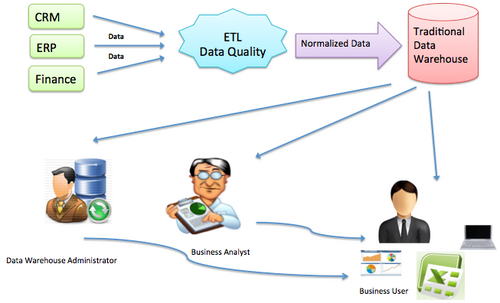

It was in this environment that the data management field developed. While a major leap forward from the days of pen and paper, the formula was fairly simple and straightforward. Transactional systems produced small, predictable amounts of data, mostly related to customers and sales. Data was extracted from transactional systems, transformed or otherwise massaged to fit a predetermined data model, then loaded into a separate data warehouse. Administrators then ran reports against the warehouse, which were distributed to various lines of business. The goal was to allow enterprises to make better, data-driven decisions.

The entire process of modeling and deploying an enterprise data warehouse and related hardware often took months, sometimes years, to complete. Many were never completed at all. Even those that did materialize after months or years of effort lacked timeliness, with data often updated only weekly or daily and reports routinely landing on executives' desks only after significant lag. And pity the business user who asked IT to add or change the dimensions of a report.

Then, toward the end of the 1990s, the concept of self-service business intelligence (BI), or "BI for the masses", came into vogue. The idea was to give regular business users access to easy-to-use dashboards, report builders, and other tools to perform more customized data analysis. But for the most part that vision was never realized, and BI adoption petered out at around 20% penetration in the typical company. The end result at most enterprises was stacks of out-of-date, unhelpful reports collecting dust, supported by rigid, slow-moving infrastructures. The dream of data-driven decision-making remained just that: a dream.

Half-Measures Not Enough

Barely a decade into the next century, the landscape looks dramatically different. Big Data pioneers like Facebook, Google, LinkedIn, and Amazon have shown the world what is possible when Big Data is harnessed in cutting-edge ways, and the idea that enterprises must recommit themselves to becoming data-driven organizations is now a widely held truism. To wit, data analytics is routinely cited by CIOs as among their top one or two priorities year after year.

Yet many CIOs are still reluctant to throw in their lot with the new breed of Big Data technologies and approaches, instead looking for the least disruptive ways to ease into this brave new world. This could be because some of these new Big Data technologies have exotic, vaguely threatening-sounding names like Hive and Oozie. Or maybe it’s because many of these new technologies and tools are Open Source and are still maturing. Or maybe it’s just fear of the unknown.

Whatever the reason, CIOs who believe they can survive and thrive in the Era of Big Data by making marginal improvements to existing data management technologies and infrastructures are mistaken. This approach, epitomized by the slew of Hadoop "connectors" that data management incumbents like Teradata, EMC, and Oracle have brought to market in attempts to grab their piece of the Big Data pie, will not work in the long term. Simply put, batch-loading day-old (or older) data from Hadoop into an RDBMS just won't cut it. The speed of business today is simply too fast.

Rather, the enterprises that come to dominate the 21st century will be those that invest in new technologies and processes that allow them to ingest, analyze, and act on streaming data from all manner of sources in real time. These enterprises will develop closed-loop feedback systems to continually optimize business processes, while simultaneously supporting deep, historical analytics to identify strategic insights. From a technology perspective, this requires an unequivocal embrace of the new breed of Big Data technologies, including flash storage, massively parallel analytic engines, Hadoop, interactive data visualizations, predictive analytics, and agile application development tools. From a process perspective, this requires organizations to adopt new methodologies such as DevOps and software-led infrastructure (SLI).

The good news is that a number of vendors on the market today make it possible to turn this Big Data and SLI vision into reality, and their numbers are growing. Companies like Hadapt, Continuity, Datameer, and Fusion-io have left behind the outdated, rigid, slow technologies and approaches of old (which never achieved their original, much more limited goals anyway) in favor of radically new approaches that offer the enterprise levels of speed, agility, and, ultimately, profitability never before possible.

Integration and Monetization

Wikibon users indicate their two most pressing challenges with Big Data are:

- Integrating structured, multi-structured, and unstructured data from various source systems in a common platform.

- Monetizing Big Data, or turning Big Data into actionable insights that drive revenue.

Traditional database vendors are quick to position Hadoop as little more than a glorified ETL layer, a batch system for putting structure around unstructured data. Oracle’s Larry Ellison, for example, has described his company’s approach as “Big Data meet Big Iron.” For Ellison and his colleagues in the legacy database world, Hadoop is just a staging ground to store and structure Big Data before shipping it off to a traditional RDBMS (running on millions of dollars' worth of proprietary hardware), where business users can query the data in real time via SQL calls. As stated above, Wikibon believes this is a fundamentally flawed model that will not work in the long term.

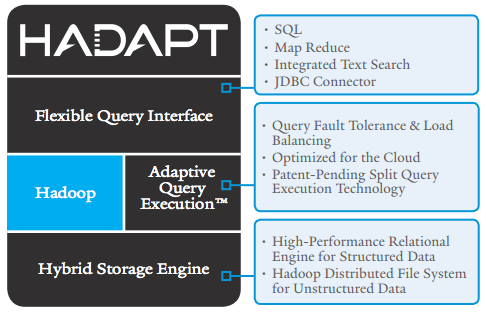

Instead, Wikibon believes CIOs must adopt a simpler, more elegant, more cost-effective model to support Big Data in the enterprise. One such example is offered by Hadapt. Based in Cambridge, Mass., Hadapt’s raison d’être is to provide the enterprise with a single, comprehensive analytic platform for all data types. Founder Daniel Abadi fundamentally believes there is no practical reason Big Data practitioners must use two distinct data platforms taped together via connectors.

Rather, Abadi maintains the current connector model of Big Data is an accident of history. Relational technology, and the vendor ecosystem built to monetize it, was close to 30 years old and well entrenched in the enterprise when new forms of multi-structured data began growing at unprecedented rates. Rather than designing and building a comprehensive Big Data platform from scratch, legacy RDBMS vendors opted to stitch together existing databases and tools to handle the job.

But building a comprehensive analytic Big Data platform from scratch is precisely what Abadi and his team set out to do four years ago. The result is the Hadapt Adaptive Analytic Platform, version 2.0 of which was released this week.

The platform is designed to run both large-scale MapReduce jobs and real-time SQL queries across all data types (structured, multi-structured, and unstructured) stored on a single cluster of commodity hardware. Data can be accessed with existing SQL-optimized tools such as Tableau Software’s data visualization suite, opening up the benefits of Big Data analytics to a large swath of the enterprise in a cost-effective and scalable way.

Hadapt’s platform is obviously less mature than 30-year-old relational database systems, as outlined in this post from Curt Monash. But it provides significantly faster SQL-style analytics than Hive, the open source Hadoop-based data warehousing framework that has largely languished in recent years. And with Hadapt’s engineering ranks growing, users will undoubtedly see the platform’s SQL capabilities steadily improve until they reach parity with most RDBMS engines.

Hadapt, of course, is just one example of the new, simplified, agile architecture Wikibon believes CIOs must embrace to fully leverage Big Data in the enterprise. Other vendors, such as those mentioned above and numerous other startups (and, increasingly, mega-vendors), are likewise working hard to bring their versions of this vision to fruition. The overarching goal that binds them all is the determination to bring together all data types in a single architecture (if not a single platform, as in Hadapt's approach) by leveraging emerging technologies such as, but not limited to, Hadoop and MapReduce, MPP analytic databases, flash memory, and advanced analytics.

Early adopters that employ this “clean slate” approach to Big Data will avoid myriad complicating factors and roadblocks (performance and scalability issues, difficulty adapting to new workload requirements) that purveyors of the connector approach are already encountering. But this doesn’t mean that CIOs should rip and replace all their legacy data management tools and technologies immediately. Far from it: Wikibon believes CIOs must think like portfolio managers, re-weighing priorities and laying the groundwork for innovation and growth while taking the steps necessary to mitigate risk. In most cases, multiple technologies will play a part, but they should be integrated with one another (and with existing legacy database and data management technologies) via common metadata layers.

The pace of this transition will vary from enterprise to enterprise depending on factors including business use cases, corporate culture, existing skill sets, and the current condition of data management infrastructure and systems. But this is a transition that CIOs must make. The companies that succeed will be those that make the best use of data to drive timely decision making, leading to increased revenue and improved operational efficiency.

Action Item: Wikibon believes CIOs should consider six factors with respect to this new world:

- View data as a strategic asset and lay out a five-year strategic roadmap to leverage data for competitive advantage.

- Look outside the four walls of the enterprise for external sources of data, and identify those data sources that will add value to analytic processes.

- Develop a powerful but agile data architecture that can accommodate multi-structured data, operate in real time, and adjust to changing workload requirements.

- Assess the existing skill sets in the enterprise, identifying those areas that need improvement in order to take full advantage of data-driven decision making and support new data architectures.

- Organize data-centric processes around this new world and manage the decline of legacy data management tools and technologies carefully but as expediently as possible.

- Start with small but strategic projects that deliver fast time-to-value and invest in areas that demonstrate bottom line business results quickly.
