
Executive Summary

On the eve of VMworld 2014, it is apposite to review the consolidation achieved with virtualization and examine the significant journey to compatible orchestration of both private and public clouds to create an automated hybrid cloud.

The IT imperative is to change IT from just keeping the lights on for existing applications (~80%+ of most current IT budgets) to releasing IT's potential as a key contributor to decreasing business costs and increasing revenues. Enterprise IT takes more than a year to develop and update new applications and to deploy new technologies. To meet the increasing pace of business, enterprises have to move to developing and changing applications and deploying new technologies in months, not years.

Information technology is changing at a pace never before seen. The mega-trends are mobile, social, Big Data, cloud, and the industrial Internet, all of which are extending the traditional scope of enterprise IT. For example, Wikibon forecasts that the total worldwide value created by the industrial Internet alone will be $1,279 billion in 2020, with an IT spend of $514 billion.

Within IT, converged and hyper-converged infrastructures, software-led data centers, and mega data-centers will help create orchestrated and automated private and public clouds. These and new storage technologies such as flash are rewriting the rules for application design and business organization; within IT they are transforming the rules for system topologies and system management.

In this Professional Alert, Wikibon examines the virtualization vision and progress to date, including the vision and progress of the leading framework contenders for hybrid clouds, namely Amazon, Google, OpenStack, Microsoft, and VMware (together with EMC Federation partners EMC & Pivotal).

Why Hybrid Cloud

Most IT organizations have islands of automation usually dedicated to different workloads, such as Microsoft, Web, end-user productivity, and mission critical applications. The resources in each island are managed by separate server, network, and storage teams. Often each island has one solution for server, network, and storage services, including vendor, resource allocation, and backup & recovery services. Within each island one size fits all, managed by workload throughput rather than application SLAs. The majority of workloads are on-site, with some co-location, public cloud, Software-as-a-Service (SaaS), etc.

In this environment moving workloads between IT islands and integrating server, storage and network teams is difficult. Providing application-specific SLAs is almost impossible. From an OPEX viewpoint, 80%-90% of resources are consumed by applications supporting the core business applications, which only generate 10%-20% of the value to the organization. The best starting point for improvement is to orchestrate and automate the supporting applications to radically reduce the staff and other resources required. The key requirement for optimization is self-service portals with a limited menu of options which are completely automated. For storage, the options should include backup and recovery processes by recovery point objective (RPO), recovery time objective (RTO), and performance requirements. Enabling end-users to change options dynamically allows them to try out lower-cost options rather than over-provisioning. Time & effort to implement is a key metric.
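The "limited menu of options" idea can be illustrated with a minimal Python sketch. The tier names, RPO/RTO values, IOPS figures, and prices below are hypothetical placeholders, not figures from Wikibon or any vendor; the point is only that a small, fixed catalog lets a portal pick the lowest-cost option that still meets an application's stated requirements.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StorageOption:
    """One entry in a self-service storage menu (all values are illustrative)."""
    name: str
    rpo_minutes: int            # worst-case data loss window the tier guarantees
    rto_minutes: int            # worst-case recovery time the tier guarantees
    iops: int                   # performance level the tier guarantees
    monthly_cost_per_tb: float  # hypothetical price, used only for ranking


# A deliberately short menu: three hypothetical tiers.
CATALOG = [
    StorageOption("bronze", rpo_minutes=1440, rto_minutes=480, iops=500, monthly_cost_per_tb=20.0),
    StorageOption("silver", rpo_minutes=240, rto_minutes=60, iops=5_000, monthly_cost_per_tb=60.0),
    StorageOption("gold", rpo_minutes=15, rto_minutes=5, iops=50_000, monthly_cost_per_tb=200.0),
]


def cheapest_option(max_rpo_minutes: int, max_rto_minutes: int,
                    required_iops: int) -> Optional[StorageOption]:
    """Return the lowest-cost tier that satisfies all three requirements, or None."""
    candidates = [
        option for option in CATALOG
        if option.rpo_minutes <= max_rpo_minutes
        and option.rto_minutes <= max_rto_minutes
        and option.iops >= required_iops
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda option: option.monthly_cost_per_tb)
```

Because requirements are inputs rather than fixed allocations, an end-user can relax an RPO or RTO, see that a cheaper tier now qualifies, and try it instead of over-provisioning.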

For the 10%-20% of core mission-critical applications that make up 80%-90% of the value delivered, the key problem is shortening time-to-value. In these environments, change is strongly discouraged because of the risks to availability. By focusing orchestration and automation on enabling faster time-to-change and time-to-scale, much greater value can be delivered to the business from earlier implementation of and updates to applications.

In most organizations, the core mission-critical applications will often be placed in a private cloud. Many of the supporting applications and test & development activities will often be placed in public clouds. So what is the optimum strategy?

- Strategy 1: Private cloud for mission-critical and public cloud for everything else:

  - An initially popular strategy was to split the workloads between a private cloud dedicated mainly to mission-critical applications and a public cloud for test/dev and ancillary applications and functions. This approach was popular since many studies have shown that applications with less change and high IT resource requirements are cheaper to run in-house. The public cloud was more suitable for more variable applications with more change. The theory was to move the supporting applications to the public cloud, which could act as a "bursting" resource if the private cloud resources were not sufficient at any point in time.

  - However, it soon became clear that this often would not work because data is very heavy: it can take days to move between sites. Bursting is only practical with stateless applications, which are a small minority of enterprise applications. This approach at best creates short-term gains within new islands of IT and, at worst, can be a long-term disaster.

- Strategy 2: Public cloud only:

  - For many organizations, the difficulty of writing contracts with service providers (SPs) that could survive the SP going out of business (Who owns the data? Can the data be used to increase value to creditors?) is a major concern.

  - The potential lack of control over privacy, security, confidentiality, and auditability is often daunting to manage.

  - For high-volume applications that do not change often, the price structure of public clouds means greater costs than keeping them in-house.

  - The cost of moving off a public cloud to another service is high.

  - For small organizations and start-ups, a public cloud-only strategy is often justified. Service offerings such as AWS are improving continuously, which can extend this boundary to some mid-sized organizations.

  - A better solution for most IT organizations is to place a private cloud in a co-location facility physically close to the public cloud(s). This approach is used very successfully by customers of mega-data-centers such as Equinix, IO and SuperNap. This is a type of hybrid cloud solution (see Strategy 3).

- Strategy 3: Hybrid cloud:

  - Many cloud services are available. Many are specific to a vertical. Some need to be close to the Internet or the industrial Internet. Some will need to be close to the data center to integrate with in-house data, while some will need to be close to other cloud solutions.

  - For a significant majority of mid-sized and larger users, hybrid cloud is the only strategic choice.

  - Common orchestration and automation processes that will allow movement of applications and data from one cloud to another are a strategic imperative.
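The observation under Strategy 1 that data can take days to move between sites can be checked with a back-of-the-envelope calculation. The Python sketch below is illustrative only: the data volume, link speed, and the 70% effective-throughput factor are assumptions chosen for the example, not measurements from the source.

```python
def transfer_days(data_tb: float, link_gbps: float, efficiency: float = 0.7) -> float:
    """Estimate days needed to copy `data_tb` terabytes over a `link_gbps` link.

    `efficiency` is an assumed fraction of the nominal line rate that is
    actually achieved after protocol overhead and contention.
    Decimal units are used: 1 TB = 1e12 bytes, 1 Gbps = 1e9 bits per second.
    """
    if data_tb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("data_tb must be >= 0, link_gbps > 0, 0 < efficiency <= 1")
    bits_to_move = data_tb * 1e12 * 8
    effective_bits_per_second = link_gbps * 1e9 * efficiency
    seconds = bits_to_move / effective_bits_per_second
    return seconds / 86_400  # seconds in a day


# Hypothetical case: 100 TB of application data over a dedicated 1 Gbps link.
print(f"{transfer_days(100, 1.0):.1f} days")  # about 13.2 days
```

Under these assumptions even a tenfold faster link still needs more than a day, which is why bursting is realistic mainly for stateless workloads and why placing private infrastructure physically close to the public cloud is attractive.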

Bottom Line: The vast majority of organizations should plan for a hybrid cloud solution with multiple public and private locations. Any proposed strategy for private only or public only should be critically reviewed.

Hybrid Cloud Contenders

The key contenders are:

- Amazon AWS:

  - Public cloud, with AWS Direct Connect for private storage attachment,

  - Automation via APIs with focus on applications,

  - In a "Race to Zero" with Google and Microsoft.

- Google Cloud Platform Live:

  - Public cloud,

  - Focus on mobile & social,

  - Formal announcement of Google Cloud Platform Live November 4, 2014.

- OpenStack:

  - Private cloud, hybrid cloud, used in public cloud,

  - Broad open source ecosystem with momentum,

  - Users often considering OpenStack with Open Compute led by Facebook.

- Microsoft Windows Azure:

  - Public and hybrid cloud,

  - Momentum, especially with Microsoft end-user & enterprise software.

- VMware vCloud Hybrid Service (vCHS):

  - Extended private-to-hybrid cloud,

  - Rebranded as VMware vCloud Air,

  - EMC Federation platform, including EMC Hybrid Cloud & Pivotal.

Storage vendors are making significant investments in storage frameworks to enable storage orchestration and automation. Key storage vendors include:

- Dell Cloud Services,

- EMC Hybrid Cloud,

- HP Hybrid Cloud with HP Helion,

- IBM Dynamic Cloud with ISIS,

- NetApp Workflow Automation (WFA).

The Business Case for Hybrid Cloud

Wikibon has done some initial work on the business case for hybrid cloud. Our initial finding is that 90%+ of the value comes from the business, through faster time-to-deploy and time-to-change, and increased availability of resources to develop new applications. About 10% of the value comes from lower IT costs and in some cases lower IT budgets, particularly from lower OPEX costs for supporting applications.

The importance of this finding is that a drive towards orchestration and automation within a hybrid cloud strategy should agree with and be driven by business strategy. Optimizing for business value will probably lead to an increase in IT spend.

Characteristics of Orchestration & Automation Optimization

A key objective is to remove the islands of IT and provide a common framework with common processes & procedures for private and public cloud environments. Characteristics include:

- The implementation is application centric, focusing on optimizing resources to meet the SLA requirements of each application.

- It is managed by a single cross-functional IT team, optimizing for compute, storage, and network resources.

- This allows the development of Dev/Ops and Cloud/Ops teams that make operational efficiency, automation, and self-tuning part of application design.

- Applications should move towards being able to monitor required SLAs and select resources dynamically to ensure compliance.

- System reliability & availability (RPO & RTO) and backup methods should be applied on an application basis.

- Location of workloads and data should be fungible, but data should be migrated over long distances as little as possible.

- Resource Pools should be created with different performance and reliability characteristics for all resources such as network, storage, and compute.

- Applications should have the ability to dynamically connect and use all network types.
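The characteristics above describe applications that monitor their own SLAs and select from resource pools with different performance levels. A minimal Python sketch of that control loop follows. It assumes a single latency SLA, a hypothetical set of pools, and a simple nearest-rank 95th percentile; a real implementation would draw these values from the orchestration framework's own monitoring and placement APIs, which are not specified in this Alert.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourcePool:
    """A pool with an advertised performance level and price (illustrative values)."""
    name: str
    typical_latency_ms: float
    cost_per_hour: float


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def choose_pool(pools, current, latency_samples_ms, sla_latency_ms):
    """Keep the current pool while the SLA is met; otherwise pick a better one.

    If the observed 95th-percentile latency breaches the SLA, return the
    cheapest pool whose advertised latency meets it. If no pool can meet
    the SLA, fall back to the lowest-latency pool available.
    """
    if percentile(latency_samples_ms, 95) <= sla_latency_ms:
        return current
    compliant = [p for p in pools if p.typical_latency_ms <= sla_latency_ms]
    if compliant:
        return min(compliant, key=lambda p: p.cost_per_hour)
    return min(pools, key=lambda p: p.typical_latency_ms)
```

The design choice worth noting is that the decision is made per application against its own SLA, rather than per island against aggregate throughput, which is the shift this section argues for.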

Assessing the Journey to the Hybrid Cloud - a Storage Perspective

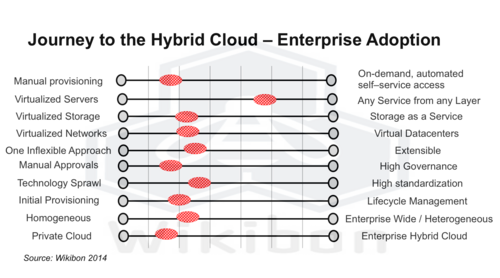

Figure 2 shows the enterprise view of the journey to the hybrid cloud. Using VMware as the cloud model, Figure 2 shows that server virtualization has been very strongly adopted by enterprises. However, other areas of virtualization, orchestration and automation have not been adopted. For some areas such as storage virtualization, VMware technologies such as vSAN and vVols need flash to provide the necessary speed, and need different ways of managing the storage to make it cost effective.

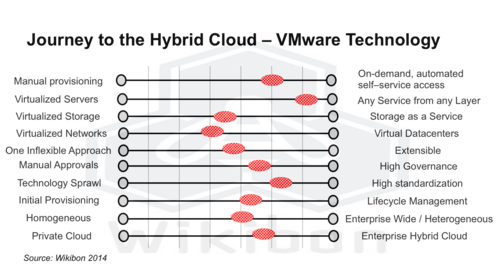

However, Figure 3 shows that VMware has most of the enabling technologies available or is working to deliver them in a consumable fashion.

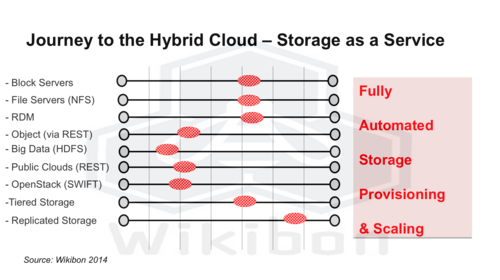

One of the most critical areas of development is storage. Making storage into a service is key to fully automated storage provisioning & scaling. Figure 4 shows the different components necessary for a full storage service. The areas of improvement are object storage, low-cost storage services for Big Data where the application is providing the storage services, and APIs for the management of the public clouds mentioned above, including the OpenStack environment. The OpenStack integration was announced at VMworld 2014.

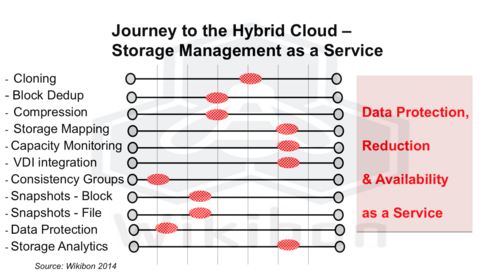

The final area of progress that is required is to enable storage management as a service, as shown in Figure 5. This will allow data protection, data reduction through de-duplication and compression, and availability as a service. One of the areas that needs attention is the ability to provide application-consistent backups within a VM and across applications spanning multiple VMs, so that recoveries are much faster and more reliable. The announcement of vVols at VMworld 2014 is a good step in providing snapshot services, hopefully both in VMware and in attached storage controllers. Also key is providing data protection services by application, so that RPOs and RTOs reflect the requirements of the application (avoiding one size fits all), and so that chargeback and showback can ensure that protection services are applied to meet real business needs. Storage analytics also need to be provided to vCOPS to give storage the fastest "time to innocence" in the case of an application performance issue.

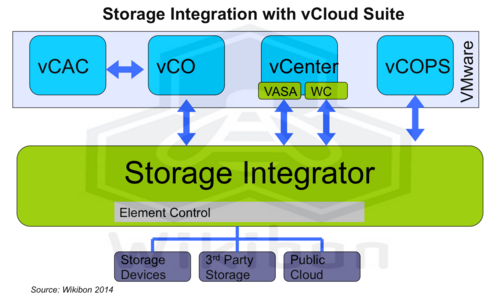

Figure 6 shows the complex set of APIs that enable storage integration between VMware, storage devices and cloud storage. It is vitally important to simplify and enable these, and ensure that storage can perform effectively.

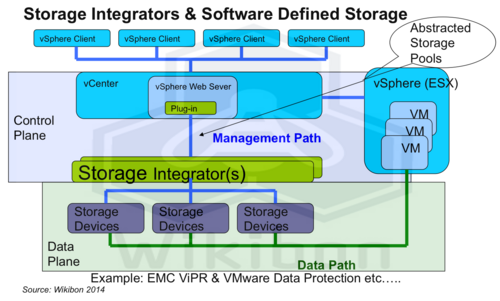

Figure 7 shows the abstraction of storage into storage pools and the separation of the control plane and the data plane (through services such as ViPR and VMware data protection). This is the final step in enabling storage to fully participate in a software-led infrastructure and software-led storage.

Overall, storage services are one of the most complex areas of enablement on the journey to the hybrid cloud.

Action Item: The journey to orchestration and automation with a hybrid cloud environment is a journey to increase the value of applications to the end-user. As a consequence, this project requires:

- Business-led Initiatives,

- An Application Focus,

- Clear Stages,

- Clear Goals for each stage, and,

- Constant Re-planning.

In future work, Wikibon will explore and assess the storage frameworks that are available in the market today. In our preliminary work, we have found that for many enterprise customers, the VMware framework is the most advanced and builds on existing VMware software infrastructure. The EMC Hybrid Cloud initiative is a pioneering framework which supports the VMware vCloud Air initiative very well and is also designed to connect to OpenStack, AWS, and Windows Azure. The combination of the VMware vCloud and EMC Hybrid Cloud initiatives is well documented and functionally rich, and has a significant lead over other combinations at the moment.

However, it is early days in this journey, and other cloud storage vendors have interesting architectures and approaches that should be considered.

There is a philosophy from all the storage vendors that significant function and control should reside in the SAN and in the storage array controller in particular. Figures 6 and 7 show the complexity and APIs that are required for this type of solution. There are other approaches from established and stealth software-defined storage vendors that are taking a more system-centric or server-centric view, using hyper-converged infrastructure. Again, it is early days in the journey to orchestration and automation within the hybrid cloud and there is room for more than one solution.
