

Storage Peer Incite: Notes from Wikibon’s November 9, 2010 Research Meeting


The title of this week's newsletter, "Backup is Broken," is purposely controversial, and you may say, "not in my shop it isn't." But if your shop is using traditional backup -- taking each application offline at night in turn to back up all associated data to tape -- then whether you know it or not, your backup is broken and in increasingly desperate need of replacement.

There are many reasons for this, several of which are discussed in the articles below. The overarching reason is that business now runs 24X7, budgets are under unrelenting pressure, and small differences in efficiency, whether in bottom-line expense control, capex savings, or, perhaps most important, customer service in a virtual, world-wide marketplace, can become a huge competitive advantage or disadvantage, depending on which side of the coin you are on. In that world, companies can no longer afford the luxury of traditional backup that interrupts operations. Above all, they cannot afford the long, slow restorations after a data loss, when someone has to drive out to the off-site storage facility, hunt for the right tape, mount it, and run a long, temperamental restoration.

And they cannot afford to lose hours of data in a disaster. Today all companies are data driven. Even a small data loss can create a huge business disaster. Data must be protected effectively and continuously, and at a much lower cost. For these business reasons, traditional data backup is broken, regardless of how well it is running in your shop. Your job, and the business you work for, may depend on you realizing that. G. Berton Latamore, Editor


Backup is Broken: Data Protection as a Service is the Fix

In and of itself, backup delivers no tangible business value. At the same time, businesses are clamoring for more demanding recovery time objective (RTO) and recovery point objective (RPO) capabilities, while CEOs are mandating that IT become more pay-as-you-go (i.e., IT-as-a-service). New technology enablers such as virtualization and cloud computing, combined with continuous data protection, are changing the way organizations think about backup and recovery. Backing up a server is no longer the most viable model; instead, we are moving to a model in which we protect users and services. This vision requires new thinking about data protection as a service, where all the associated components of that service (e.g., the server image, database, and services) are protected as a whole.

This was the general message put forth at the November 9, 2010 Wikibon Peer Incite Research Meeting, where storage industry executives and practitioners gathered to discuss how virtualization and cloud computing are changing backup and recovery. The Wikibon community was joined by:

- Justin Bell, Network Engineer at Strand Associates,

- Mark Hadfield, CEO of nScaled, Inc., a cloud service provider, and,

- Jim McNiel, CEO of FalconStor Software, a data protection technology company.

Why is Backup Broken?

According to FalconStor’s Jim McNiel, there are four main issues that underscore the challenges practitioners face with backup today:

- Data growth – structured data is growing at 25% per annum and unstructured data well over 60%; IT budgets just can’t keep pace.

- Server virtualization – concentrates compute and I/O onto fewer, already constrained physical resources. This exacerbates the problem of getting data out of the server to a safe place in a timely manner. At the same time, while virtualization ‘breaks’ backup, it also affords an opportunity to recover data in new ways.

- CAPEX and OPEX pressures – IT needs to do more with less and yet we have many organizational silos related to data protection – groups that look after high availability, data protection, backup/recovery, archive, disaster recovery, etc., which often need to be rationalized.

- Complexity of storage solutions – SATA, SAS, SSD, public and private clouds, different data protection software models, incompatible forms of deduplication, etc. Customers are confused about how to reach an efficient operating model, especially since many data protection decisions are made by individual departments.
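To make the growth figures above concrete, here is a small Python sketch that compounds the quoted annual rates (25% for structured data, 60% for unstructured) over five years and computes how long each takes to double. Because unstructured data is described as growing "well over" 60%, the unstructured numbers are a floor rather than an estimate.

```python
import math

# Annual growth rates quoted by McNiel; unstructured is "well over" 60%, so it is a lower bound
GROWTH_RATES = {"structured": 0.25, "unstructured": 0.60}

def growth_multiple(rate: float, years: int) -> float:
    """Capacity multiple after compounding `rate` annually for `years` years."""
    return (1 + rate) ** years

def doubling_time(rate: float) -> float:
    """Years for capacity to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)

if __name__ == "__main__":
    for kind, rate in GROWTH_RATES.items():
        print(f"{kind:>12}: x{growth_multiple(rate, 5):.1f} in 5 years, "
              f"doubles every {doubling_time(rate):.1f} years")
```

At these rates structured data roughly triples in five years (about 3.1x), while unstructured data grows more than tenfold and doubles roughly every 18 months, which is the gap flat IT budgets cannot close.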

In addition, according to McNiel, the backup window is becoming extinct, and as organizations move to a 24X7 mandate, protecting data has become increasingly difficult.

A Practitioner’s Perspective

Justin Bell is a network engineer at Strand Associates. In a previous Peer Incite meeting, Bell described how Strand, a 380-person architectural firm with several remote offices, was struggling with backup and recovery. The firm was backing up onto tape, and admins or even architects were often responsible for the daily backup and, when required, recovery. Not only was this sapping productivity in the remote offices, but the organization was exposed to catastrophic data loss: 60% of its backups failed, and it had no disaster recovery strategy.

Bell architected a remote office backup solution using an iSCSI SAN in the remote offices and continuous data protection (CDP) based on a FalconStor write splitter. Strand takes hourly snapshots using CDP, which has protected the company’s data and lowered its RPO to one hour. Remote sites push their changes nightly to Strand’s Madison headquarters, where 35 days of snapshots are maintained for the remote sites and one year’s worth of data is stored off-site on tape. We asked Bell whether he agreed that his backup had been broken, and where it stands today. Bell agreed that his backup was broken before the new architecture. He likened it to an old car moving down the highway with smoke pouring out the back end: the car still moved, but it really wasn’t adequate. Backup at Strand today is much better: “not broken, but there’s room for improvement,” according to Bell. He cited two areas for improvement:

- Automated disaster recovery (i.e., push-button DR) to bring up a DR site, which is currently lacking;

- Easier operation, so that less-trained staff can perform recoveries.
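Strand's one-hour RPO follows directly from its snapshot cadence: recovering from the newest snapshot before a failure loses at most one interval of writes. The Python sketch below illustrates this and a simple age-based retention prune. Treating the 35-day figure as "every hourly snapshot is kept for 35 days" is an assumption for illustration; the article does not say how Madison thins snapshots. Note also that, presumably, for a loss of an entire remote site the nightly push to headquarters, not the hourly snapshot, would bound the data lost.

```python
from datetime import datetime, timedelta

SNAPSHOT_INTERVAL = timedelta(hours=1)  # Strand's hourly CDP snapshots
RETENTION = timedelta(days=35)          # assumption: each snapshot is kept for 35 days

def snapshot_schedule(start: datetime, end: datetime) -> list[datetime]:
    """Snapshot times from start through end, one per SNAPSHOT_INTERVAL."""
    times, t = [], start
    while t <= end:
        times.append(t)
        t += SNAPSHOT_INTERVAL
    return times

def data_at_risk(snapshots: list[datetime], failure: datetime) -> timedelta:
    """Span of writes lost when recovering from the newest snapshot taken before the failure."""
    last_good = max(s for s in snapshots if s <= failure)
    return failure - last_good

def prune(snapshots: list[datetime], now: datetime) -> list[datetime]:
    """Keep only snapshots that are still inside the retention window."""
    return [s for s in snapshots if now - s <= RETENTION]

snaps = snapshot_schedule(datetime(2010, 10, 1), datetime(2010, 11, 9, 12))
print(data_at_risk(snaps, datetime(2010, 11, 9, 11, 59)))  # 0:59:00
print(len(prune(snaps, now=datetime(2010, 11, 9, 12))))    # 841
```

Whatever the failure time, the data at risk stays below the snapshot interval, which is why shortening the interval (or moving to continuous protection) is the lever for a tighter RPO.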

From Bell’s standpoint, his backup is not broken, especially since Strand was coming from such an exposed position, but it needs improvement. Bell’s situation is similar to that of many companies: backup and recovery are working well and don’t need to be overhauled. That said, organizations aggressively pursuing virtualization have many opportunities for improvement.

The Cloud Service Provider Perspective – Do More With Less

Mark Hadfield, CEO of nScaled, didn’t agree that backup is broken, because many customers are successfully doing backup and recovery today and protecting their businesses. However, according to Hadfield, what people are realizing is that virtualization and cloud computing allow organizations to do more with less, and that disaster recovery, backup, and moving data off-site are intrinsically linked. In addition, consolidation through virtualization, while cost-effective, also consolidates risk, increasing the importance of DR and off-site backup.

Hadfield also cited Amazon as a highly visible company that is driving down the cost of computing. His contention, frequently echoed in the Wikibon community, is that many organizations are sick and tired of managing their own data centers and are looking for integrated alternatives that carry less overhead and are more cost-effective.

Do Clients Need to Rip and Replace?

If backup is indeed broken or in need of a facelift, do customers have any alternative to an expensive rip-and-replace? Wikibon member John Blackman was unable to join the call but provided some excellent perspective as a seasoned IT practitioner.

First, Blackman’s perspective is that if backup is broken, it’s broken at the SLA level. End customers expect no data loss, but they don’t necessarily want to pay for that service level. When they find out how much zero data loss will cost, they say no, and then they scream when they lose data. Part of the problem is that IT and IT management are not setting the right expectations and clearly defining them for the management team.

Blackman contends that organizations need to educate, communicate, and collaborate and accept that the ‘way it’s been done for years’ isn’t necessarily the best way. This is not a technical issue but one of overcoming cultural tensions, politics, and setting proper business goals.

The Wikibon community generally agrees with Blackman that rip and replace is not a viable option. “Clients need to work within their organizations to clearly define, communicate, and manage the service levels and customer expectations,” says Blackman. “Backup systems are only products, and when you think in terms of service – solution – components – products – vendors,” the equation is simplified. Specifically, the service drives the solution, the solution drives the components, the components drive the products, and the products determine the vendor.

Data Protection as a Service

Increasingly, IT organizations are streamlining processes in an effort to reduce costs and do more with less. A well-defined, menu-based set of data protection services that are communicated clearly throughout the organization is fundamental to delivering more efficient IT and ultimately IT-as-a-service.

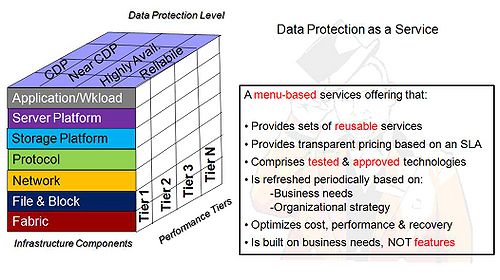

Figure 1 shows a model of data protection as a service, a modified version of a model John Blackman put forth to the Wikibon community in 2008. The model has three dimensions: infrastructure components, data protection levels, and performance tiers. The business determines the service level it requires, and the intersection of the three dimensions determines the solution offered. Pricing is a function of the cost at that intersection.
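The three-dimensional model can be expressed as a simple service catalog: every combination of component, protection level, and performance tier is one menu item with its own price. The Python sketch below assumes a hypothetical pricing rule (a per-GB base cost for each component multiplied by a factor for each protection level and tier); the dimension values and all prices are invented for illustration and are not taken from Figure 1.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical dimension values and cost factors (illustration only)
BASE_COST_PER_GB = {"server image": 0.05, "database": 0.10, "file share": 0.03}
PROTECTION_LEVELS = {"nightly backup": 1.0, "hourly snapshots": 2.5, "continuous (CDP)": 4.0}
PERFORMANCE_TIERS = {"tier 3": 1.0, "tier 2": 1.5, "tier 1": 2.5}

@dataclass(frozen=True)
class Offering:
    component: str
    protection: str
    tier: str
    monthly_cost_per_gb: float

def build_catalog() -> dict[tuple[str, str, str], Offering]:
    """Enumerate every intersection of the three dimensions and price it."""
    catalog = {}
    for comp, prot, tier in product(BASE_COST_PER_GB, PROTECTION_LEVELS, PERFORMANCE_TIERS):
        cost = BASE_COST_PER_GB[comp] * PROTECTION_LEVELS[prot] * PERFORMANCE_TIERS[tier]
        catalog[(comp, prot, tier)] = Offering(comp, prot, tier, round(cost, 4))
    return catalog

def quote(catalog: dict, component: str, protection: str, tier: str, gigabytes: float) -> float:
    """Monthly charge for the service level a line of business selects."""
    return round(catalog[(component, protection, tier)].monthly_cost_per_gb * gigabytes, 2)

catalog = build_catalog()
print(len(catalog))                                                   # 27
print(quote(catalog, "database", "continuous (CDP)", "tier 1", 500))  # 500.0
```

The point of the structure is that the business picks a row from a fixed menu and sees its price, rather than requesting a one-off technology solution.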

By offering business lines data protection as a set of services rather than as technology choices, IT organizations can reduce complexity and build a portfolio of reliable, trusted infrastructure to support applications. This approach can eliminate one-off solutions and minimize data protection stovepipes. In this model, backing up servers is replaced by providing data protection services that align with business objectives.

Action item: CIOs are increasingly frustrated by infrastructure that lives in silos. Data protection strategies that lead with technologies rather than services are becoming outdated and will increase costs. By 2015, 70% of IT organizations will be delivering some form of IT-as-a-service, where self-service, pay-as-you-go transparency, and simplified IT are fundamental underpinnings of the technology group. Virtualization and cloud computing offer new opportunities, and organizations should begin to architect data protection as a service, building on the themes of doing more with less and overall IT-as-a-service strategies.

To the Cloud and beyond: Service-led backup strategies

Data growth is now one of the top three issues for CIOs. This growth is coming mainly not from structured data, which is growing at 15-25% per year, but from unstructured data, which is growing at least twice as fast. The value of unstructured data is mixed; some is very important, but most can be considered digital trash.

Traditional backup mechanisms based on incrementals and full backups were designed for small amounts of valuable data and are not holding up in this new world. Backup windows and complex recovery mechanisms are the main issues. The problems with traditional systems can be ameliorated with a short-term roadmap to reducing backup pain by using disk-to-disk backup and data de-duplication. However the fundamental problems with traditional approaches cannot be fixed within this model, and in general continued investment in such models should be held to a minimum.

Wikibon sees three key components of a successful backup strategy:

- Move progressively towards a continuous backup model, which relies on consistent snaps and continuous background movement of data to on-site and offsite data repositories.

- Migrate toward a services model for backup and recovery, where the level of recovery is determined within a self-service model by the line of business, and the IT infrastructure resources for service delivery are spread over internal and external (cloud) services.

- Increase the value of data by integrating backup and recovery services with archiving and data mining services, utilizing a common metadata model.

The benefits of this approach are:

- Backup windows are eliminated.

- Service levels (how often data is backed up, how quickly it can be restored, and how efficiently historical data can be accessed) can be dynamically dialed up or down according to business requirements.

- Cloud service providers can be used for some parts of the strategy, with on-site services providing the higher performance components of backup and recovery.

- The lines of business have a direct stake in setting service and budget levels appropriate to their mission.

- The extended use of data for archiving and data mining adds business value to backup and recovery.

Action item: CIOs and CTOs need to work within their organizations to clearly define, communicate, implement, and manage continuous-backup service levels and customer expectations. At the same time, business managers need to understand the requirement to invest in recovery services that will be used infrequently but are an essential part of their own customer service commitments.

The roadmap to eliminating backup pains

The broken state of backup refers mainly to the pain associated with backup and recovery processes. Backup administrators complain about two main points. The first is the “backup window,” the time available to perform and finish the backup, which is tied directly to the backup process itself. The second is the efficiency of the restore and recovery process, as measured by recovery time and recovery point objectives. While recovery point objectives (RPO) and recovery time objectives (RTO) are essential to overall business performance, recovery remains an occasional process. The pain related to the backup window, on the other hand, is a daily occurrence that directly affects IT staff productivity and the efficiency of primary business services.

The roadmap to eliminating backup window pain starts with small, non-disruptive changes to the backup infrastructure. Tape backup processes can be significantly accelerated by introducing disk into the mix. Adding a virtual tape library (VTL) or a deduplication target with a file interface can seamlessly turn the disk-to-tape backup process into a disk-to-disk backup, which can accelerate backups enough to keep them within the backup window. This addresses the back end of the backup process and the overflow of backup data, and it ameliorates the effect of the shrinking backup window, but it still does not address the cause.
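Why disk helps, and why it only treats the symptom, comes down to one ratio: the time a full backup takes is the data volume divided by the sustained throughput. The Python sketch below uses hypothetical figures (a 10 TB full backup, an 8-hour window, 120 MB/s for tape versus 400 MB/s for a disk target); real effective rates depend heavily on source servers and the network.

```python
def backup_hours(data_tb: float, throughput_mb_s: float) -> float:
    """Hours to stream a full backup of data_tb terabytes at a sustained rate (decimal units)."""
    return data_tb * 1e12 / (throughput_mb_s * 1e6) / 3600

def fits_window(data_tb: float, throughput_mb_s: float, window_hours: float) -> bool:
    """True if the full backup finishes inside the available window."""
    return backup_hours(data_tb, throughput_mb_s) <= window_hours

# Hypothetical figures: 10 TB full backup, 8-hour nightly window
for target, rate in {"tape": 120, "disk (D2D)": 400}.items():
    hours = backup_hours(10, rate)
    print(f"{target}: {hours:.1f} h, fits 8 h window: {fits_window(10, rate, 8)}")
```

Faster disk pulls the job back inside the window (about 23.1 hours versus 6.9 hours here), but because the numerator keeps growing with data volume, the window will be exceeded again; only changing how data is captured removes the window itself.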

To address the cause of the backup window, we need to change the front end of the backup process in one of two ways. The first is to redirect the source of the backup to the SAN infrastructure rather than backing up the server infrastructure. Performing backups from clean snapshots or copies of the data removes the impact on the servers and primary service applications and greatly reduces the backup window. You still have to consider network traffic during the backup process, but this approach isolates backup operations from the production environment. This method also accelerates backups further by running over the SAN rather than the LAN.
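Backing up from a snapshot works because a snapshot can be taken almost instantly and then read at leisure while production keeps writing. The toy Python class below sketches one common technique, copy-on-write, on an in-memory list of blocks: nothing is copied when the snapshot is taken, and a block's old contents are preserved only when production first overwrites it. This is an illustration under simplifying assumptions, not how any particular array implements snapshots (some use redirect-on-write instead).

```python
class Volume:
    """Toy block volume with copy-on-write snapshots (illustrative only)."""

    def __init__(self, nblocks: int) -> None:
        self.blocks: list[bytes] = [b""] * nblocks
        self.snapshots: list[dict[int, bytes]] = []  # per snapshot: block index -> preserved contents

    def snapshot(self) -> int:
        """Take a snapshot instantly; no data is copied yet."""
        self.snapshots.append({})
        return len(self.snapshots) - 1

    def write(self, idx: int, data: bytes) -> None:
        """Production write: preserve the old block for snapshots that have not saved it yet."""
        for saved in self.snapshots:
            saved.setdefault(idx, self.blocks[idx])
        self.blocks[idx] = data

    def read_snapshot(self, snap_id: int, idx: int) -> bytes:
        """Read a block as it was when the snapshot was taken."""
        return self.snapshots[snap_id].get(idx, self.blocks[idx])

    def backup_from_snapshot(self, snap_id: int) -> list[bytes]:
        """What a backup job would copy: a consistent point-in-time image."""
        return [self.read_snapshot(snap_id, i) for i in range(len(self.blocks))]

vol = Volume(4)
vol.write(0, b"v1")
snap = vol.snapshot()
vol.write(0, b"v2")       # production keeps writing after the snapshot
vol.write(2, b"new")
print(vol.backup_from_snapshot(snap))  # [b'v1', b'', b'', b'']
```

The backup job sees a consistent image while the live volume moves on, so production never has to be quiesced for the length of the copy.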

To cross the last chasm toward next-generation backup and recovery, and to eliminate the backup window completely, we need to turn the batch backup job into a continuous or near-continuous process that has little or no impact on the server and application infrastructure. This is especially true in a virtual server environment, where CPU and network resources are highly utilized. This continuous data protection (CDP) method also has the benefit of reducing RPO from 24 hours to near-zero data loss. In addition, CDP can transform the restore process into a recovery process that meets very aggressive RTOs, measured in minutes instead of hours or days.
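The core idea of CDP can be sketched as a time-ordered journal: a write splitter (like the one in Strand's design) sends every write to the CDP target as well as to primary storage, and the target can rebuild the volume as of any moment by replaying the journal up to that time. The `CdpJournal` class below is a hypothetical toy that works on whole blocks with numeric timestamps; real products add application-consistency markers, journal compaction, and much more.

```python
import bisect
from dataclasses import dataclass, field

@dataclass
class CdpJournal:
    """Toy CDP target: receives a copy of every write from a write splitter."""
    base: dict[int, bytes] = field(default_factory=dict)             # baseline image: block -> data
    times: list[float] = field(default_factory=list)                 # write timestamps, ascending
    writes: list[tuple[int, bytes]] = field(default_factory=list)    # (block, data) per write

    def record(self, ts: float, block: int, data: bytes) -> None:
        """Journal a split-off write; writes must arrive in timestamp order."""
        if self.times and ts < self.times[-1]:
            raise ValueError("writes must be journaled in time order")
        self.times.append(ts)
        self.writes.append((block, data))

    def image_at(self, ts: float) -> dict[int, bytes]:
        """Rebuild the volume as of time ts by replaying every write made at or before ts."""
        image = dict(self.base)
        for block, data in self.writes[: bisect.bisect_right(self.times, ts)]:
            image[block] = data
        return image

journal = CdpJournal(base={0: b"a", 1: b"b"})
journal.record(10, 0, b"a2")
journal.record(20, 1, b"corrupt")   # e.g. a bad write or malware at t=20
print(journal.image_at(19.9))       # {0: b'a2', 1: b'b'}
```

Recovering to just before the bad write loses only the writes after that point, which is why CDP can push RPO toward zero instead of back to the last nightly job.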

Action item: Evaluate your current backup processes and determine how well they align with your business goals; in many cases, small changes can make a big difference. If you can tolerate hours of data loss and restore speed is not critical, changing to disk-to-disk (D2D) backup may be enough. If you have aggressive RPO and RTO requirements, take a serious look at redesigning your backups and implementing a CDP solution; it doesn’t have to be expensive to work.

If Backup Is Broken, When Will You Fix It?

For most organizations, data growth, combined with profound shifts in how applications are run and how business processes are supported, ensures that if backup is not currently broken it will be soon. Increasingly, businesses operate around the clock, around the globe and through the weekend, so that the traditional method of taking applications off line at night and on weekends to perform incremental and full backups is nonviable.

With the migration of production applications to a virtual-server environment, gone are the days when taking down a single server took down a single application. Virtualization enables 10-to-1 or even 20-to-1 server consolidation, as well as application mobility from one physical server to another. Together, these dramatically increase the chance of affecting critical applications when performing offline server backups.

Applications no longer exist as standalone pillars. Composite applications pull information from a wide variety of individual applications to enable a business process. Disrupting the availability of any single application to perform a backup may impact the ability to deliver on the entire business process.

While cost is a factor, cost alone will be insufficient at many organizations to justify a redesign of data protection and application or business-process recovery. Few organizations can accurately report the cost of backup and recovery, and few will allocate scarce resources to measure it. In addition, organizations have made substantial investments in equipment, media, people, training, and documentation, and many, if not most, will delay change by applying upgrades and short-term bolt-on fixes to the areas of greatest pain while preserving well-understood, if increasingly inadequate, approaches. A complete redesign of data protection requires substantial capital investment, which, though it may have a quick ROI, may be politically unpopular, particularly in a time of constrained IT budgets and limited staff.

Two approaches, which are sometimes combined, make it possible to actually measure the cost of data protection and application recovery going forward and to overcome the combined forces that are weakening current backup approaches. The first is the point-in-time snapshot: an application-consistent view of the data that can be created in seconds and used as the source for remote replication or traditional backup. These snapshots provide roll-forward and roll-back capabilities at the application level and, if done correctly, at the business-process level. The second approach is replication of de-duplicated data to the cloud. This gives organizations near-infinite scalability in the off-site data repository while controlling the bandwidth costs of replication. Combined, these approaches offer scalable data protection without significantly impacting application availability.
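Replicating de-duplicated data controls bandwidth because only chunks the off-site repository has never seen cross the WAN. The Python sketch below illustrates the idea with fixed-size 4 KiB chunks identified by SHA-256 digests; the chunk size, the `CloudRepository` class, and the `replicate`/`restore` helpers are hypothetical, and many real products use variable-size chunking instead.

```python
import hashlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size

class CloudRepository:
    """Stand-in for an off-site store that keeps each unique chunk only once."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}        # SHA-256 hex digest -> chunk contents
        self.recipes: dict[str, list[str]] = {}   # snapshot name -> ordered chunk digests

def replicate(name: str, data: bytes, repo: CloudRepository) -> int:
    """Send only chunks the repository lacks; return the number of bytes sent."""
    sent, recipe = 0, []
    for i in range(0, len(data), CHUNK_SIZE):
        chunk = data[i:i + CHUNK_SIZE]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in repo.chunks:   # only new chunks cross the WAN
            repo.chunks[digest] = chunk
            sent += len(chunk)
        recipe.append(digest)
    repo.recipes[name] = recipe
    return sent

def restore(name: str, repo: CloudRepository) -> bytes:
    """Reassemble a replicated snapshot from its chunk recipe."""
    return b"".join(repo.chunks[d] for d in repo.recipes[name])

repo = CloudRepository()
monday = b"".join(hashlib.sha256(str(i).encode()).digest() for i in range(512))  # 16 KiB sample
tuesday = monday[:4096] + b"x" * 4096 + monday[8192:]                           # one chunk changed
print(replicate("mon", monday, repo))   # 16384
print(replicate("tue", tuesday, repo))  # 4096
```

After the first full copy, each day's replication costs roughly the size of what actually changed, while every day's snapshot remains individually restorable.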

Action item: Before developing a new architecture for data protection and business process recovery, the business unit, risk management, security, compliance, and information technology teams must all sit together at a table to ensure that their combined needs and concerns are addressed. Because business units ultimately pay for IT services, in most organizations IT alone cannot push through a redesign, nor will potential cost savings always justify the investment. IT can, however, become the catalyst for a corporate-wide decision by providing accurate estimates of the organization's cost of downtime and a date-certain by which current processes will no longer be able to ensure application or business-process recovery at all. For many organizations, that day is today.

Creating Dependable Protection - 8 Ways to Fix CDP

Traditional backup is broken in the sense that it’s old, outdated technology and we can do better. CDP is a better option, but it still has some growing to do. Vendors need to take the time to look at it from the practitioner's point of view and make CDP more automatic and simple. This is what we need:

•Give me a cost benefit - I know everyone asks for this, but I really do need to do more with less. So make the technology affordable, put it on commodity hardware, help me reduce my overhead, and give me clear business benefits.

•Give me self-service - Why do I need to restore files or e-mails for users if they can do it themselves? I’d like my users to be able to right-click a file or folder and see the previous versions so they can perform a restore themselves.

•Give me a way to verify backups - If I’m going to trust you with my data, and most likely my job, then I need a way to verify that every block is exactly where it should be. Even better, check every block on a regular basis and tell me ahead of time that my recovery is going to work.

•Give me visibility into my backup/data protection - I need a report to show my boss and my auditors how we are protecting our data, how often it’s replicated, and how often we fall out of our parameters. I need to be able to select the data I want and customize my reports. If I could get all the performance and backup data in a database and make my own report, it would be even better.

•Give me proactive alerting - I need to know if my mirror is out of sync, if I’ve missed a snapshot, or if my off-site replication had a problem. I need to know immediately and I need to know what steps to take to resolve the situation. I can’t tell my users or my management that we have CDP and hourly snapshots if there is a chance we don’t.

•Give me a button to activate DR mode - If my primary environment goes down, I need to get my fail-over up and running quickly. Mount up the mirrors of my system as VMs on the CDP hardware, and do it fast. I don’t want to specify which servers to recover; rather, I want to specify which services I need to recover. I need the recovery to be smart enough to know that if I need my Web app recovered it needs to make sure the back-end SQL server is up, too. I want this process to be easy enough for my intern to do.

•Give me a good way to seed the initial replication - I need to have this system up and running shortly after I purchase it. I can’t wait for two weeks to replicate my data the first time, so I need something I can copy my data to and ship to my remote site. I don’t care what it looks like, or how fast it is, as long as I don’t have to pay too much for it and it’s easy to ship.

•Give me the cloud - I need a site to send my off-site copies to, and I don’t want to manage the infrastructure. I need all the functionality mentioned above, I need five nines of uptime, I need good technical support, and I need it to be cheap.
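The "button to activate DR mode" in the list above is, at its core, a dependency problem: the operator names the services to recover, and the tool works out which VMs those services need and brings them up dependencies first. The Python sketch below uses the standard library's `graphlib.TopologicalSorter` to compute that order; the service map is a hypothetical example, and a real tool would then boot each VM and wait for it to pass health checks before starting the next.

```python
from graphlib import TopologicalSorter

# Hypothetical service map: each service lists the services it depends on
DEPENDENCIES = {
    "web-app": {"sql-server", "file-server"},
    "sql-server": {"domain-controller"},
    "file-server": {"domain-controller"},
    "email": {"domain-controller"},
    "domain-controller": set(),
}

def recovery_order(requested: set[str], deps: dict[str, set[str]]) -> list[str]:
    """Return every service needed for the requested ones, dependencies first."""
    needed, stack = set(), list(requested)
    while stack:                       # collect the transitive closure of dependencies
        svc = stack.pop()
        if svc not in needed:
            needed.add(svc)
            stack.extend(deps.get(svc, ()))
    graph = {svc: deps.get(svc, set()) & needed for svc in needed}
    return list(TopologicalSorter(graph).static_order())

print(recovery_order({"web-app"}, DEPENDENCIES))
```

Asking for the Web app yields the domain controller first, the SQL and file servers next, and the Web app last, while services nobody asked for (email here) are left alone. That is the "specify services, not servers" behavior the wish list describes.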

Action item: Simplify and demystify the backup and recovery process by delivering data protection as a service. Rethink the way you deliver your products and take a data protection as a service mentality. Help me deliver data protection as a service to my business units and users.
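The request for a way to seed the initial replication comes down to simple arithmetic: pushing a full copy over the WAN takes the data size divided by the bandwidth actually available. The Python sketch below uses hypothetical figures (5 TB, a 50 Mb/s link, 80% of it usable for replication) to show how quickly the first copy approaches the two weeks a practitioner cannot afford to wait.

```python
def transfer_days(data_tb: float, link_mbit_s: float, utilization: float = 0.8) -> float:
    """Days to push data_tb terabytes over a WAN link of link_mbit_s megabits per second,
    assuming only `utilization` of the link is available for replication."""
    bits = data_tb * 1e12 * 8
    return bits / (link_mbit_s * 1e6 * utilization) / 86400

print(f"{transfer_days(5, 50):.1f} days")  # 11.6 days
```

Shipping a seeded copy replaces that initial transfer; afterwards only changed data has to cross the link.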

Time to Get Rid of the Backup Window

A big challenge for IT administrators today is the growth of the backup window driven by the increasing volume of data being created. At the same time, because of server virtualization and a global workforce, systems have fewer and fewer slow periods, and downtime is anathema to business. We can’t continue to operate with a big open backup window; we need to eliminate it altogether.

The goal of reducing or eliminating the backup window is not new. When Fibre Channel fabrics were first rolling out ten years ago, they promised to move the bandwidth hog of backup off the shared LAN and onto the SAN. It takes more than new infrastructure to conquer this issue.

Using the storage capabilities of snapshots and replication (with de-duplication) allows the backup challenge to be removed from the server and managed centrally by storage. Previous attempts to fix backup ran the same processes over faster and more efficient technologies. There is a limit to how far the old ways can go; instead of continuing to make incremental changes, backup needs a revolutionary approach that eliminates the backup window entirely. The challenges of data growth and 24x7x365 business will only become more demanding the longer you put off transforming your backup process.

Action item: Organizations need to eliminate the backup window. How? By replacing the old method of backing up the host with efficient snapshots served from the SAN rather than the server infrastructure, so that backups read copies of the data instead of the primary copy. Alternatively, instead of a batch backup job, use continuous replication, which places a far lighter load on the primary workload than a full batch job.