Originating Authors: David Floyer and Nick Allen

This research is the next phase of the Wikibon community's original work on automated tiered storage (ATS). Sub-LUN automated tiering for Tier 1 and Tier 1.5 class storage arrays is a relatively new technology (generally available since mid-2011), and Wikibon analysts are publishing this work to help practitioners understand the state of the technology, its benefits and risks, and the vendor landscape. This research was first published on December 2, 2011, and was updated on December 21, 2011 as a result of feedback from vendors and the Wikibon community (see Footnote 1 for more details).

Wikibon found that there are very different philosophies in implementing ATS. There are two major approaches and a hybrid:

- The IBM Easy Tier implementation emphasizes automating all the storage volumes in a storage array with ATS, with few controls that allow the storage administrator or database administrator (DBA) to fine-tune how specific volumes are managed. The fundamental philosophy is "The system can do a better job than IT professionals in setting up the automation environment, and can change faster than IT professionals as the environment changes."

- EMC's FAST VP, as another example, implements similar levels of automation, but places much greater emphasis on giving IT professionals fine-grained control over specific data volumes. The fundamental philosophy is that the lines of business require the ability to ensure that the performance of specific high-value applications meets the required service level agreement (SLA).

- HP's Adaptive Optimization started life in the automation camp, but has since added more "knobs" to assist storage and database administrators.

This study centered on the requirements of ATS on Tier 1 and Tier 1.5 arrays; it assumed that the workloads supported by these arrays are large-scale, very high-value applications with aggressive, consistent performance and very demanding RPO and RTO requirements. Wikibon found that the level of maturity of the ATS implementations from all vendors was not sufficient for full automation of all volumes within an array under these workload assumptions. In addition, the storage administration/DBA processes for managing automation in an ATS environment are not mature, and carry a low but real risk of unforeseen dysfunction causing a dramatic failure to meet SLAs. Wikibon recommends the more pragmatic approach of ensuring quality of service by using, if necessary, fine controls to isolate specific volumes.

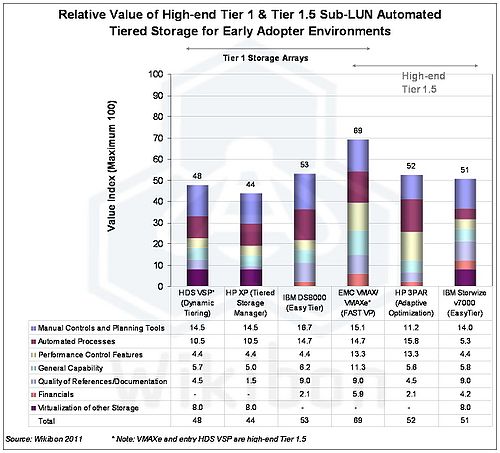

As a result of these findings, Wikibon gave heavier weightings to ATS functionality and services that address the planning and setup of the initial environment and that help provide management, automation, monitoring and remediation of the ATS environment to ensure application SLAs are met. Other ATS functionality and financials were given lower weightings. The Wikibon analysis found that the EMC FAST VP implementation, with its greater fine-control capabilities, is more robust for early adoption in workloads typically found on Tier 1 and high-end Tier 1.5 arrays. The ROI is good.

Users are cautioned that functionality in this market is changing rapidly; as the technologies mature and organizations become more experienced with ATS systems, the value contribution of individual attributes will likely change. Wikibon expects that greater maturity and confidence in ATS automation will lower the cost of implementation and increase the value through lower CAPEX and reduced risk. Wikibon expects that the ROI will improve from good to excellent. Wikibon will continue to monitor the adoption of ATS and will analyze additional workloads and technologies. In particular, we plan to track the business case for ATS adoption as the market matures.


Introduction to Automated Tiered Storage

Automated Tiered Storage (ATS) is the ability to automatically place data on the correct storage technology to provide the necessary performance at minimum cost. An ATS implementation is built on virtualized pools of storage and allows data to move between these pools based on analysis of usage metadata collected over time. ATS is in general more accurate than traditional storage caching approaches, and provides more savings. The resources required to drive an ATS implementation are greater than those required for caching, but ATS will in general offer greater control and higher efficiency. Many installations will use both approaches in tandem, using caches (both RAM and flash) for volumes with very high locality of reference, and ATS for the majority of volumes.
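To make the idea of usage-driven placement concrete, the following is a minimal sketch, assuming equal-sized extents (sub-LUN chunks), a simple IO count per extent as the only usage metadata, and tiers listed fastest first. The names `Tier` and `place_extents` are hypothetical; this is not the algorithm used by FAST VP, Easy Tier or any other product discussed here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Tier:
    name: str
    capacity_extents: int  # number of extents (sub-LUN chunks) the tier can hold


def place_extents(io_counts: dict[str, int], tiers: list[Tier]) -> dict[str, str]:
    """Map each extent to a tier, hottest extents to the fastest tier.

    io_counts: hypothetical extent id -> IOs observed in the monitoring window.
    tiers: ordered fastest first (e.g. SSD, then SAS/FC, then SATA).
    """
    # Rank extents by observed activity, most active first.
    ranked = sorted(io_counts, key=io_counts.get, reverse=True)
    placement: dict[str, str] = {}
    start = 0
    for tier in tiers:
        # Fill this tier with the next-hottest extents, up to its capacity.
        for extent in ranked[start:start + tier.capacity_extents]:
            placement[extent] = tier.name
        start += tier.capacity_extents
    if start < len(ranked):
        raise ValueError("tiers do not have enough capacity for all extents")
    return placement


counts = {"e1": 900, "e2": 40, "e3": 5, "e4": 300, "e5": 1}
tiers = [Tier("SSD", 1), Tier("SAS", 2), Tier("SATA", 10)]
print(place_extents(counts, tiers))
```

Here the busiest extent lands on SSD, the next two on SAS, and the rest on SATA. Because only extents move rather than whole volumes, the same ranking can be re-run periodically as the usage metadata changes.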

The Business Case for Automated Tiered Storage

The initial implementations of automated tiered storage (ATS) were volume-based: whole volumes were moved to a different tier of storage according to the usage characteristics of the volume. This had some take-up in enterprise data centers, but because moving a whole volume degraded performance, the transfer was almost always performed as a background task during periods of low storage activity. The introduction of sub-volume (often called Sub-LUN) ATS has significantly increased interest in ATS, as now only small blocks of data need to be moved. This allows data to be moved dynamically at any time, has enabled much stronger business cases, and has resulted in many more ATS deployments. The current business case for tiered storage is simple: reduced cost of storage and reduced cost of managing storage. The long-term expectation is that risk can also be reduced as the products improve and users become comfortable with the new business processes required to manage tiered storage. The estimates of savings are:

- Cost of Storage (CAPEX): By allowing data to be migrated down according to actual usage, ATS places data optimally in the storage hierarchy, so less SSD and high-performance disk (SAS or FC) is required. Wikibon has found savings ranging from 15% to 30%, depending on how aggressive the implementation is. Wikibon believes that for early adopters, an expectation of a 20% reduction in acquisition cost over the life of an array is reasonable. (Note: significant benefit, in the range of 15-30%, can be derived from virtualized storage pools and thin provisioning. ATS and virtualized storage pools/thin provisioning may be implemented together, but the benefits of storage pools and thin provisioning can be obtained independently of ATS and should not be included in the ATS business case. Virtualized storage pools are a prerequisite for sub-LUN ATS.)

- Cost of Storage Management (OPEX): Fewer storage administrators and database administrators are required. Wikibon has found savings ranging from 10% to 20%, depending on how aggressive the ATS implementation is. Because a different kind of storage management is required to monitor risk, Wikibon believes that for early adopters, an expectation of 10% is reasonable. As ATS matures, this should rise to 20% and higher.

- Reduced Risk: Automation of the complex task of storage placement should in theory help reduce the risk of missing service level agreements with end users, and improve availability. However, at this early stage of ATS development and adoption, Wikibon does not believe that it is reasonable to include risk reduction in a business case. Risks could actually increase if adequate implementation safeguards and the correct monitoring capabilities are not in place.
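The savings estimates above can be combined into a simple business-case calculation. The sketch below uses the early-adopter rates quoted in the text (20% CAPEX, 10% OPEX) as defaults and, following the text's advice, adds nothing for risk reduction. The function name `ats_savings` and the dollar inputs are hypothetical; `capex` is assumed to be measured after any thin-provisioning savings, which the text says belong outside the ATS case.

```python
def ats_savings(capex: float, opex: float,
                capex_reduction: float = 0.20,
                opex_reduction: float = 0.10) -> dict[str, float]:
    """Estimate ATS savings over an array's life.

    capex, opex: hypothetical lifetime acquisition and management costs.
    Defaults are the early-adopter expectations quoted in the text.
    Risk reduction is deliberately excluded from the business case.
    """
    capex_saved = capex * capex_reduction
    opex_saved = opex * opex_reduction
    total_saved = capex_saved + opex_saved
    return {
        "capex_saved": capex_saved,
        "opex_saved": opex_saved,
        "total_saved": total_saved,
        # The blended figure depends on how total cost splits between CAPEX and OPEX.
        "tco_reduction_pct": 100 * total_saved / (capex + opex),
    }


# Hypothetical inputs: $1.0M acquisition cost and $0.6M management cost.
print(ats_savings(1_000_000, 600_000))
```

With these hypothetical inputs the blended reduction is 16.25%; raising `opex_reduction` to 0.20, the level the text expects as ATS matures, brings it to 20% for the same inputs.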

The bottom line is that the potential savings are meaningful. Specifically, our research with early practitioners suggests that ATS is an evolutionary technology that will be widely adopted and will shave storage TCO by 20% over a four-year period. Wikibon believes that while early adoption of ATS will be measured, ATS will become widely adopted over the next five years for all classes of workload. As ATS products and data center processes mature, Wikibon believes that risk reduction will become the most significant driver, as automation will lead to higher availability and a better user experience. We caution users, however, to gain experience with ATS before arguing for reduced risk as a near-term business benefit, especially for mission-critical data.

Different Tiers of Storage Arrays

For ATS to be ready for prime time, it must be able to deal with all workload types, including the most challenging workloads. Wikibon has defined types of storage arrays based on their ability to meet different performance and availability workload requirements. The types of storage arrays defined are:

- Tier 1 Storage Arrays: Tier 1 storage arrays support very large-scale, very high-value applications that have aggressive, consistent performance and very demanding RPO and RTO requirements: “Storage that takes a lickin' and keeps on tickin'.” This includes not only the characteristics of the storage array, but also the services, infrastructure, history and ethos of the array vendor. A more formal discussion can be found in a separate Wikibon posting. Tier 1 storage arrays include EMC VMAX, IBM DS8000, HP XP, and Hitachi VSP.

- Tier 1.5 Storage Arrays: Tier 1.5 storage arrays support large-scale applications that require good, consistent performance in mixed workloads and high availability. Tier 1.5 arrays lie between Tier 1 arrays and modular Tier 2 arrays. There are a large number of Tier 1.5 storage arrays from all the major vendors, but few approach the robust availability characteristics of true Tier 1 arrays. For this analysis we include IBM Storwize V7000, EMC VMAXe, HP 3PAR and low-end Hitachi VSP configurations as Tier 1.5.

- Tier 2 Storage Arrays: Tier 2 storage arrays are modular with a dual-controller architecture, and have less automated functionality than Tier 1 or Tier 1.5 storage arrays. They are significantly cheaper on a cost/GB basis from a CAPEX perspective.

- Tier 3 Storage Technology: Tier 3 storage is used mainly for backup and archiving, with low access requirements. There is a broader set of requirements for this tier, including immutability, long-term retention and distribution of data. It is an area of significant change.

Just to be clear, there are two types of storage tiers: there are tiers of storage arrays (Tier 1 through 3), and tiers within the storage arrays (SSDs through SATA). In this study Wikibon is researching the ATS capabilities of Tier 1 arrays and the high-end of Tier 1.5 arrays to confirm that ATS is ready for prime time, i.e., will work with the most challenging workloads. The list of high-end arrays selected to make that assessment includes:

- EMC VMAX/VMAXe*, using FAST VP ATS software (Tier 1 array)

- Hitachi VSP*, using Dynamic Tiering ATS software (Tier 1 array)

- HP XP, using Tiered Storage Manager ATS software (Tier 1 array)

- IBM DS8000, using Easy Tier ATS software (Tier 1 array)

- HP 3PAR, using Adaptive Optimization ATS software (High-end Tier 1.5 array)

- IBM Storwize V7000, using Easy Tier ATS software (High-end Tier 1.5 array)

- *Note: Both EMC VMAXe and low-end HDS VSP configurations are positioned at the high end of Tier 1.5. From a software perspective, both products implement ATS identically to their Tier 1 brethren. As such, we have grouped these products for simplicity of presentation.

The requirements for the lower end of Tier 1.5 and Tier 2 are likely to be different, and could be simpler and more automated. Wikibon expects to address ATS on more storage arrays at a later time.

ATS Evaluation Criteria & Methodology

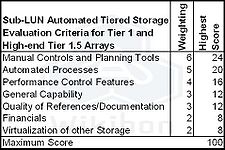

Wikibon looked at the ATS functionality for Tier 1 and high-end Tier 1.5 arrays required for "prime time" mission-critical workloads. Wikibon talked extensively to Wikibon members that had implemented or were implementing ATS for mission-critical and other workloads. Executives in data centers with Tier 1 storage are generally cautious about introducing new technologies, but Wikibon found a high level of activity and an expectation that they would deploy ATS. Wikibon discussed with its members which functionality requirements matter most at this early adopter stage. Table 1 gives the evaluation criteria functionality groups that Wikibon developed and used in this analysis, and their relative weightings. Because ATS is in the early adopter phase, high weightings were given to planning capabilities, control mechanisms, and the ability to automate processes. Less weighting was given to general capabilities and financials. Table 1 also shows the maximum score available within each criterion, with the overall maximum score set at 100.

Wikibon expects that as products mature and understanding increases with adoption, the evaluation criteria and weightings will change. The requirements and criteria for ATS on lower storage array tiers will be different, and it would be misleading to apply the findings of this study to other workloads/storage tiers.

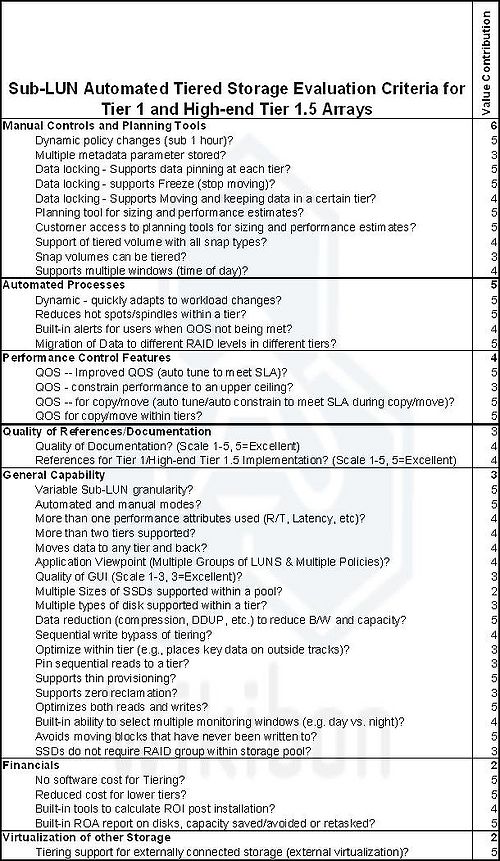

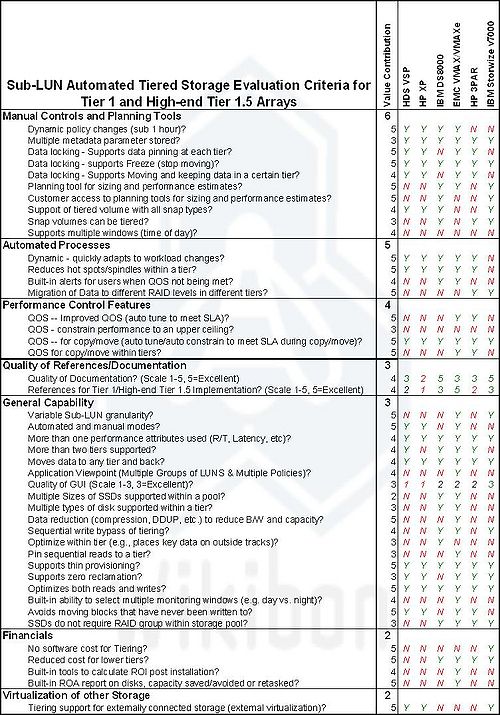

Within each evaluation criterion are specific functionalities, shown in Table 2 together with their relative importance within the criterion on a five-point scale. All of these functionalities are assessed for each storage array as available/not available (Y/N), except for one question on quality of GUI, which is assessed on a three-point scale, and two questions on quality of documentation and references, which are assessed on a five-point scale.

The average weighted score is then summed for each evaluation criterion, and then normalized to a maximum overall score of 100 (See Footnote 2 for additional details on the methodology).
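The scoring steps can be sketched in code. This is one plausible reading of the methodology, assuming that each item's score is normalized by its scale (Y/N, three-point or five-point), averaged within a criterion using the 1-5 importance weights, scaled to that criterion's maximum points, and summed so that a perfect array scores 100. The names `Item`, `criterion_score` and `overall_score`, and all weights in the example, are hypothetical; they are not Wikibon's Table 1 or Table 2 values, and Footnote 2 may describe the method differently.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Item:
    importance: int          # relative importance within the criterion, 1-5
    score: float             # points awarded (1 or 0 for Y/N questions)
    scale_max: float = 1.0   # 1 for Y/N, 3 for the GUI question, 5 for docs/references


def criterion_score(items: list[Item], max_points: float) -> float:
    """Importance-weighted average of normalized item scores, scaled to max_points."""
    total_importance = sum(it.importance for it in items)
    weighted = sum(it.importance * it.score / it.scale_max for it in items)
    return max_points * weighted / total_importance


def overall_score(criteria: list[tuple[list[Item], float]]) -> float:
    """Sum criterion scores, then normalize so a perfect array scores 100."""
    max_total = sum(max_points for _, max_points in criteria)
    raw = sum(criterion_score(items, max_points) for items, max_points in criteria)
    return 100 * raw / max_total


# Hypothetical criteria and weights, for illustration only.
example = [
    ([Item(5, 1), Item(3, 0)], 40),                # e.g. planning
    ([Item(4, 1), Item(2, 2, scale_max=3)], 35),   # e.g. control, incl. GUI question
    ([Item(3, 4, scale_max=5), Item(1, 1)], 25),   # e.g. documentation/references
]
print(round(overall_score(example), 2))  # prints 77.36
```

Normalizing by `scale_max` keeps a five-point documentation question from outweighing a Y/N functionality question simply because its raw scale is larger.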

Key Findings

The results for the early adoption stage of ATS implementation are shown in Figure 1.

Wikibon performed a detailed analysis of vendor specification sheets and requested and conducted interviews with all the vendors included in this study. HP is the only vendor that has not yet responded on the HP XP arrays. The XP array is OEMed from Hitachi, and although it is very similar to the HDS VSP, we expect there are differences, especially as the HP XP array does not support 2TB 3.5-inch SATA/SAS hard disk drives; it supports the higher-cost, higher-speed 900GB 6G 10K SAS hard disk drives, which are less suitable for the lowest tier. For the moment, Wikibon has assessed the HP XP as identical to the HDS VSP except for multiple tier support, quality of documentation and references. In addition, Wikibon conducted in-depth interviews with IT practitioners across a wide range of Tier 1 and Tier 1.5 solutions. This research is an ongoing effort that began in 2010; we continue to solicit feedback from the Wikibon community on this topic and will update the research, as warranted, as vendors respond and practitioners provide peer review.

The key findings of this study include:

- There is a wide range of quality of implementation in the marketplace. EMC was an early Tier 1 ATS developer, and the company has a strong lead at the moment.

- There is a difference of philosophy, illustrated by the approach of EMC with FAST VP, which has a large number of “knobs” that can be used to control ATS production performance. The IBM Easy Tier approach, on the other hand, is to provide a “black box” with very few knobs. Hitachi and HP (3PAR and XP) have taken a mixed approach, meaning they have fewer controls than the EMC VMAX, but more than IBM Easy Tier.

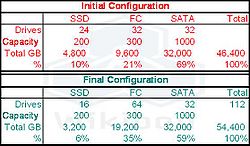

- The disadvantage of a fully autonomic approach is that the planning phase is far more difficult to execute today. Early users indicate that much trial and error is necessary to understand and optimize ATS. Footnote 3 below gives an example of the difference between the initial configuration that was implemented and the final configuration that was actually required to meet the SLAs. While the processes for assessment have improved, this example shows that careful planning and testing are still required.

- The planning phase is the most critical today to reduce risk and ensure ROI. The benefits of ATS come from using more SATA drives, but users indicate that getting the balance right between the two or three tiers is complex. It is easy to under-scope the amount of high-performance disk, and care should be taken in configuring the initial system. Over time, changing the ratio to include more SATA is likely to be optimal.

- Over time, as ATS algorithms improve and organizations tune their processes, increased automation (i.e., more dependence on the system) will become less risky, more acceptable and, eventually, the norm.

- Tools to map today's environment to the future state are lacking, and specifying the system before installation is often highly reliant on vendor services. In addition, post-installation performance monitoring tools are rudimentary.

- Missing from all the ATS solutions analyzed was the ability to manage quality of service by limiting the maximum IO resources available to volumes and applications. This will become increasingly important as flash storage takes over more of the IO activity from high-performance disk. IO tiering will need to be part of the automation process.

- Despite certain deficiencies and concerns, users are exceedingly enthusiastic about ATS and expect to consistently increase adoption. Clear ROI is being realized and costs are being cut by 20-30%.
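The planning finding about "skew" can be illustrated with a small calculation. The sketch below assumes equal-sized extents and defines skew, for illustration only, as the share of total IO served by the busiest fraction of capacity; the function name `io_share_of_top` and the sample IO counts are hypothetical. It shows why a flat skew, as in the Footnote 3 case, leaves a small SSD tier absorbing little of the IO and pushes most of the load onto high-performance disk.

```python
from __future__ import annotations


def io_share_of_top(io_per_extent: list[int], capacity_fraction: float) -> float:
    """Fraction of total IO served by the busiest `capacity_fraction` of extents.

    Assumes equal-sized extents, so extent count stands in for capacity.
    """
    ranked = sorted(io_per_extent, reverse=True)
    n_top = round(len(ranked) * capacity_fraction)
    total = sum(ranked)
    return sum(ranked[:n_top]) / total if total else 0.0


skewed = [1000, 500, 100, 50, 20, 10, 5, 5, 5, 5]  # hypothetical: a few hot extents
flat = [100] * 10                                   # hypothetical: evenly spread IO
# Suppose the SSD tier holds 10% of capacity.
print(io_share_of_top(skewed, 0.10), io_share_of_top(flat, 0.10))
```

With a 10% SSD tier, the skewed profile sends roughly 59% of the IO to SSD, while the flat profile sends only 10%, leaving 90% for the disk tiers. Under-scoping high-performance disk in the flat case is exactly the kind of planning error the users described.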

There were two major concerns expressed by the users that Wikibon interviewed. They were:

- Ensuring that specific workloads would get the performance they required;

- Avoiding the scenario of a “rogue” workload causing the ATS software to make poor decisions.

The users we interviewed had clear examples of things that had gone wrong. In one case the initial configuration chosen for the array was too heavy on SSDs and too light on high-performance disk. The planning tools had not picked up the fact that the performance “skew” was very flat, because of aggressive periodic movement of less active Tier 1 array data to Tier 2 arrays. The details are shown in Table 4 in Footnote 3 below. In another case, a report run once a month was able to commandeer the majority of the available storage resources and impact mission-critical services.
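One generic way to stop a rogue job such as the monthly report from commandeering an array is to cap the IO rate of its volume, which is the quality-of-service limit the key findings note was missing from the solutions analyzed. The sketch below uses a standard token bucket; it is not a feature of any product in this study, and the class name `IopsLimiter` and its `max_iops` and `burst` parameters are hypothetical.

```python
import time


class IopsLimiter:
    """Token-bucket cap on one volume's IO rate (hypothetical sketch).

    Tokens refill at max_iops per second, up to `burst`; each IO spends one.
    """

    def __init__(self, max_iops: float, burst: float, clock=time.monotonic):
        self.rate = max_iops
        self.capacity = burst
        self.tokens = burst
        self.clock = clock
        self.last = clock()

    def try_io(self) -> bool:
        """Return True if an IO may be issued now, False if it should wait."""
        now = self.clock()
        # Refill for the elapsed time, never beyond the burst allowance.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A hypothetical controller would call `try_io()` before dispatching each IO from the report volume and queue the request when it returns False, so the report still completes but cannot starve mission-critical volumes. The injectable `clock` makes the limiter easy to test deterministically.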

One ATS piece missing for all vendors except IBM is tooling that potential users can run themselves to predict performance, independent of vendor support. This is a clear sign that these tools are still in “learning mode”, and that configuring solutions is still something of a black-box art.

However, these initial problems should not overshadow the fact that customers were using ATS, were using it in 100% automation mode, and were expecting to use it more aggressively. They were pleased with the progress and savings they had achieved, and believed they could get more from broader adoption of ATS technology.

Advice for Practitioners

Automated tiering will increasingly evolve and become a staple of high-end SAN systems. Early adopters should consider the following:

- Planning is the most critical phase and where most practitioners of mission critical apps said they wished they'd been more diligent.

- Although initial configurations should be lower cost, don't expect to save all the money immediately. As experience is gained with ATS, there will be benefits from greater efficiencies as less frequently accessed data placement is optimized. Future upgrades will be with higher-density lower-cost storage, as older volumes trickle down over time to the lowest tier.

- Pay attention to processes, specifically how to prevent rogue jobs from dominating the system and when to intervene and override the automated system.

- Monitor the performance of ATS systems against established SLAs and set new baselines as warranted.

- Don't set overly aggressive objectives. Vendor claims may ultimately be realized, but be cautious with out-of-the-box expectations.

- Negotiate for a little extra high-speed disk and SSD as a hedge and quid pro quo for the initial investment in ATS software. This will reduce the risk from initial variance in data "skew". The system can be balanced later by adding mainly SATA drives. Work closely with your vendor on optimal configurations.

- While automation is good, beware of too much "black box". For application data typically found on Tier 1 arrays, Wikibon believes that knobs are required during the current early phase of ATS adoption. For less mission-critical data, Wikibon believes the “knob-free” approach is good, and over time it will be preferable for most workloads as the algorithms used in ATS software from all vendors improve. The benefits of automation will be lower storage administration costs, lower risk and a better return on investment.
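The advice to monitor ATS systems against established SLAs and reset baselines can be sketched as a simple check on latency samples. This sketch assumes the SLA is expressed as a 95th-percentile response time and flags drift when latency exceeds a stored baseline by more than 25%; both the percentile and the tolerance are illustrative assumptions, not Wikibon recommendations, and the function name `check_sla` is hypothetical. Collecting the samples from an array's quality of service metrics is vendor-specific and not shown.

```python
from __future__ import annotations

import statistics


def check_sla(latencies_ms: list[float], sla_p95_ms: float,
              baseline_p95_ms: float | None = None,
              drift_tolerance: float = 0.25) -> list[str]:
    """Compare observed 95th-percentile latency with an SLA target and a baseline."""
    # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
    observed = statistics.quantiles(latencies_ms, n=20)[-1]
    alerts = []
    if observed > sla_p95_ms:
        alerts.append(f"SLA breach: p95 {observed:.1f} ms > target {sla_p95_ms:.1f} ms")
    if baseline_p95_ms is not None and observed > baseline_p95_ms * (1 + drift_tolerance):
        alerts.append(f"Drift: p95 {observed:.1f} ms vs baseline {baseline_p95_ms:.1f} ms")
    return alerts


# Hypothetical samples: within the 10 ms SLA, but well above a 4 ms baseline.
print(check_sla([4.0, 5.0, 6.0] * 40, sla_p95_ms=10.0, baseline_p95_ms=4.0))
```

Separating the SLA check from the drift check lets staff spot ATS placement changes that are degrading performance before they become SLA breaches, and decide whether to intervene or simply accept a new baseline.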

Automated tiering will increasingly be adopted in workloads running on Tier 1 and Tier 1.5 arrays, especially as flash permeates the storage hierarchy. There are major differences in vendor philosophies and degrees of manual control that are allowable and achievable today. ATS is ready for prime time workloads, but be prudent in planning and understanding process changes that will be required to succeed. This dynamic will be constantly fluctuating based on maturity of ATS software, user experience and a number of other factors including process maturity, business mandates and adoption of IT-as-a-Service. Wikibon practitioners are encouraged to check in periodically, ask a question of the community and share best practice advice.

Action Item: CXOs and senior storage executives should understand that automated tiered storage is cost-effective and will be widely adopted for all workloads, and should include ATS in RFPs for Tier 1 and high-end Tier 1.5 storage arrays. In the current early adopter phase, the most important selection criteria should be the vendor's “how-to” expertise, the ability of the vendor to analyze the current environment (and to allow the storage staff to analyze it using the same tools), the ability of the vendor to supply tools that retain the confidence of mission-critical users, and the vendor’s willingness to propose and guarantee the results. The key IT process that should be reviewed in depth before deployment is how storage service level agreements are monitored against the quality of service metrics available from the storage system. The processes to remediate out-of-line situations should be thoroughly tested before going live.

Footnotes:

Footnote 1: As a result of feedback from vendors (particularly HP), we were able to get additional documentation and feedback about the automated tiering technologies, and in particular better insight into their tuning capabilities. As a result of feedback from the community, we added a section on documentation and references, as these were seen as important selection criteria. As a result of these amendments, all the figures and tables have been updated. Readers interested in the differences can find them in the history tabs for the article and the images.

Footnote 3:

Table 4 illustrates the challenge of correctly configuring the array with the optimum balance of storage in the different tiers. In this case the initial configuration chosen and implemented for the array was too heavy on SSDs, and too light on high performance disk. The planning tools had not picked up the fact that the performance “skew” was very flat, because of aggressive periodic movement of less active Tier 1 array data to Tier 2 arrays. The final configuration shows what was required and implemented after the initial configuration failed to meet the performance requirements. The vendor made the changes without additional charges.