What Storage Folks do not get about Fusion-io

From Wikibon

Fusion-io and other vendors who provide flash storage through the PCIe interface are often criticized by storage companies as having ‘no legs,’ implying that they will be taken out by larger players (either a storage company or a processor technology company) as the market matures. Storage folks in particular stress that data should be shared across all servers so that storage resources can be pooled.

What many storage pundits don’t get, or at least won't admit, are the following key points:

- Storage sucks. Sorry, but it's the truth. Storage is painfully slow: the system sits waiting on spinning, mechanical rust. This arcane approach, which has been in place for more than five decades, is forced on systems architects and application developers. Access to mechanical storage is so, so slow, measured in milliseconds. Even flash storage accessed through the ancient channel and storage-protocol paradigm is still very slow compared with processor and RAM speeds.

- From a systems perspective, the only good thing you can say about storage is that it's persistent; apart from very expensive battery-backed RAM, it is the only persistent technology. This fact has allowed the storage industry to extract rents from users for decades.

- For two decades, function has migrated away from the server, out over the channel, to the storage array. Much of this has been for practical reasons related to sharing data. Sharing is good, but the fundamental constraints of the spinning-disk architecture remain. There has been substantial innovation aimed at mitigating the slowness of mechanical spinning disks, but at best it has been palliative.

- Systems and application architects take a different view. For the first time in history, they have the opportunity to place a persistent resource on the “right” side of the channel. Flash storage sitting next to the processor can be regarded as an extension of RAM, with the huge advantage that, like disk, it is persistent, and it is far less expensive than RAM (e.g., six times cheaper; see footnote 1). That dramatically simplifies architectures, operating systems, database systems, and even hypervisors. Technical developers are architecting systems where the active data is stored on flash next to the processors. When that data is needed, the processing is moved to the node where the data is stored. It’s a shared-nothing model that hashes data and spreads it across nodes, and it introduces a new model of shared data. Of course, a traditional slow, high-density, low-cost storage network will remain for inactive data. Traditional storage architectures won't sit idle; they will improve, but not at a disruptive pace. Within a few years most new high-performance systems will have large amounts of flash storage next to the processors: a tablet on steroids.
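The shared-nothing idea in the last point, hashing data across nodes and moving the processing to the node that holds the data, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the node names, the `Node` class and the `owner`/`put`/`compute` helpers are hypothetical, a dictionary stands in for node-local flash, and placement uses a plain modulo hash. Production systems typically use consistent hashing or a partition map so that adding a node does not reshuffle most keys.

```python
import hashlib

# Hypothetical node names, for illustration only.
NODES = ["node-a", "node-b", "node-c", "node-d"]


def owner(key: str, nodes: list[str] = NODES) -> str:
    """Return the node that owns `key` under simple modulo hash partitioning."""
    # Use a stable hash (not Python's per-process randomized hash()) so every
    # node computes the same placement for the same key.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]


class Node:
    """A shared-nothing node: it only touches data in its own local store."""

    def __init__(self, name: str):
        self.name = name
        self.local_flash: dict[str, int] = {}  # stands in for node-local flash

    def run(self, key, fn):
        # Processing happens where the data lives; no shared storage access.
        return fn(self.local_flash.get(key))


cluster = {name: Node(name) for name in NODES}


def put(key: str, value: int) -> None:
    # Each key is stored on exactly one node, chosen by its hash.
    cluster[owner(key)].local_flash[key] = value


def compute(key: str, fn):
    # Ship the function to the owning node instead of pulling the data to us.
    return cluster[owner(key)].run(key, fn)


put("user:42", 21)
print(compute("user:42", lambda v: v * 2))  # 42
```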

Is Facebook a Bellwether or an Outlier?

The first designers of these systems are the social media giants, who have found that systems with traditional SAN architectures cannot provide the power and flexibility they need to grow. They simply don’t do mega-Web scale. While not everyone agrees, Wikibon believes that Web 2.0 leaders are a harbinger of future system designs.

As Little’s Law implies (the average number of requests in a system equals their arrival rate multiplied by the average time each one spends there, L = λW), system architects look for the queues in any system and apply technology to reduce the bottlenecks. Fusion-io, and perhaps some other vendors in this space (e.g., Oracle), have it right. They understand that it’s not about hardware; rather, the secret is in the IP that allows systems to read and write directly from the processor to flash in a single pass, without the cumbersome multi-phase commits required by traditional storage, file system and database protocols.
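To make the Little’s Law argument concrete, the sketch below applies L = λW and its rearrangement λ = L / W. The latencies are illustrative assumptions (5 ms for a mechanical disk access, 0.1 ms for flash next to the processor), not measurements from the source, and the function names are hypothetical.

```python
def outstanding_requests(arrival_rate_per_s: float, latency_s: float) -> float:
    """Little's Law, L = lambda * W: average number of requests in the system."""
    return arrival_rate_per_s * latency_s


def max_throughput(outstanding: float, latency_s: float) -> float:
    """Rearranged, lambda = L / W: completions per second at a fixed concurrency."""
    return outstanding / latency_s


# Illustrative latencies (assumptions, not measurements).
DISK_LATENCY_S = 0.005    # 5 ms mechanical disk access
FLASH_LATENCY_S = 0.0001  # 0.1 ms flash next to the processor

# Requests that must be in flight to sustain 10,000 I/Os per second.
for name, w in [("disk", DISK_LATENCY_S), ("flash", FLASH_LATENCY_S)]:
    print(f"{name}: {outstanding_requests(10_000, w):.1f} outstanding")

# A single thread issuing one request at a time (L = 1).
for name, w in [("disk", DISK_LATENCY_S), ("flash", FLASH_LATENCY_S)]:
    print(f"{name}: {max_throughput(1, w):,.0f} I/Os per second")
```

Under these assumed numbers, cutting W by a factor of 50 shrinks the queue by the same factor at a given load (50 outstanding requests versus 1), or raises single-threaded throughput by that factor (200 versus 10,000 I/Os per second). This is why architects attack the slowest queue first.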

A leading example is Fusion-io’s Virtual Storage Layer (VSL), which resembles the IBM mainframe MVS (Multiple Virtual Storage) architecture. Essentially, this resource creates 2TB blocks of virtually addressable data, meaning you can design terabytes of address space that scale to incredible levels (i.e., address-space limitations are blown away). This is achieved with a combination of flash controller architecture and server software that allows multiple virtual address spaces to be mapped onto a log-structured flash memory. This allows the processor to store data 'synchronously' to flash: once the write completes, the data is guaranteed to be in persistent flash.
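The mapping of a large, sparse virtual address space onto a log-structured medium can be illustrated with a toy model. This is a hypothetical sketch, not Fusion-io’s VSL: the `LogStructuredStore` class and its methods are invented for illustration, a Python list stands in for flash, and erase blocks, garbage collection and crash recovery are not modeled. It shows the two properties the paragraph relies on: writes are appends rather than in-place overwrites, and only addresses that have actually been written consume any mapping state, so the virtual address space can be far larger than the physical log.

```python
class LogStructuredStore:
    """Toy store mapping a sparse virtual address space onto an append-only log.

    Hypothetical illustration only; it does not model VSL internals, flash
    erase blocks, garbage collection, or crash recovery.
    """

    def __init__(self, virtual_blocks: int):
        self.virtual_blocks = virtual_blocks    # size of the virtual address space
        self.log: list[tuple[int, bytes]] = []  # append-only log of (vba, data)
        self.map: dict[int, int] = {}           # vba -> index of latest copy in log

    def write(self, vba: int, data: bytes) -> None:
        if not 0 <= vba < self.virtual_blocks:
            raise IndexError("virtual block address out of range")
        # Never overwrite in place: append the new copy, then repoint the map.
        self.log.append((vba, data))
        self.map[vba] = len(self.log) - 1
        # A real device would make the append durable here before returning,
        # which is what allows the write to be acknowledged "synchronously".

    def read(self, vba: int) -> bytes:
        return self.log[self.map[vba]][1]

    def live_ratio(self) -> float:
        """Fraction of log entries still referenced; the rest await reclamation."""
        return len(self.map) / len(self.log) if self.log else 1.0


store = LogStructuredStore(virtual_blocks=2**40)  # huge, sparsely used address space
store.write(7, b"v1")
store.write(2**39, b"far away")
store.write(7, b"v2")   # an overwrite is an append plus a remap
print(store.read(7))    # b'v2'
```

The stale first copy of block 7 stays in the log until a garbage collector reclaims it; `live_ratio` reports how much of the log is still referenced.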

From a software point of view, VSL provides a large virtual resource that enables designers to eliminate 99% of the I/Os. As the saying goes, “the best I/O is no I/O.” The remaining 1% of I/O will go to traditional high-capacity disk storage shared over storage networks within the data center and in the cloud.

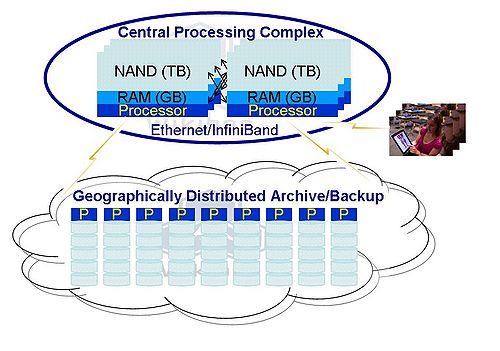

This model has been dubbed "Lean Computing" by Wikibon and is shown in Figure 1. Early development and adoption of this model are likely to be with the Linux operating system and NoSQL databases, or shared-nothing SQL databases such as Clustrix and NimbusDB.

Source: Little's Law and Lean Computing, Wikibon, 2011

Will the Whales Swallow the Minnows?

Companies like Intel are clearly going to get into this space in a big way by embedding such technologies into systems design. How will the likes of Fusion-io compete? The way startups always compete: being first, being fast, innovating, and getting OS suppliers, database vendors, and ISVs to exploit the architecture. Some of the innovations will come from the SSD Form Factor Working Group, with improvements to the PCIe form factor such as multiple power sources, hot-pluggability and dual ports.

Does Fusion-io have a window of opportunity? Absolutely. Intel changes architectures roughly every five years. Fusion-io must move faster… much faster. In the meantime, companies like IBM, HP, Oracle, and Dell are watching Fusion-io very closely and will likely introduce their own architectures over time. Maybe IBM's PCM (phase-change memory) technology will even make it out of the laboratory some day.

The bottom line is that Wikibon believes this trend is highly disruptive and game-changing with respect to systems design. Fusion-io is uniquely at the forefront of this sea change and has a big lead on competitors. Is that lead insurmountable? No, but it is substantial, and the key for Fusion-io is execution. Its announced IPO will, we believe, sharpen that execution and place increased (good) pressure on an already talented team. Competition in the marketplace confirms Fusion-io’s vision and increases, rather than decreases, its value.

Action Item: New system architectures are emerging and while shared storage models will remain, new thinking about systems design is presenting radical opportunities for application designers to deliver order-of-magnitude increases in performance and application functionality. Fusion-io is leading the way, and observers should pay close attention to its moves. While execution will ultimately decide Fusion-io’s fate, its technical vision is spot on for cloud-scale applications.

Footnotes: 1. See Figure 3 in "Little's Law and Lean Computing".