Quieting Noisy Neighbors In Cloud Services

From Wikibon

Storage Peer Incite: Notes from Wikibon’s December 18, 2012 Research Meeting


"Noisy neighbors," applications that put large demands on the server, storage, database or network, negatively impacting the performance of other vital applications that share those resources, have been a problem both inside corporate datacenters and, in particular, on SaaS and IaaS solutions. Inside the data center, at least IT has some control over the situation. In a multi-tenancy environment, where the problem comes from some other entity sharing the service, IT can only complain to the service provider. This has been a major reason that companies do not entrust Tier-1 applications to public cloud services.

On Tuesday, December 18, 2012, Matt Wallace, Director of Cloud Architecture at co-location and IaaS provider ViaWest, dropped by the Peer Incite meeting to discuss his company's experiences and specifically how SSD array maker SolidFire provides a unique solution to the "noisy neighbor" problem.

ViaWest was originally a co-location service provider that has evolved to also offer IaaS solutions in the Western United States. It has major data centers in four Western states and is just opening a data center outside Las Vegas.

As a result it offers an unusual mix of services. It remains a major co-location service provider. It also provides traditional outsourcing services via the Cloud in which it runs dedicated hardware/software combinations in its data centers for individual customers. And it provides a full IaaS solution based on multi-tenancy of shared hardware, software, and network infrastructure.

It uses a variety of hardware including, on the storage side, EMC VNX dedicated arrays for individual customers, NetApp and 3PAR. Its internal networking is 10 Gig Ethernet using Cisco and Arista gear with multiple short links to provide very high speed data access. Its data centers are highly virtualized, using mostly VMware with some other hypervisors in specific areas mostly for individual clients.

Noisy Neighbors

ViaWest has pretty much solved the problem of network overloads with 10 Gig Ethernet. However, it realized the impossibility of guaranteeing consistent QoS for high-performance, high-volume applications when using traditional HDD arrays, even in a dedicated environment. That sent Wallace on a quest for a better answer among the emerging SSD vendors.

He talked to several, including WhipTail and Pure Storage. Their answer, he said, was to provide large numbers of IOPS and basically hope that things sorted out so that each individual customer got the performance it needed. That was not good enough.

SolidFire stood out because its tool set allows him to allocate a specific number of IOPS to specific customers or applications regardless of the demands of other customers. "With SolidFire the granularity is unique. Nothing else allows you to deliver SLAs in this way."

This eliminates the need for massive over-provisioning, which can actually save money in some situations over using traditional HDD arrays. For instance, he said, he was talking to a ViaWest engineer working with a customer that needs a guaranteed 7,000 IOPS for a 2 Tbyte database. With SolidFire that can simply be dialed in. In that kind of situation, it may well be cheaper to use SolidFire than traditional disks both in total initial cost of the array and in power and cooling and other operational costs.

ViaWest is still testing the SolidFire solid-state array. He said that so far 2-4 milliseconds is the worst performance they have seen on long, high-demand runs. Typically, the array delivers 400-700 microseconds for 75%-90% of all reads and writes. And when the array fills up or slows down, "the way they distribute blocks across the entire array gives amazing scalability by taking advantage of increasing number of nodes." Increasing capacity is as simple as plugging in more storage or adding a node - the system automatically starts using the increased capacity without human intervention or any interruption of operations.

ViaWest is in the process of upgrading to vCloud Director 5.1, which supports storage tiering, as part of its VMware architecture. Wallace anticipates using this capability to create a tier 1 of SolidFire with two lower tiers using traditional HDD arrays, one for all operational data not on SolidFire and the other for archiving.

"The Cloud has many advantages. You avoid CapEx expenditures and can burst usage to support sudden demand increases or grow more slowly as your business grows, without having to guess today what you will need in two or three years," he said. You can react swiftly to sudden changes in the business such as new business opportunities. And now with SolidFire you can have the same kind of scale-on-demand and guaranteed QoS in your Cloud storage that you have with CPUs. The big noisy-neighbor problem that was the major trade-off is eliminated." Bert Latamore, Editor

QOS for IOPS Enables Tier-1 Apps in the Cloud

Tier-1 database application owners have long argued against putting their performance-sensitive applications in a private or public cloud offering. Server and network virtualization and dynamic workload balancers make it relatively straightforward to provide compute and network quality-of-service (QOS) guarantees within a multi-tenant infrastructure.

But, says Matt Wallace, Director of Cloud Infrastructure at ViaWest, who was a guest in Wikibon’s December 18 Peer Incite, cloud-services providers using traditional storage offerings can only meet the QOS IOPS requirements through dedicated storage systems or massive over-provisioning of networked storage. Both of these approaches are expensive, offer no leverage, and fail to provide some of the core requirements to be considered a true cloud offering:

- Self service,

- Scalability,

- On-demand provisioning, and,

- Pay as you go.

Tier-1 applications in public cloud services are particularly challenging, because the application owner has no control of and no visibility into neighbors that might impact storage IOPS performance. Without performance guarantees on an application-by-application basis, noisy neighbors can disrupt application performance. Even when providers offer a guaranteed percentage of available IOPS, this approach only ensures that everyone in the cloud suffers equally from a noisy neighbor.

ViaWest, one of the largest privately-held data center hosting providers in the U.S., has gotten around this challenge by leveraging new technology from SolidFire. The SolidFire solution is much more than blazing performance. As Wallace explained, great performance is available from a variety of all-flash storage array suppliers, such as WHIPTAIL and Pure Storage. However, of the solutions evaluated, only SolidFire had the built-in QoS capabilities, which provided true differentiation.

As anyone who has driven knows, if you slow from 70 miles per hour to 40 miles per hour, it will feel as if you are crawling. Service providers need to be mindful of the negative perception of declining performance, even when performance stays within contracted ranges. QOS management needs to include maximum performance along with guaranteed minimum performance. Without these limitations on available IOPS, applications will do their best to consume all available resources, providing great initial performance that declines as new applications come into the neighborhood or as applications spike.
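The pairing of a guaranteed minimum with an enforced maximum can be sketched as a simple allocator. This is a minimal illustration, not SolidFire's actual algorithm, which the meeting did not describe in detail. The `Volume` fields, the `allocate_iops` function, and all sample numbers other than the 7,000 IOPS database guarantee mentioned earlier are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Volume:
    name: str
    min_iops: int  # guaranteed floor (hypothetical field)
    max_iops: int  # enforced ceiling (hypothetical field)
    demand: int    # IOPS the application is currently trying to drive


def allocate_iops(volumes, capacity):
    """Satisfy every floor first, then share spare IOPS up to each ceiling."""
    if sum(v.min_iops for v in volumes) > capacity:
        raise ValueError("guaranteed minimums exceed array capacity")

    # Step 1: every volume gets its demand, up to its guaranteed minimum.
    grants = {v.name: min(v.demand, v.min_iops) for v in volumes}
    spare = capacity - sum(grants.values())

    # Step 2: work out how much more each volume may still receive
    # before it hits either its demand or its maximum.
    want = {v.name: min(v.demand, v.max_iops) - grants[v.name] for v in volumes}
    active = [name for name, extra in want.items() if extra > 0]

    # Step 3: hand out the spare capacity in equal shares until it runs out
    # or every volume has reached its cap.
    while spare > 0 and active:
        share = spare // len(active)
        if share == 0:
            break
        for name in list(active):
            give = min(share, want[name])
            grants[name] += give
            want[name] -= give
            spare -= give
            if want[name] == 0:
                active.remove(name)
    return grants


if __name__ == "__main__":
    tenants = [
        Volume("tier1-db", min_iops=7000, max_iops=10000, demand=7000),
        Volume("noisy", min_iops=1000, max_iops=5000, demand=50000),
        Volume("web", min_iops=2000, max_iops=4000, demand=3000),
    ]
    print(allocate_iops(tenants, capacity=20000))
```

In this sketch the "noisy" tenant is held to its ceiling no matter how much it asks for, and the database keeps its floor even when the array has little spare capacity, which is the behavior the minimum-plus-maximum argument calls for.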

Action item: The absence of IOPS QOS management was a major impediment to running Tier-1 apps in the cloud. Because the technology is new, ViaWest performed extensive testing on SolidFire. The early results and feedback from this demanding customer, especially given SolidFire's embedded QOS management capabilities, argue for serious consideration from anyone running a shared infrastructure, whether public or private cloud.

CIOs: Ensure Success By Providing Scale and Hitting Performance Targets

At the December 18, 2012 Wikibon Peer Incite Research Meeting we heard how ViaWest is enabling tier 1 applications to move to the cloud. For CIOs, there is an ever-growing number of options for how to best handle mission-critical tier 1 applications, even when scale is huge.

CIOs have seen a lot of change as technology environments have matured, from workloads running on one-to-one physical servers to highly virtualized environments running a good chunk of a company’s workloads. Now CIOs are beginning to consider cloud services for a couple of reasons:

- Reduced CAPEX: As CIOs buy services from a cloud provider, those services hit the OPEX budget rather than the organization’s CAPEX lines, meaning that the services are expensed immediately rather than having to undergo a depreciation schedule over multiple years. This can be desirable when it comes to tax treatment.

- Scale on demand: Some large enterprise CIOs have avoided virtualizing mission-critical tier 1 apps due to scalability issues. A true Cloud service provider can offer solutions that scale to fit existing needs and that provide for bursting to much greater capacity as situations demand or as workloads are planned to grow. For example, a tax software provider may want to procure much more scale during tax season. Likewise, another organization may see an unexpected surge in workload needs due to some event taking place that drives business.

With little to no capital outlay, CIOs can create on-demand environments that scale to meet just about any demand.

But not all Cloud services are equal. ViaWest has competitors, and those competitors include Amazon. Because Amazon is the big player in the space, comparisons to its services are often made. This brings up some additional decision points for CIOs looking at cloud as a solution:

- Partnerships: According to Matt Wallace, Director of Cloud Architecture at ViaWest, customers coming to ViaWest are getting a complete, supported solution along with managed services and excellent support. According to Wallace, customers considering Amazon that are in need of deployment assistance will need to look for third-party integration partners to help with the project. This can add complexity and cost for CIOs who may prefer a simpler, single-party solution.

- Infrastructure: Obviously, not all cloud providers are created equal, and CIOs who intend to place their trust in a provider must do due diligence to ensure that selected providers can adequately meet the workload needs of the organization.

In addition, Wallace provides invaluable advice regarding storage and the issue of “noisy neighbors.” In multi-tenant environments, the storage IO workload of one tenant can negatively impact the scale and production of other tenants, resulting in imperfect working conditions and an inability to set expectations around workload requirements. For mission-critical applications, this is a non-starter.

To combat this issue, ViaWest has solutions that can support network-stored virtualized database servers with guaranteed performance levels, even in multi-tenant environments.

Major metrics for ViaWest include write latency and IOPS. Latency metrics include the actual process of writing data to multiple SAN arrays as well as the latency introduced due to the need to communicate across the network. ViaWest indicates that it tops out at about 15,000 IOPS on a single network-connected volume.

But this noisy neighbor issue isn’t just a Cloud service provider problem. Even large data centers might suffer from noisy neighbor issues between applications. These issues can result in similarly negative outcomes and are often the result of mixed IO patterns that make it difficult to prioritize workloads or of insufficient network connectivity between hosts and storage resources.

Action item: CIOs with significant throughput and latency needs must ensure that their storage platforms can scale both up and out so that all possible scenarios can be covered. ViaWest has chosen SolidFire as its storage platform and is able to deploy it in its multitenant environment and guarantee specific performance metrics. CIOs can get the same benefit with the right storage solution to ensure that they are able to meet the ongoing demands of the business.

Architecting for Tier-1 Apps

Tier-1 applications require infrastructure that is tuned for specific requirements. That has traditionally led IT staffs to create isolated silos of resources for these applications. IT now can choose among several paths to eliminate the silos for specific applications. With vSphere 5, server virtualization removed the technical barriers for mission-critical applications.

On the December 18, 2012 Peer Incite, the Wikibon community spoke with ViaWest, a cloud service provider that can support the rigorous demands of tier 1 applications. Unlike most cloud services, which offer one infrastructure to a relatively homogenous customer base, ViaWest provides guaranteed quality-of-service (QoS).

ViaWest Director of Cloud Infrastructure Matt Wallace points out that the network can easily become the choke point of an architecture. His current design uses Cisco UCS, which provides a convergence of compute and network, along with all-flash SolidFire storage arrays. Matt’s POD design scales up to 1 million IOPS, which drives 32Gb/sec. Each POD is an independent failure zone that doesn’t rely on other equipment.
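The relationship between the IOPS figure and the bandwidth figure can be checked with simple arithmetic. The sketch below assumes a 4 KB average IO size, which the discussion did not state; it is chosen only because it reproduces the quoted 32Gb/sec. The function name is hypothetical.

```python
def throughput_gbps(iops, io_size_bytes):
    """Network bandwidth in gigabits per second needed to carry the IO stream."""
    bits_per_second = iops * io_size_bytes * 8
    return bits_per_second / 1e9


if __name__ == "__main__":
    # ASSUMPTION: 4,000-byte IOs; the source gives only IOPS and Gb/sec.
    print(throughput_gbps(1_000_000, 4_000))  # 32.0
    # With 4 KiB (4,096-byte) IOs the figure is slightly higher.
    print(throughput_gbps(1_000_000, 4_096))
```

Larger IO sizes would push the required bandwidth up proportionally, which is why the network can become the choke point of a flash-based design.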

ViaWest finds that its high-performance 10Gb Ethernet solution is a competitive advantage compared to market-leading Amazon Web Services (AWS). The AWS customer network is 1Gb and customers cannot specify a high-performance option. Matt sees a continued large opportunity to expand cloud services to 40Gb and 100Gb Ethernet options. As discussed in Wikibon’s Converged Infrastructure Moves from Infant to Adolescent, flash offerings are still working their way into different aspects of the stack, and none currently offer the QoS capability found with SolidFire.

Action item: By their very definition, Tier-1 applications are critical to the operations of a business, so CIOs must be careful as to how they are deployed and supported. Companies can now start moving away from custom deployments of infrastructure and move Tier-1 deployments into server virtualization solutions or to cloud services such as those offered by ViaWest.

Role of Flash Arrays in Overcoming Customer Resistance to Moving Tier One Apps to the Cloud

For enterprises that are heavily reliant on the Web to deliver their products and services, speed and reliability are key to customer satisfaction and retention. Application response times are generally measured in milliseconds or even microseconds. For larger enterprises, creating and maintaining an infrastructure to support a variety of lower latency Web-based applications may be cost prohibitive. For small enterprises, so called Infrastructure-as-a-Service (IaaS) solutions make their businesses possible.

However, as many disgruntled Netflix customers found out on Christmas day, being supported by a big and well-known cloud service provider (CSP), such as Amazon AWS, does not guarantee quality of service – or any service at all. While the details of the outage may be unclear, the event amplifies doubts about CSPs and, at the same time, offers opportunities for smaller more innovative service providers to emerge.

At the December 18, 2012, Wikibon Peer Incite Research Meeting, we learned how ViaWest has differentiated itself in the CSP marketplace. ViaWest has architected and built its own “converged infrastructure” leveraging best-of-breed components including VMware-based vCloud, Cisco UCS servers, 10 gigabit Ethernet switches and several storage solutions including SolidFire high performance SSD which includes a guaranteed quality of service (QoS) for cloud service providers.

According to Matt Wallace, Director of Cloud Architecture for ViaWest, in order to meet and exceed customer expectations for tier 1 application performance, speed and consistency are critical. "QoS at the storage level enables multi-tenancy and lower cost than dedicated servers and traditional hard disk (HDD) storage," he says. "HDD arrays don’t support QoS consistency as Web traffic can be very 'bursty' or unpredictable and IO-intensive." ViaWest offers additional “configurable” services including complex hosting, co-location, and managed services.

CSPs, such as Amazon AWS, are typically less flexible in how an application is supported or hosted and are less able to handle bursty, sporadic, random IO traffic – unless the customer is willing to pay an additional premium for more service. As pointed out by Wikibon’s Dave Vellante in his related note, "Eleven Questions to Consider Before Moving Tier One Apps to the Cloud", extensibility, flexibility, network performance, reliability and uptime, security concerns, compliance practices, data governance, response time and latency management are all key considerations for organizations contemplating moving their applications to a CSP. Many CSPs are unable or unwilling to supply an SLA to adequately cover all of these requirements.

Flash and QoS

In contrast to a mechanical HDD, which has moving parts, a semiconductor-based solid state drive (SSD), such as a NAND flash SSD, is much better suited as a storage platform for computation as well as IO. QoS guarantees can eliminate objections from customers when considering Tier 1 apps in multi-tenant environments including Cloud-based applications.

A typical ViaWest SLA includes a QoS guarantee of 1TB of storage and 10k IOPS (IOs per second). ViaWest believes setting a performance expectation upfront by guaranteeing dedicated storage resources gives it a competitive edge. QoS means guaranteed performance levels, not over-delivering. If Tier 1 apps are going to move to the Cloud, response time variability is potentially problematic, and meaningful QoS guarantees, therefore, essential.

Wallace cites SolidFire as the only all-flash array vendor that he evaluated willing to provide a QoS capability. Other vendors Wallace spoke with told him, “Our arrays are so fast you don’t have to worry about QoS.” That answer wasn’t satisfactory for Wallace.

Action item: CSPs will need to avoid creating bottlenecks in their infrastructure if they expect to attract and keep tier 1 application clients. While bottlenecks can occur throughout the infrastructure, including in the network fabric and controllers, SSD and flash storage arrays have proven their utility with IO-intensive apps such as Web-based applications and database queries. Therefore, deploying flash will help meet SLAs and eliminate customer objections.

CSPs should also avoid performance degradation and, at the outset of customer relationships, meet performance requirements. This should be accomplished without over-provisioning each customer as, without limiters, applications may execute performance land-grabs.

Flash vendors wanting to establish a footprint in the CSP space will need to add more QoS capabilities to meet performance consistency requirements for multi-tenanted environments and to compete in an evolving marketplace.

Eleven Questions to Consider Before Moving Tier One Apps to the Cloud

At the December 18, 2012 Wikibon Peer Incite Research Meeting we heard how ViaWest is enabling tier 1 applications to move to the Cloud. This is good news for organizations. Just as we saw virtualization / private cloud applications move from predominantly test and dev up the food chain into tier 1 apps, it appears that infrastructure-as-a-service providers are beginning to step up to the plate and enable similar capabilities, built on all flash arrays (for example) and pinning quality of service to app delivery.

For CIOs this brings the issue of data governance front-and-center. Specifically, who is responsible if something goes wrong? Security, privacy, data protection issues...all the bugaboos of cloud, now become front and center items for CIOs and their staffs. In the experience of Wikibon practitioners knowledgeable in these matters, cloud service providers take little to no responsibility for data governance. Instead, the governance risk falls squarely on the shoulders of the customer. A thorough read of the SLAs of any cloud service provider makes this pretty clear.

That said, SLAs and policies of CSPs vary widely and can have major impacts on how data governance processes will be addressed in the cloud and what risks customers will need to absorb. In particular, the degree to which the CSP collaborates and shares risk with customers on such issues is fundamental to understanding data liabilities.

Amazon AWS, for example, for all its benefits, is the poster child for data governance red flags to CIOs. Its SLAs are tuned for self-service and scale, not for customization and aligning with the edicts of most mid- to large-sized organizations. To the extent that tier 1 apps (often running on block-based storage) will reside in the cloud, customers should understand the risks associated with data governance and the degree to which CSPs will support organizational goals.

The following eleven questions should be considered when moving any apps, but especially tier 1 apps, to the cloud:

- Can the CSP really support enterprise apps beyond test and dev?

- How flexible is the CSP with respect to the terms and conditions of SLAs – e.g. will the CSP alter the terms to meet my organizational needs?

- How robust is the network and how will latency impact the performance of my tier 1 apps?

- What happens when the CSP takes an outage? What is the penalty?

- How flexible is the CSP with regard to security/auditing /compliance practices?

- Can my auditors go on site to inspect the facility?

- Will the CSP security team meet with my security team?

- How are security incidents defined and reported, and is there flexibility in the processes – or is it “one size fits all”?

- Where will my data be stored, and is there transparency regarding physical location of data?

- What type of access do I have to the CSP's professionals, what does fast response time cost, and what’s the CSP's track record with regard to support?

- Complexity - What changes do I have to make to my applications to make them run properly in the cloud? For example, are there nuances around latency management, location management, and SLA management?

Action item: Inevitably, tier 1 apps will move to the cloud over time, and there's clear evidence it's happening sooner rather than later. The benefits of cloud are well understood but exposures remain. Depending on the selection of service provider, the rewards may often not outweigh the risks. CIOs must consider the policies and posture of Cloud service providers and the impacts on data governance strategies prior to developing Cloud plans. The degree to which the CSP will collaborate with customers and the flexibility of those service providers with regard to transparency, access to human capital resources and willingness to adjust Ts and Cs, are important indicators as to how much risk the CSP is truly willing to share.

Software-led Infrastructure: Optimizing Database Deployment

Executive Summary

Wikibon is extensively researching Software-led Infrastructure and how it contributes to both lower IT costs and improved application delivery and flexibility for the business.

Using Software-led Infrastructure (SLI) principles will allow databases to be run at significantly lower cost and with improved flexibility. For example, a typical database environment using Oracle Enterprise Edition will be at least 30% more expensive to run in a traditional deployment than in a Software-Led Infrastructure environment.

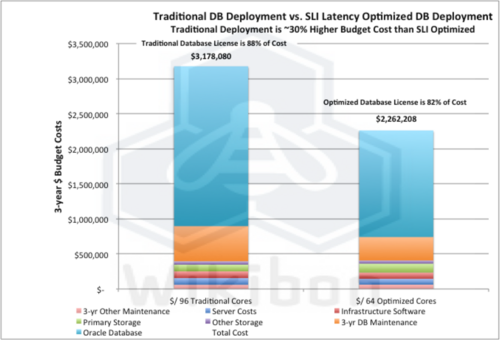

Figure 1 shows the hard cost overall savings from changing a traditional physical deployment to an SLI deployment. The database license cost is 88% of the cost in a traditional physical database deployment.

In our example, an SLI deployment would reduce the number of cores required from 96 to 64, while requiring more spending on servers, storage components, and virtualization. On net, however, the overall cost can be reduced by about 30% as a function of the more efficient use of database licenses. In the traditional case, database licensing comprises 88% of costs vs. 82% in the SLI case. In addition, the SLI deployment has the added benefits of more high availability options, higher flexibility both to deploy and update, and greater ease to automate and manage.

Setting up a Software-Led Infrastructure will also enable easier migration of suitable workloads into the cloud, which will itself have to be deployed in an effective Software-Led Infrastructure.

The bottom line: By using SLI principles, virtualizing and using more expensive servers and blazing fast storage, the number of cores can be reduced by 33%. The reduction in additional Oracle Enterprise Edition license costs pays for the full cost of the enhanced servers and storage twice over. The hardware and a backup system come for free!
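The percentages quoted above can be cross-checked with a back-of-the-envelope model. The sketch below is not Wikibon's cost model, whose detailed assumptions have not been published here. It normalizes the traditional deployment to 100 cost units and assumes that license spend scales linearly with the number of cores; the function and key names are hypothetical.

```python
def sli_cost_model(trad_license_share=0.88, sli_license_share=0.82,
                   trad_cores=96, sli_cores=64):
    """Derive the SLI cost breakdown implied by the quoted license shares."""
    trad_total = 100.0  # normalize the traditional deployment to 100 units
    trad_license = trad_total * trad_license_share
    trad_other = trad_total - trad_license  # servers, storage, and so on

    # ASSUMPTION: license spend is proportional to the number of cores.
    sli_license = trad_license * sli_cores / trad_cores

    # Licenses are quoted as 82% of SLI cost, which fixes the SLI total.
    sli_total = sli_license / sli_license_share
    sli_other = sli_total - sli_license

    return {
        "core_reduction_pct": 100 * (1 - sli_cores / trad_cores),
        "total_saving_pct": trad_total - sli_total,
        "license_saving": trad_license - sli_license,
        "trad_other": trad_other,
        "sli_other": sli_other,
    }


if __name__ == "__main__":
    for key, value in sli_cost_model().items():
        print(f"{key}: {value:.1f}")
```

Under these assumptions the total comes out roughly 28% lower, consistent with "about 30%", and the license saving is a little more than twice the SLI spend on everything other than licenses, consistent with the "twice over" claim.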

SLI Database Challenges

The most costly IT budget line item is enterprise software, and the largest component of the enterprise software budget is database software (whether from IBM, Microsoft or Oracle). Software licensing costs are high and complex, and related to the power of the processor. For example, the Oracle Database Enterprise Edition license cost is $47,500 per processor (see footnotes for a definition of processor).

While database cost savings are a compelling argument for SLI, users need to carefully consider the impact on mission-critical application performance and best practices to mitigate the challenges. Software-Led Infrastructure has five fundamental characteristics, which are listed below in order of importance to database processing. The technical and management challenges of database environments are included for the first two elements.

- Pervasive Virtualization and Encapsulation:

  - Databases are often the key component of application systems and require good performance and availability. Virtualization of databases can affect performance with the processor overhead of virtualization (around 10%).

- Distributed High Performance Persistent Storage:

  - In a virtualized system, the IO goes through the hypervisor, which gives rise to a virtualization IO “tax”, blending the IO from multiple systems and making the normal caching mechanisms of disk-based IO less effective (about 15% impact on IO performance).

  - The biggest constraint of database performance is almost always storage performance, especially where the storage is to hard disk drives (HDD), each with limited IOs per second and limited bandwidth. The result of using a disk environment where a large number of IOs go to HDD is high average IO times and very high variance. Wikibon research shows the difference between a good HDD array (average 5ms, variance 169) and a good flash-only (or a tiered storage environment with a very high percentage of flash) array (average 2ms, variance 1).

  - The sharing of virtualized system resources can lead to the “noisy neighbor” phenomenon, where a rogue job can interfere with the central application performance. A frequent example is a long-running, badly written inquiry that locks up the database, creating poor IO response times and increased variance and preventing more important production work.

  - SQL databases are sensitive to variance because of the read and write locking mechanisms. Extended locking chains lead to threads timing out, with subsequent major disruption to the end-user experience. This is especially the case with virtualized databases, and the processors and storage must be balanced for a virtualized environment.

  - Storage systems supporting virtualized databases need the ability to guarantee IO performance response time and variance, using a combination of secure multi-tenancy capabilities and upper and lower capping of IO rates (IOPS) for database environments.

- Intelligent Data Management Capabilities:

  - This supports the ability to allow management across the system as a whole. The key is ensuring that all metadata required for automation management is available from all system hardware and software components via industry standard APIs.

- Unified Metadata about System, Application, & Data:

  - This provides a longer-term SLI requirement to ensure consistent rich metadata, which allows rapid access.

- Software Defined Networking.

The negative impacts on database systems are why databases have been the last software class to be virtualized. However, this research shows that utilizing best practice will allow all databases to be virtualized, assuming that the ISVs have provided the necessary certification. Under these conditions, virtualization will reduce costs, provide alternative high-availability options, and improve business flexibility with improved time to implement and time to change.

SLI Best Practice for Database

Best practice for database infrastructure should include the following:

- Memory optimization on the servers to ensure that memory is not a constraint on system performance:

  - Optimize does not mean maximize! The number of cores should be optimized for the database environment.

  - Memory size should be optimized. Memories that are too large can negatively impact performance if the L1/L2 cache hit rates are affected.

  - In Figure 1, a 30% uplift is assumed per optimized server core to increase memory and balance the power of each core in a virtualized environment. Overall the number of cores is reduced from 96 to 64. The decrease comes from increased power and memory of the servers, better utilization of server resources from the decreased multi-programming levels and more efficient locking mechanisms, and reduced IO times (see lower latency storage below).

- Database system virtualization onto physical servers by database type (e.g., Oracle Enterprise Edition), and virtual database spreading across some or all of the physical servers (e.g., a virtual Oracle RAC instance on each physical server).

- Deployment of the lowest latency storage possible with the minimum possible “noisy neighbor” interference, while ensuring that RPO and RTO SLA objectives can be met:

  - The IO average response time should be low (<2 ms).

  - The variance of IO response time should also be very low. An example of a variance definition could be that the percentage of IOs exceeding 8ms should be significantly less than 1%, and the number of IOs that exceed 64ms should be zero. Better variance should be traded for slightly longer average response times.

  - In Figure 1, the cost of storage is assumed to be twice that of traditional storage. The storage is flash-based, with more than 99% of IOs coming from the flash tier.

  - The combination of higher server costs, low latency low variance IO performance and ability to share the load across virtualized resources will increase the efficiency of the server systems. This is key to reducing the number of cores in Figure 1 from 96 to 64 while maintaining the same database performance profile.

- Implementation of effective tools to identify the root cause for performance issues.

- Implementing requirements that all components of the system be manageable (now or in the near future) by automation software via RESTful APIs.
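The example latency targets in the list above (average under 2 ms, well under 1% of IOs above 8 ms, none above 64 ms) can be expressed as a simple check against measured response times. This is a minimal sketch: the function name, the returned keys, and the use of a plain 1% threshold in place of "significantly less than 1%" are assumptions, and the sample data is made up.

```python
from statistics import mean, pvariance


def check_io_slo(latencies_ms, max_mean_ms=2.0, slow_ms=8.0,
                 max_slow_fraction=0.01, cutoff_ms=64.0):
    """Evaluate a list of IO response times (in ms) against the targets."""
    if not latencies_ms:
        raise ValueError("no latency samples supplied")

    count = len(latencies_ms)
    average = mean(latencies_ms)
    slow_fraction = sum(1 for x in latencies_ms if x > slow_ms) / count
    over_cutoff = sum(1 for x in latencies_ms if x > cutoff_ms)

    return {
        "mean_ms": average,
        "variance": pvariance(latencies_ms),
        "slow_fraction": slow_fraction,
        "over_cutoff": over_cutoff,
        # All three conditions must hold for the sample to pass.
        "ok": (average < max_mean_ms
               and slow_fraction < max_slow_fraction
               and over_cutoff == 0),
    }


if __name__ == "__main__":
    # Hypothetical sample: mostly 0.5 ms IOs with a few 9 ms stragglers.
    sample = [0.5] * 995 + [9.0] * 5
    print(check_io_slo(sample))
```

A single IO above the 64 ms cutoff fails the check even when the average looks excellent, which reflects the point that variance matters more than a slightly better mean.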

The benefits shown in Figure 1 come about from improving the utilization of Oracle licenses. This does not directly lead to lower costs but can help avoid increases in license fees, especially during enterprise agreement negotiations. This is a complex area, and Wikibon has tackled this issue in research entitled Oracle Negotiation Myths and Understanding Virtualization Adoption in Oracle Shops. The negotiation should focus less on license savings and more on the better use and greater benefit that the organization will get from its Oracle deployments and the greater likelihood that Oracle will be used in future projects.

Also not included in Figure 1 are the very real benefits of improved flexibility and improved options for availability. Applications with lower RPO/RTO requirements than a full Oracle RAC implementation may benefit from using virtualization fail-over capabilities. Improved speed of deployment and speed to deliver change will lower IT operational costs and increase the business value delivered to the line of business.

Future Wikibon Software-led Research

Software-Led Infrastructure will continue to be a major research area for Wikibon in 2013 with a special focus on database environments. Wikibon will be publishing details of the assumptions in Figure 1 as well as case studies of successful implementations in much greater detail.

Action item: CIOs should ensure the alignment of objectives by including some level of database software license responsibility within the IT operations budget. Moving to a full Software-Led Infrastructure should be clearly set as an objective. This should include the ability to migrate suitable workloads to the cloud if financially attractive. All database software that is certified for virtualization should be run on an optimized environment that includes suitable virtualization tools and optimized servers and storage. In time this should include automated operations.

Footnotes: The term "per processor" is defined with respect to physical cores and a processor core multiplier (for common processors, licenses = 0.5 x the number of cores). For example, an 8-processor, 32-core server using Intel Xeon 56XX CPUs would require 16 processor licenses. Other Oracle database features are also charged per processor. For example, the Real Application Clusters (RAC) feature costs $23,000 per processor.
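The footnote's licensing arithmetic can be worked through as follows. This is a sketch using only the list prices and the 0.5 multiplier quoted in this research; it ignores discounts, support fees, and any other terms, and it assumes that a fractional result is rounded up to a whole license. The constant and function names are hypothetical.

```python
import math

CORE_FACTOR = 0.5        # multiplier for common processors, per the footnote
EE_LIST_PRICE = 47_500   # Enterprise Edition, per processor license
RAC_LIST_PRICE = 23_000  # Real Application Clusters, per processor license


def processor_licenses(cores, core_factor=CORE_FACTOR):
    """Number of processor licenses needed for a given physical core count."""
    # ASSUMPTION: fractional results round up to the next whole license.
    return math.ceil(cores * core_factor)


def license_cost(cores, with_rac=False):
    """List-price license cost in dollars, with or without the RAC feature."""
    per_license = EE_LIST_PRICE + (RAC_LIST_PRICE if with_rac else 0)
    return processor_licenses(cores) * per_license


if __name__ == "__main__":
    print(processor_licenses(32))           # the footnote's 32-core server
    print(license_cost(96), license_cost(64))  # before and after in Figure 1
```

At these list prices, the move from 96 to 64 cores in the Figure 1 example removes 16 processor licenses, which is where the bulk of the quoted saving originates.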